“Light field transfer: global illumination between real and synthetic objects” by Cossairt, Nayar and Ramamoorthi

Title:

- Light field transfer: global illumination between real and synthetic objects

Abstract:

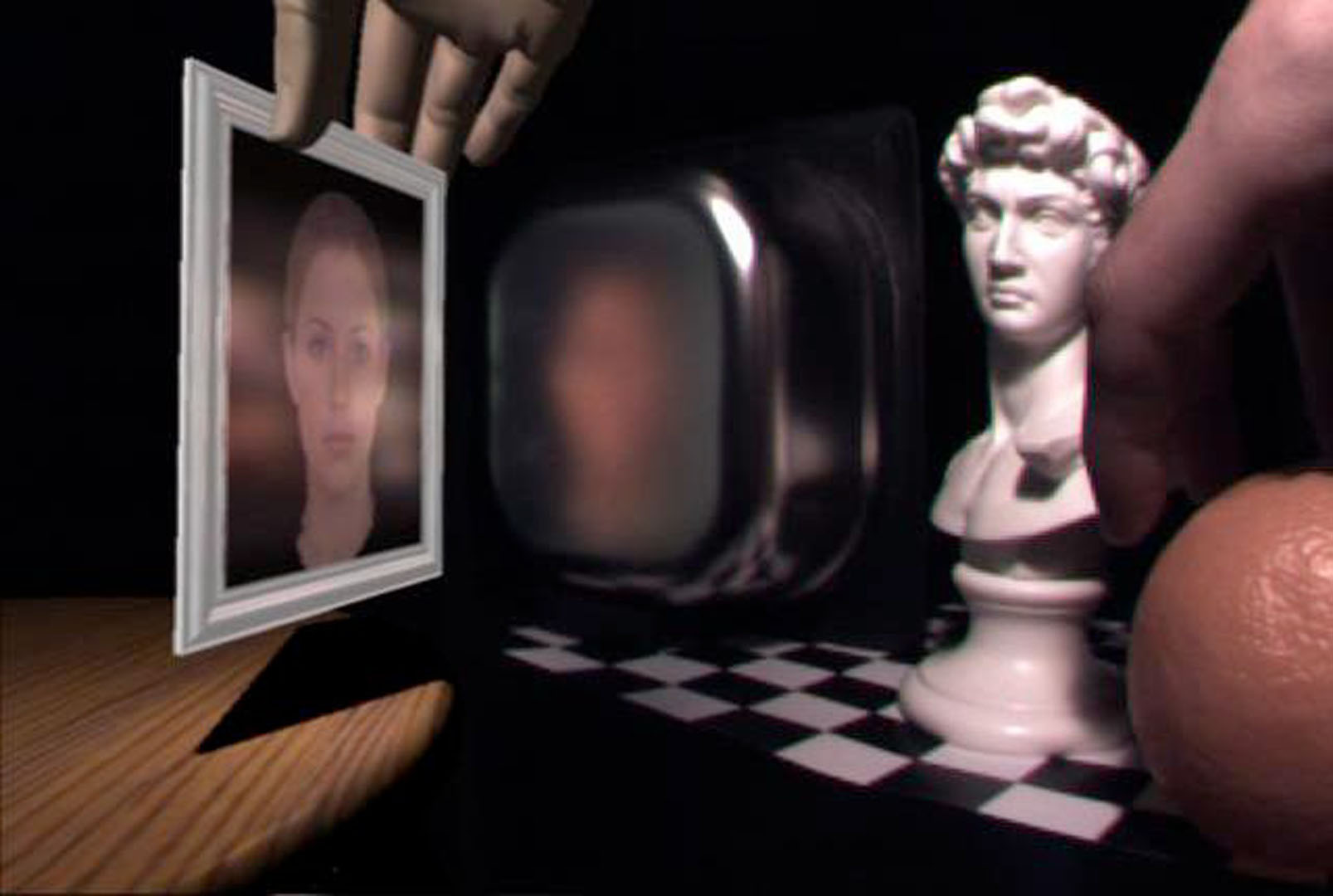

We present a novel image-based method for compositing real and synthetic objects in the same scene with a high degree of visual realism. Ours is the first technique to allow global illumination and near-field lighting effects between both real and synthetic objects at interactive rates, without needing a geometric and material model of the real scene. We achieve this by using a light field interface between real and synthetic components; thus, indirect illumination can be simulated using only two 4D light fields, one captured from and one projected onto the real scene. Multiple bounces of interreflections are obtained simply by iterating this approach. The interactivity of our technique enables its use with time-varying scenes, including dynamic objects. This is in sharp contrast to the alternative approach of using 6D or 8D light transport functions of real objects, which are very expensive in terms of acquisition and storage and hence not suitable for real-time applications. In our method, 4D radiance fields are simultaneously captured and projected by using a lens array, video camera, and digital projector. The method supports full global illumination with restricted object placement, and accommodates moderately specular materials. We implement a complete system and show several example scene compositions that demonstrate global illumination effects between dynamic real and synthetic objects. Our implementation requires a single point light source and a dark background.
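The core idea of exchanging two 4D light fields across an interface and iterating to accumulate interreflections can be illustrated with a toy fixed-point model. This is a minimal sketch under stated assumptions, not the authors' implementation. It assumes linear light transport and flattens each 4D light field into a short vector of per-ray radiances. The real scene's response, which the actual system obtains physically by projecting with a digital projector and capturing through a lens array with a video camera, is stood in for by a hypothetical matrix `R`. The synthetic renderer is reduced to a hypothetical matrix `S`. The vectors `a` and `b` are made-up direct-lighting terms from the point source, and the names `capture`, `render`, and `light_field_transfer` are illustrative only.

```python
from typing import Callable, List, Tuple

Vector = List[float]   # a 4D light field flattened into per-ray radiances
Matrix = List[List[float]]


def apply(m: Matrix, v: Vector) -> Vector:
    """Linear light transport: map an incident light field to an outgoing one."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def add(u: Vector, v: Vector) -> Vector:
    """Superpose two light fields ray by ray."""
    return [ui + vi for ui, vi in zip(u, v)]


def light_field_transfer(
    capture: Callable[[Vector], Vector],
    render: Callable[[Vector], Vector],
    n_rays: int,
    iterations: int,
) -> Tuple[Vector, Vector]:
    """Alternate between the real and synthetic sides of the interface.

    capture(p): light field leaving the real scene when p is projected onto it
                (in hardware: projector, lens array, and camera).
    render(c):  light field the synthetic objects send back toward the real
                scene when c arrives from it (in software: a renderer).
    Each iteration carries light once more around the real -> synthetic -> real
    loop, adding one more round of interreflection.
    """
    projected = [0.0] * n_rays          # nothing projected yet
    captured = capture(projected)       # real scene lit by the point source only
    for _ in range(iterations):
        projected = render(captured)    # synthetic response, sent to the projector
        captured = capture(projected)   # real response, observed by the camera
    return projected, captured


# Hypothetical 2-ray example: R and S stand in for the real and synthetic
# transport operators; a and b are made-up direct (point-source) terms.
R: Matrix = [[0.3, 0.1], [0.0, 0.2]]
S: Matrix = [[0.2, 0.0], [0.1, 0.4]]
a: Vector = [1.0, 0.5]
b: Vector = [0.2, 0.0]


def capture(p: Vector) -> Vector:
    # Stands in for the physical project-and-capture step; R is only applied.
    return add(a, apply(R, p))


def render(c: Vector) -> Vector:
    # Stands in for rendering the synthetic objects under incoming light c.
    return add(b, apply(S, c))


for k in (0, 1, 2, 20):
    p, c = light_field_transfer(capture, render, n_rays=2, iterations=k)
    print(k, [round(x, 4) for x in p], [round(x, 4) for x in c])
```

In this toy model the loop is a fixed-point iteration of p = b + S(a + Rp), so it converges when the round-trip operator SR has spectral radius below one. Note that `R` enters only as an operator applied to a light field; this mirrors the abstract's point that the real scene's full 6D or 8D transport never has to be acquired or stored.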
