“Let there be color!: joint end-to-end learning of global and local image priors for automatic image colorization with simultaneous classification”

Session/Category Title: INTRINSIC IMAGES

Abstract:

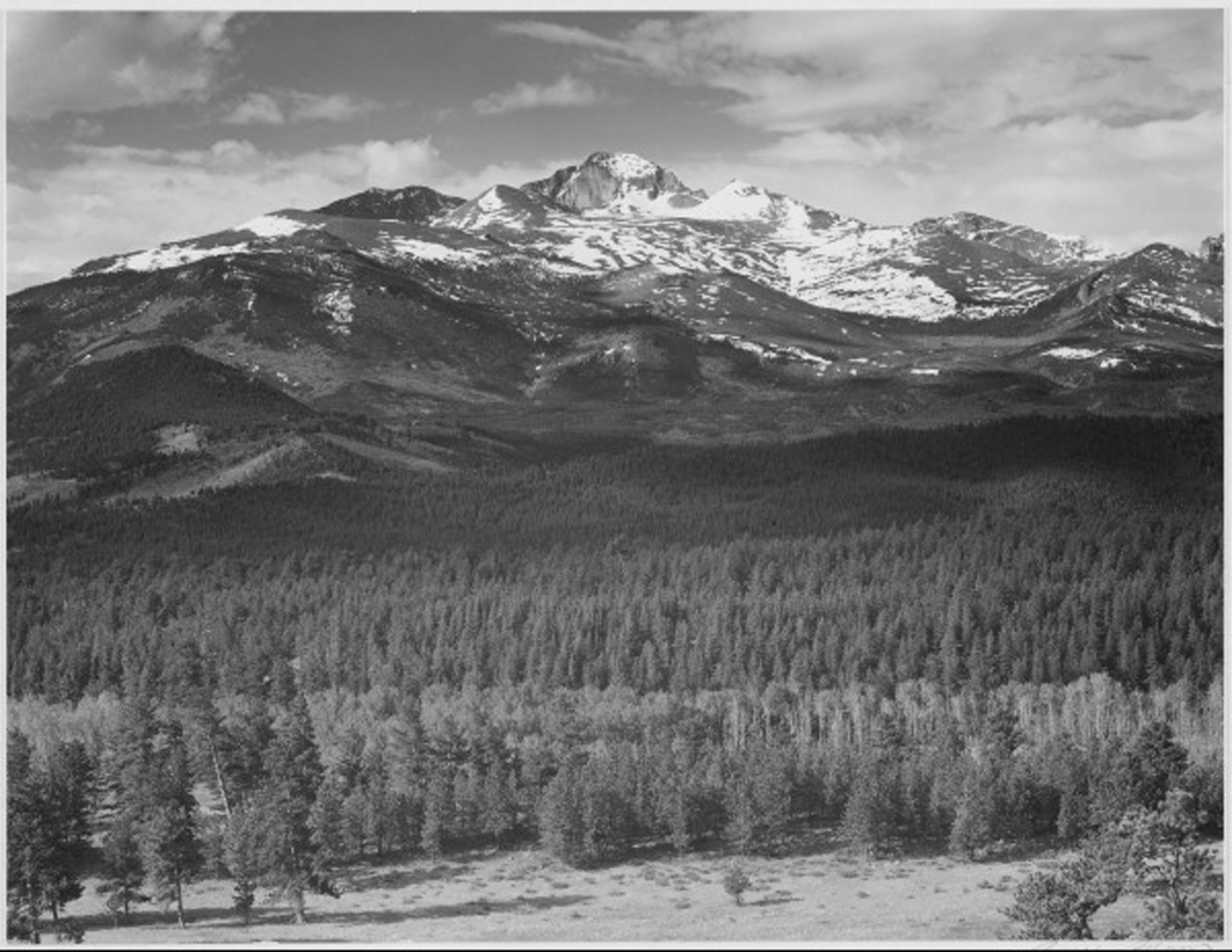

We present a novel technique to automatically colorize grayscale images that combines global priors with local image features. Our deep network, based on Convolutional Neural Networks (CNNs), features a fusion layer that merges local information, which depends on small image patches, with global priors computed from the entire image. The entire framework, including the global and local priors as well as the colorization model, is trained end-to-end. Furthermore, unlike most existing CNN-based approaches, our architecture can process images of any resolution. We train our model on an existing large-scale scene classification database, exploiting its class labels to learn the global priors more efficiently and discriminatively. We validate our approach with a user study and a comparison against the state of the art, showing significant improvements. Finally, we demonstrate our method extensively on many different types of images, including black-and-white photographs from over a hundred years ago, and show realistic colorizations.
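The abstract names two mechanisms without spelling them out: a fusion layer that merges a whole-image global prior with per-patch local features, and end-to-end training that also uses scene class labels. The sketch below illustrates one plausible way to structure each. It is a minimal illustration under stated assumptions, not the authors' published code. The names `fuse`, `joint_loss`, and `relu` are hypothetical. The assumptions are that fusion concatenates the global vector with the local feature vector at every pixel and applies one shared affine map plus a ReLU, and that the joint objective is a colorization mean squared error plus a weighted (`alpha`, hypothetical) softmax cross-entropy on the scene label.

```python
import math
from typing import List

Vector = List[float]
FeatureMap = List[List[Vector]]  # indexed [row][col][channel]


def relu(x: float) -> float:
    # Assumed nonlinearity for this sketch.
    return x if x > 0.0 else 0.0


def fuse(global_vec: Vector, local_map: FeatureMap,
         weights: List[Vector], bias: Vector) -> FeatureMap:
    """Hypothetical fusion layer: at every pixel, concatenate the global
    vector with the local feature vector, then apply the same affine map
    (weights: out_channels x (global + local channels)) followed by ReLU."""
    fused: FeatureMap = []
    for row in local_map:
        fused_row = []
        for local_vec in row:
            x = global_vec + local_vec  # list concatenation: [global; local]
            out = [relu(b + sum(w * xi for w, xi in zip(w_row, x)))
                   for w_row, b in zip(weights, bias)]
            fused_row.append(out)
        fused.append(fused_row)
    return fused  # same height and width as local_map


def joint_loss(pred_chroma: Vector, target_chroma: Vector,
               class_logits: Vector, label: int, alpha: float) -> float:
    """Hypothetical joint objective: colorization MSE plus alpha times the
    softmax cross-entropy of the scene classification output."""
    n = len(pred_chroma)
    mse = sum((p - t) ** 2 for p, t in zip(pred_chroma, target_chroma)) / n
    # Numerically stable log-sum-exp for the softmax normalizer.
    m = max(class_logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in class_logits))
    cross_entropy = log_z - class_logits[label]
    return mse + alpha * cross_entropy


if __name__ == "__main__":
    # 2x2 local map with one channel, a one-channel global vector, and
    # weights [[1, 1]], so each output is relu(global + local).
    local = [[[1.0], [-2.0]], [[0.0], [3.0]]]
    print(fuse([0.5], local, [[1.0, 1.0]], [0.0]))
    print(joint_loss([1.0, 2.0], [1.0, 0.0], [0.0, 0.0], 0, 0.5))
```

Because the same weights are applied independently at each pixel, the fused map in this sketch has whatever height and width the local map has. This is one way a design of this kind can be compatible with the abstract's claim that the architecture processes images of any resolution. Backpropagating the classification term through the global branch is what would let class labels shape the global prior during end-to-end training.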
