“Interactive region-based linear 3D face models” by Tena, Torre and Matthews

Title:

- Interactive region-based linear 3D face models

Presenter(s)/Author(s):

- Tena, Torre and Matthews

Abstract:

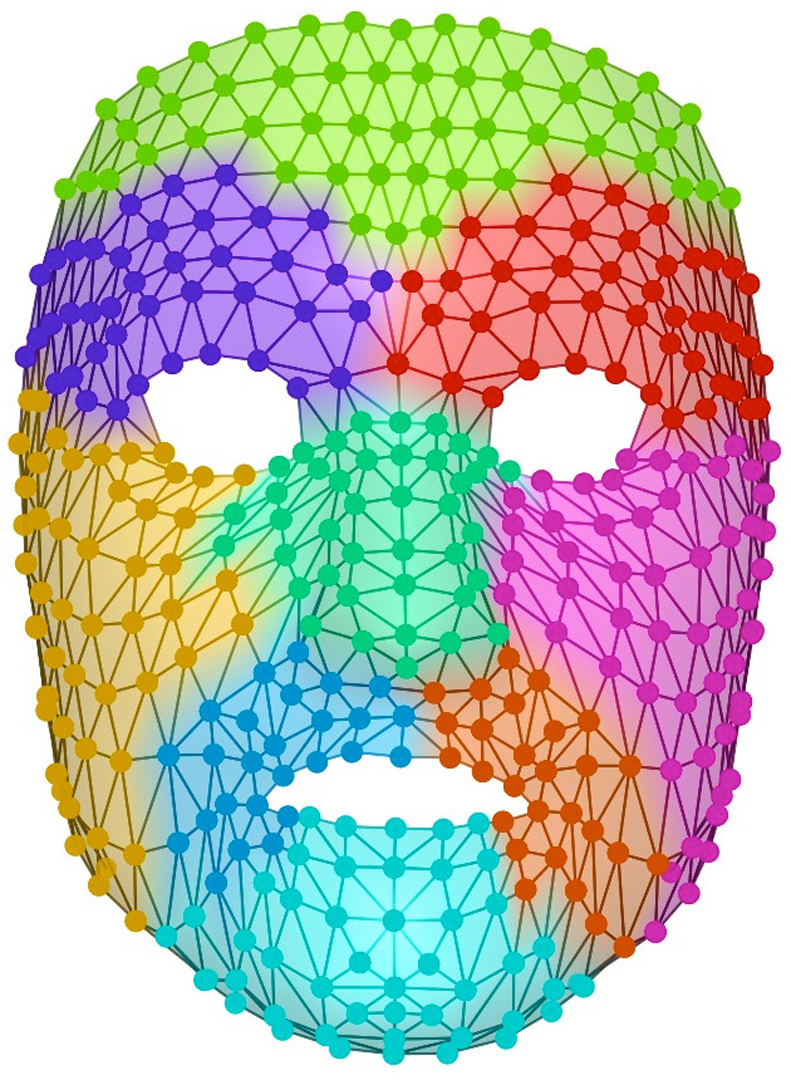

Linear models, particularly those based on principal component analysis (PCA), have been used successfully in a broad range of human face-related applications. Although PCA models achieve high compression, they have not been widely used for animation in a production environment because their bases lack a semantic interpretation. Their parameters are not an intuitive set for animators to work with. In this paper we present a linear face modelling approach that generalises to unseen data better than the traditional holistic approach while also allowing click-and-drag interaction for animation. Our model is composed of a collection of PCA sub-models that are independently trained but share boundaries. Boundary consistency and user-given constraints are enforced in a soft least mean squares sense to give flexibility to the model while maintaining coherence. Our results show that the region-based model generalises better than its holistic counterpart when describing previously unseen motion capture data from multiple subjects. The decomposition of the face into several regions, which we determine automatically from training data, gives the user localised manipulation control. This feature allows the model to be used for face posing and animation in an intuitive style.
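
The idea of independently trained PCA sub-models tied together by soft boundary and user constraints can be illustrated with a toy sketch. This is not the authors' implementation: the one-dimensional "mesh", the hand-picked region split, the weights, and the helper names `fit_pca`, `solve_regions` and `reconstruct` are all assumptions made for illustration, and the paper's automatic region segmentation is not reproduced here.

```python
import numpy as np

def fit_pca(X, k):
    """Return (mean, basis); basis columns are the top-k principal directions."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k].T

def solve_regions(models, regions, constraints,
                  w_boundary=10.0, w_user=10.0, w_reg=0.01):
    """Solve for all sub-model coefficients in one soft least-squares system.

    Each row is scaled by its weight, so squared residuals are weighted
    by the square of that weight.
    """
    ks = [B.shape[1] for _, B in models]
    offsets = np.concatenate([[0], np.cumsum(ks)])
    total = int(offsets[-1])
    rows, rhs = [], []

    def local_row(r, v):
        # Basis row and mean entry of region r for global vertex v.
        i = regions[r].index(v)
        mean, B = models[r]
        row = np.zeros(total)
        row[offsets[r]:offsets[r + 1]] = B[i]
        return row, mean[i]

    # Boundary consistency: shared vertices should agree across regions.
    for r in range(len(regions)):
        for s in range(r + 1, len(regions)):
            for v in sorted(set(regions[r]) & set(regions[s])):
                row_r, m_r = local_row(r, v)
                row_s, m_s = local_row(s, v)
                rows.append(w_boundary * (row_r - row_s))
                rhs.append(w_boundary * (m_s - m_r))

    # User constraints: a dragged vertex should reach its target value.
    for v, target in constraints.items():
        for r in range(len(regions)):
            if v in regions[r]:
                row, m = local_row(r, v)
                rows.append(w_user * row)
                rhs.append(w_user * (target - m))

    # Mild regularisation keeps coefficients close to the mean shape.
    rows.extend(w_reg * np.eye(total))
    rhs.extend(np.zeros(total))

    A = np.vstack(rows)
    b = np.asarray(rhs, dtype=float)
    c, *_ = np.linalg.lstsq(A, b, rcond=None)
    return [c[offsets[r]:offsets[r + 1]] for r in range(len(regions))]

def reconstruct(models, coeffs):
    """Rebuild each region's vertex values from its coefficients."""
    return [mean + B @ c for (mean, B), c in zip(models, coeffs)]

# Synthetic training data: 50 smooth curves sampled at 8 vertices.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 8)
train = np.stack([a * np.sin(np.pi * t) + b * t
                  for a, b in rng.normal(size=(50, 2))])

regions = [[0, 1, 2, 3, 4], [4, 5, 6, 7]]  # vertex 4 is the shared boundary
models = [fit_pca(train[:, r], k=2) for r in regions]

# "Drag" vertex 1 to the value 1.5 and solve for both regions at once.
coeffs = solve_regions(models, regions, constraints={1: 1.5})
parts = reconstruct(models, coeffs)
print("vertex 1:", parts[0][1])
print("boundary gap at vertex 4:", parts[0][-1] - parts[1][0])
```

Because both kinds of constraint are soft, the dragged vertex and the shared boundary are matched only approximately; raising `w_user` or `w_boundary` relative to `w_reg` tightens the match at the cost of flexibility.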

References:

1. Allen, B., Curless, B., and Popović, Z. 2003. The space of human body shapes: Reconstruction and parameterization from range scans. ACM Transactions on Graphics 22, 3 (July), 587–594.

2. Bergeron, P., and Lachapelle, P. 1985. Controlling facial expressions and body movements in the computer-generated animated short “Tony de Peltrie”. In Computer Graphics, Advanced Computer Animation seminar notes.

3. Black, M., and Yacoob, Y. 1995. Tracking and recognizing rigid and non-rigid facial motions using local parametric models of image motion. In Proceedings of the Fifth International Conference on Computer Vision, 374–381.

4. Blanz, V., and Vetter, T. 1999. A morphable model for the synthesis of 3D faces. In Proceedings of SIGGRAPH, 187–194.

5. Buck, I., Finkelstein, A., Jacobs, C., Klein, A., Salesin, D. H., Seims, J., Szeliski, R., and Toyama, K. 2000. Performance-driven hand-drawn animation. In Proceedings of the 1st International Symposium on Non-photorealistic Animation and Rendering, 101–108.

6. Cootes, T. F., Edwards, G. J., and Taylor, C. J. 1998. Active appearance models. In Proceedings of the 5th European Conference on Computer Vision, 484–498.

7. DeCarlo, D., and Metaxas, D. 2000. Optical flow constraints on deformable models with applications to face tracking. International Journal of Computer Vision 38, 2 (July), 99–127.

8. Dryden, I. L., and Mardia, K. V. 2002. Statistical Shape Analysis. John Wiley & Sons.

9. Edwards, G. J., Cootes, T. F., and Taylor, C. J. 1998. Face recognition using active appearance models. In Proceedings of the 5th European Conference on Computer Vision, 581–595.

10. Ekman, P., and Friesen, W. V. 1978. Facial Action Coding System: A Technique for the Measurement of Facial Movement. Consulting Psychologists Press, Palo Alto, CA.

11. Feng, W.-W., Kim, B.-U., and Yu, Y. 2008. Real-time data driven deformation using kernel canonical correlation analysis. ACM Transactions on Graphics 27 (August), 91:1–91:9.

12. Joshi, P., Tien, W. C., Desbrun, M., and Pighin, F. 2003. Learning controls for blend shape based realistic facial animation. In Proceedings of ACM SIGGRAPH/Eurographics symposium on Computer Animation, 187–192.

13. Lau, M., Chai, J., Xu, Y.-Q., and Shum, H.-Y. 2009. Face poser: Interactive modeling of 3D facial expressions using facial priors. ACM Transactions on Graphics 29, 1 (Dec.), 3:1–3:17.

14. Lawrence, N. D. 2007. Learning for larger datasets with the gaussian process latent variable model. In International Workshop on Artificial Intelligence and Statistics.

15. Lewis, J. P., and Anjyo, K. 2010. Direct manipulation blend-shapes. Computer Graphics and Applications, IEEE 30, 4 (July), 42–50.

16. Matthews, I., and Baker, S. 2004. Active appearance models revisited. International Journal of Computer Vision 60 (November), 135–164.

17. Meyer, M., and Anderson, J. 2007. Key point subspace acceleration and soft caching. ACM Transactions on Graphics 26, 3 (July), 74:1–74:8.

18. Ng, A. Y., Jordan, M. I., and Weiss, Y. 2001. On spectral clustering: Analysis and an algorithm. In Advances in Neural Information Processing Systems, MIT Press, 849–856.

19. Nishino, K., Nayar, S. K., and Jebara, T. 2005. Clustered blockwise PCA for representing visual data. IEEE Transactions on Pattern Analysis Machine Intelligence 27 (October), 1675–1679.

20. Noh, J.-Y., Fidaleo, D., and Neumann, U. 2000. Animated deformations with radial basis functions. In Proceedings of the ACM symposium on Virtual reality software and technology, 166–174.

21. Pentland, A., Moghaddam, B., and Starner, T. 1994. View-based and modular eigenspaces for face recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, 84–91.

22. Peyras, J., Bartoli, A., Mercier, H., and Dalle, P. 2007. Segmented AAMs improve person-independent face fitting. In British Machine Vision Conference.

23. Pighin, F., Hecker, J., Lischinski, D., Szeliski, R., and Salesin, D. H. 1998. Synthesizing realistic facial expressions from photographs. In Proceedings of SIGGRAPH, 75–84.

24. Sorkine, O., Cohen-Or, D., Lipman, Y., Alexa, M., Rössl, C., and Seidel, H.-P. 2004. Laplacian surface editing. In Proceedings of the 2004 Eurographics/ACM SIGGRAPH symposium on Geometry processing, 175–184.

25. Tena, J. R., Hamouz, M., Hilton, A., and Illingworth, J. 2006. A validated method for dense non-rigid 3D face registration. In Proceedings of the IEEE International Conference on Video and Signal Based Surveillance.

26. Tenenbaum, J. B., de Silva, V., and Langford, J. C. 2000. A global geometric framework for nonlinear dimensionality reduction. Science 290, 5500, 2319–2323.

27. Turk, M., and Pentland, A. 1991. Eigenfaces for recognition. Journal of Cognitive Neuroscience 3 (January), 71–86.

28. Vlasic, D., Brand, M., Pfister, H., and Popović, J. 2005. Face transfer with multilinear models. ACM Transactions on Graphics 24, 3 (Aug.), 426–433.

29. Zhang, L., Snavely, N., Curless, B., and Seitz, S. M. 2004. Spacetime faces: High resolution capture for modeling and animation. ACM Transactions on Graphics 23, 3 (Aug.), 548–558.

30. Zhang, Q., Liu, Z., Guo, B., Terzopoulos, D., and Shum, H.-Y. 2006. Geometry-driven photorealistic facial expression synthesis. IEEE Transactions on Visualization and Computer Graphics 12 (January), 48–60.