“Interactive Dance Performance Evaluation using Timing and Accuracy Similarity” by Kim and Kim

Entry Number: 67

Title:

- Interactive Dance Performance Evaluation using Timing and Accuracy Similarity

Abstract:

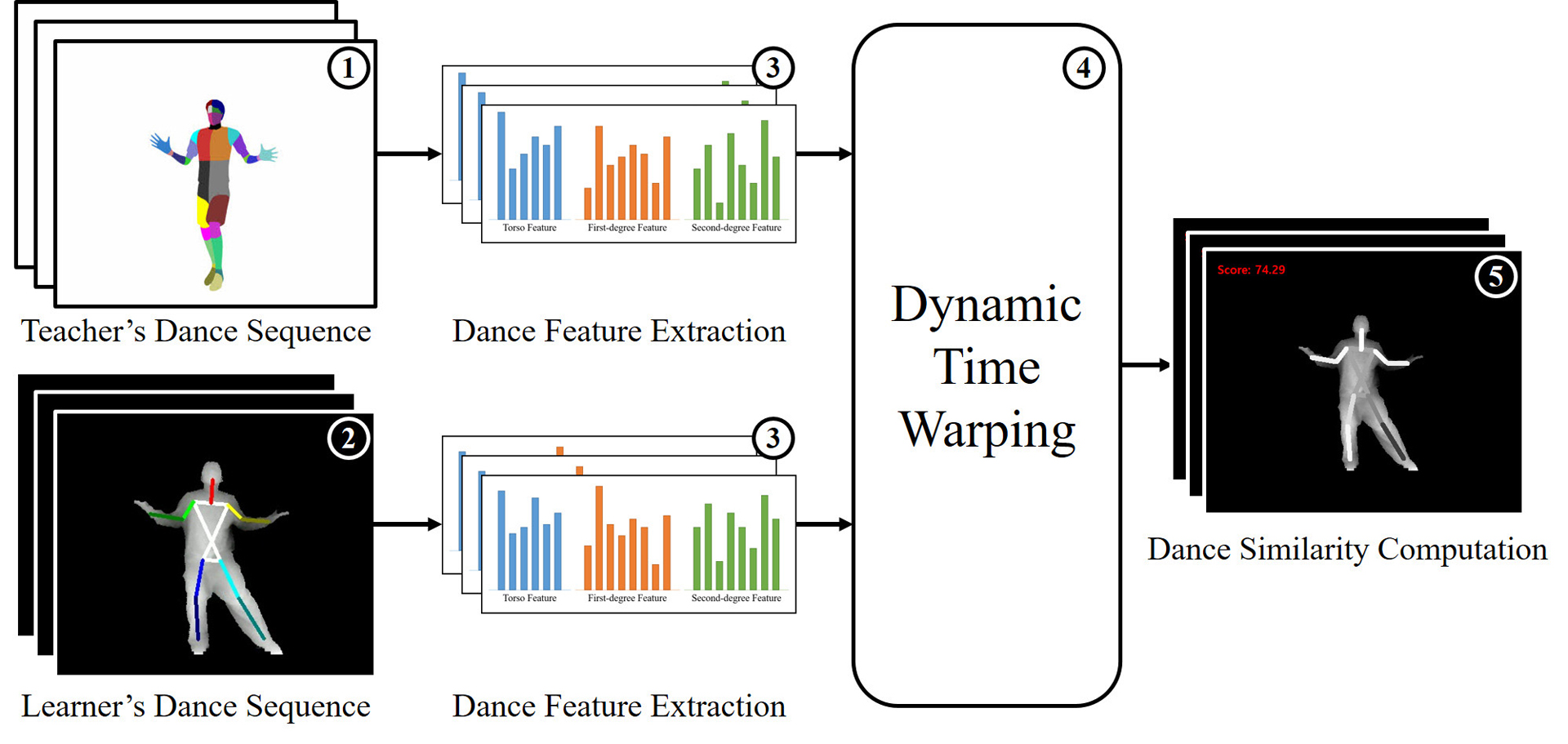

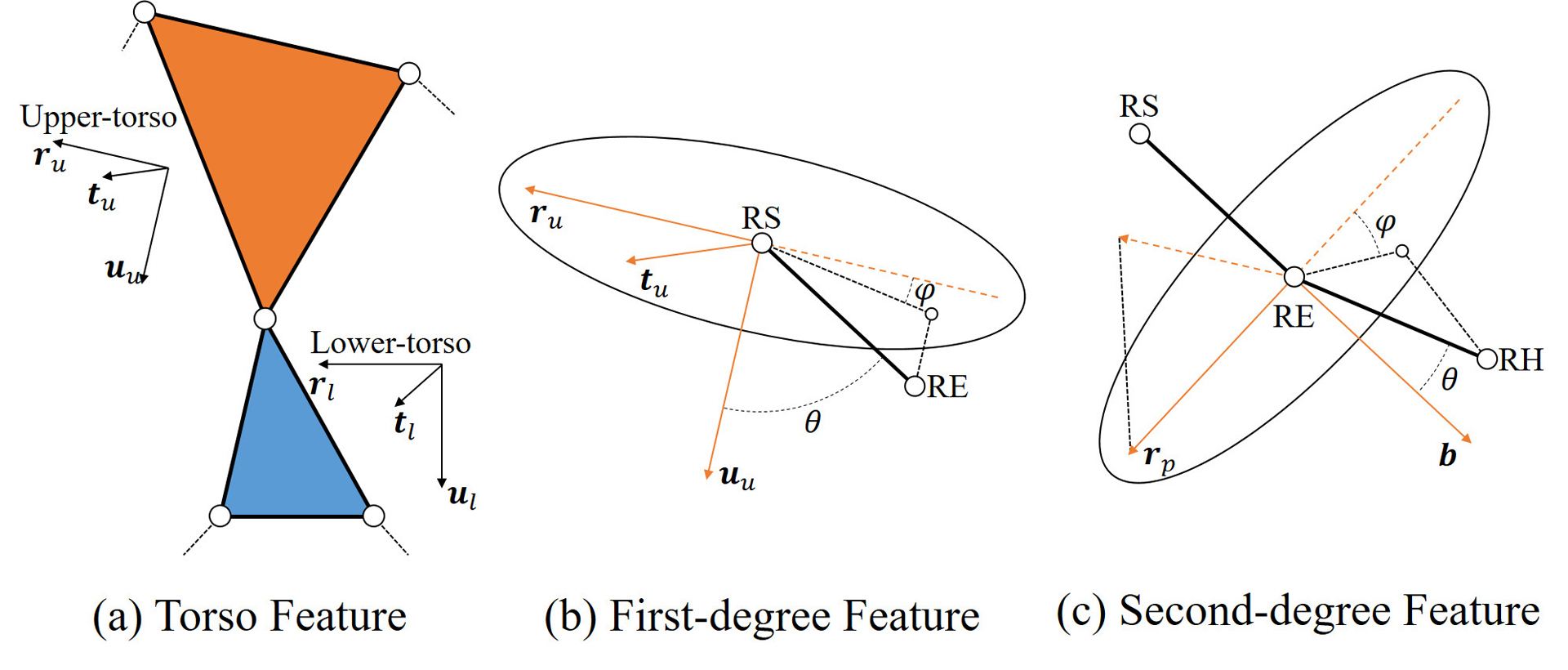

This paper presents a dance performance evaluation method that measures how well a learner mimics a teacher's dance. We first estimate the human skeletons, then extract dance features such as torso and first- and second-degree features, and finally compute a similarity score between the teacher's and the learner's dance sequences in terms of timing and pose accuracy. To validate the proposed method, we conducted several experiments on a large K-Pop dance database. The proposed method achieved 98% concordance with experts' evaluations of dance performance.
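The abstract does not give the scoring formulas, so the following is only a minimal sketch of how timing and pose accuracy scores could be computed from two feature sequences. It assumes dynamic time warping (a common alignment technique for motion sequences) as a stand-in for the paper's alignment step. The function names `dtw_path` and `evaluate`, the per-frame feature vectors, and both score definitions are hypothetical illustrations, not the authors' method.

```python
import math


def dtw_path(teacher, learner):
    """Align two sequences of feature vectors with classic dynamic time warping.

    Returns a list of (teacher_index, learner_index) pairs.
    """
    n, m = len(teacher), len(learner)
    inf = float("inf")
    # cost[i][j] = minimal accumulated distance aligning the first i teacher
    # frames with the first j learner frames.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(teacher[i - 1], learner[j - 1])
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])

    # Backtrack from the end to recover the frame-to-frame alignment.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = [
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        ]
        _, i, j = min(steps)
    path.reverse()
    return path


def evaluate(teacher, learner):
    """Return (timing, accuracy) scores; both definitions are assumptions.

    accuracy: 1 / (1 + mean feature distance along the aligned path).
    timing:   1 - mean frame offset along the path, normalised by the
              longer sequence length.
    """
    path = dtw_path(teacher, learner)
    mean_dist = sum(math.dist(teacher[i], learner[j]) for i, j in path) / len(path)
    accuracy = 1.0 / (1.0 + mean_dist)
    mean_offset = sum(abs(i - j) for i, j in path) / len(path)
    timing = 1.0 - mean_offset / max(len(teacher), len(learner))
    return timing, accuracy


# Toy one-dimensional "features": the learner performs the same poses
# but starts one frame late.
teacher_seq = [[0.0], [1.0], [2.0], [3.0]]
learner_seq = [[0.0], [0.0], [1.0], [2.0], [3.0]]
timing_score, accuracy_score = evaluate(teacher_seq, learner_seq)
```

In this toy case the poses match exactly after alignment, so only the timing score is penalised. Separating the two scores this way is one possible reading of "timing and pose accuracy"; the actual features and weighting would come from the paper itself.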

References:

- J. Huang and D. Altamar. 2016. Pose Estimation on Depth Images with Convolutional Neural Network. (2016).

- Y. Kim and D. Kim. 2015. Efficient body part tracking using ridge data and data pruning. In 15th International Conference on Humanoid Robots. IEEE, 114–120.

- F. Ofli, G. Kurillo, Š. Obdržálek, R. Bajcsy, H. Jimison, and M. Pavel. 2016. Design and evaluation of an interactive exercise coaching system for older adults: lessons learned. Journal of biomedical and health informatics 20, 1 (2016), 201–212.

- M. Raptis, D. Kirovski, and H. Hoppe. 2011. Real-time classification of dance gestures from skeleton animation. In SIGGRAPH/Eurographics symposium on computer.

- M. Reyes, G. Dominguez, and S. Escalera. 2011. Feature weighting in dynamic time warping for gesture recognition in depth data. In ICCVW. IEEE, 1182–1188.

- R. Schramm, C. Jung, and E. Miranda. 2015. Dynamic time warping for music conducting gestures evaluation. Transactions on Multimedia 17, 2 (2015), 243–255.

- J. Sung, C. Ponce, B. Selman, and A. Saxena. 2011. Human Activity Detection from RGBD Images. plan, activity, and intent recognition 64 (2011).

- L. Xia, C. Chen, and J. Aggarwal. 2012. View invariant human action recognition using histograms of 3d joints. In Computer vision and pattern recognition workshops.

- X. Yang and Y. Tian. 2014. Effective 3d action recognition using eigenjoints. Journal of Visual Communication and Image Representation 25, 1 (2014), 2–11.

Acknowledgements:

This research was partially supported by the MSIT (Ministry of Science and ICT), Korea, under either the SW Starlab support program (IITP-2017-0-00897) or the development of predictive visual intelligence technology (IITP-2014-0-00059).