“Integrating multiple depth sensors into the virtual video camera” by Ruhl, Berger, Lipski, Klose, Schroeder, et al. …

Title:

- Integrating multiple depth sensors into the virtual video camera

Abstract:

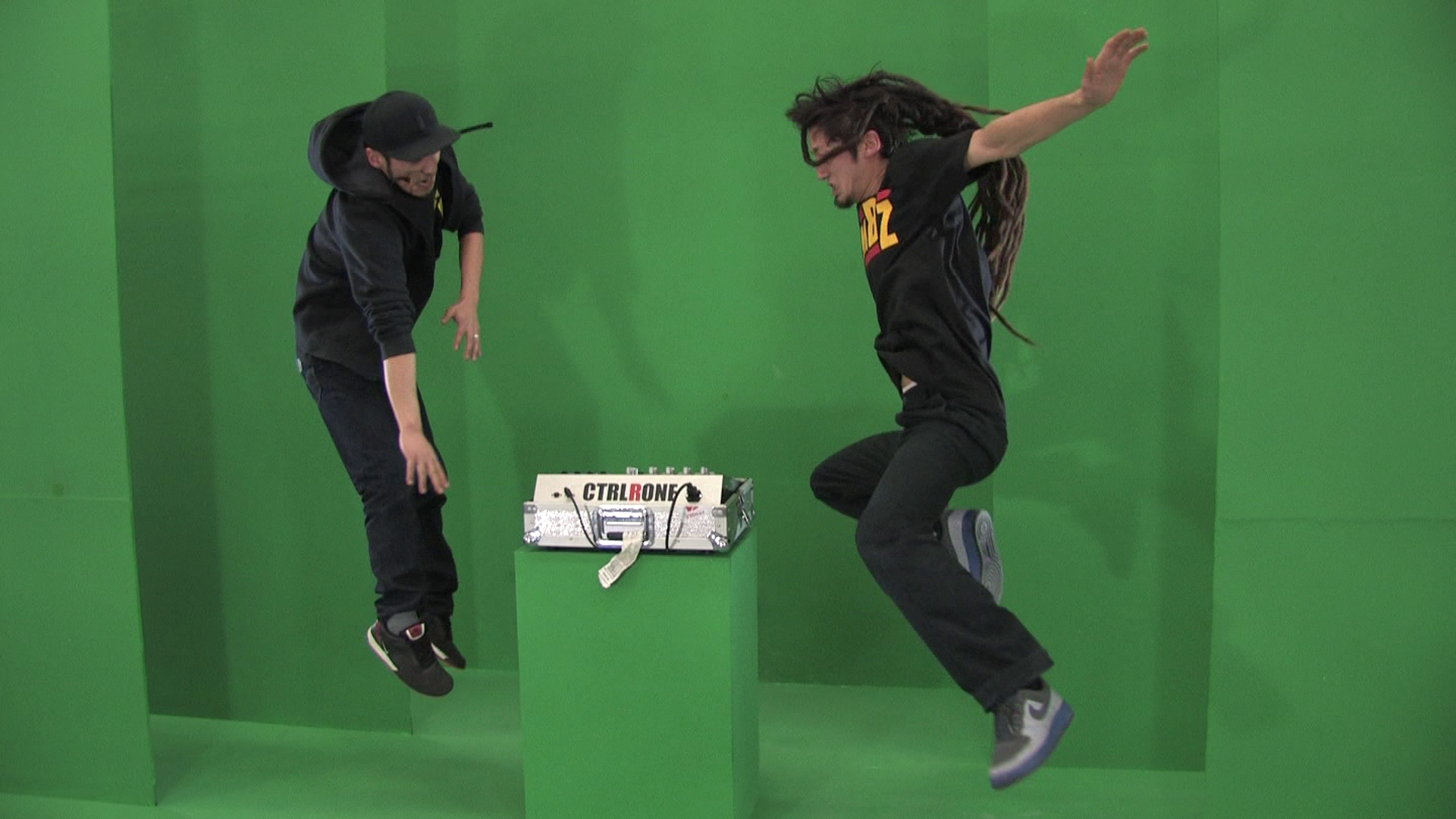

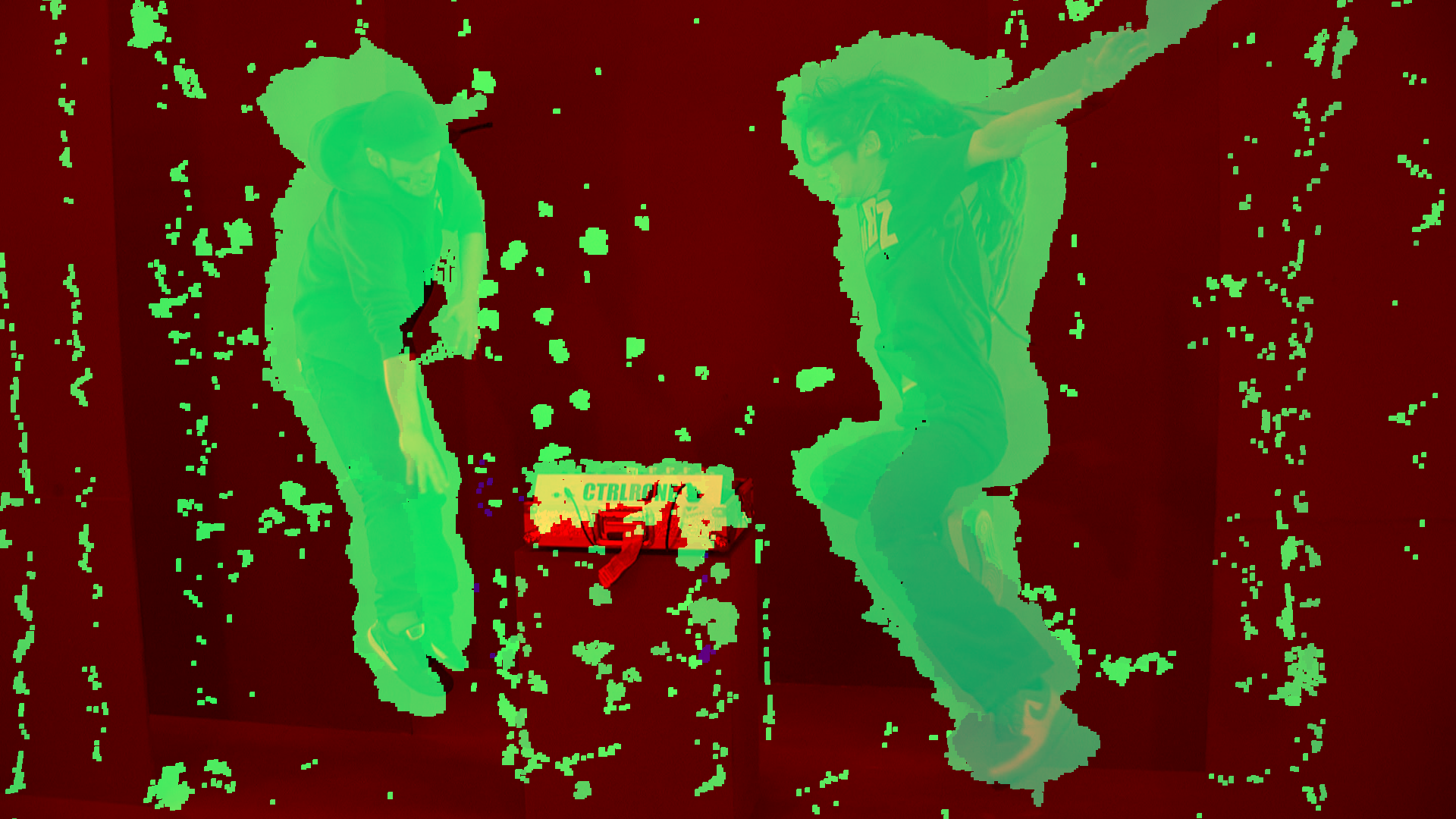

In this ongoing work, we present our efforts to incorporate depth sensors [Microsoft Corp 2010] into a multi-camera system for free-viewpoint video [Lipski et al. 2010]. Both the video cameras and the depth sensors are consumer-grade. Our free-viewpoint system, the Virtual Video Camera, uses image-based rendering with dense image correspondences to create novel views between widely spaced cameras (up to 15 degrees apart). Adding multiple depth sensors to the system lets us obtain approximate depth information for many pixels, which provides a valuable hint for estimating pixel correspondences between cameras.
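To illustrate how a depth value can act as a correspondence hint, the following is a minimal sketch using a standard pinhole camera model. It is not the method used in the Virtual Video Camera; the function names, the intrinsics tuple `(fx, fy, cx, cy)`, and the rigid transform between cameras are all assumptions made for illustration. A pixel with known depth in camera A is lifted to 3D, moved into camera B's frame, and projected to give a predicted pixel location in B.

```python
import math


def backproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with metric depth to a 3D point in the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def transform(point, rotation, translation):
    """Apply a rigid transform; rotation is a 3x3 nested list, translation a 3-vector."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project(point, fx, fy, cx, cy):
    """Project a 3D point to pixel coordinates; returns None if it lies behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def correspondence_hint(u, v, depth, intr_a, intr_b, rot_ab, trans_ab):
    """Predict where pixel (u, v) of camera A should appear in camera B.

    intr_a and intr_b are hypothetical (fx, fy, cx, cy) tuples; rot_ab and
    trans_ab map points from A's frame into B's frame (assumed calibrated).
    """
    point_a = backproject(u, v, depth, *intr_a)
    point_b = transform(point_a, rot_ab, trans_ab)
    return project(point_b, *intr_b)


def rotation_y(angle_deg):
    """Rotation matrix about the vertical (y) axis, e.g. for cameras 15 degrees apart."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


if __name__ == "__main__":
    intrinsics = (500.0, 500.0, 320.0, 240.0)  # illustrative values only
    hint = correspondence_hint(
        320.0, 240.0, 2.0, intrinsics, intrinsics, rotation_y(15.0), [0.0, 0.0, 0.0]
    )
    print(hint)
```

Because consumer depth sensors are noisy, a predicted location like this would typically serve only as a starting point or search prior for a dense correspondence estimator, not as the final match.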

References:

1. Lipski, C., Linz, C., Berger, K., Sellent, A., and Magnor, M. 2010. Virtual video camera: Image-based viewpoint navigation through space and time. Computer Graphics Forum 29, 8, 2555–2568.

2. Microsoft Corp. 2010. Kinect for Xbox 360, November. Redmond, WA.
