“GREIL-Crowds: Crowd Simulation with Deep Reinforcement Learning and Examples” by Charalambous, Pettré, Vassiliades, Chrysanthou and Pelechano

Title:

- GREIL-Crowds: Crowd Simulation with Deep Reinforcement Learning and Examples

Session/Category Title: Character Animation: Interaction

Abstract:

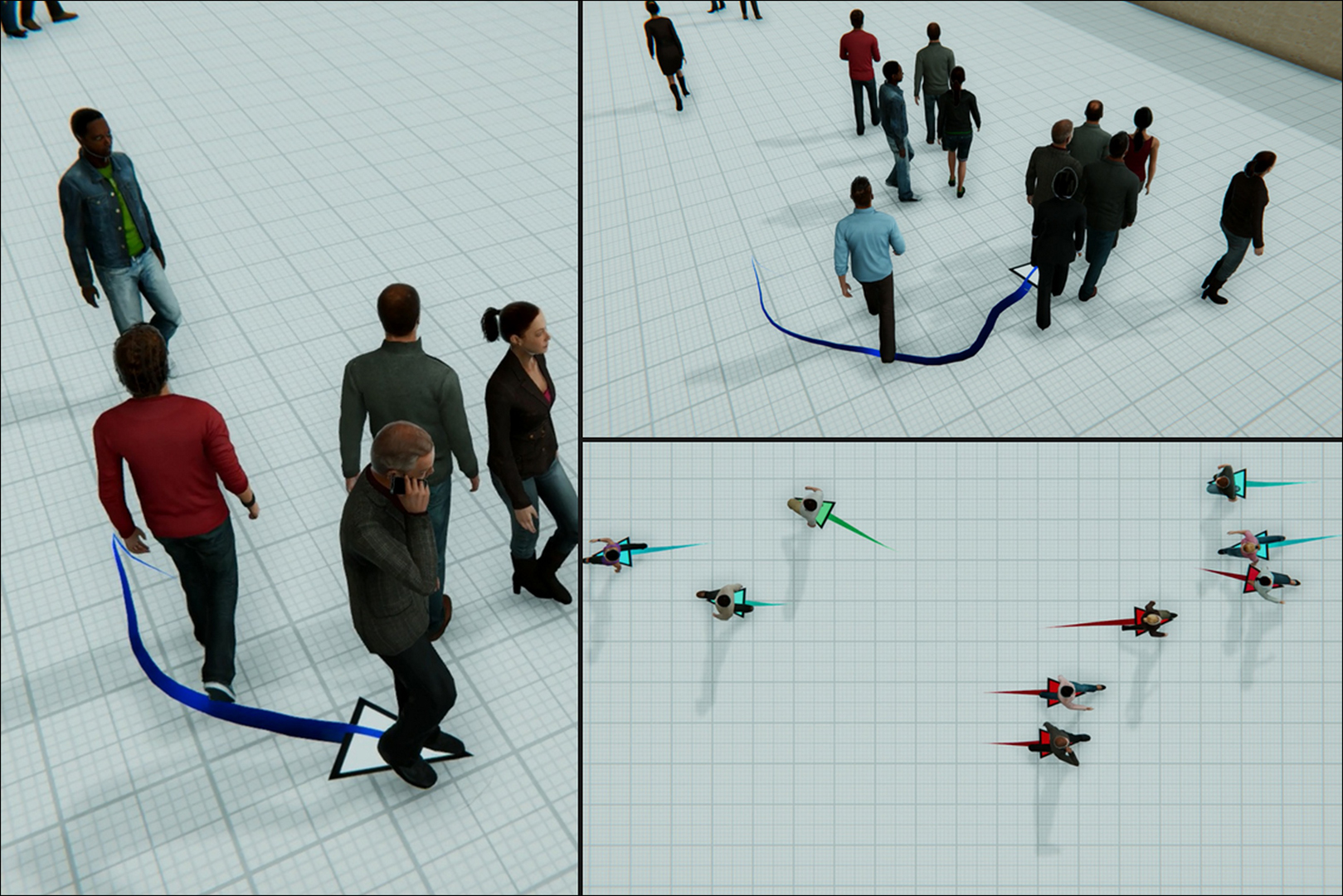

Simulating crowds with realistic behaviors is a difficult but important task for a variety of applications. Quantifying how a person balances conflicting criteria such as goal seeking, collision avoidance and moving within a group is not intuitive, especially since behaviors differ widely between people. Inspired by recent advances in Deep Reinforcement Learning, we propose Guided REinforcement Learning (GREIL) Crowds, a method that learns a model of pedestrian behavior guided by reference crowd data. The model successfully captures behaviors such as goal seeking, being part of consistent groups without the need to define explicit relationships, and wandering around seemingly without a specific purpose. Two fundamental concepts are key to achieving these results: (a) the per-agent state representation and (b) the reward function. The agent state is a temporal representation of the situation around each agent. The reward function is based on the idea that people try to move into situations (states) in which they feel comfortable. Therefore, to keep agents within a comfortable region of the state space, we first obtain a distribution of states extracted from real crowd data, and then evaluate each state by how much of an outlier it is relative to that distribution. We demonstrate that our system can capture and simulate many complex and subtle crowd interactions in varied scenarios. Additionally, the proposed method generalizes to unseen situations, generates consistent behaviors and does not suffer from the limitations of other data-driven and reinforcement learning approaches.
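The abstract defines the reward only as a measure of how much of an outlier a state is relative to states extracted from real crowd data. The sketch below shows one way such a "comfort" reward could be computed, assuming the temporal per-agent state has already been flattened into a fixed-length feature vector. The class name `ComfortReward`, the k-nearest-neighbour statistic and the rank-based normalization are illustrative assumptions (in the spirit of nearest-neighbour anomaly scores such as Zhao and Saligrama's), not necessarily the scoring used in GREIL-Crowds.

```python
import numpy as np


class ComfortReward:
    """Hypothetical outlier-based reward (illustrative, not GREIL-Crowds' code).

    States that resemble the reference crowd data score close to 1;
    states far outside it score close to 0.
    """

    def __init__(self, reference_states, k=5):
        # Assumption: each state is a fixed-length vector with comparably scaled features.
        self.ref = np.asarray(reference_states, dtype=float)  # shape (n, d)
        if self.ref.ndim != 2 or len(self.ref) <= k:
            raise ValueError("need a 2-D array with more than k reference states")
        self.k = k
        # kNN statistic of every reference state against the *other* reference
        # states (leave-one-out: the zero self-distance is masked with inf).
        diff = self.ref[:, None, :] - self.ref[None, :, :]
        pairwise = np.linalg.norm(diff, axis=-1)
        np.fill_diagonal(pairwise, np.inf)
        self.ref_scores = self._knn_stat(pairwise)  # shape (n,)

    def _knn_stat(self, dists):
        # Mean distance to the k nearest neighbours along the last axis.
        nearest = np.partition(dists, self.k - 1, axis=-1)[..., : self.k]
        return nearest.mean(axis=-1)

    def outlier_score(self, state):
        # Larger value = the state lies in a sparser region of the reference data.
        dists = np.linalg.norm(self.ref - np.asarray(state, dtype=float), axis=1)
        return float(self._knn_stat(dists))

    def __call__(self, state):
        # Rank-based normalisation: fraction of reference states whose own
        # kNN statistic is at least as large, i.e. an empirical p-value in [0, 1].
        return float(np.mean(self.ref_scores >= self.outlier_score(state)))


# Usage with synthetic stand-in data (not real crowd states).
rng = np.random.default_rng(0)
reference = rng.normal(size=(500, 4))
reward = ComfortReward(reference, k=5)
print(reward(np.zeros(4)))       # state in a dense region: high reward
print(reward(np.full(4, 10.0)))  # far outlier -> 0.0
```

Ranking a state against the reference set's own scores keeps the reward in [0, 1] and makes it invariant to a uniform rescaling of the features. The O(n²) pairwise matrix is only practical for small reference sets; a larger dataset would call for a spatial index or approximate nearest-neighbour search.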

References:

1. Pieter Abbeel and Andrew Y. Ng. 2004. Apprenticeship Learning via Inverse Reinforcement Learning. In Proceedings of the Twenty-first International Conference on Machine Learning (Banff, Alberta, Canada) (ICML ’04). ACM, New York, NY, USA, 1–.

2. Alexandre Alahi, Kratarth Goel, Vignesh Ramanathan, Alexandre Robicquet, Li Fei-Fei, and Silvio Savarese. 2016. Social LSTM: Human trajectory prediction in crowded spaces. (2016), 961–971.

3. Javad Amirian, Jean-Bernard Hayet, and Julien Pettré. 2019. Social Ways: Learning multi-modal distributions of pedestrian trajectories with GANs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops.

4. Marc G Bellemare, Will Dabney, and Rémi Munos. 2017. A distributional perspective on reinforcement learning. (2017), 449–458.

5. Nicola Bellomo and Christian Dogbe. 2011. On the modeling of traffic and crowds: A survey of models, speculations, and perspectives. SIAM Review 53, 3 (2011), 409–463.

6. Panayiotis Charalambous and Yiorgos Chrysanthou. 2014. The PAG Crowd: A Graph Based Approach for Efficient Data-Driven Crowd Simulation. Computer Graphics Forum 33, 8 (2014), 95–108.

7. Panayiotis Charalambous, Ioannis Karamouzas, Stephen J. Guy, and Yiorgos Chrysanthou. 2014. A Data-Driven Framework for Visual Crowd Analysis. Computer Graphics Forum 33, 7 (2014), 41–50.

8. N. Courty and T. Corpetti. 2007. Crowd motion capture. Computer Animation and Virtual Worlds 18, 4–5 (2007), 361–370.

9. Chelsea Finn, Sergey Levine, and Pieter Abbeel. 2016. Guided cost learning: Deep inverse optimal control via policy optimization. In International Conference on Machine Learning. 49–58.

10. Julio E Godoy, Ioannis Karamouzas, Stephen J Guy, and Maria Gini. 2015. Adaptive learning for multi-agent navigation. In Proceedings of the 2015 International Conference on Autonomous Agents and Multiagent Systems. International Foundation for Autonomous Agents and Multiagent Systems, 1577–1585.

11. Agrim Gupta, Justin Johnson, Li Fei-Fei, Silvio Savarese, and Alexandre Alahi. 2018. Social GAN: Socially acceptable trajectories with generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2255–2264.

12. Stephen J. Guy, Jur van den Berg, Wenxi Liu, Rynson Lau, Ming C. Lin, and Dinesh Manocha. 2012. A Statistical Similarity Measure for Aggregate Crowd Dynamics. ACM Trans. Graph. 31, 6, Article 190 (Nov. 2012), 11 pages.

13. Tuomas Haarnoja, Haoran Tang, Pieter Abbeel, and Sergey Levine. 2017. Reinforcement learning with deep energy-based policies. In International Conference on Machine Learning. PMLR, 1352–1361.

14. Edward T Hall. 1963. A system for the notation of proxemic behavior. American Anthropologist 65, 5 (1963), 1003–1026.

15. Dirk Helbing and Péter Molnár. 1995. Social force model for pedestrian dynamics. Phys. Rev. E 51, 5 (May 1995), 4282–4286.

16. Peter Henry, Christian Vollmer, Brian Ferris, and Dieter Fox. 2010. Learning to navigate through crowded environments. In Robotics and Automation (ICRA), 2010 IEEE International Conference on. IEEE, 981–986.

17. Matteo Hessel, Joseph Modayil, Hado van Hasselt, Tom Schaul, Georg Ostrovski, Will Dabney, Dan Horgan, Bilal Piot, Mohammad Azar, and David Silver. 2018. Rainbow: Combining improvements in deep reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 32.

18. H. Hildenbrandt, C. Carere, and CK Hemelrijk. 2010. Self-organized aerial displays of thousands of starlings: a model. Behavioral Ecology 21, 6 (2010), 1349.

19. Kaidong Hu, Brandon Haworth, Glen Berseth, Vladimir Pavlovic, Petros Faloutsos, and Mubbasir Kapadia. 2021. Heterogeneous crowd simulation using parametric reinforcement learning. IEEE Transactions on Visualization and Computer Graphics (2021).

20. Eunjung Ju, Myung Geol Choi, Minji Park, Jehee Lee, Kang Hoon Lee, and Shigeo Takahashi. 2010. Morphable Crowds. ACM Trans. Graph. 29, 6, Article 140 (Dec. 2010), 10 pages.

21. Mubbasir Kapadia, Matt Wang, Shawn Singh, Glenn Reinman, and Petros Faloutsos. 2011. Scenario space: characterizing coverage, quality, and failure of steering algorithms. In Proceedings of the 2011 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (Vancouver, British Columbia, Canada) (SCA ’11). ACM, New York, NY, USA, 53–62.

22. T. Kwon, K.H. Lee, J. Lee, and S. Takahashi. 2008. Group motion editing. In ACM Transactions on Graphics (TOG), Vol. 27. ACM, 80.

23. Yu-Chi Lai, Stephen Chenney, and ShaoHua Fan. 2005. Group motion graphs. In SCA ’05: Proceedings of the 2005 ACM SIGGRAPH/Eurographics symposium on Computer animation (Los Angeles, California). 281–290.

24. Jaedong Lee, Jungdam Won, and Jehee Lee. 2018. Crowd simulation by deep reinforcement learning. In Proceedings of the 11th Annual International Conference on Motion, Interaction, and Games. 1–7.

25. Kang Hoon Lee, Myung Geol Choi, Qyoun Hong, and Jehee Lee. 2007. Group Behavior from Video: A Data-driven Approach to Crowd Simulation. In Proceedings of the 2007 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (San Diego, California) (SCA ’07). Eurographics Association, Aire-la-Ville, Switzerland, 109–118. http://dl.acm.org/citation.cfm?id=1272690.1272706

26. Alon Lerner, Yiorgos Chrysanthou, and Dani Lischinski. 2007. Crowds by Example. Computer Graphics Forum 26, 3 (2007), 655–664.

27. Alon Lerner, Yiorgos Chrysanthou, Ariel Shamir, and Daniel Cohen-Or. 2010. Context-Dependent Crowd Evaluation. Computer Graphics Forum 29, 7 (2010), 2197–2206.

28. Sergey Levine and Vladlen Koltun. 2013. Guided policy search. In Proceedings of the 30th International Conference on Machine Learning (ICML-13). 1–9.

29. Yi Li, Marc Christie, Orianne Siret, Richard Kulpa, and Julien Pettré. 2012. Cloning Crowd Motions. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation (Lausanne, Switzerland) (SCA ’12). Eurographics Association, Goslar, Germany, 201–210. http://dl.acm.org/citation.cfm?id=2422356.2422385

30. Libin Liu and Jessica Hodgins. 2017. Learning to schedule control fragments for physics-based characters using deep Q-learning. ACM Transactions on Graphics (TOG) 36, 3 (2017), 29.

31. Pinxin Long, Tingxiang Fan, Xinyi Liao, Wenxi Liu, Hao Zhang, and Jia Pan. 2018. Towards optimally decentralized multi-robot collision avoidance via deep reinforcement learning. In 2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 6252–6259.

32. Karttikeya Mangalam, Yang An, Harshayu Girase, and Jitendra Malik. 2021. From goals, waypoints & paths to long term human trajectory forecasting. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 15233–15242.

33. Francisco Martinez-Gil, Miguel Lozano, and Fernando Fernández. 2011. Multi-Agent Reinforcement Learning for Simulating Pedestrian Navigation. In International Workshop on Adaptive and Learning Agents. 53.

34. Ronald A. Metoyer and Jessica K. Hodgins. 2003. Reactive Pedestrian Path Following from Examples. In CASA ’03: Proceedings of the 16th International Conference on Computer Animation and Social Agents (CASA 2003). IEEE Computer Society, Washington, DC, USA, 149.

35. Volodymyr Mnih, Adria Puigdomenech Badia, Mehdi Mirza, Alex Graves, Timothy Lillicrap, Tim Harley, David Silver, and Koray Kavukcuoglu. 2016. Asynchronous methods for deep reinforcement learning. In International Conference on Machine Learning. 1928–1937.

36. Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A Rusu, Joel Veness, Marc G Bellemare, Alex Graves, Martin Riedmiller, Andreas K Fidjeland, Georg Ostrovski, et al. 2015. Human-level control through deep reinforcement learning. Nature 518, 7540 (2015), 529–533.

37. Mehdi Moussaïd, Niriaska Perozo, Simon Garnier, Dirk Helbing, and Guy Theraulaz. 2010. The walking behaviour of pedestrian social groups and its impact on crowd dynamics. PLoS ONE 5, 4 (2010), e10047.

38. S. R. Musse, C. R. Jung, A. Braun, and J. J. Junior. 2006. Simulating the Motion of Virtual Agents Based on Examples. In ACM/EG Symposium on Computer Animation, Short Papers. Vienna, Austria.

39. Andreas Panayiotou, Theodoros Kyriakou, Marilena Lemonari, Yiorgos Chrysanthou, and Panayiotis Charalambous. 2022. CCP: Configurable Crowd Profiles. In ACM SIGGRAPH 2022 Conference Proceedings. 1–10.

40. Sebastien Paris, Julien Pettre, and Stephane Donikian. 2007. Pedestrian Reactive Navigation for Crowd Simulation: a Predictive Approach. Computer Graphics Forum 26, 3 (2007), 665–674.

41. Nuria Pelechano, Jan M Allbeck, Mubbasir Kapadia, and Norman I Badler. 2016. Simulating heterogeneous crowds with interactive behaviors. CRC Press.

42. Xue Bin Peng, Pieter Abbeel, Sergey Levine, and Michiel van de Panne. 2018. DeepMimic: Example-guided deep reinforcement learning of physics-based character skills. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1–14.

43. Xue Bin Peng, Glen Berseth, KangKang Yin, and Michiel van de Panne. 2017. DeepLoco: Dynamic locomotion skills using hierarchical deep reinforcement learning. ACM Transactions on Graphics (TOG) 36, 4 (2017), 41.

44. Julien Pettré, Jan Ondrej, Anne-Hélène Olivier, Armel Crétual, and Stéphane Donikian. 2009. Experiment-based Modeling, Simulation and Validation of Interactions between Virtual Walkers. In ACM SIGGRAPH/Eurographics Symposium on Computer Animation. 189–198.

45. Tim Salzmann, Boris Ivanovic, Punarjay Chakravarty, and Marco Pavone. 2020. Trajectron++: Dynamically-feasible trajectory forecasting with heterogeneous data. In European Conference on Computer Vision. Springer, 683–700.

46. Tom Schaul, John Quan, Ioannis Antonoglou, and David Silver. 2015. Prioritized experience replay. arXiv preprint arXiv:1511.05952 (2015).

47. Florian Siebel and Wolfram Mauser. 2006. On the fundamental diagram of traffic flow. SIAM J. Appl. Math. 66, 4 (2006), 1150–1162.

48. Richard S Sutton and Andrew G Barto. 2018. Reinforcement learning: An introduction. MIT Press.

49. Adrien Treuille, Yongjoon Lee, and Zoran Popović. 2007. Near-optimal Character Animation with Continuous Control. ACM Trans. Graph. 26, 3, Article 7 (July 2007).

50. B. van Basten, S. Jansen, and I. Karamouzas. 2009. Exploiting motion capture to enhance avoidance behaviour in games. Motion in Games (2009), 29–40.

51. Hado van Hasselt, Arthur Guez, and David Silver. 2016. Deep Reinforcement Learning with Double Q-Learning.

52. He Wang, Jan Ondrej, and Carol O’Sullivan. 2017. Trending Paths: A New Semantic-Level Metric for Comparing Simulated and Real Crowd Data. IEEE Transactions on Visualization and Computer Graphics 23, 5 (May 2017), 1454–1464.

53. Ziyu Wang, Tom Schaul, Matteo Hessel, Hado van Hasselt, Marc Lanctot, and Nando de Freitas. 2016. Dueling network architectures for deep reinforcement learning. In International Conference on Machine Learning. PMLR, 1995–2003.

54. Christopher JCH Watkins and Peter Dayan. 1992. Q-learning. Machine Learning 8, 3–4 (1992), 279–292.

55. D. Wolinski, S. J. Guy, A.-H. Olivier, M. Lin, D. Manocha, and J. Pettré. 2014. Parameter estimation and comparative evaluation of crowd simulations. Computer Graphics Forum 33, 2 (2014), 303–312.

56. Markus Wulfmeier, Peter Ondruska, and Ingmar Posner. 2015. Maximum entropy deep inverse reinforcement learning. arXiv preprint arXiv:1507.04888 (2015).

57. M Zhao, W Cai, and SJ Turner. 2017. CLUST: Simulating Realistic Crowd Behaviour by Mining Pattern from Crowd Videos. In Computer Graphics Forum. Wiley Online Library.

58. M. Zhao and V. Saligrama. 2009. Anomaly detection with score functions based on nearest neighbor graphs. In Advances in Neural Information Processing Systems.

59. Brian D. Ziebart, Andrew Maas, J. Andrew Bagnell, and Anind K. Dey. 2008. Maximum Entropy Inverse Reinforcement Learning. In Proc. AAAI. 1433–1438.