“Facial retargeting with automatic range of motion alignment”

Title:

- Facial retargeting with automatic range of motion alignment

Session/Category Title: Faces & Hair

Abstract:

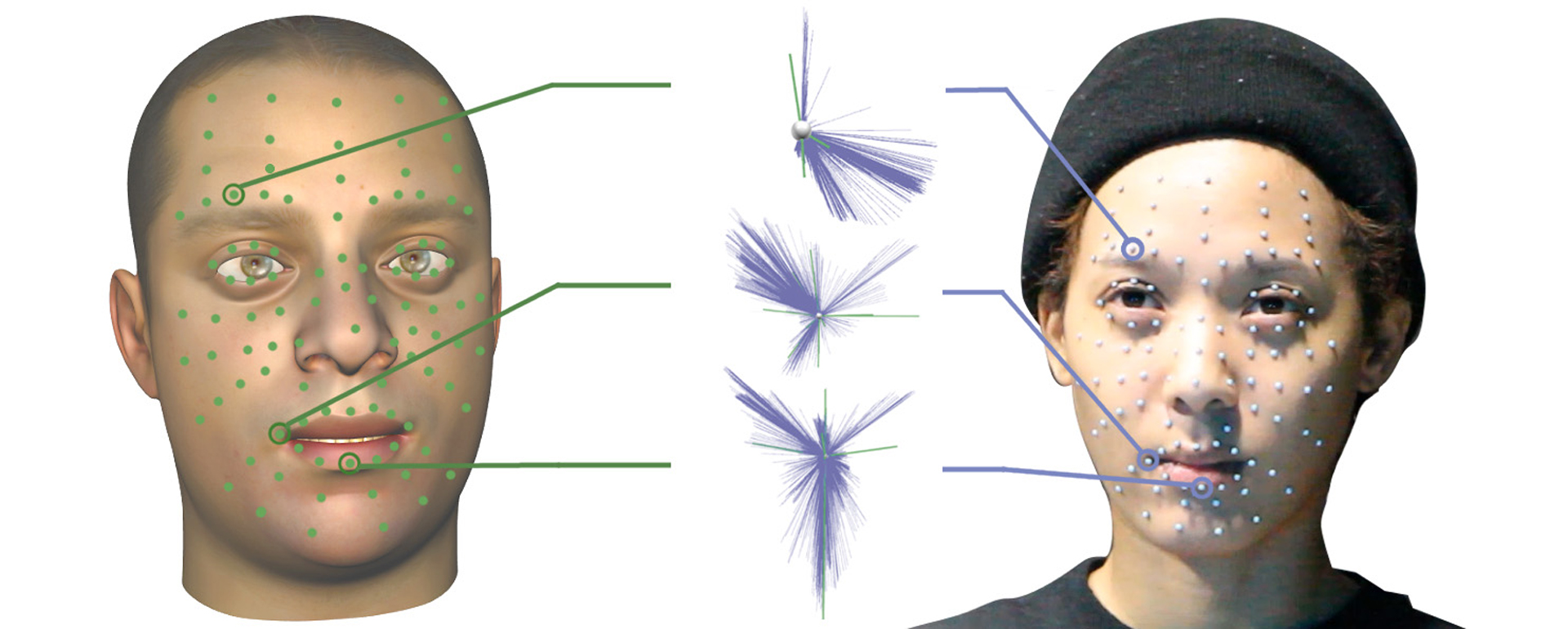

While facial capture focuses on accurately reconstructing an actor’s performance, facial animation retargeting aims to transfer that animation to another character while preserving its semantic meaning. Because blendshape animation is so widely used, this effectively means computing suitable blendshape weights for the given target character. Current methods either require manually created examples of matching expressions for the actor and the target character, or are limited to characters with similar facial proportions (i.e., realistic models). In contrast, our approach automatically retargets facial animations from a real actor to stylized characters. We formulate the problem of transferring the blendshapes of a facial rig to an actor as a special case of manifold alignment, by exploring the similarities between the motion spaces defined by the blendshapes and by an expressive training sequence of the actor. In addition, we incorporate a simple yet elegant facial prior based on discrete differential properties to guarantee smooth mesh deformation. Our method requires only sparse correspondences between characters and is thus suitable for retargeting both marker-less and marker-based motion capture, as well as for animation transfer between virtual characters.
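The abstract reduces retargeting to computing blendshape weights for the target rig from sparse correspondences. As a minimal sketch of that final solve only, assuming a linear delta-blendshape rig and that each tracked marker corresponds to one known rig vertex, the weights can be fit with bounded, ridge-regularized least squares via SciPy's `lsq_linear`. This is not the paper's manifold-alignment method or its facial prior; the function `fit_blendshape_weights`, the [0, 1] weight bounds, and the `reg` parameter are hypothetical choices made for illustration.

```python
import numpy as np
from scipy.optimize import lsq_linear


def fit_blendshape_weights(neutral, deltas, targets, marker_idx, reg=1e-3):
    """Fit blendshape weights to sparse marker positions (illustrative sketch).

    neutral:    (V, 3) neutral-pose vertex positions of the target rig
    deltas:     (K, V, 3) per-blendshape offsets from the neutral pose
    targets:    (M, 3) observed positions of M sparse markers
    marker_idx: (M,) index of the rig vertex each marker corresponds to
    reg:        hypothetical ridge weight that stabilizes redundant shapes
    """
    K = deltas.shape[0]
    # Keep only the marker vertices and stack x, y, z rows: A is (3M, K).
    A = deltas[:, marker_idx, :].reshape(K, -1).T
    # Offset of each marker from the neutral pose, flattened to (3M,).
    b = (targets - neutral[marker_idx]).reshape(-1)
    # Ridge term as extra rows: minimizes |A w - b|^2 + reg * |w|^2.
    A_aug = np.vstack([A, np.sqrt(reg) * np.eye(K)])
    b_aug = np.concatenate([b, np.zeros(K)])
    # Box constraints keep each weight in an assumed [0, 1] range.
    result = lsq_linear(A_aug, b_aug, bounds=(0.0, 1.0))
    return result.x


# Synthetic check: pose a random rig with known weights, then recover them.
rng = np.random.default_rng(0)
V, K, M = 50, 4, 10
neutral = rng.normal(size=(V, 3))
deltas = rng.normal(size=(K, V, 3))
w_true = np.array([0.2, 0.0, 0.7, 0.5])
marker_idx = rng.choice(V, size=M, replace=False)
posed = neutral + np.tensordot(w_true, deltas, axes=1)
w_est = fit_blendshape_weights(neutral, deltas, posed[marker_idx], marker_idx)
print(np.round(w_est, 3))
```

The ridge term biases the weights slightly toward zero; a small `reg` trades exact fitting for robustness when several blendshapes move the same markers in similar ways.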
