“Face transfer with multilinear models” by Vlasic, Brand, Pfister and Popović

Conference:

Type(s):

Title:

- Face transfer with multilinear models

Presenter(s)/Author(s):

Abstract:

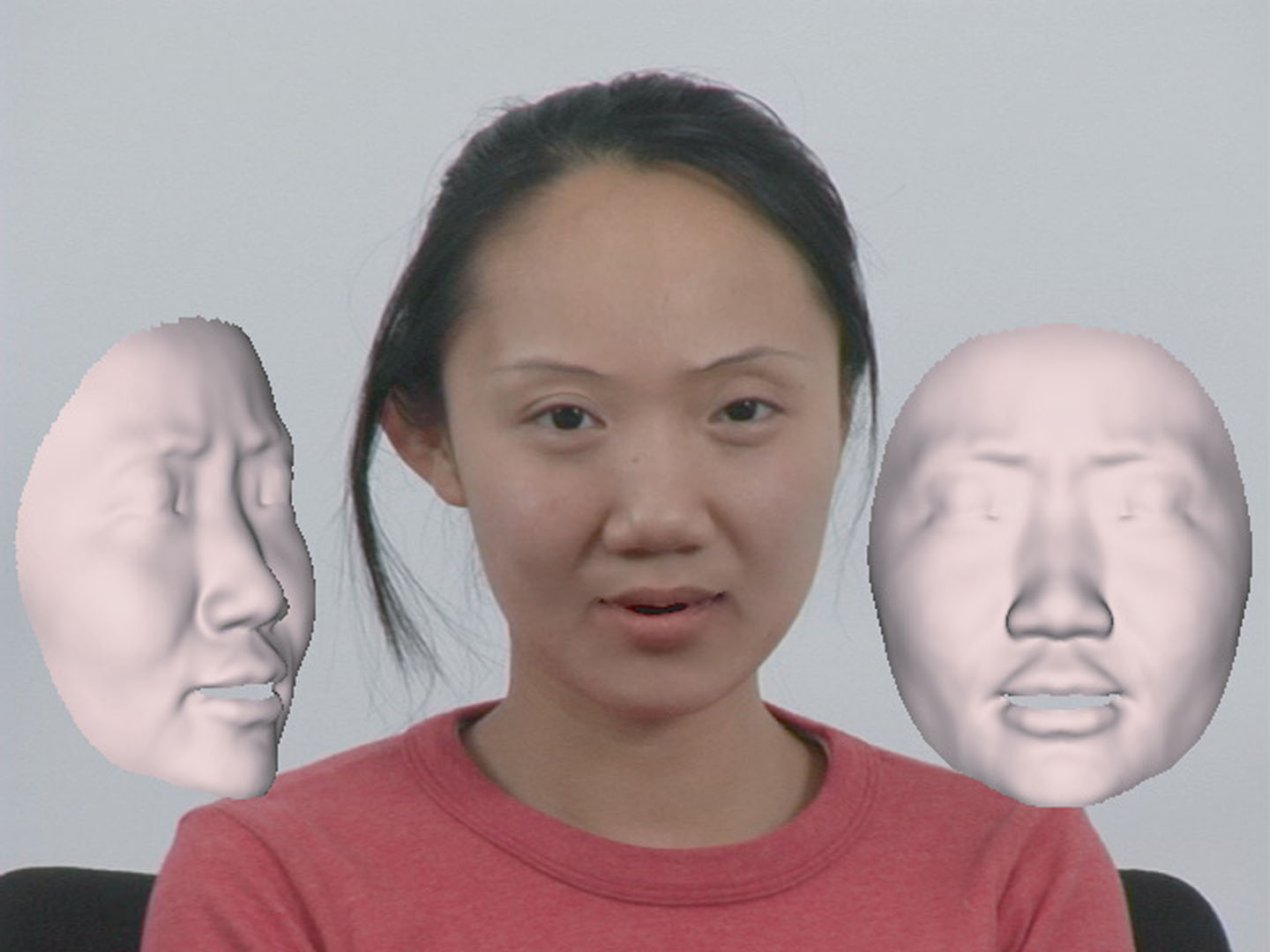

Face Transfer is a method for mapping videorecorded performances of one individual to facial animations of another. It extracts visemes (speech-related mouth articulations), expressions, and three-dimensional (3D) pose from monocular video or film footage. These parameters are then used to generate and drive a detailed 3D textured face mesh for a target identity, which can be seamlessly rendered back into target footage. The underlying face model automatically adjusts for how the target performs facial expressions and visemes. The performance data can be easily edited to change the visemes, expressions, pose, or even the identity of the target—the attributes are separably controllable. This supports a wide variety of video rewrite and puppetry applications.Face Transfer is based on a multilinear model of 3D face meshes that separably parameterizes the space of geometric variations due to different attributes (e.g., identity, expression, and viseme). Separability means that each of these attributes can be independently varied. A multilinear model can be estimated from a Cartesian product of examples (identities × expressions × visemes) with techniques from statistical analysis, but only after careful preprocessing of the geometric data set to secure one-to-one correspondence, to minimize cross-coupling artifacts, and to fill in any missing examples. Face Transfer offers new solutions to these problems and links the estimated model with a face-tracking algorithm to extract pose, expression, and viseme parameters.

References:

1. Allen, B., Curless, B., and Popović, Z. 2003. The space of human body shapes: Reconstruction and parameterization from range scans. ACM Transactions on Graphics 22, 3 (July), 587–594. Google ScholarDigital Library

2. Bascle, B., and Blake, A. 1998. Separability of pose and expression in facial tracking and animation. In International Conference on Computer Vision (ICCV), 323–328. Google ScholarDigital Library

3. Birchfield, S., 1996. KLT: An implementation of the kanade-lucas-tomasi feature tracker. http://www.ces.clemson.edu/~stb/.Google Scholar

4. Blanz, V., and Vetter, T. 1999. A morphable model for the synthesis of 3D faces. In Proceedings of SIGGRAPH 99, Computer Graphics Proceedings, Annual Conference Series, 187–194. Google ScholarDigital Library

5. Blanz, V., Basso, C., Poggio, T., and Vetter, T. 2003. Reanimating faces in images and video. Computer Graphics Forum 22, 3 (Sept.), 641–650.Google ScholarCross Ref

6. Brand, M. E., and Bhotika, R. 2001. Flexible flow for 3D nonrigid tracking and shape recovery. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 1, 315–322.Google Scholar

7. Brand, M. E. 2002. Incremental singular value decomposition of uncertain data with missing values. In European Conference on Computer Vision (ECCV), vol. 2350, 707–720. Google ScholarDigital Library

8. Bregler, C., Covell, M., and Slaney, M. 1997. Video rewrite: Driving visual speech with audio. In Proceedings of SIGGRAPH 97, Computer Graphics Proceedings, Annual Conference Series, 353–360. Google ScholarDigital Library

9. Bregler, C., Hertzmann, A., and Biermann, H. 2000. Recovering non-rigid 3D shape from image streams. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 2, 690–696.Google Scholar

10. Cao, Y., Faloutsos, P., and Pighin, F. 2003. Unsupervised learning for speech motion editing. In Eurographics/SIGGRAPH Symposium on Computer Animation (SCA), 225–231. Google ScholarDigital Library

11. Chai, J.-X., Xiao, J., and Hodgins, J. 2003. Vision-based control of 3D facial animation. In Eurographics/SIGGRAPH Symposium on Computer Animation (SCA), 193–206. Google ScholarDigital Library

12. Chuang, E. S., Deshpande, H., and Bregler, C. 2002. Facial expression space learning. In Pacific Conference on Computer Graphics and Applications (PG), 68–76. Google ScholarDigital Library

13. De Lathauwer, L. 1997. Signal Processing based on Multilinear Algebra. PhD thesis, Katholieke Universiteit Leuven, Belgium.Google Scholar

14. DeCarlo, D., and Metaxas, D. 1996. The integration of optical flow and deformable models with applications to human face shape and motion estimation. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 231–238. Google ScholarDigital Library

15. DeCarlo, D., and Metaxas, D. 2000. Optical flow constraints on deformable models with applications to face tracking. International Journal of Computer Vision 38, 2, 99–127. Google ScholarDigital Library

16. Essa, I., Basu, S., Darrell, T., and Pentland, A. 1996. Modeling, tracking and interactive animation of faces and heads: Using input from video. In Computer Animation ’96, 68–79. Google ScholarDigital Library

17. Ezzat, T., and Poggio, T. 2000. Visual speech synthesis by morphing visemes. International Journal of Computer Vision 38, 1, 45–57. Google ScholarDigital Library

18. Freeman, W. T., and Tenenbaum, J. B. 1997. Learning bilinear models for two factor problems in vision. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 554–560. Google ScholarDigital Library

19. Georghiades, A., Belhumeur, P., and Kriegman, D. 2001. From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI) 23, 6, 643–660. Google ScholarDigital Library

20. Gotsman, C., Gu, X., and Sheffer, A. 2003. Fundamentals of spherical parameterization for 3D meshes. ACM Transactions on Graphics 22, 3 (July), 358–363. Google ScholarDigital Library

21. Jones, T. R., Durand, F., and Desbrun, M. 2003. Non-iterative, feature-preserving mesh smoothing. ACM Transactions on Graphics 22, 3 (July), 943–949. Google ScholarDigital Library

22. Koch, R. M., Gross, M. H., Carls, F. R., Von Büren, D. F., Fankhauser, G., and Parish, Y. 1996. Simulating facial surgery using finite element methods. In Proceedings of SIGGRAPH 96, Computer Graphics Proceedings, Annual Conference Series, 421–428. Google ScholarDigital Library

23. Kraevoy, V., and Sheffer, A. 2004. Cross-parameterization and compatible remeshing of 3D models. ACM Transactions on Graphics 23, 3 (Aug.), 861–869. Google ScholarDigital Library

24. Kroonenberg, P. M., and De Leeuw, J. 1980. Principal component analysis of three-mode data by means of alternating least squares algorithms. Psychometrika 45, 69–97.Google ScholarCross Ref

25. Lee, Y., Terzopoulos, D., and Waters, K. 1995. Realistic modeling for facial animation. In Proceedings of SIGGRAPH 95, Computer Graphics Proceedings, Annual Conference Series, 55–62. Google ScholarDigital Library

26. Li, H., Roivainen, P., and Forchheimer, R. 1993. 3-D motion estimation in model-based facial image coding. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI) 15, 6, 545-555. Google ScholarDigital Library

27. Noh, J.-Y., and Neumann, U. 2001. Expression cloning. In Proceedings of SIGGRAPH 2001, Computer Graphics Proceedings, Annual Conference Series, 277–288. Google ScholarDigital Library

28. Parke, F. I. 1974. A parametric model for human faces. PhD thesis, University of Utah, Salt Lake City, Utah. Google ScholarDigital Library

29. Parke, F. I. 1982. Parameterized models for facial animation. IEEE Computer Graphics & Applications 2 (Nov.), 61–68.Google ScholarDigital Library

30. Pentland, A., and Sclaroff, S. 1991. Closed-form solutions for physically based shape modeling and recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI) 13, 7, 715–729. Google ScholarDigital Library

31. Pérez, P., Gangnet, M., and Blake, A. 2003. Poisson image editing. ACM Transactions on Graphics 22, 3 (July), 313–318. Google ScholarDigital Library

32. Pighin, F., Hecker, J., Lischinski, D., Szeliski, R., and Salesin, D. H. 1998. Synthesizing realistic facial expressions from photographs. In Proceedings of SIGGRAPH 98, Computer Graphics Proceedings, Annual Conference Series, 75–84. Google ScholarDigital Library

33. Pighin, F. H., Szeliski, R., and Salesin, D. 1999. Resynthesizing facial animation through 3d model-based tracking. In International Conference on Computer Vision (ICCV), 143–150.Google Scholar

34. Praun, E., and Hoppe, H. 2003. Spherical parameterization and remeshing. ACM Transactions on Graphics 22, 3 (July), 340–349. Google ScholarDigital Library

35. Robertson, B. 2004. Locomotion. Computer Graphics World (Dec.).Google Scholar

36. Roweis, S. 1997. EM algorithms for PCA and SPCA. In Advances in neural information processing systems 10 (NIPS), 626–632. Google ScholarDigital Library

37. Sirovich, L., and Kirby, M. 1987. Low dimensional procedure for the characterization of human faces. Journal of the Optical Society of America A 4, 519–524.Google ScholarCross Ref

38. Sumner, R. W., and Popović, J. 2004. Deformation transfer for triangle meshes. ACM Transactions on Graphics 23, 3 (Aug.), 399–405. Google ScholarDigital Library

39. Tipping, M. E., and Bishop, C. M. 1999. Probabilistic principal component analysis. Journal of the Royal Statistical Society, Series B 61, 3, 611–622.Google ScholarCross Ref

40. Torresani, L., Yang, D., Alexander, E., and Bregler, C. 2001. Tracking and modeling non-rigid objects with rank constraints. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 1, 493–450.Google Scholar

41. Tucker, L. R. 1966. Some mathematical notes on three-mode factor analysis. Psychometrika 31, 3 (Sept.), 279–311.Google ScholarCross Ref

42. Vasilescu, M. A. O., and Terzopoulos, D. 2002. Multilinear analysis of image ensembles: Tensorfaces. In European Conference on Computer Vision (ECCV), 447–460. Google ScholarDigital Library

43. Vasilescu, M. A. O., and Terzopoulos, D. 2004. Tensortextures: multilinear image-based rendering. ACM Transactions on Graphics 23, 3 (Aug.), 336-342. Google ScholarDigital Library

44. Viola, P., and Jones, M. 2001. Rapid object detection using a boosted cascade of simple features. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 1, 511–518.Google Scholar

45. Wang, Y., Huang, X., Lee, C.-S., Zhang, S., Li, Z., Samaras, D., Metaxas, D., Elgammal, A., and Huang, P. 2004. High resolution acquisition, learning and transfer of dynamic 3-d facial expressions. Computer Graphics Forum 23, 3 (Sept.), 677–686.Google ScholarCross Ref

46. Waters, K. 1987. A muscle model for animating three-dimensional facial expression. In Computer Graphics (Proceedings of SIGGRAPH 87), vol. 21, 17–24. Google ScholarDigital Library

47. Williams, L. 1990. Performance-driven facial animation. In Computer Graphics (Proceedings of SIGGRAPH 90), vol. 24, 235–242. Google ScholarDigital Library

48. Zhang, L., Snavely, N., Curless, B., and Seitz, S. M. 2004. Space-time faces: high resolution capture for modeling and animation. ACM Transactions on Graphics 23, 3 (Aug.), 548-558. Google ScholarDigital Library