“Face Expression Synthesis Based on a Facial Motion Distribution Chart” by Yotsukura, Morishima and Nakamura

Entry Number: 085

Abstract:

In recent years, 3D computer graphics techniques have been used to create virtual humans and cartoon characters in the entertainment industry. The facial expressions and mouth movements of these characters are natural and smooth. However, such results require a tremendous amount of time and effort from accomplished CG creators. Producing facial expressions for a face mesh object typically requires preparing, in advance, a deformed object for every target expression. Blend shape deformers (another term for “morphing”) then change the neutral face object into these deformed objects.
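The blend shape step can be illustrated with the common linear formulation. In this formulation, each output vertex is the neutral vertex plus a weighted sum of target offsets, `v = v0 + sum_k w_k * (t_k - v0)`. The function name `blend_shapes` and the plain-tuple mesh representation are illustrative assumptions, not the deformer of any particular CG package.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def blend_shapes(neutral: Sequence[Vec3],
                 targets: Sequence[Sequence[Vec3]],
                 weights: Sequence[float]) -> List[Vec3]:
    """Linear blend shape ("morph target") deformation.

    neutral: vertex positions of the neutral face object.
    targets: one list of vertex positions per hand-made deformed object.
    weights: one blend weight per target, typically in [0, 1].
    """
    if len(targets) != len(weights):
        raise ValueError("one weight per target is required")
    result = []
    for i, (nx, ny, nz) in enumerate(neutral):
        dx = dy = dz = 0.0
        # Accumulate the weighted offset (target - neutral) of every target.
        for target, w in zip(targets, weights):
            tx, ty, tz = target[i]
            dx += w * (tx - nx)
            dy += w * (ty - ny)
            dz += w * (tz - nz)
        result.append((nx + dx, ny + dy, nz + dz))
    return result
```

For example, with `neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]` and a single target `smile = [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]`, a weight of `0.5` moves the first vertex halfway, to `(0.0, 0.5, 0.0)`. Every mesh passed in `targets` must still be modeled by hand. This is the cost that the FMD Chart approach aims to reduce.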

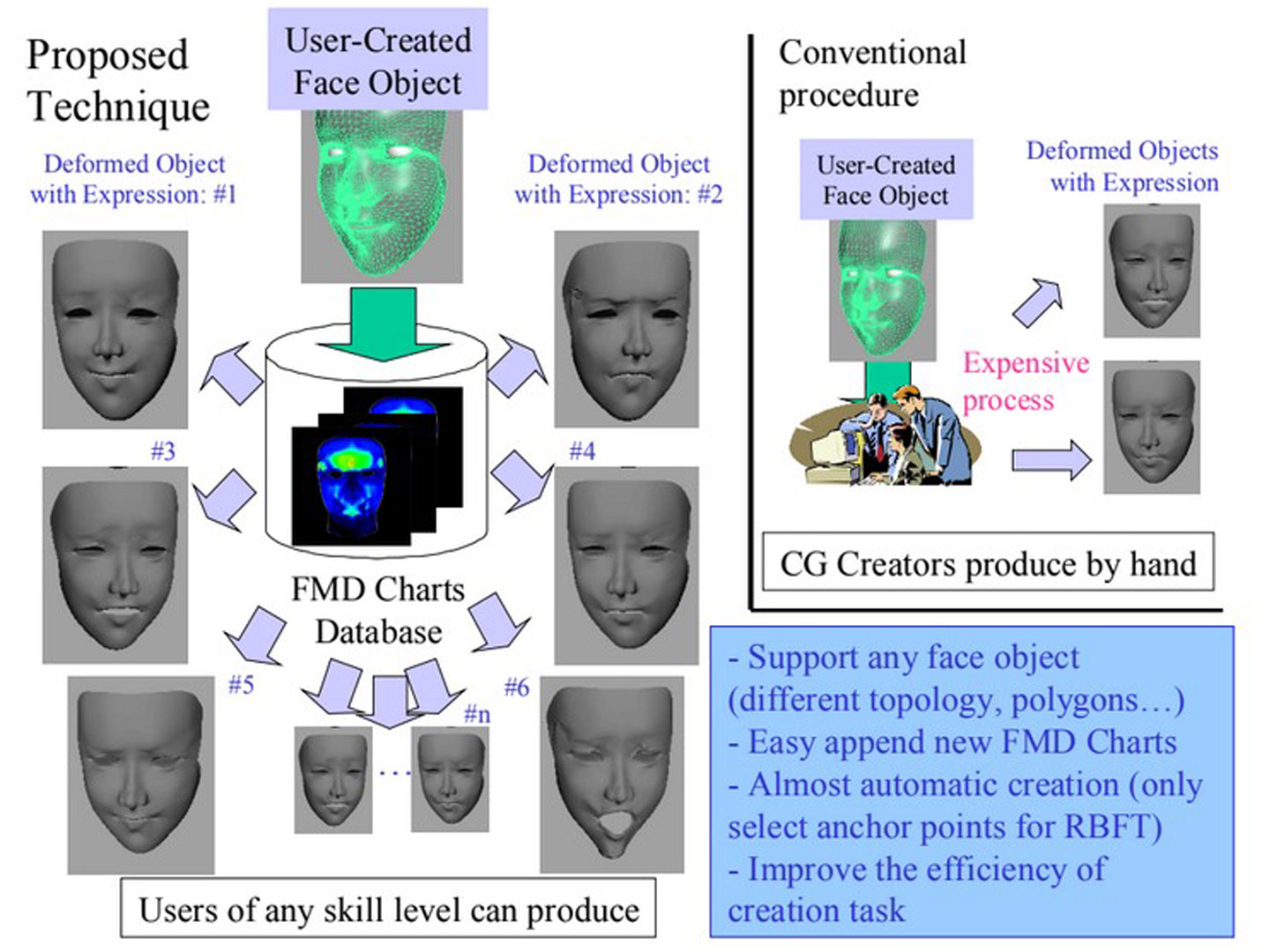

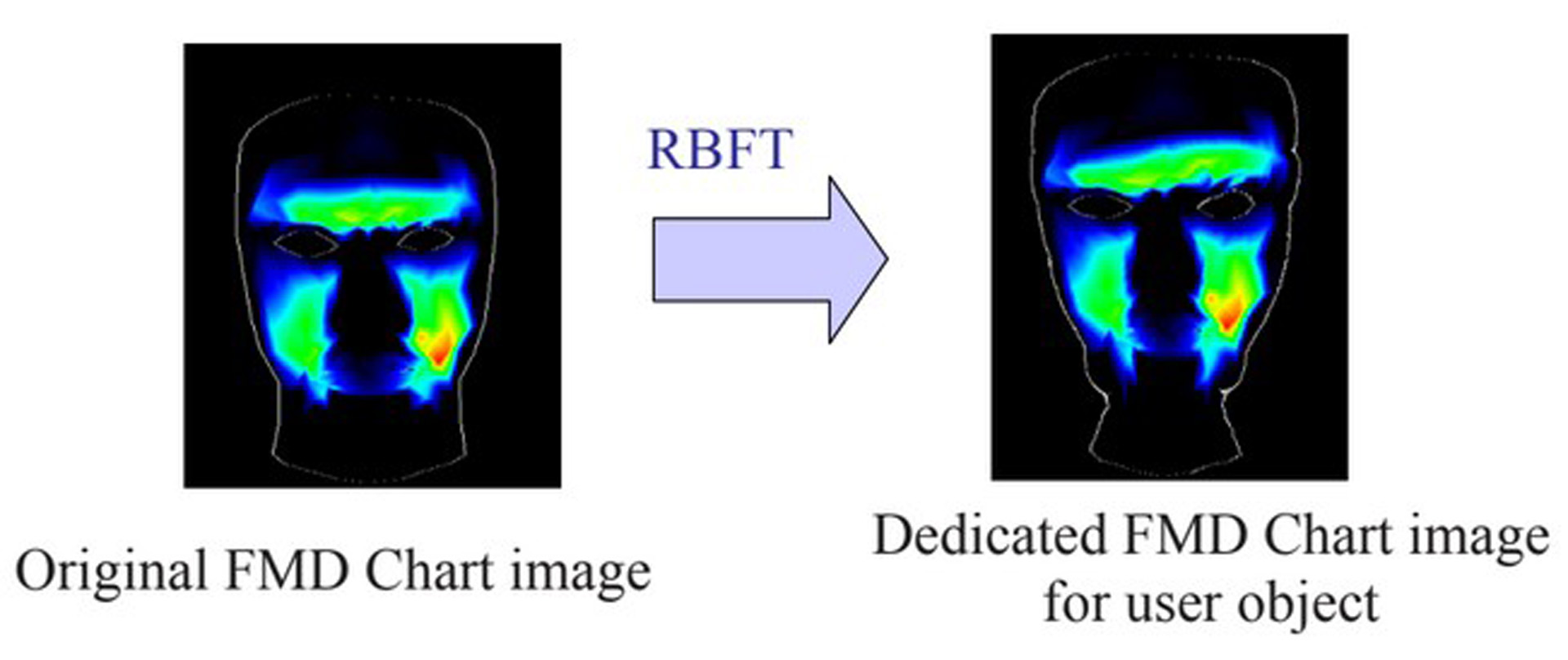

To reduce this effort, we propose a technique that creates deformed expression models from any user-created face object in a short time (Figure 1). First, we create a “Facial Motion Distribution Chart” (FMD Chart). For each mesh node of the face object, this chart records the 3D displacement between a neutral object and a deformed object. To deform the user's object with the FMD Chart, we smoothly adapt the chart to it using Radial Basis Function Translation (RBFT). The user who created the face object can thereby obtain deformed expression objects automatically. In other words, the user prepares charts for various expressions, including realistic, cartoon-like, and personalized ones, and the user's face object can then acquire every deformed expression it needs.

This technique is similar to expression cloning [Noh and Neumann 2001], which also relies on RBF-based warping. However, we improve the accuracy and usability of cloning onto the face object. For example, we define the FMD Chart in an image-space field (similar to u-v texture space). This field lets the chart match the target mesh exactly and keeps the upper and lower lips separate.
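The abstract does not specify the FMD Chart's data layout. As a minimal sketch, assume the chart is a `width` × `height` grid in u-v space whose cells hold 3D displacement vectors (deformed minus neutral). The helper names `build_fmd_chart`, `sample_chart`, and `apply_chart`, the nearest-node rasterization, and the bilinear lookup are all hypothetical choices. The sketch also assumes that the user object's u-v coordinates are already aligned with the chart, which is the alignment the RBFT step provides.

```python
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]
Chart = List[List[Vec3]]  # chart[row][col], row indexes v and col indexes u


def build_fmd_chart(neutral: Sequence[Vec3], deformed: Sequence[Vec3],
                    uvs: Sequence[Vec2], width: int, height: int) -> Chart:
    """Store per-node 3D displacements in an image-space (u-v) grid.

    Each cell holds the displacement (deformed - neutral) of the source
    node whose u-v coordinate lies nearest to the cell centre.
    """
    disp = [tuple(d - n for n, d in zip(nv, dv))
            for nv, dv in zip(neutral, deformed)]
    chart: Chart = []
    for row in range(height):
        v = (row + 0.5) / height
        cells = []
        for col in range(width):
            u = (col + 0.5) / width
            nearest = min(range(len(uvs)),
                          key=lambda k: (uvs[k][0] - u) ** 2 + (uvs[k][1] - v) ** 2)
            cells.append(disp[nearest])
        chart.append(cells)
    return chart


def _lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def sample_chart(chart: Chart, u: float, v: float) -> Vec3:
    """Bilinearly interpolate the chart at (u, v) in [0, 1] x [0, 1]."""
    height, width = len(chart), len(chart[0])
    # Continuous cell coordinates, clamped to the outermost cell centres.
    x = min(max(u * width - 0.5, 0.0), width - 1.0)
    y = min(max(v * height - 0.5, 0.0), height - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    top = _lerp(chart[y0][x0], chart[y0][x1], x - x0)
    bottom = _lerp(chart[y1][x0], chart[y1][x1], x - x0)
    return _lerp(top, bottom, y - y0)


def apply_chart(user_neutral: Sequence[Vec3], user_uvs: Sequence[Vec2],
                chart: Chart) -> List[Vec3]:
    """Displace each user node by the chart value read at its u-v coordinate."""
    return [tuple(p + d for p, d in zip(pos, sample_chart(chart, u, v)))
            for pos, (u, v) in zip(user_neutral, user_uvs)]
```

Nodes are looked up by u-v position rather than by 3D proximity. As a result, two nodes that coincide in 3D, such as the upper and lower lips of a closed mouth, can still receive opposite displacements if they sit in different regions of the chart. Near the seam between such regions, bilinear filtering mixes the two displacements, so a practical chart would need to treat the mouth boundary explicitly.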

This paper describes the definition of the FMD Chart, the method for creating it, and the fitting between the user face object and the deformed object. We also demonstrate results of re-synthesizing the user object with facial expressions.
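The abstract names Radial Basis Function Translation (RBFT) as the means of smoothly adapting the chart to the user object, but it gives no formulation. The sketch below shows one common way to build such a 2D warp. It assumes that corresponding landmarks (for example, eye and mouth corners) are known both in the chart's u-v space and on the user object. The thin-plate-spline kernel, the affine term, and the names `fit_rbf_warp` and `_solve` are illustrative assumptions, not the authors' stated choices.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec2 = Tuple[float, float]


def _tps_kernel(r: float) -> float:
    # Thin-plate spline basis phi(r) = r^2 log r, with phi(0) = 0.
    return 0.0 if r == 0.0 else r * r * math.log(r)


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_rbf_warp(src: Sequence[Vec2], dst: Sequence[Vec2]) -> Callable[[Vec2], Vec2]:
    """Fit a 2D RBF warp (thin-plate kernel plus affine term) mapping src onto dst.

    Requires at least three non-collinear, distinct src landmarks.
    """
    n = len(src)
    size = n + 3
    a = [[0.0] * size for _ in range(size)]
    for i, (xi, yi) in enumerate(src):
        for j, (xj, yj) in enumerate(src):
            a[i][j] = _tps_kernel(math.hypot(xi - xj, yi - yj))
        # Affine block [1, x, y] and its transpose (the side conditions).
        for k, p in enumerate((1.0, xi, yi)):
            a[i][n + k] = p
            a[n + k][i] = p
    # One independent solve per output coordinate (u and v).
    coeffs = [_solve(a, [p[axis] for p in dst] + [0.0, 0.0, 0.0]) for axis in (0, 1)]

    def warp(point: Vec2) -> Vec2:
        x, y = point
        out = []
        for c in coeffs:
            value = c[n] + c[n + 1] * x + c[n + 2] * y
            value += sum(c[i] * _tps_kernel(math.hypot(x - sx, y - sy))
                         for i, (sx, sy) in enumerate(src))
            out.append(value)
        return (out[0], out[1])

    return warp
```

The warp passes exactly through every landmark pair. Because it includes an affine term, any global shift, scale, or shear between the two landmark sets is reproduced exactly, and the radial terms account only for the remaining local deformation.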

References:

1. Noh, J., and Neumann, U. 2001. Expression Cloning. In Proceedings of ACM SIGGRAPH 2001, E. Fiume, Ed., Computer Graphics Proceedings, Annual Conference Series, ACM Press / ACM SIGGRAPH, New York, 277–288.

Acknowledgements:

This research was supported in part by the National Institute of Information and Communications Technology of Japan.