“Dynamic response for motion capture animation” by Zordan, Majkowska, Chiu and Fast

Title:

- Dynamic response for motion capture animation

Abstract:

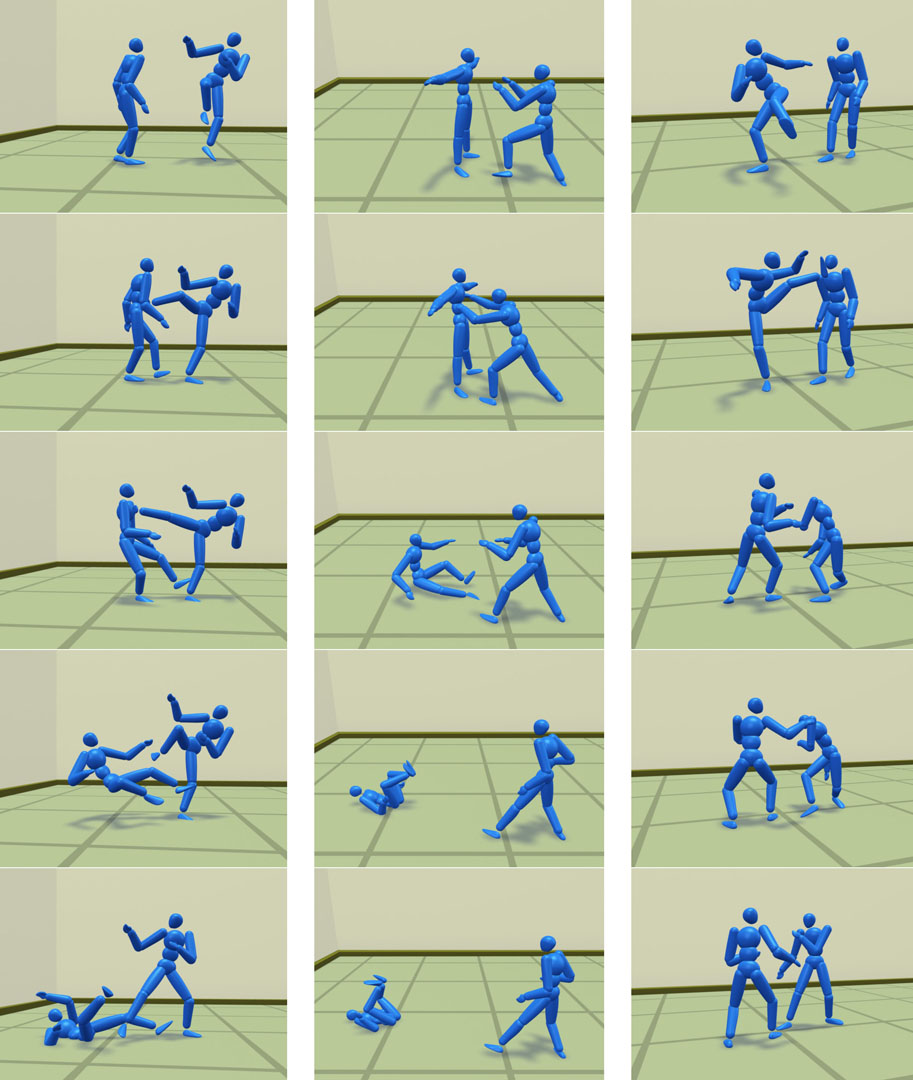

Human motion capture embeds rich detail and style which is difficult to generate with competing animation synthesis technologies. However, such recorded data requires principled means for creating responses in unpredicted situations, for example reactions immediately following impact. This paper introduces a novel technique for incorporating unexpected impacts into a motion capture-driven animation system through the combination of a physical simulation which responds to contact forces and a specialized search routine which determines the best plausible re-entry into motion library playback following the impact. Using an actuated dynamic model, our system generates a physics-based response while connecting motion capture segments. Our method allows characters to respond to unexpected changes in the environment based on the specific dynamic effects of a given contact while also taking advantage of the realistic movement made available through motion capture. We show the results of our system under various conditions and with varying responses using martial arts motion capture as a testbed.
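The re-entry search mentioned in the abstract can be pictured as a nearest-pose lookup over the motion library. The sketch below is only an illustration under stated assumptions: the abstract does not give the distance metric, the pose representation, or any function names, so `pose_distance`, `find_reentry`, the per-joint `weights`, and the toy clips are all hypothetical and are not the authors' implementation.

```python
from math import sqrt


def pose_distance(pose_a, pose_b, weights):
    """Weighted Euclidean distance between two poses (lists of joint angles).

    Assumption: a pose is a flat list of joint angles; the paper's actual
    metric is not stated in the abstract.
    """
    return sqrt(sum(w * (a - b) ** 2 for a, b, w in zip(pose_a, pose_b, weights)))


def find_reentry(simulated_pose, library, weights):
    """Return (clip_name, frame_index, distance) for the closest library frame."""
    best = None
    for clip_name, frames in library.items():
        for frame_index, frame_pose in enumerate(frames):
            d = pose_distance(simulated_pose, frame_pose, weights)
            if best is None or d < best[2]:
                best = (clip_name, frame_index, d)
    return best


# Toy library: each pose has three joint angles in radians (made-up values).
library = {
    "guard": [[0.0, 0.1, 0.2], [0.1, 0.2, 0.3]],
    "stumble": [[0.8, 0.5, 0.1], [0.6, 0.4, 0.2]],
}
weights = [1.0, 1.0, 1.0]
simulated_pose = [0.6, 0.4, 0.2]  # stand-in for the simulated post-impact pose

clip, frame, dist = find_reentry(simulated_pose, library, weights)
print(clip, frame, dist)
```

A brute-force scan keeps the idea easy to follow. A real system would likely compare short windows of frames rather than single poses and would account for velocities and root orientation, none of which is modeled here.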

References:

1. Arikan, O., and Forsyth, D. A. 2002. Synthesizing constrained motions from examples. ACM Transactions on Graphics 21, 3 (July), 483–490.

2. Faloutsos, P., Van De Panne, M., and Terzopoulos, D. 2001. Composable controllers for physics-based character animation. In Proceedings of ACM SIGGRAPH 2001, 251–260.

3. Komura, T., Leung, H., and Kuffner, J. 2004. Animating reactive motions for biped locomotion. In Proc. ACM Symp. on Virtual Reality Software and Technology (VRST ’04).

4. Kovar, L., Gleicher, M., and Pighin, F. 2002. Motion graphs. ACM Transactions on Graphics 21, 3 (July), 473–482.

5. Lee, J., Chai, J., Reitsma, P. S. A., Hodgins, J. K., and Pollard, N. S. 2002. Interactive control of avatars animated with human motion data. ACM Transactions on Graphics 21, 3 (July), 491–500.

6. Li, Y., Wang, T., and Shum, H.-Y. 2002. Motion texture: A two-level statistical model for character motion synthesis. ACM Transactions on Graphics 21, 3 (July), 465–472.

7. Mandel, M., 2004. Versatile and interactive virtual humans: Hybrid use of data-driven and dynamics-based motion synthesis. Master’s Thesis. Carnegie Mellon University.

8. Metoyer, R., 2002. Building behaviors with examples. Ph.D. Dissertation, Georgia Institute of Technology.

9. NaturalMotion, 2005. Endorphin. www.naturalmotion.com.

10. Oshita, M., and Makinouchi, A. 2001. A dynamic motion control technique for human-like articulated figures. Computer Graphics Forum (Eurographics 2001) 20, 3, 192–202.

11. Pollard, N. S., and Behmaram-Mosavat, F. 2000. Force-based motion editing for locomotion tasks. In Proceedings of the IEEE International Conference on Robotics and Automation.

12. Pollard, N. S. 1999. Simple machines for scaling human motion. In Computer Animation and Simulation ’99, Eurographics Workshop, 3–11.

13. Popović, Z., and Witkin, A. 1999. Physically based motion transformation. In Proceedings of ACM SIGGRAPH 1999, 11–20.

14. Reitsma, P. S. A., and Pollard, N. S. 2003. Perceptual metrics for character animation: Sensitivity to errors in ballistic motion. ACM Transactions on Graphics 22, 3 (July), 537–542.

15. Rose, C., Guenter, B., Bodenheimer, B., and Cohen, M. F. 1996. Efficient generation of motion transitions using spacetime constraints. In Proceedings of ACM SIGGRAPH 1996, 147–154.

16. Schödl, A., Szeliski, R., Salesin, D. H., and Essa, I. 2000. Video textures. In Proceedings of ACM SIGGRAPH 2000, 489–498.

17. Shapiro, A., Pighin, F., and Faloutsos, P. 2003. Hybrid control for interactive character animation. In Pacific Graphics 2003, 455–461.

18. Smith, R., 2005. Open dynamics engine. www.ode.org.

19. Wang, J., and Bodenheimer, B. 2004. Computing the duration of motion transitions: an empirical approach. In ACM SIGGRAPH / Eurographics Symposium on Computer Animation, 335–344.

20. Zordan, V. B., and Hodgins, J. K. 2002. Motion capture-driven simulations that hit and react. In ACM SIGGRAPH / Eurographics Symposium on Computer Animation, 89–96.

21. Zordan, V. B., and Horst, N. V. D. 2003. Mapping optical motion capture data to skeletal motion using a physical model. In ACM SIGGRAPH / Eurographics Symposium on Computer Animation, 140–145.