“Diffusion Image Analogies” by Šubrtová, Lukáč, Čech, Futschik, Shechtman, et al. …

Conference:

Type(s):

Title:

- Diffusion Image Analogies

Session/Category Title: Neural Image Generation and Editing

Presenter(s)/Author(s):

Moderator(s):

Abstract:

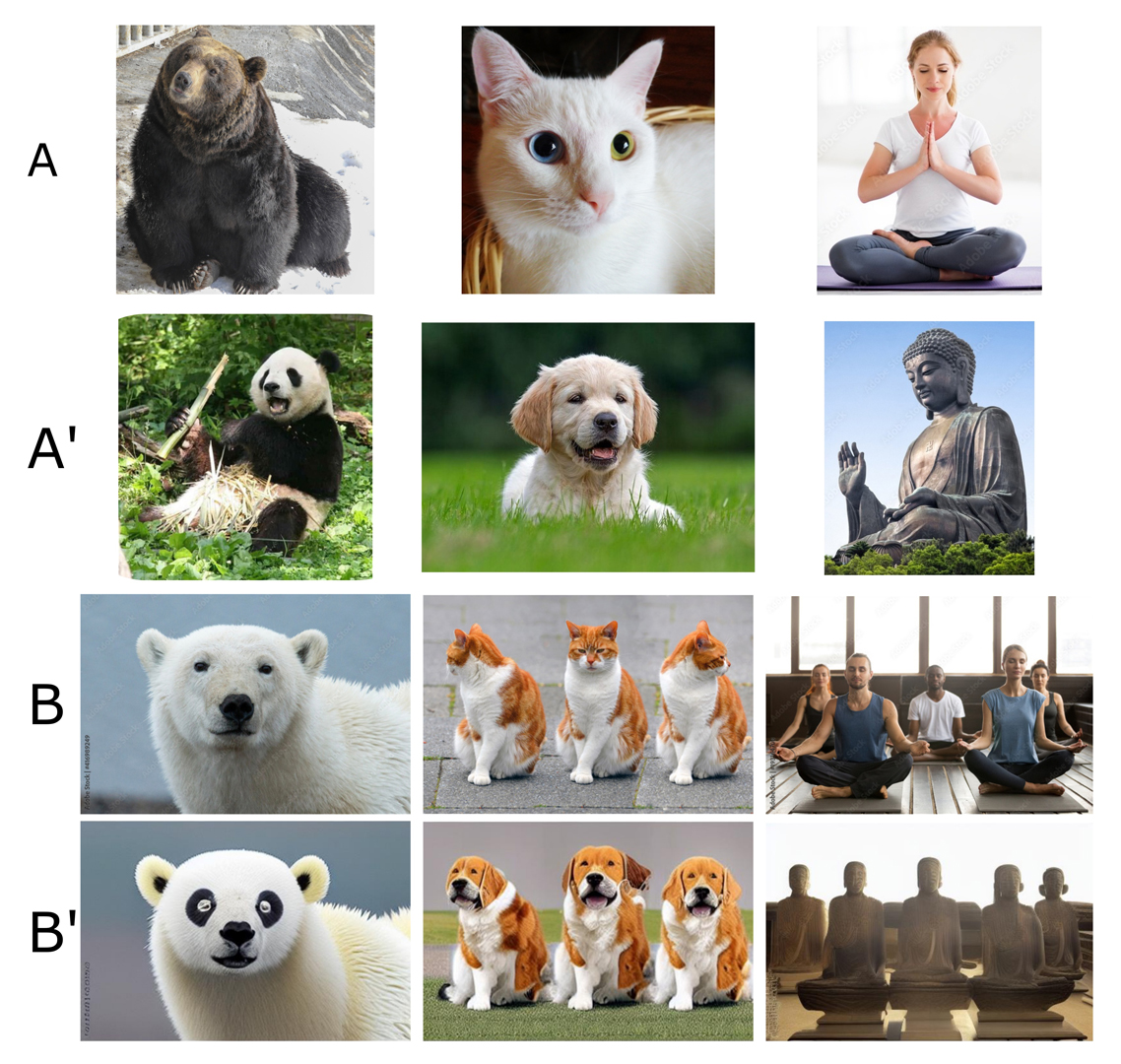

In this paper we present Diffusion Image Analogies—an example-based image editing approach that builds upon the concept of image analogies originally introduced by Hertzmann et al. [2001]. Given a pair of images that specify the intent of a specific transition, our approach enables to modify the target image in a way that it follows the analogy specified by this exemplar. In contrast to previous techniques which were able to capture analogies mostly on the low-level textural details our approach handles also changes in higher level semantics including transition of object domain, change of facial expression, or stylization. Although similar modifications can be achieved using diffusion models guided by text prompts [Rombach et al. 2022] our approach can operate solely in the domain of images without the need to specify the user’s intent using textual form. We demonstrate power of our approach in various challenging scenarios where the specified analogy would be difficult to transfer using previous techniques.

References:

1. Michael Ashikhmin. 2001. Synthesizing Natural Textures. In Proceedings of Symposium on Interactive 3D graphics. 217–226.

2. Omri Avrahami, Dani Lischinski, and Ohad Fried. 2022. Blended Diffusion for Text-driven Editing of Natural Images. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. 18208–18209.

3. Amir Bar, Yossi Gandelsman, Trevor Darrell, Amir Globerson, and Alexei A Efros. 2022. Visual Prompting via Image Inpainting. In Advances in Neural Information Processing Systems.

4. Connelly Barnes, Eli Shechtman, Adam Finkelstein, and Dan B Goldman. 2009. PatchMatch: A randomized correspondence algorithm for structural image editing. ACM Transactions on Graphics 28, 3 (2009), 24.

5. Pierre Bénard, Forrester Cole, Michael Kass, Igor Mordatch, James Hegarty, Martin Sebastian Senn, Kurt Fleischer, Davide Pesare, and Katherine Breeden. 2013. Stylizing Animation By Example. ACM Transactions on Graphics 32, 4 (2013), 119.

6. Albert S Berahas, Jorge Nocedal, and Martin Takac. 2016. A Multi-Batch L-BFGS Method for Machine Learning. In Advances in Neural Information Processing Systems.

7. Tim Brooks, Aleksander Holynski, and Alexei A. Efros. 2023. InstructPix2Pix: Learning to Follow Image Editing Instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition.

8. Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel Ziegler, Jeffrey Wu, Clemens Winter, Chris Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. 2020. Language Models are Few-Shot Learners. In Advances in Neural Information Processing Systems. 1877–1901.

9. Mathilde Caron, Hugo Touvron, Ishan Misra, Herve Jegou, Julien Mairal, Piotr Bojanowski, and Armand Joulin. 2021. Emerging properties in self-supervised vision transformers. In Proceedings of IEEE International Conference on Computer Vision. 9650–9660.

10. Olga Diamanti, Connelly Barnes, Sylvain Paris, Eli Shechtman, and Olga Sorkine-Hornung. 2015. Synthesis of Complex Image Appearance from Limited Exemplars. ACM Transactions on Graphics 34, 2 (2015), 22.

11. Jakub Fišer, Ondřej Jamriška, Michal Lukáč, Eli Shechtman, Paul Asente, Jingwan Lu, and Daniel Sýkora. 2016. StyLit: Illumination-Guided Example-Based Stylization of 3D Renderings. ACM Transactions on Graphics 35, 4 (2016), 92.

12. Jakub Fišer, Ondřej Jamriška, David Simons, Eli Shechtman, Jingwan Lu, Paul Asente, Michal Lukáč, and Daniel Sýkora. 2017. Example-Based Synthesis of Stylized Facial Animations. ACM Transactions on Graphics 36, 4 (2017), 155.

13. William T. Freeman, Thouis R. Jones, and Egon C. Pasztor. 2002. Example-Based Super-Resolution. IEEE Computer Graphics and Applications 22, 2 (2002), 56–65.

14. David Futschik, Menglei Chai, Chen Cao, Chongyang Ma, Aleksei Stoliar, Sergey Korolev, Sergey Tulyakov, Michal Kučera, and Daniel Sýkora. 2019. Real-Time Patch-Based Stylization of Portraits Using Generative Adversarial Network. In Proceedings of the ACM/EG Expressive Symposium. 33–42.

15. David Futschik, Michal Kučera, Michal Lukáč, Zhaowen Wang, Eli Shechtman, and Daniel Sýkora. 2021. STALP: Style Transfer with Auxiliary Limited Pairing. Computer Graphics Forum 40, 2 (2021), 563–573.

16. Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H. Bermano, Gal Chechik, and Daniel Cohen-Or. 2022a. An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion. In arXiv:2208.01618.

17. Rinon Gal, Or Patashnik, Haggai Maron, Amit H. Bermano, Gal Chechik, and Daniel Cohen-Or. 2022b. StyleGAN-NADA: CLIP-Guided Domain Adaptation of Image Generators. ACM Transactions on Graphics 41, 4 (2022), 141.

18. Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge. 2016. Image Style Transfer Using Convolutional Neural Networks. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. 2414–2423.

19. Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2022. Prompt-to-prompt image editing with cross attention control. In arXiv:2208.01626.

20. Aaron Hertzmann, Charles E. Jacobs, Nuria Oliver, Brian Curless, and David H. Salesin. 2001. Image Analogies. In SIGGRAPH Conference Proceedings. 327–340.

21. Jonathan Ho, Chitwan Saharia, William Chan, David J. Fleet, Mohammad Norouzi, and Tim Salimans. 2022. Cascaded Diffusion Models for High Fidelity Image Generation. Journal of Machine Learning Research 23, 47 (2022), 1–33.

22. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. 5967–5976.

23. Ondřej Jamriška, Šárka Sochorová, Ondřej Texler, Michal Lukáč, Jakub Fišer, Jingwan Lu, Eli Shechtman, and Daniel Sýkora. 2019. Stylizing Video by Example. ACM Transactions on Graphics 38, 4 (2019), 107.

24. Bahjat Kawar, Shiran Zada, Oran Lang, Omer Tov, Huiwen Chang, Tali Dekel, Inbar Mosseri, and Michal Irani. 2023. Imagic: Text-Based Real Image Editing with Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition.

25. Nicholas I. Kolkin, Jason Salavon, and Gregory Shakhnarovich. 2019. Style Transfer by Relaxed Optimal Transport and Self-Similarity. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. 10051–10060.

26. Gihyun Kwon and Jong Chul Ye. 2023. Diffusion-based Image Translation using disentangled style and content representation. In Proceedings of International Conference on Learning Representations.

27. LambdaLabsML. 2022. Lambda Diffusers – Stable Diffusion Image Variations. https://github.com/LambdaLabsML/lambda-diffusers

28. Chuan Li and Michael Wand. 2016. Combining Markov Random Fields and Convolutional Neural Networks for Image Synthesis. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. 2479–2486.

29. Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. 2022. BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. In International Conference on Machine Learning. 12888–12900.

30. Yijun Li, Chen Fang, Jimei Yang, Zhaowen Wang, Xin Lu, and Ming-Hsuan Yang. 2017. Universal Style Transfer via Feature Transforms. In Advances in Neural Information Processing Systems. 385–395.

31. Jing Liao, Yuan Yao, Lu Yuan, Gang Hua, and Sing Bing Kang. 2017. Visual Attribute Transfer Through Deep Image Analogy. ACM Transactions on Graphics 36, 4 (2017), 120.

32. Ming-Yu Liu, Xun Huang, Arun Mallya, Tero Karras, Timo Aila, Jaakko Lehtinen, and Jan Kautz. 2019. Few-Shot Unsupervised Image-to-Image Translation. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. 10551–10560.

33. Ilya Loshchilov and Frank Hutter. 2019. Decoupled Weight Decay Regularization. In International Conference on Learning Representations.

34. Chenlin Meng, Yutong He, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu, and Stefano Ermon. 2022. SDEdit: Guided Image Synthesis and Editing with Stochastic Differential Equations. In Proceedings of International Conference on Learning Representations.

35. Tomáš Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. 2013. Efficient Estimation of Word Representations in Vector Space. In Proceedings of International Conference on Learning Representations.

36. Ron Mokady, Amir Hertz, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2022. Null-text Inversion for Editing Real Images using Guided Diffusion Models. In arXiv:2211.09794.

37. Alex Nichol, Prafulla Dhariwal, Aditya Ramesh, Pranav Shyam, Pamela Mishkin, Bob McGrew, Ilya Sutskever, and Mark Chen. 2022. GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models. In Proceedings of International Conference on Machine Learning. 16784–16804.

38. Taesung Park, Alexei A. Efros, Richard Zhang, and Jun-Yan Zhu. 2020. Contrastive Learning for Unpaired Image-to-Image Translation. In Proceedings of European Conference on Computer Vision.

39. Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. 2021. StyleCLIP: Text-Driven Manipulation of StyleGAN Imagery. In Proceedings of IEEE International Conference on Computer Vision. 2085–2094.

40. Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. 2021. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning. 8748–8763.

41. Alec Radford, Jeff Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever. 2019. Language Models are Unsupervised Multitask Learners. (2019).

42. Aditya Ramesh. 2022. How DALL·E 2 Works. http://adityaramesh.com/posts/dalle2/dalle2.html

43. Lincoln Ritter, Wilmot Li, Brian Curless, Maneesh Agrawala, and David Salesin. 2006. Painting With Texture. In Proceedings of Eurographics Symposium on Rendering. 371–376.

44. Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-resolution Image Synthesis with Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10684–10695.

45. Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. 2023. Dreambooth: Fine tuning text-to-image diffusion models for subject-driven generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition.

46. Karen Simonyan and Andrew Zisserman. 2014. Very Deep Convolutional Networks for Large-Scale Image Recognition. CoRR abs/1409.1556 (2014).

47. Jiaming Song, Chenlin Meng, and Stefano Ermon. 2021. Denoising Diffusion Implicit Models. In Proceedings of International Conference on Learning Representations.

48. Yoad Tewel, Yoav Shalev, Idan Schwartz, and Lior Wolf. 2022. ZeroCap: Zero-Shot Image-to-Text Generation for Visual-Semantic Arithmetic. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 17897–17907.

49. Ondřej Texler, David Futschik, Jakub Fišer, Michal Lukáč, Jingwan Lu, Eli Shechtman, and Daniel Sýkora. 2020a. Arbitrary Style Transfer Using Neurally-Guided Patch-Based Synthesis. Computers & Graphics 87 (2020), 62–71.

50. Ondřej Texler, David Futschik, Michal Kučera, Ondřej Jamriška, Šárka Sochorová, Menglei Chai, Sergey Tulyakov, and Daniel Sýkora. 2020b. Interactive Video Stylization Using Few-Shot Patch-Based Training. ACM Transactions on Graphics 39, 4 (2020), 73.

51. Narek Tumanyan, Omer Bar-Tal, Shai Bagon, and Tali Dekel. 2022. Splicing ViT Features for Semantic Appearance Transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10748–10757.

52. Pan Zhang, Bo Zhang, Dong Chen, Lu Yuan, and Fang Wen. 2020. Cross-domain Correspondence Learning for Exemplar-based Image Translation. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. 5143–5153.

53. Yang Zhou, Huajie Shi, Dani Lischinski, Minglun Gong, Johannes Kopf, and Hui Huang. 2017. Analysis and Controlled Synthesis of Inhomogeneous Textures. Computer Graphics Forum 36, 2 (2017), 199–212.

54. Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros. 2017. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of IEEE International Conference on Computer Vision. 2242–2251.