“Dictionary Fields: Learning a Neural Basis Decomposition” by Chen, Xu, Wei, Tang, Su, et al. …

Conference:

Type(s):

Title:

- Dictionary Fields: Learning a Neural Basis Decomposition

Session/Category Title: Deep Geometric Learning

Presenter(s)/Author(s):

Moderator(s):

Abstract:

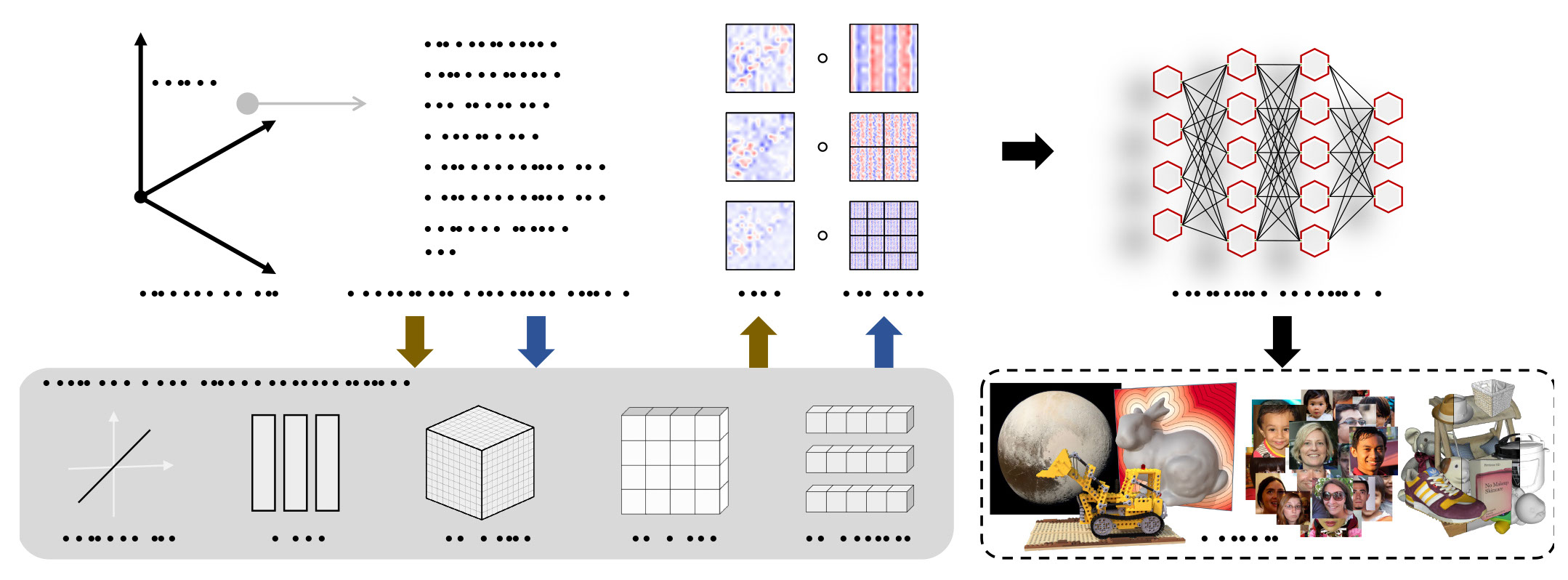

We present Dictionary Fields, a novel neural representation that decomposes a signal into a product of factors, each represented by a classical or neural field operating on transformed input coordinates. More specifically, we factorize a signal into a coefficient field and a basis field, and exploit periodic coordinate transformations to apply the same basis functions across multiple locations and scales. Our experiments show that Dictionary Fields improve approximation quality, compactness, and training time compared to previous fast reconstruction methods. In particular, our representation achieves better approximation quality on 2D image regression tasks, higher geometric quality when reconstructing 3D signed distance fields, and higher compactness for radiance field reconstruction tasks. Furthermore, Dictionary Fields enable generalization to unseen images and 3D scenes by sharing bases across signals during training, which greatly benefits use cases such as image regression from partial observations and few-shot radiance field reconstruction.
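
The factorization described in the abstract can be illustrated with a minimal 1D sketch. This is not the authors' implementation: the grid-based fields, the linear interpolation, the sawtooth transform as the periodic coordinate transformation, the sum over channels, and every function and parameter name below are assumptions made purely for illustration.

```python
import numpy as np

def sawtooth(x, frequency):
    """Assumed periodic coordinate transform: repeat [0, 1) `frequency` times."""
    return (x * frequency) % 1.0

def interp_grid(grid, u):
    """Linearly interpolate a (resolution, channels) grid at coordinates u in [0, 1]."""
    res = grid.shape[0]
    pos = np.clip(u, 0.0, 1.0) * (res - 1)
    lo = np.floor(pos).astype(int)
    hi = np.minimum(lo + 1, res - 1)
    w = (pos - lo)[:, None]
    return (1.0 - w) * grid[lo] + w * grid[hi]

def factorized_field(x, coeff_grid, basis_grids, frequencies):
    """Evaluate sum_k coefficient_k(x) * basis_k(transformed x) at 1D points x.

    coeff_grid:  (res_c, levels * channels) grid sampled at the raw coordinate.
    basis_grids: one (res_b, channels) grid per level, sampled at the
                 periodically transformed coordinate, so the same basis values
                 are reused at many locations and, across levels, many scales.
    """
    coeff = interp_grid(coeff_grid, x)
    basis = np.concatenate(
        [interp_grid(g, sawtooth(x, f)) for g, f in zip(basis_grids, frequencies)],
        axis=1,
    )
    # Element-wise product of the two factors, summed over all channels.
    return np.sum(coeff * basis, axis=1)
```

With a constant coefficient grid and a single level of frequency 4, the output repeats with period 1/4, which shows the basis being reused across locations; a spatially varying coefficient grid then modulates that repeated pattern locally.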
