“Deep Motion Transfer without Big Data” by Kwon, Yu, Jang, Cho, Lee, et al. …

Title:

- Deep Motion Transfer without Big Data

Entry Number: 58

Abstract:

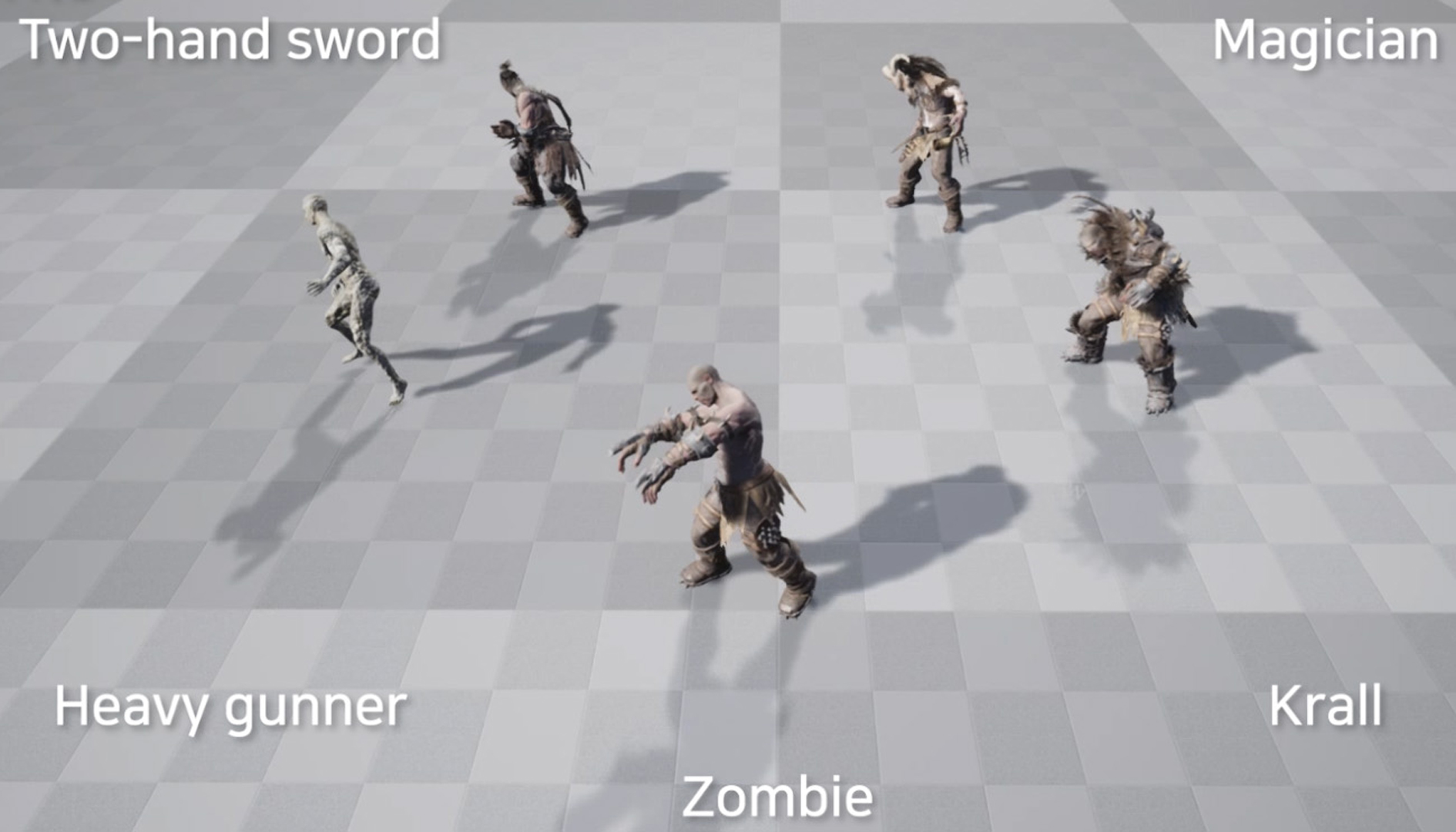

This paper presents a novel motion transfer algorithm that transfers a content motion onto a character with a specific movement style. The input consists of two motions: a content motion, such as walking or running, and a style motion, such as that of a zombie or Krall. The algorithm automatically generates synthesized motions such as a walking zombie, a walking Krall, a running zombie, or a running Krall. To obtain natural results, the method exploits the generative power of deep neural networks. Compared with previous neural approaches, the proposed algorithm produces better quality, runs extremely fast, does not require big data, and supports user-controllable style weights.
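The abstract does not spell out the objective being optimized. As a rough illustration of what a user-controllable style weight can mean in the image style transfer formulation of Gatys et al. (listed in the references), the sketch below combines a content term with a Gram-matrix style term over motion features. The function names, the channels-by-frames feature layout, and the mean-squared-error terms are all assumptions made for illustration; this is not the authors' method.

```python
# Illustrative sketch only: a Gatys-style weighted objective applied to
# motion features. Names, shapes, and loss terms are assumptions, not the
# authors' implementation.

def gram_matrix(features):
    """Channel-by-channel correlations of a channels x frames feature map."""
    n_frames = len(features[0])
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) / n_frames for row_j in features]
        for row_i in features
    ]


def mean_squared_diff(a, b):
    """Mean squared difference between two equally shaped 2D lists."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for x, y in zip(row_a, row_b):
            total += (x - y) ** 2
            count += 1
    return total / count


def transfer_loss(generated, content, style, style_weight=1.0):
    """Content term plus style_weight times a Gram-matrix style term."""
    # Content term: the generated features should stay close to the content motion.
    content_term = mean_squared_diff(generated, content)
    # Style term: compare time-independent channel statistics with the style motion.
    style_term = mean_squared_diff(gram_matrix(generated), gram_matrix(style))
    return content_term + style_weight * style_term


if __name__ == "__main__":
    content = [[1.0, 2.0], [0.0, 1.0]]       # 2 channels x 2 frames (hypothetical)
    style = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0]]  # 2 channels x 3 frames (hypothetical)
    for w in (0.0, 1.0, 2.0):
        print(w, transfer_loss(content, content, style, style_weight=w))
```

Because the Gram matrix sums over frames, the style motion may have a different length from the content motion in this sketch. Raising `style_weight` shifts the balance toward matching the style statistics, which is one plausible reading of the style control the abstract mentions.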

References:

- Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge. 2016. Image Style Transfer using Convolutional Neural Networks. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2414–2423.

- Kun He, Yan Wang, and John E. Hopcroft. 2016. A Powerful Generative Model using Random Weights for the Deep Image Representation. In Advances in Neural Information Processing Systems 29 (NIPS). 631–639.

- Daniel Holden, Jun Saito, and Taku Komura. 2016. A Deep Learning Framework for Character Motion Synthesis and Editing. ACM Trans. Graph. 35 (2016), 138:1–138:11.