“DCT-net: domain-calibrated translation for portrait stylization” by Men, Yao, Cui, Lian and Xie

Conference:

Type(s):

Title:

- DCT-net: domain-calibrated translation for portrait stylization

Presenter(s)/Author(s):

Abstract:

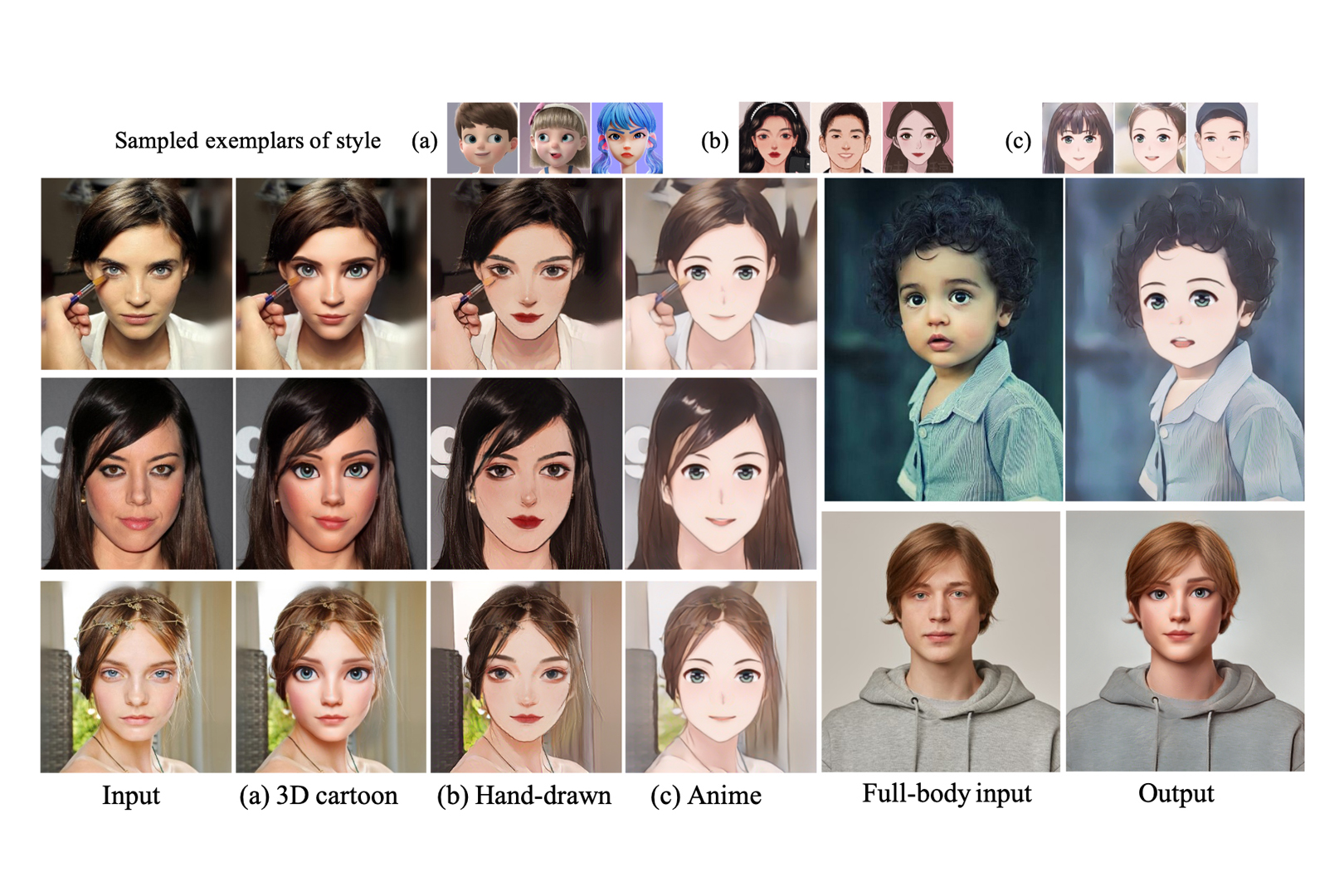

This paper introduces DCT-Net, a novel image translation architecture for few-shot portrait stylization. Given limited style exemplars (~100), the new architecture can produce high-quality style transfer results with advanced ability to synthesize high-fidelity contents and strong generality to handle complicated scenes (e.g., occlusions and accessories). Moreover, it enables full-body image translation via one elegant evaluation network trained by partial observations (i.e., stylized heads). Few-shot learning based style transfer is challenging since the learned model can easily become overfitted in the target domain, due to the biased distribution formed by only a few training examples. This paper aims to handle the challenge by adopting the key idea of “calibration first, translation later” and exploring the augmented global structure with locally-focused translation. Specifically, the proposed DCT-Net consists of three modules: a content adapter borrowing the powerful prior from source photos to calibrate the content distribution of target samples; a geometry expansion module using affine transformations to release spatially semantic constraints; and a texture translation module leveraging samples produced by the calibrated distribution to learn a fine-grained conversion. Experimental results demonstrate the proposed method’s superiority over the state of the art in head stylization and its effectiveness on full image translation with adaptive deformations. Our code is publicly available at https://github.com/menyifang/DCT-Net.

References:

1. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2019. Image2stylegan: How to embed images into the stylegan latent space?. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 4432–4441.Google ScholarCross Ref

2. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2020. Image2stylegan++: How to edit the embedded images?. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8296–8305.Google ScholarCross Ref

3. Animeface 2009. Anime face landmark detector. Animeface. https://github.com/nagadomi/animeface-2009/.Google Scholar

4. David Bau, Jun-Yan Zhu, Jonas Wulff, William Peebles, Hendrik Strobelt, Bolei Zhou, and Antonio Torralba. 2019a. Inverting layers of a large generator. In ICLR Workshop, Vol. 2. 4.Google Scholar

5. David Bau, Jun-Yan Zhu, Jonas Wulff, William Peebles, Hendrik Strobelt, Bolei Zhou, and Antonio Torralba. 2019b. Seeing what a gan cannot generate. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 4502–4511.Google ScholarCross Ref

6. Kaidi Cao, Jing Liao, and Lu Yuan. 2018. Carigans: Unpaired photo-to-caricature translation. arXiv preprint arXiv:1811.00222 (2018).Google Scholar

7. Jie Chen, Gang Liu, and Xin Chen. 2019. AnimeGAN: A Novel Lightweight GAN for Photo Animation. In International Symposium on Intelligence Computation and Applications. Springer, 242–256.Google Scholar

8. Yang Chen, Yu-Kun Lai, and Yong-Jin Liu. 2018. Cartoongan: Generative adversarial networks for photo cartoonization. In Proceedings of the IEEE conference on computer vision and pattern recognition. 9465–9474.Google ScholarCross Ref

9. Yunjey Choi, Youngjung Uh, Jaejun Yoo, and Jung-Woo Ha. 2020. Stargan v2: Diverse image synthesis for multiple domains. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8188–8197.Google ScholarCross Ref

10. Antonia Creswell and Anil Anthony Bharath. 2018. Inverting the generator of a generative adversarial network. IEEE transactions on neural networks and learning systems 30, 7 (2018), 1967–1974.Google Scholar

11. Jiankang Deng, Jia Guo, Niannan Xue, and Stefanos Zafeiriou. 2019. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4690–4699.Google ScholarCross Ref

12. Leon Gatys, Alexander S Ecker, and Matthias Bethge. 2015. Texture synthesis using convolutional neural networks. In Advances in neural information processing systems.Google Scholar

13. Leon A Gatys, Alexander S Ecker, and Matthias Bethge. 2016. Image style transfer using convolutional neural networks. In IEEE Conference on Computer Vision and Pattern Recognition.Google ScholarCross Ref

14. Julia Gong, Yannick Hold-Geoffroy, and Jingwan Lu. 2020. AutoToon: Automatic Geometric Warping for Face Cartoon Generation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 360–369.Google ScholarCross Ref

15. Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. Advances in neural information processing systems 27 (2014).Google Scholar

16. Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. Gans trained by a two time-scale update rule converge to a local nash equilibrium. Advances in neural information processing systems 30 (2017).Google Scholar

17. Xun Huang, Ming-Yu Liu, Serge Belongie, and Jan Kautz. 2018. Multimodal unsupervised image-to-image translation. In Proceedings of the European conference on computer vision (ECCV). 172–189.Google ScholarDigital Library

18. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 1125–1134.Google ScholarCross Ref

19. Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4401–4410.Google ScholarCross Ref

20. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8110–8119.Google ScholarCross Ref

21. Parneet Kaur, Hang Zhang, and Kristin Dana. 2019. Photo-realistic facial texture transfer. In 2019 IEEE Winter Conference on Applications of Computer Vision (WACV). IEEE, 2097–2105.Google ScholarCross Ref

22. Junho Kim, Minjae Kim, Hyeonwoo Kang, and Kwang Hee Lee. 2020. U-GAT-IT: Unsupervised Generative Attentional Networks with Adaptive Layer-Instance Normalization for Image-to-Image Translation. In International Conference on Learning Representations. https://openreview.net/forum?id=BJlZ5ySKPHGoogle Scholar

23. Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).Google Scholar

24. Jan Eric Kyprianidis, John Collomosse, Tinghuai Wang, and Tobias Isenberg. 2012. State of the” art”: A taxonomy of artistic stylization techniques for images and video. IEEE transactions on visualization and computer graphics 19, 5 (2012), 866–885.Google Scholar

25. Cheng-Han Lee, Ziwei Liu, Lingyun Wu, and Ping Luo. 2020. MaskGAN: Towards Diverse and Interactive Facial Image Manipulation. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

26. Ming-Yu Liu, Thomas Breuel, and Jan Kautz. 2017. Unsupervised image-to-image translation networks. In Advances in neural information processing systems. 700–708.Google Scholar

27. Fangchang Ma, Ulas Ayaz, and Sertac Karaman. 2019. Invertibility of convolutional generative networks from partial measurements. (2019).Google Scholar

28. Mehdi Mirza and Simon Osindero. 2014. Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784 (2014).Google Scholar

29. Utkarsh Ojha, Yijun Li, Jingwan Lu, Alexei A Efros, Yong Jae Lee, Eli Shechtman, and Richard Zhang. 2021. Few-shot Image Generation via Cross-domain Correspondence. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10743–10752.Google ScholarCross Ref

30. Guim Perarnau, Joost Van De Weijer, Bogdan Raducanu, and Jose M ?lvarez. 2016. Invertible conditional gans for image editing. arXiv preprint arXiv:1611.06355 (2016).Google Scholar

31. Justin NM Pinkney and Doron Adler. 2020. Resolution Dependent GAN Interpolation for Controllable Image Synthesis Between Domains. arXiv preprint arXiv:2010.05334 (2020).Google Scholar

32. Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2021. Encoding in style: a stylegan encoder for image-to-image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2287–2296.Google ScholarCross Ref

33. Daniel Roich, Ron Mokady, Amit H Bermano, and Daniel Cohen-Or. 2021. Pivotal Tuning for Latent-based Editing of Real Images. arXiv preprint arXiv:2106.05744 (2021).Google Scholar

34. Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention. Springer, 234–241.Google ScholarCross Ref

35. Ahmed Selim, Mohamed Elgharib, and Linda Doyle. 2016. Painting style transfer for head portraits using convolutional neural networks. ACM Transactions on Graphics (ToG) 35, 4 (2016), 1–18.Google ScholarDigital Library

36. Yichun Shi, Debayan Deb, and Anil K Jain. 2019. Warpgan: Automatic caricature generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10762–10771.Google ScholarCross Ref

37. Karen Simonyan and Andrew Zisserman. 2014. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).Google Scholar

38. Guoxian Song, Linjie Luo, Jing Liu, Wan-Chun Ma, Chunpong Lai, Chuanxia Zheng, and Tat-Jen Cham. 2021. AgileGAN: stylizing portraits by inversion-consistent transfer learning. ACM Transactions on Graphics (TOG) 40, 4 (2021), 1–13.Google ScholarDigital Library

39. Omer Tov, Yuval Alaluf, Yotam Nitzan, Or Patashnik, and Daniel Cohen-Or. 2021. Designing an encoder for stylegan image manipulation. ACM Transactions on Graphics (TOG) 40, 4 (2021), 1–14.Google ScholarDigital Library

40. Yuri Viazovetskyi, Vladimir Ivashkin, and Evgeny Kashin. 2020. Stylegan2 distillation for feed-forward image manipulation. In European Conference on Computer Vision. Springer, 170–186.Google ScholarDigital Library

41. Hao Wang, Yitong Wang, Zheng Zhou, Xing Ji, Dihong Gong, Jingchao Zhou, Zhifeng Li, and Wei Liu. 2018b. Cosface: Large margin cosine loss for deep face recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 5265–5274.Google ScholarCross Ref

42. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018a. High-resolution image synthesis and semantic manipulation with conditional gans. In Proceedings of the IEEE conference on computer vision and pattern recognition. 8798–8807.Google ScholarCross Ref

43. Xinrui Wang and Jinze Yu. 2020. Learning to Cartoonize Using White-Box Cartoon Representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8090–8099.Google ScholarCross Ref

44. Weihao Xia, Yulun Zhang, Yujiu Yang, Jing-Hao Xue, Bolei Zhou, and Ming-Hsuan Yang. 2021. Gan inversion: A survey. arXiv preprint arXiv:2101.05278 (2021).Google Scholar

45. Kaipeng Zhang, Zhanpeng Zhang, Zhifeng Li, and Yu Qiao. 2016. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Processing Letters 23, 10 (2016), 1499–1503.Google ScholarCross Ref

46. Jiapeng Zhu, Yujun Shen, Deli Zhao, and Bolei Zhou. 2020. In-domain gan inversion for real image editing. In European conference on computer vision. Springer, 592–608.Google ScholarDigital Library

47. Jun-Yan Zhu, Philipp Kr?henb?hl, Eli Shechtman, and Alexei A Efros. 2016. Generative visual manipulation on the natural image manifold. In European conference on computer vision. Springer, 597–613.Google ScholarCross Ref

48. Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. 2017. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision. 2223–2232.Google ScholarCross Ref