“Correspondence Neural Network for Line Art Colorization” by , Do, Nguyen, Pham, Nguyen, et al. …

Entry Number: 44

Title:

- Correspondence Neural Network for Line Art Colorization

Abstract:

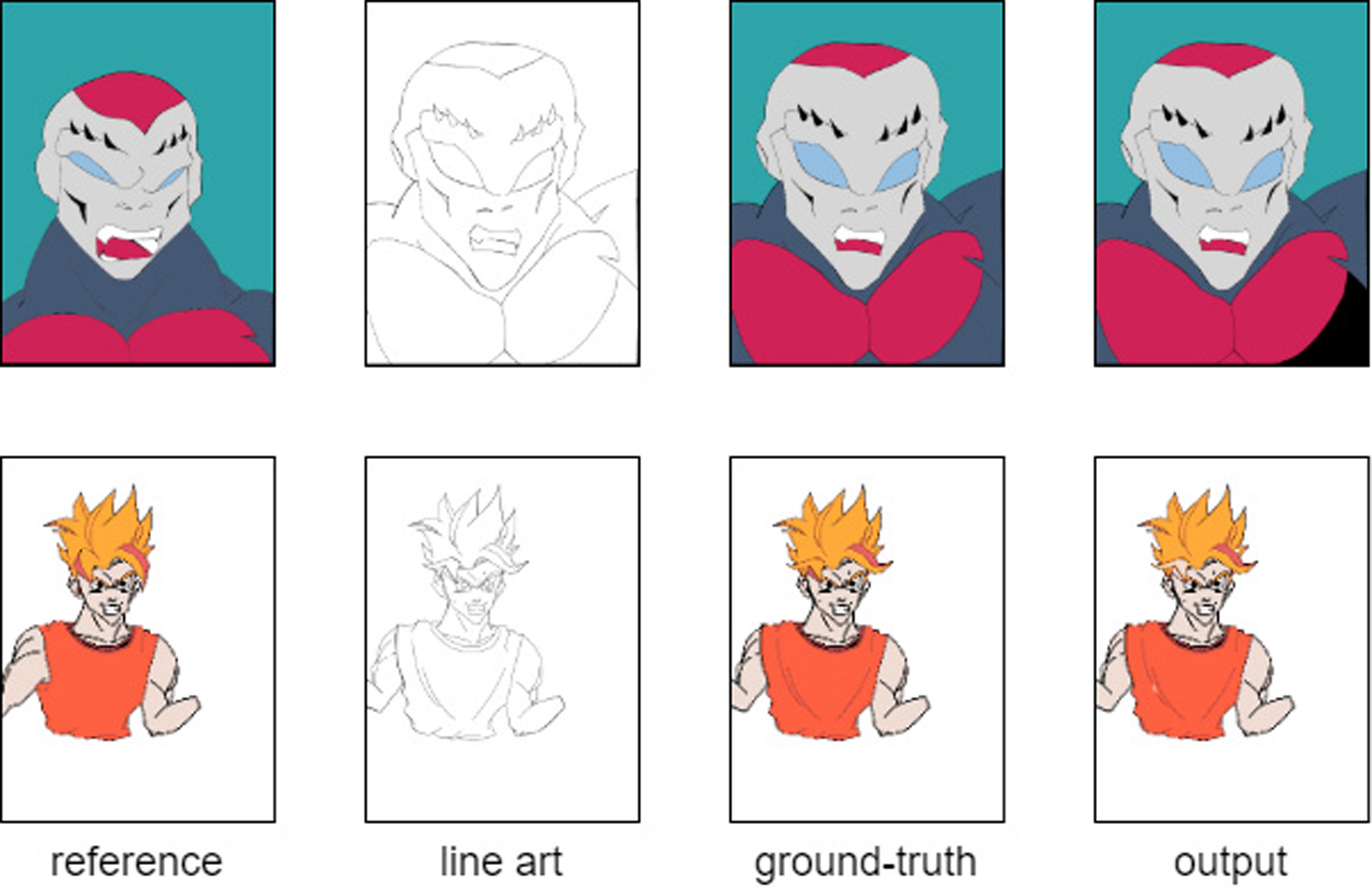

Line art colorization is a critical process in animation production, but it is usually tedious and time-consuming. Hint-based colorization tools [LvMin Zhang and Liu 2018] exist, but they cannot transfer colors to a new sketch without user intervention. GAN-based style transfer methods [Goodfellow et al. 2014] focus on style rather than on correct colors, and they suffer from color bleeding. GAN models are also data hungry: they need many training examples to generalize, and such examples are not readily available in the animation industry. Finally, color propagation methods [Meyer et al. 2018] mainly target grayscale photos, which, unlike line art, contain texture information.

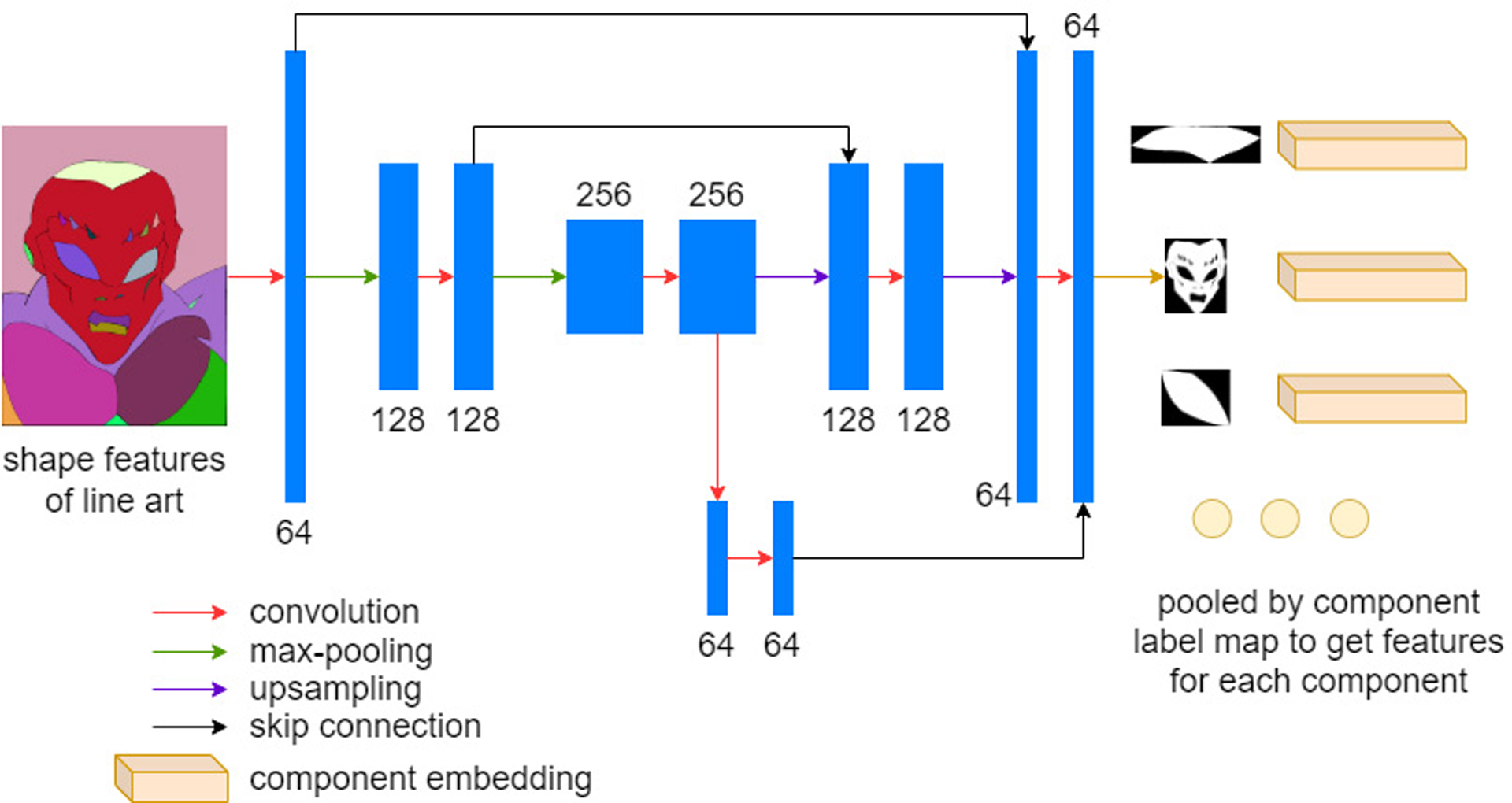

In this work, we propose a deep architecture that colorizes line art based on a reference image by finding corresponding (connected) components. The method is inspired by the Universal Correspondence Network [Choy et al. 2016]. We apply U-Net [Ronneberger et al. 2015] to extract features at the component level rather than the pixel level. After decomposing a line art image into a set of components, we use the network to extract an embedding vector for each component. These embeddings determine the component correspondences between the reference and a target line art, so that reference colors can be propagated onto the corresponding regions.
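The pipeline described above (decompose into components, embed each component, match, propagate colors) can be illustrated with a minimal pure-Python sketch. It rests on several assumptions that the abstract does not confirm. Components are taken to be 4-connected regions of blank pixels bounded by strokes. Per-component embeddings are computed by mean-pooling a per-pixel feature map over each component mask. Correspondences are found by nearest-neighbor search under Euclidean distance. The trained U-Net is replaced by a hypothetical `toy_feature_map` that simply returns normalized pixel coordinates. All function names are illustrative, and the sketch only shows the data flow, not the learned method.

```python
import math
from collections import deque

def label_components(line_art):
    """Label 4-connected regions of blank pixels (0); stroke pixels (1) get -1."""
    h, w = len(line_art), len(line_art[0])
    labels = [[-1] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if line_art[y][x] != 0 or labels[y][x] != -1:
                continue
            labels[y][x] = count  # seed a new component and flood-fill it
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and line_art[ny][nx] == 0 and labels[ny][nx] == -1):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
            count += 1
    return labels, count

def component_embeddings(labels, count, feature_map):
    """Assumed aggregation: mean-pool per-pixel features over each component mask."""
    dim = len(feature_map[0][0])
    sums = [[0.0] * dim for _ in range(count)]
    sizes = [0] * count
    for y, row in enumerate(labels):
        for x, k in enumerate(row):
            if k >= 0:
                sizes[k] += 1
                for d in range(dim):
                    sums[k][d] += feature_map[y][x][d]
    return [[s / sizes[k] for s in sums[k]] for k in range(count)]

def transfer_colors(ref_emb, ref_colors, tgt_emb):
    """Give each target component the color of its nearest reference embedding."""
    return [ref_colors[min(range(len(ref_emb)), key=lambda j: math.dist(e, ref_emb[j]))]
            for e in tgt_emb]

def toy_feature_map(h, w):
    """Hypothetical stand-in for U-Net output: normalized (row, col) per pixel."""
    return [[(y / max(h - 1, 1), x / max(w - 1, 1)) for x in range(w)] for y in range(h)]

def colorize(ref_art, ref_colors, tgt_art):
    """ref_colors[k] is the color of reference component k (row-major label order)."""
    ref_labels, n_ref = label_components(ref_art)
    tgt_labels, n_tgt = label_components(tgt_art)
    ref_emb = component_embeddings(
        ref_labels, n_ref, toy_feature_map(len(ref_art), len(ref_art[0])))
    tgt_emb = component_embeddings(
        tgt_labels, n_tgt, toy_feature_map(len(tgt_art), len(tgt_art[0])))
    colors = transfer_colors(ref_emb, ref_colors, tgt_emb)
    # Stroke pixels stay black; every other pixel takes its component's color.
    return [["black" if k < 0 else colors[k] for k in row] for row in tgt_labels]

ref = [[0, 0, 1, 0, 0]] * 5  # one vertical stroke at column 2
tgt = [[0, 1, 0, 0, 0]] * 5  # the stroke has moved to column 1
print(colorize(ref, ["red", "blue"], tgt)[0])  # ['red', 'black', 'blue', 'blue', 'blue']
```

Even though the target regions differ in size from the reference regions, each still lands nearest its counterpart in embedding space, so the colors follow the components. In the actual method this robustness would come from the learned embeddings rather than from pixel coordinates.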

Acknowledgements:

This research was supported by Cinnamon AI. We thank members of Cinnamon AI who provided insight and expertise that greatly assisted the research.