“Composite Motion Learning with Task Control” by Xu, Shang, Zordan and Karamouzas

Title:

- Composite Motion Learning with Task Control

Session/Category Title: Character Animation

Abstract:

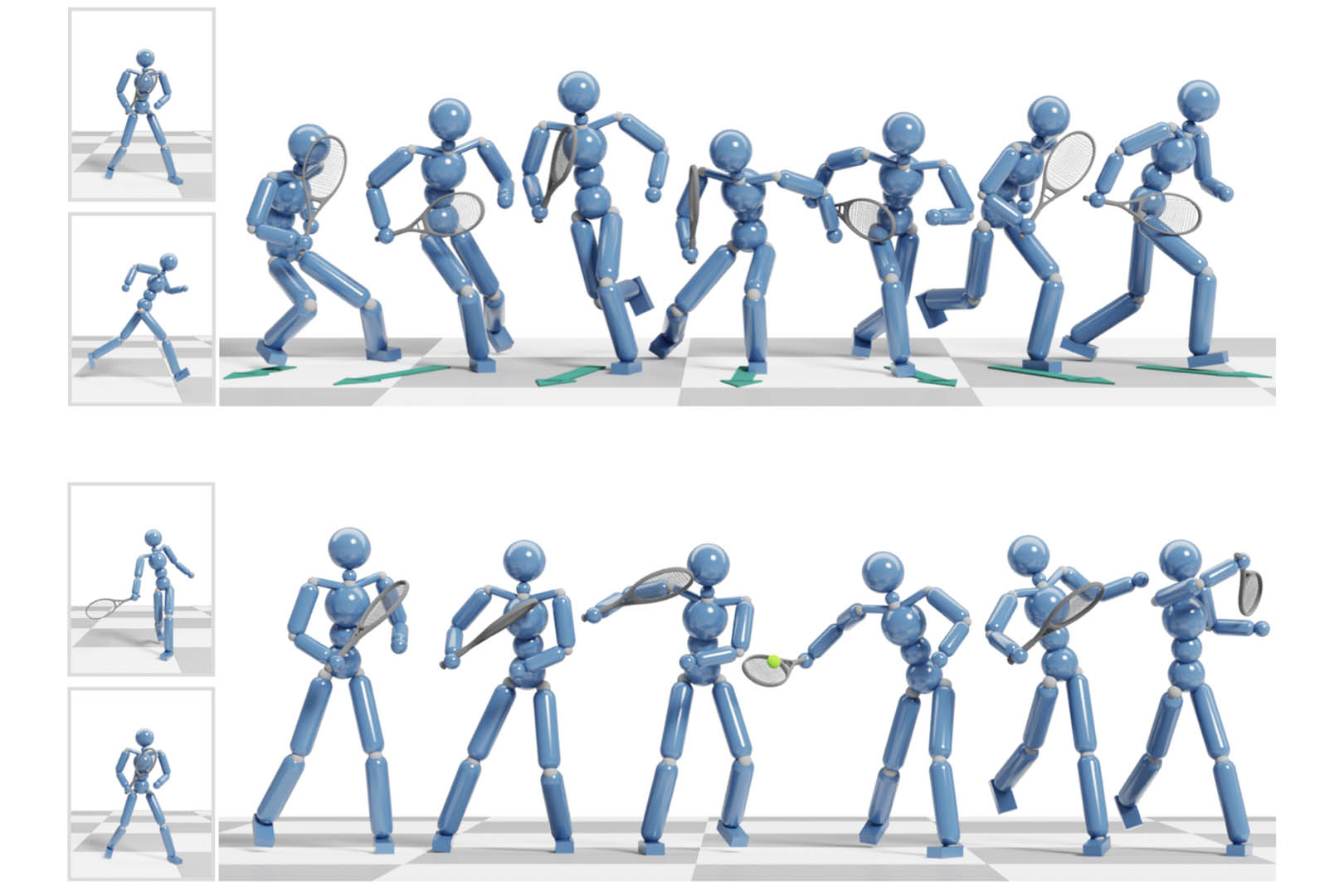

We present a deep learning method for composite and task-driven motion control of physically simulated characters. In contrast to existing data-driven approaches that use reinforcement learning to imitate full-body motions, we learn decoupled motions for specific body parts from multiple reference motions simultaneously and directly, using multiple discriminators in a GAN-like setup. This process requires no manual work to produce composite reference motions for learning. Instead, the control policy explores on its own how the composite motions can be combined. We further account for multiple task-specific rewards and train a single, multi-objective control policy. To this end, we propose a novel framework for multi-objective learning that adaptively balances the learning of disparate motions from multiple sources with multiple goal-directed control objectives. In addition, because composite motions are typically augmentations of simpler behaviors, we introduce a sample-efficient method for training composite control policies incrementally: we reuse a pre-trained policy as the meta policy and train a cooperative policy that adapts the meta policy to new composite tasks. We demonstrate the applicability of our approach on a variety of challenging multi-objective tasks involving both composite motion imitation and multiple goal-directed control. Code is available at https://motion-lab.github.io/CompositeMotion.
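The abstract does not spell out how the imitation and task objectives are balanced. As a rough illustration only, the sketch below shows one common way to keep objectives of very different scales from dominating a single policy update: standardize each objective's advantage estimates within the batch, then blend them with priority weights. The function names, objective names, weights, and numbers are all hypothetical and are not taken from the paper or its released code.

```python
from statistics import mean, pstdev


def standardize(values, eps=1e-8):
    """Shift a batch of values to zero mean and scale it to unit spread."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / (sigma + eps) for v in values]


def combine_advantages(advantages_per_objective, weights):
    """Blend per-objective advantages into one value per sample.

    advantages_per_objective: dict of objective name -> list of advantages,
        all lists having the same length (one entry per sample).
    weights: dict of objective name -> non-negative priority.
    """
    total_weight = sum(weights.values())
    num_samples = len(next(iter(advantages_per_objective.values())))
    combined = [0.0] * num_samples
    for name, advantages in advantages_per_objective.items():
        # Standardizing per objective removes the effect of reward scale,
        # so only the priority weight decides each objective's influence.
        scaled = standardize(advantages)
        share = weights[name] / total_weight
        for i, value in enumerate(scaled):
            combined[i] += share * value
    return combined


# Hypothetical batch: two body-part imitation objectives (as would come from
# two discriminators) and one goal-directed task objective, on very
# different numeric scales.
advantages = {
    "upper_body_imitation": [0.01, 0.03, 0.02, 0.04],
    "lower_body_imitation": [10.0, 30.0, 20.0, 40.0],
    "task_goal": [-500.0, 500.0, 0.0, 1000.0],
}
weights = {
    "upper_body_imitation": 1.0,
    "lower_body_imitation": 1.0,
    "task_goal": 2.0,
}
combined = combine_advantages(advantages, weights)
```

In this sketch the two imitation objectives contribute identically after standardization even though their raw values differ by a factor of 1000, which is the point of rescaling before weighting. A real training loop would compute the per-objective advantages from separate value estimates and feed `combined` into the policy-gradient loss.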
