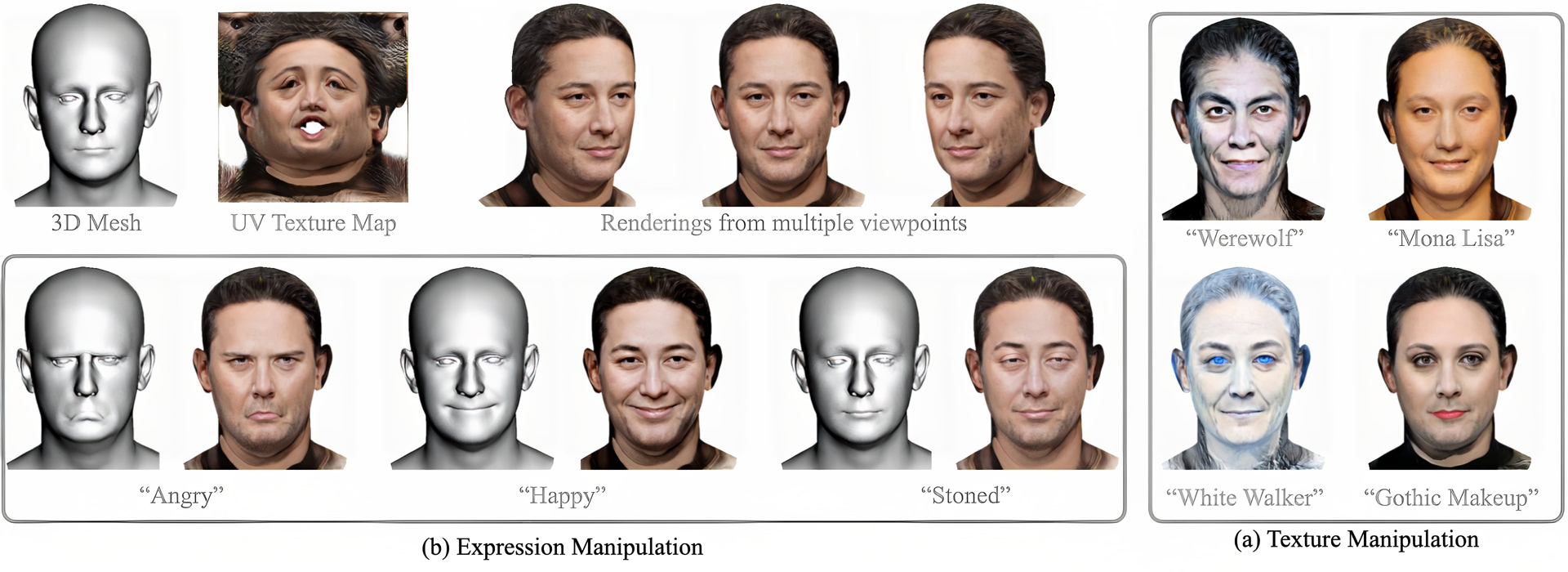

“ClipFace: Text-guided Editing of Textured 3D Morphable Models” by Thies, Dai and Niessner

Title:

- ClipFace: Text-guided Editing of Textured 3D Morphable Models

Session/Category Title:

- Making Faces With Neural Avatars

Abstract:

We propose ClipFace, a novel self-supervised approach for text-guided editing of textured 3D morphable models of faces. Specifically, we employ user-friendly language prompts to enable control of the expressions as well as appearance of 3D faces. We leverage the geometric expressiveness of 3D morphable models, which inherently possess limited controllability and texture expressivity, and develop a self-supervised generative model to jointly synthesize expressive, textured, and articulated faces in 3D. We enable high-quality texture generation for 3D faces by adversarial self-supervised training, guided by differentiable rendering against collections of real RGB images. Controllable editing and manipulation are given by language prompts to adapt texture and expression of the 3D morphable model. To this end, we propose a neural network that predicts both texture and expression latent codes of the morphable model. Our model is trained in a self-supervised fashion by exploiting differentiable rendering and losses based on a pre-trained CLIP model. Once trained, our model jointly predicts face textures in UV-space, along with expression parameters to capture both geometry and texture changes in facial expressions in a single forward pass. We further show the applicability of our method to generate temporally changing textures for a given animation sequence.

References:

1. Rameen Abdal, Peihao Zhu, John Femiani, Niloy Mitra, and Peter Wonka. 2022. CLIP2StyleGAN: Unsupervised Extraction of StyleGAN Edit Directions. In ACM SIGGRAPH 2022 Conference Proceedings (Vancouver, BC, Canada) (SIGGRAPH '22). Association for Computing Machinery, New York, NY, USA, Article 48, 9 pages. https://doi.org/10.1145/3528233.3530747

2. Rameen Abdal, Peihao Zhu, Niloy J. Mitra, and Peter Wonka. 2021. StyleFlow: Attribute-Conditioned Exploration of StyleGAN-Generated Images Using Conditional Continuous Normalizing Flows. ACM Trans. Graph. (May 2021). https://doi.org/10.1145/3447648

3. Omri Avrahami, Dani Lischinski, and Ohad Fried. 2022. Blended diffusion for text-driven editing of natural images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18208–18218.

4. David Bau, Alex Andonian, Audrey Cui, YeonHwan Park, Ali Jahanian, Aude Oliva, and Antonio Torralba. 2021. Paint by Word. arXiv:2103.10951

5. Volker Blanz and Thomas Vetter. 1999. A Morphable Model for the Synthesis of 3D Faces. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH '99). ACM Press/Addison-Wesley Publishing Co., USA, 187–194. https://doi.org/10.1145/311535.311556

6. Zehranaz Canfes, M. Furkan Atasoy, Alara Dirik, and Pinar Yanardag. 2022. Text and Image Guided 3D Avatar Generation and Manipulation. https://doi.org/10.48550/ARXIV.2202.06079

7. Eric R. Chan, Connor Z. Lin, Matthew A. Chan, Koki Nagano, Boxiao Pan, Shalini De Mello, Orazio Gallo, Leonidas Guibas, Jonathan Tremblay, Sameh Khamis, Tero Karras, and Gordon Wetzstein. 2021. Efficient Geometry-aware 3D Generative Adversarial Networks. In arXiv.

8. Katherine Crowson. 2021. VQGAN-CLIP. https://github.com/nerdyrodent/VQGAN-CLIP

9. Boris Dayma, Suraj Patil, Pedro Cuenca, Khalid Saifullah, Tanishq Abraham, Phuc Le Khac, Luke Melas, and Ritobrata Ghosh. 2021. DALL·E Mini. https://doi.org/10.5281/zenodo.5146400

10. Yu Deng, Jiaolong Yang, Dong Chen, Fang Wen, and Xin Tong. 2020. Disentangled and Controllable Face Image Generation via 3D Imitative-Contrastive Learning. In IEEE Computer Vision and Pattern Recognition.

11. Haven Feng. 2019. Photometric FLAME Fitting. https://github.com/HavenFeng/photometric_optimization.

12. Yao Feng, Haiwen Feng, Michael J. Black, and Timo Bolkart. 2021. Learning an Animatable Detailed 3D Face Model from In-the-Wild Images. ACM Transactions on Graphics (ToG), Proc. SIGGRAPH 40, 4 (Aug. 2021), 88:1–88:13.

13. Rinon Gal, Or Patashnik, Haggai Maron, Gal Chechik, and Daniel Cohen-Or. 2021. StyleGAN-NADA: CLIP-Guided Domain Adaptation of Image Generators. arXiv:2108.00946 [cs.CV]

14. Baris Gecer, Jiankang Deng, and Stefanos Zafeiriou. 2021a. OSTeC: One-Shot Texture Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 7628–7638.

15. Baris Gecer, Alexander Lattas, Stylianos Ploumpis, Jiankang Deng, Athanasios Papaioannou, Stylianos Moschoglou, and Stefanos Zafeiriou. 2020. Synthesizing Coupled 3D Face Modalities by Trunk-Branch Generative Adversarial Networks. In Proceedings of the European conference on computer vision (ECCV). Springer.

16. Baris Gecer, Stylianos Ploumpis, Irene Kotsia, and Stefanos Zafeiriou. 2019. GANFIT: Generative Adversarial Network Fitting for High Fidelity 3D Face Reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

17. Baris Gecer, Stylianos Ploumpis, Irene Kotsia, and Stefanos P. Zafeiriou. 2021b. Fast-GANFIT: Generative Adversarial Network for High Fidelity 3D Face Reconstruction. IEEE Transactions on Pattern Analysis and Machine Intelligence (2021).

18. Thomas Gerig, Andreas Morel-Forster, Clemens Blumer, Bernhard Egger, Marcel Lüthi, Sandro Schönborn, and Thomas Vetter. 2017. Morphable Face Models – An Open Framework. https://doi.org/10.48550/ARXIV.1709.08398

19. Partha Ghosh, Pravir Singh Gupta, Roy Uziel, Anurag Ranjan, Michael J. Black, and Timo Bolkart. 2020. GIF: Generative Interpretable Faces. In International Conference on 3D Vision (3DV). 868–878. http://gif.is.tue.mpg.de/

20. Fangzhou Hong, Mingyuan Zhang, Liang Pan, Zhongang Cai, Lei Yang, and Ziwei Liu. 2022. AvatarCLIP: Zero-Shot Text-Driven Generation and Animation of 3D Avatars. ACM Transactions on Graphics (TOG) 41, 4 (2022), 1–19.

21. Nikolay Jetchev. 2021. ClipMatrix: Text-controlled Creation of 3D Textured Meshes. https://doi.org/10.48550/ARXIV.2109.12922

22. Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2018. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In International Conference on Learning Representations. https://openreview.net/forum?id=Hk99zCeAb

23. Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, and Timo Aila. 2020a. Training Generative Adversarial Networks with Limited Data. In Proc. NeurIPS.

24. Tero Karras, Samuli Laine, and Timo Aila. 2019. A Style-Based Generator Architecture for Generative Adversarial Networks. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 4396?4405. https://doi.org/10.1109/CVPR.2019.00453

25. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020b. Analyzing and Improving the Image Quality of StyleGAN. In Proc. CVPR.

26. Nasir Mohammad Khalid, Tianhao Xie, Eugene Belilovsky, and Popa Tiberiu. 2022. CLIP-Mesh: Generating textured meshes from text using pretrained image-text models. SIGGRAPH Asia 2022 Conference Papers (December 2022).

27. Umut Kocasari, Alara Dirik, Mert Tiftikci, and Pinar Yanardag. 2022. StyleMC: Multi-Channel Based Fast Text-Guided Image Generation and Manipulation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). 895–904.

28. Marek Kowalski, Stephan J. Garbin, Virginia Estellers, Tadas Baltrušaitis, Matthew Johnson, and Jamie Shotton. 2020. CONFIG: Controllable Neural Face Image Generation. In European Conference on Computer Vision (ECCV).

29. Samuli Laine, Janne Hellsten, Tero Karras, Yeongho Seol, Jaakko Lehtinen, and Timo Aila. 2020. Modular Primitives for High-Performance Differentiable Rendering. ACM Transactions on Graphics 39, 6 (2020).

30. Alexandros Lattas, Stylianos Moschoglou, Baris Gecer, Stylianos Ploumpis, Vasileios Triantafyllou, Abhijeet Ghosh, and Stefanos Zafeiriou. 2020. AvatarMe: Realistically Renderable 3D Facial Reconstruction “In-the-Wild”. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

31. Alexandros Lattas, Stylianos Moschoglou, Stylianos Ploumpis, Baris Gecer, Abhijeet Ghosh, and Stefanos P. Zafeiriou. 2021. AvatarMe++: Facial Shape and BRDF Inference with Photorealistic Rendering-Aware GANs. IEEE Transactions on Pattern Analysis and Machine Intelligence (2021).

32. Myunggi Lee, Wonwoong Cho, Moonheum Kim, David I. Inouye, and Nojun Kwak. 2020. StyleUV: Diverse and High-fidelity UV Map Generative Model. ArXiv abs/2011.12893 (2020).

33. Ruilong Li, Karl Bladin, Yajie Zhao, Chinmay Chinara, Owen Ingraham, Pengda Xiang, Xinglei Ren, Pratusha Prasad, Bipin Kishore, Jun Xing, and Hao Li. 2020. Learning Formation of Physically-Based Face Attributes. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

34. Tianye Li, Timo Bolkart, Michael J. Black, Hao Li, and Javier Romero. 2017. Learning a model of facial shape and expression from 4D scans. ACM Transactions on Graphics (Proc. SIGGRAPH Asia) 36, 6 (2017), 194:1–194:17.

35. Yuchen Liu, Zhixin Shu, Yijun Li, Zhe Lin, Richard Zhang, and S. Y. Kung. 2022. 3D-FM GAN: Towards 3D-Controllable Face Manipulation. ArXiv abs/2208.11257 (2022).

36. Matthew Loper, Naureen Mahmood, Javier Romero, Gerard Pons-Moll, and Michael J. Black. 2015. SMPL: A Skinned Multi-Person Linear Model. ACM Trans. Graphics (Proc. SIGGRAPH Asia) 34, 6 (Oct. 2015), 248:1–248:16.

37. Huiwen Luo, Koki Nagano, Han-Wei Kung, Qingguo Xu, Zejian Wang, Lingyu Wei, Liwen Hu, and Hao Li. 2021. Normalized Avatar Synthesis Using StyleGAN and Perceptual Refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 11662–11672.

38. Richard T. Marriott, Sami Romdhani, and Liming Chen. 2021. A 3D GAN for Improved Large-pose Facial Recognition. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021), 13440–13450.

39. Oscar Michel, Roi Bar-On, Richard Liu, Sagie Benaim, and Rana Hanocka. 2022. Text2Mesh: Text-Driven Neural Stylization for Meshes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 13492–13502.

40. Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. 2021. StyleCLIP: Text-Driven Manipulation of StyleGAN Imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 2085–2094.

41. Mathis Petrovich, Michael J. Black, and Gül Varol. 2022. TEMOS: Generating diverse human motions from textual descriptions. In European Conference on Computer Vision (ECCV).

42. Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. 2021. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning (Proceedings of Machine Learning Research, Vol. 139), Marina Meila and Tong Zhang (Eds.). PMLR, 8748–8763. https://proceedings.mlr.press/v139/radford21a.html

43. Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical Text-Conditional Image Generation with CLIP Latents. https://doi.org/10.48550/ARXIV.2204.06125

44. Aditya Ramesh, Mikhail Pavlov, Gabriel Goh, Scott Gray, Chelsea Voss, Alec Radford, Mark Chen, and Ilya Sutskever. 2021. Zero-Shot Text-to-Image Generation. https://doi.org/10.48550/ARXIV.2102.12092

45. Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. 2022. DreamBooth: Fine Tuning Text-to-image Diffusion Models for Subject-Driven Generation. (2022).

46. Ron Slossberg, Ibrahim Jubran, and Ron Kimmel. 2022. Unsupervised High-Fidelity Facial Texture Generation and Reconstruction. In Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XIII (Tel Aviv, Israel). Springer-Verlag, Berlin, Heidelberg, 212–229. https://doi.org/10.1007/978-3-031-19778-9_13

47. Ayush Tewari, Mohamed Elgharib, Gaurav Bharaj, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhöfer, and Christian Theobalt. 2020a. StyleRig: Rigging StyleGAN for 3D Control over Portrait Images, CVPR 2020. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE.

48. Ayush Tewari, Mohamed Elgharib, Mallikarjun B R, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhöfer, and Christian Theobalt. 2020b. PIE: Portrait Image Embedding for Semantic Control. ACM Trans. Graph. (2020).

49. Can Wang, Menglei Chai, Mingming He, Dongdong Chen, and Jing Liao. 2022a. CLIP-NeRF: Text-and-Image Driven Manipulation of Neural Radiance Fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 3835–3844.

50. Lizhen Wang, Zhiyuan Chen, Tao Yu, Chenguang Ma, Liang Li, and Yebin Liu. 2022b. FaceVerse: A Fine-Grained and Detail-Controllable 3D Face Morphable Model From a Hybrid Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 20333–20342.

51. Tianyi Wei, Dongdong Chen, Wenbo Zhou, Jing Liao, Zhentao Tan, Lu Yuan, Weiming Zhang, and Nenghai Yu. 2022. HairCLIP: Design Your Hair by Text and Reference Image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 18072–18081.

52. Kim Youwang, Kim Ji-Yeon, and Tae-Hyun Oh. 2022. CLIP-Actor: Text-Driven Recommendation and Stylization for Animating Human Meshes. In ECCV.

53. zllrunning. 2018. face-parsing.PyTorch. https://github.com/zllrunning/face-parsing.PyTorch.