“CLIP-PAE: Projection-Augmentation Embedding to Extract Relevant Features for a Disentangled, Interpretable and Controllable Text-Guided Face Manipulation” by Zhou, Zhong and Öztireli

Conference:

Type(s):

Title:

- CLIP-PAE: Projection-Augmentation Embedding to Extract Relevant Features for a Disentangled, Interpretable and Controllable Text-Guided Face Manipulation

Session/Category Title:

- Text-Guided Generation

Presenter(s)/Author(s):

Moderator(s):

Abstract:

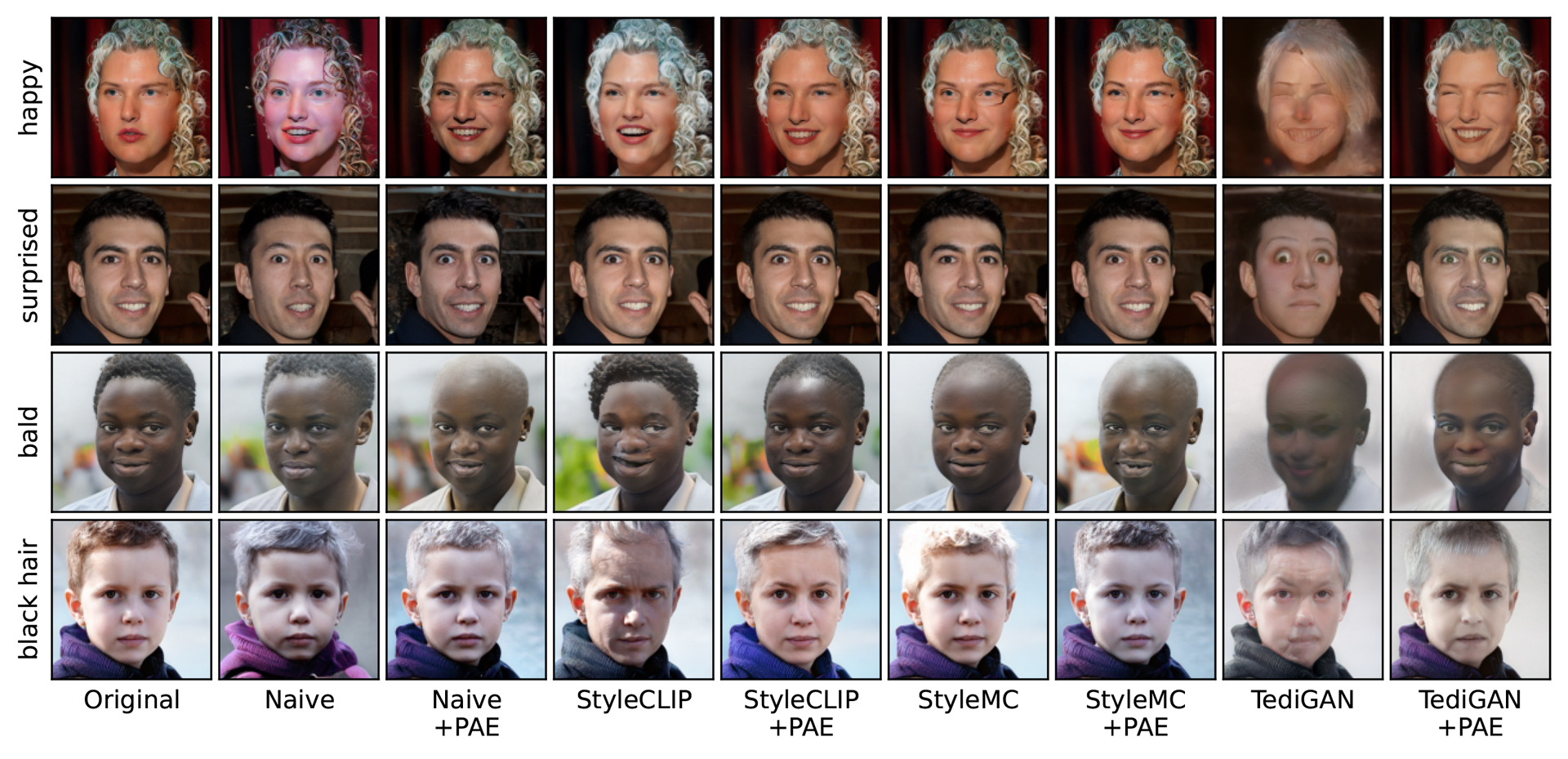

Recently introduced Contrastive Language-Image Pre-Training (CLIP) [Radford et al. 2021] bridges images and text by embedding them into a joint latent space. This opens the door to ample literature that aims to manipulate an input image by providing a textual explanation. However, due to the discrepancy between image and text embeddings in the joint space, using text embeddings as the optimization target often introduces undesired artifacts in the resulting images. Disentanglement, interpretability, and controllability are also hard to guarantee for manipulation. To alleviate these problems, we propose to define corpus subspaces spanned by relevant prompts to capture specific image characteristics. We introduce CLIP projection-augmentation embedding (PAE) as an optimization target to improve the performance of text-guided image manipulation. Our method is a simple and general paradigm that can be easily computed and adapted, and smoothly incorporated into any CLIP-based image manipulation algorithm. To demonstrate the effectiveness of our method, we conduct several theoretical and empirical studies. As a case study, we utilize the method for text-guided semantic face editing. We quantitatively and qualitatively demonstrate that PAE facilitates a more disentangled, interpretable, and controllable face image manipulation with state-of-the-art quality and accuracy.

References:

1. László Antal and Zalán Bodó. 2021. Feature axes orthogonalization in semantic face editing. In 2021 IEEE 17th International Conference on Intelligent Computer Communication and Processing (ICCP). IEEE, 163–169.

2. Zehranaz Canfes, M Furkan Atasoy, Alara Dirik, and Pinar Yanardag. 2022. Text and Image Guided 3D Avatar Generation and Manipulation. arXiv preprint arXiv:2202.06079 (2022).

3. Anton Cherepkov, Andrey Voynov, and Artem Babenko. 2021. Navigating the gan parameter space for semantic image editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3671–3680.

4. Antonia Creswell and Anil Anthony Bharath. 2018. Inverting the generator of a generative adversarial network. IEEE transactions on neural networks and learning systems 30, 7 (2018), 1967–1974.

5. Jiankang Deng, Jia Guo, Niannan Xue, and Stefanos Zafeiriou. 2019. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 4690–4699.

6. Sinuo Deng, Lifang Wu, Ge Shi, Lehao Xing, and Meng Jian. 2022. Learning to Compose Diversified Prompts for Image Emotion Classification. arXiv preprint arXiv:2201.10963 (2022).

7. Prafulla Dhariwal and Alexander Nichol. 2021. Diffusion models beat gans on image synthesis. Advances in Neural Information Processing Systems 34 (2021).

8. Finale Doshi-Velez and Been Kim. 2018. Considerations for evaluation and generalization in interpretable machine learning. In Explainable and interpretable models in computer vision and machine learning. Springer, 3–17.

9. Paul Ekman. 1992. An argument for basic emotions. Cognition & emotion 6, 3-4 (1992), 169–200.

10. Aviv Gabbay, Niv Cohen, and Yedid Hoshen. 2021. An image is worth more than a thousand words: Towards disentanglement in the wild. Advances in Neural Information Processing Systems 34 (2021), 9216–9228.

11. Rinon Gal, Or Patashnik, Haggai Maron, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2022. StyleGAN-NADA: CLIP-guided domain adaptation of image generators. ACM Transactions on Graphics (TOG) 41, 4 (2022), 1–13.

12. Felipe González-Pizarro and Savvas Zannettou. 2022. Understanding and Detecting Hateful Content using Contrastive Learning. arXiv preprint arXiv:2201.08387 (2022).

13. Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. Advances in neural information processing systems 27 (2014).

14. Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. Gans trained by a two time-scale update rule converge to a local nash equilibrium. Advances in neural information processing systems 30 (2017).

15. Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising Diffusion Probabilistic Models. In Advances in Neural Information Processing Systems, H. Larochelle, M. Ranzato, R. Hadsell, M.F. Balcan, and H. Lin (Eds.). Vol. 33. Curran Associates, Inc., 6840–6851. https://proceedings.neurips.cc/paper/2020/file/4c5bcfec8584af0d967f1ab10179ca4b-Paper.pdf

16. Xianxu Hou, Linlin Shen, Or Patashnik, Daniel Cohen-Or, and Hui Huang. 2022a. FEAT: Face Editing with Attention. arXiv preprint arXiv:2202.02713 (2022).

17. Xianxu Hou, Xiaokang Zhang, Hanbang Liang, Linlin Shen, Zhihui Lai, and Jun Wan. 2022b. Guidedstyle: Attribute knowledge guided style manipulation for semantic face editing. Neural Networks 145 (2022), 209–220.

18. Ian T Jolliffe. 2002. Principal component analysis for special types of data. Springer.

19. Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2017. Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196 (2017).

20. Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 4401–4410.

21. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

22. Siavash Khodadadeh, Shabnam Ghadar, Saeid Motiian, Wei-An Lin, Ladislau Bölöni, and Ratheesh Kalarot. 2022. Latent to Latent: A Learned Mapper for Identity Preserving Editing of Multiple Face Attributes in StyleGAN-Generated Images. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 3184–3192.

23. Umut Kocasari, Alara Dirik, Mert Tiftikci, and Pinar Yanardag. 2022. StyleMC: Multi-Channel Based Fast Text-Guided Image Generation and Manipulation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 895–904.

24. Bowen Li, Xiaojuan Qi, Thomas Lukasiewicz, and Philip Torr. 2019. Controllable text-to-image generation. Advances in Neural Information Processing Systems 32 (2019).

25. Boyi Li, Kilian Q Weinberger, Serge Belongie, Vladlen Koltun, and René Ranftl. 2022. Language-driven Semantic Segmentation. arXiv preprint arXiv:2201.03546 (2022).

26. Huan Ling, Karsten Kreis, Daiqing Li, Seung Wook Kim, Antonio Torralba, and Sanja Fidler. 2021. EditGAN: High-Precision Semantic Image Editing. Advances in Neural Information Processing Systems 34 (2021).

27. Michael J Lyons, Julien Budynek, Andre Plante, and Shigeru Akamatsu. 2000. Classifying facial attributes using a 2-d gabor wavelet representation and discriminant analysis. In Proceedings fourth IEEE international conference on automatic face and gesture recognition (Cat. No. PR00580). IEEE, 202–207.

28. Tim Miller. 2019. Explanation in artificial intelligence: Insights from the social sciences. Artificial intelligence 267 (2019), 1–38.

29. Nasir Mohammad Khalid, Tianhao Xie, Eugene Belilovsky, and Tiberiu Popa. 2022. CLIP-Mesh: Generating textured meshes from text using pretrained image-text models. In SIGGRAPH Asia 2022 Conference Papers. 1–8.

30. W James Murdoch, Chandan Singh, Karl Kumbier, Reza Abbasi-Asl, and Bin Yu. 2019. Definitions, methods, and applications in interpretable machine learning. Proceedings of the National Academy of Sciences 116, 44 (2019), 22071–22080.

31. Yotam Nitzan, Kfir Aberman, Qiurui He, Orly Liba, Michal Yarom, Yossi Gandelsman, Inbar Mosseri, Yael Pritch, and Daniel Cohen-Or. 2022. MyStyle: A Personalized Generative Prior. arXiv preprint arXiv:2203.17272 (2022).

32. Taesung Park, Jun-Yan Zhu, Oliver Wang, Jingwan Lu, Eli Shechtman, Alexei Efros, and Richard Zhang. 2020. Swapping autoencoder for deep image manipulation. Advances in Neural Information Processing Systems 33 (2020), 7198–7211.

33. Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. 2021. Styleclip: Text-driven manipulation of stylegan imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2085–2094.

34. Guim Perarnau, Joost Van De Weijer, Bogdan Raducanu, and Jose M Álvarez. 2016. Invertible conditional gans for image editing. arXiv preprint arXiv:1611.06355 (2016).

35. Stanislav Pidhorskyi, Donald A Adjeroh, and Gianfranco Doretto. 2020. Adversarial latent autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 14104–14113.

36. Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, 2021. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning. PMLR, 8748–8763.

37. Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125 (2022).

38. Ravi Kiran Reddy, Kumar Shubham, Gopalakrishnan Venkatesh, Sriram Gandikota, Sarthak Khoche, Dinesh Babu Jayagopi, and Gopalakrishnan Srinivasaraghavan. 2021. One-shot domain adaptation for semantic face editing of real world images using StyleALAE. arXiv preprint arXiv:2108.13876 (2021).

39. Bernhard Schölkopf, Alexander Smola, and Klaus-Robert Müller. 1997. Kernel principal component analysis. In International conference on artificial neural networks. Springer, 583–588.

40. Sefik Ilkin Serengil and Alper Ozpinar. 2021. HyperExtended LightFace: A Facial Attribute Analysis Framework. In 2021 International Conference on Engineering and Emerging Technologies (ICEET). IEEE, 1–4. https://doi.org/10.1109/ICEET53442.2021.9659697

41. Yujun Shen, Ceyuan Yang, Xiaoou Tang, and Bolei Zhou. 2020. Interfacegan: Interpreting the disentangled face representation learned by gans. IEEE transactions on pattern analysis and machine intelligence (2020).

42. Hengcan Shi, Munawar Hayat, and Jianfei Cai. 2022a. Unpaired Referring Expression Grounding via Bidirectional Cross-Modal Matching. arXiv preprint arXiv:2201.06686 (2022).

43. Hengcan Shi, Munawar Hayat, Yicheng Wu, and Jianfei Cai. 2022b. ProposalCLIP: Unsupervised Open-Category Object Proposal Generation via Exploiting CLIP Cues. arXiv preprint arXiv:2201.06696 (2022).

44. Laurens Van der Maaten and Geoffrey Hinton. 2008. Visualizing data using t-SNE.Journal of machine learning research 9, 11 (2008).

45. Yael Vinker, Ehsan Pajouheshgar, Jessica Y Bo, Roman Christian Bachmann, Amit Haim Bermano, Daniel Cohen-Or, Amir Zamir, and Ariel Shamir. 2022. Clipasso: Semantically-aware object sketching. arXiv preprint arXiv:2202.05822 (2022).

46. Zongze Wu, Dani Lischinski, and Eli Shechtman. 2021. Stylespace analysis: Disentangled controls for stylegan image generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12863–12872.

47. Weihao Xia, Yujiu Yang, Jing-Hao Xue, and Baoyuan Wu. 2021. Tedigan: Text-guided diverse face image generation and manipulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2256–2265.

48. Zipeng Xu, Tianwei Lin, Hao Tang, Fu Li, Dongliang He, Nicu Sebe, Radu Timofte, Luc Van Gool, and Errui Ding. 2022. Predict, Prevent, and Evaluate: Disentangled Text-Driven Image Manipulation Empowered by Pre-Trained Vision-Language Model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18229–18238.

49. Ansheng You, Chenglin Zhou, Qixuan Zhang, and Lan Xu. 2021. Towards Controllable and Photorealistic Region-wise Image Manipulation. In Proceedings of the 29th ACM International Conference on Multimedia. 535–543.

50. Yun Zhang, Ruixin Liu, Yifan Pan, Dehao Wu, Yuesheng Zhu, and Zhiqiang Bai. 2021. GI-AEE: GAN Inversion Based Attentive Expression Embedding Network For Facial Expression Editing. In 2021 IEEE International Conference on Image Processing (ICIP). IEEE, 2453–2457.

51. Jun-Yan Zhu, Philipp Krähenbühl, Eli Shechtman, and Alexei A Efros. 2016. Generative visual manipulation on the natural image manifold. In European conference on computer vision. Springer, 597–613.

52. Alara Zindancıoğlu and T Metin Sezgin. 2021. Perceptually Validated Precise Local Editing for Facial Action Units with StyleGAN. arXiv preprint arXiv:2107.12143 (2021).