“Automatic music video generating system by remixing existing contents in video hosting service based on hidden Markov model” by Ohya and Morishima

Title:

- Automatic music video generating system by remixing existing contents in video hosting service based on hidden Markov model

Abstract:

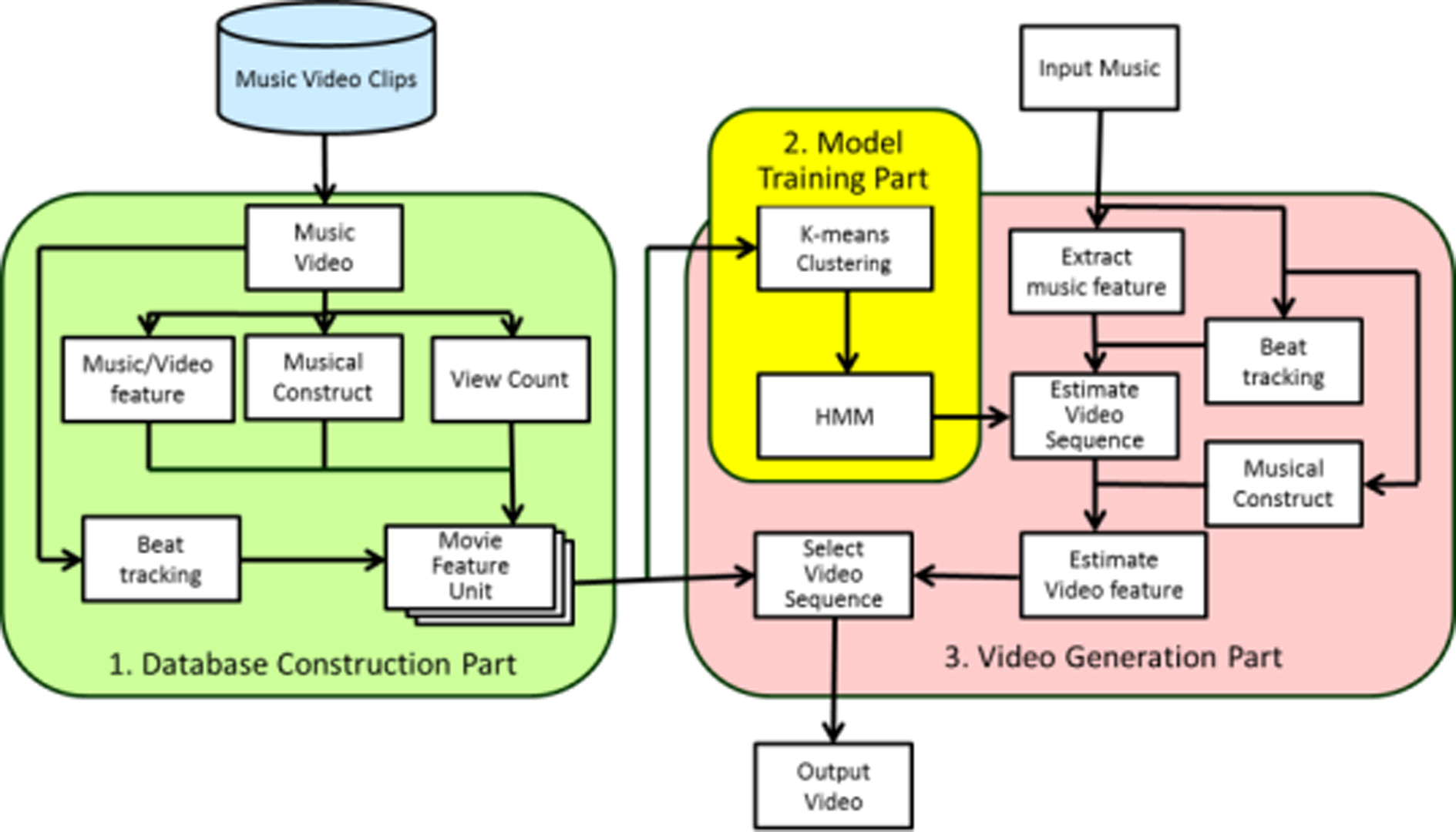

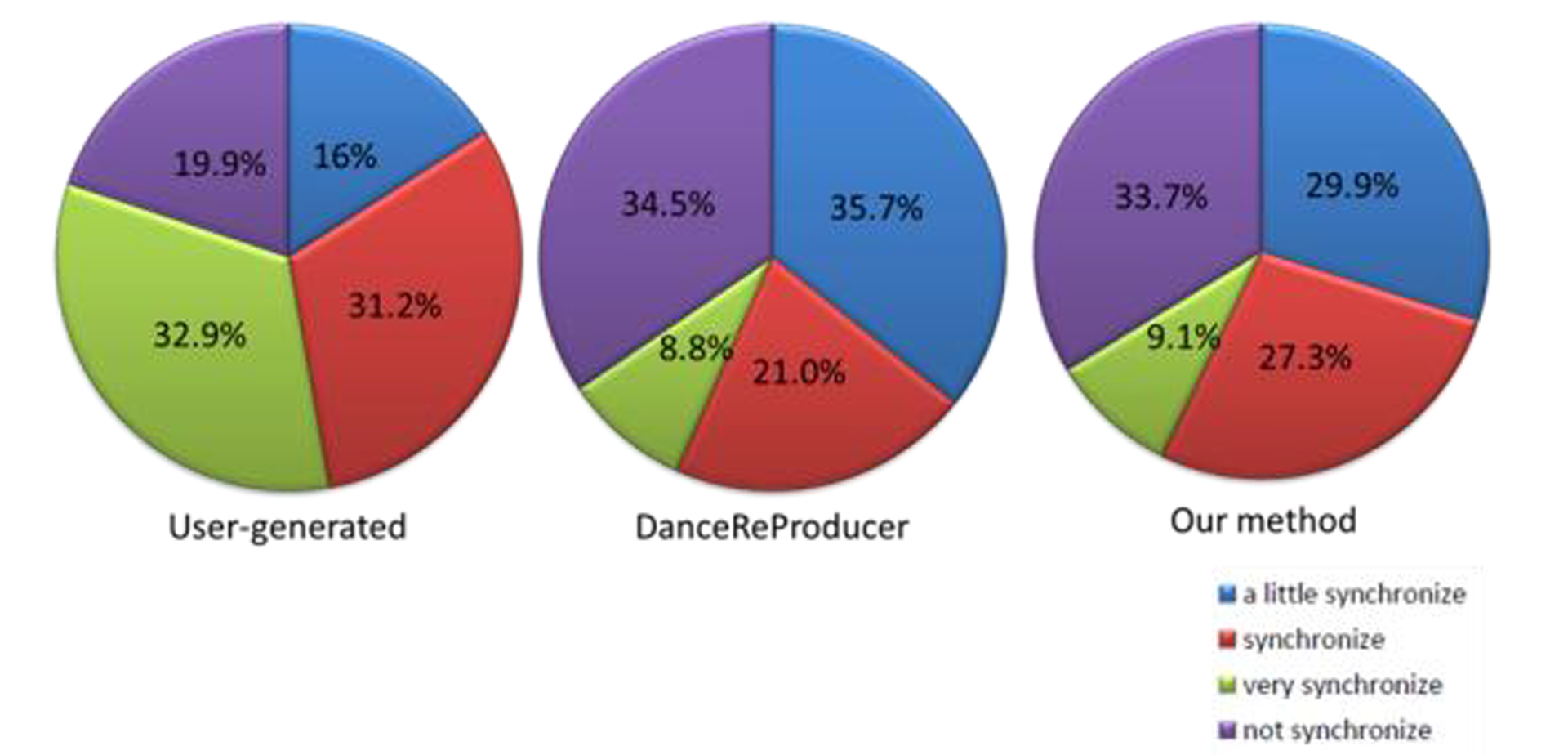

User-generated music video clips called MAD movies, which are derivatives (mixtures or combinations) of existing original video clips, are gaining popularity on the web, and many of them have been uploaded to and are available on video hosting services. A MAD music video clip consists of audio signals and video frames taken from other original video clips. In a MAD video clip, good synchronization between music and images with respect to rhythm, impression, and context is important. Although MAD videos are easy to enjoy, they are not easy to create: a creator needs high-performance video editing software, must spend a lot of time editing, and also needs video editing skills. DanceReProducer (Nakano et al. [2011]) is a dance video authoring system that automatically generates a dance video appropriate to a given piece of music by reusing existing dance video sequences. It learns the correspondence between music and video. However, because it learns only one-bar correspondences, DanceReProducer cannot learn information about video sequences. We therefore improved DanceReProducer to take video sequence information into account by using a Markov chain of latent variables (a hidden Markov model) and the forward Viterbi algorithm.
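To make the contrast between one-bar matching and sequence-aware matching concrete, here is a minimal sketch of standard Viterbi decoding over a hidden Markov model. The abstract does not specify the state space, features, or probabilities, so everything below is an assumption for illustration: each latent state stands for a hypothetical cluster of video segments, `log_emit[t][s]` is a hypothetical score of how well music bar `t` fits state `s`, and `log_trans` encodes which video states tend to follow one another. This is not the authors' implementation.

```python
import math
from typing import List, Sequence


def viterbi(log_init: Sequence[float],
            log_trans: Sequence[Sequence[float]],
            log_emit: Sequence[Sequence[float]]) -> List[int]:
    """Return the most likely latent-state sequence, one state per music bar.

    All inputs are hypothetical log-probabilities (assumed, not from the paper):
      log_init[s]     : log P(z_1 = s)
      log_trans[i][j] : log P(z_t = j | z_{t-1} = i)
      log_emit[t][s]  : log P(music features of bar t | z_t = s)
    """
    n_bars = len(log_emit)
    n_states = len(log_init)
    if n_bars == 0:
        return []

    # score[s]: best log-probability of any state path ending in s at the current bar
    score = [log_init[s] + log_emit[0][s] for s in range(n_states)]
    backptr: List[List[int]] = []

    for t in range(1, n_bars):
        new_score: List[float] = []
        ptrs: List[int] = []
        for j in range(n_states):
            # pick the predecessor state that maximizes the path score into state j
            best_i = max(range(n_states), key=lambda i: score[i] + log_trans[i][j])
            new_score.append(score[best_i] + log_trans[best_i][j] + log_emit[t][j])
            ptrs.append(best_i)
        score = new_score
        backptr.append(ptrs)

    # trace back from the best final state to recover the whole path
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for ptrs in reversed(backptr):
        state = ptrs[state]
        path.append(state)
    path.reverse()
    return path


# Example with two hypothetical states and three bars
L = math.log
init = [L(0.5), L(0.5)]
trans = [[L(0.9), L(0.1)], [L(0.1), L(0.9)]]   # "sticky" transitions
emit = [[L(0.8), L(0.2)], [L(0.4), L(0.6)], [L(0.8), L(0.2)]]
print(viterbi(init, trans, emit))  # [0, 0, 0]
```

Choosing the best state for each bar independently, as a purely one-bar matcher would, gives `[0, 1, 0]` here; with the assumed sticky transitions, Viterbi keeps state 0 for the middle bar because the whole sequence scores higher. This only illustrates the kind of effect sequence modeling can have, not results reported by the authors.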

References:

1. T. Nakano, S. Murofushi, M. Goto, and S. Morishima. 2011. DanceReProducer: An Automatic Mashup Music Video Generation System by Reusing Dance Video Clips on the Web. In Proceedings of SMC 2011, pp. 183–189, July 2011.