“Artistically Directable Walk Generation” by Ivanov and Havaldar

Conference:

Title:

- Artistically Directable Walk Generation

Session/Category Title:

- Virtual Presentations

Presenter(s)/Author(s):

Entry Number:

- 29

Abstract:

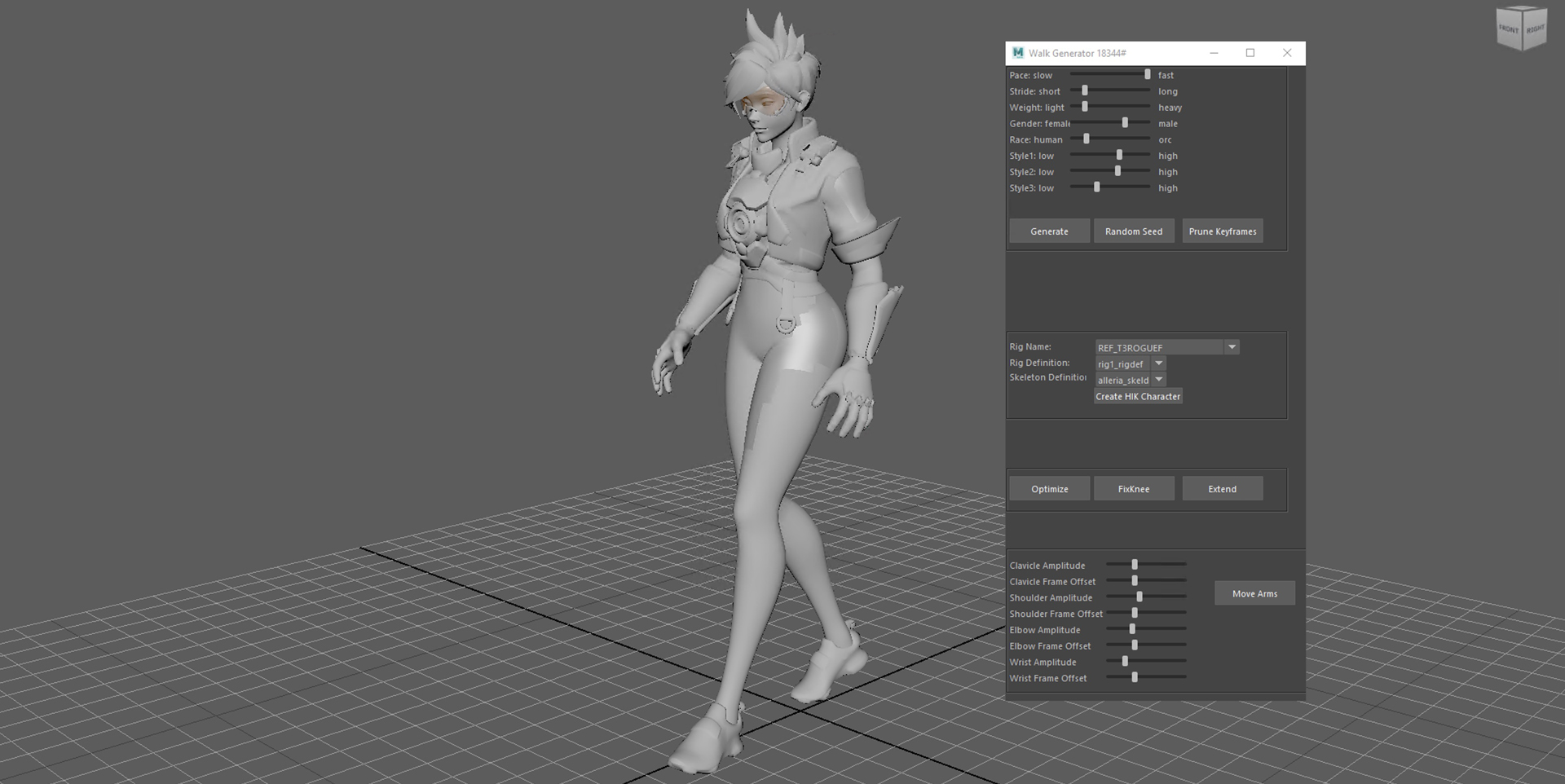

We present a framework for artistically directable walk generation. A generative network is trained on a motion capture dataset and a collection of manually animated walks. To accommodate an animator’s workflow, each walk is presented as a sequence of key poses. The framework lets the user specify a set of traits, including gender, stride, velocity, and weight. A generated walk is designed to be the starting point when blocking an animation: an animator can introduce new keys on the controls.

References:

CMU. 2003. CMU mocap. (2003). http://mocap.cs.cmu.edu/

Erik Härkönen, Aaron Hertzmann, Jaakko Lehtinen, and Sylvain Paris. 2020. GANSpace: Discovering Interpretable GAN Controls. In Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual, Hugo Larochelle, Marc’Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin (Eds.). https://proceedings.neurips.cc/paper/2020/hash/6fe43269967adbb64ec6149852b5cc3e-Abstract.html

Félix G. Harvey, Mike Yurick, Derek Nowrouzezahrai, and Christopher J. Pal. 2021. Robust Motion In-betweening. CoRR abs/2102.04942 (2021). arXiv:2102.04942 https://arxiv.org/abs/2102.04942

Alon Shoshan, Nadav Bhonker, Igor Kviatkovsky, and Gérard G. Medioni. 2021. GAN-Control: Explicitly Controllable GANs. CoRR abs/2101.02477 (2021). arXiv:2101.02477 https://arxiv.org/abs/2101.02477

Yi Zhou, Jingwan Lu, Connelly Barnes, Jimei Yang, Sitao Xiang, and Hao Li. 2020. Generative Tweening: Long-term Inbetweening of 3D Human Motions. CoRR abs/2005.08891 (2020). arXiv:2005.08891 https://arxiv.org/abs/2005.08891