“AMP: adversarial motion priors for stylized physics-based character control” by Peng, Ma, Abbeel, Levine and Kanazawa

Conference:

Type(s):

Title:

- AMP: adversarial motion priors for stylized physics-based character control

Presenter(s)/Author(s):

Abstract:

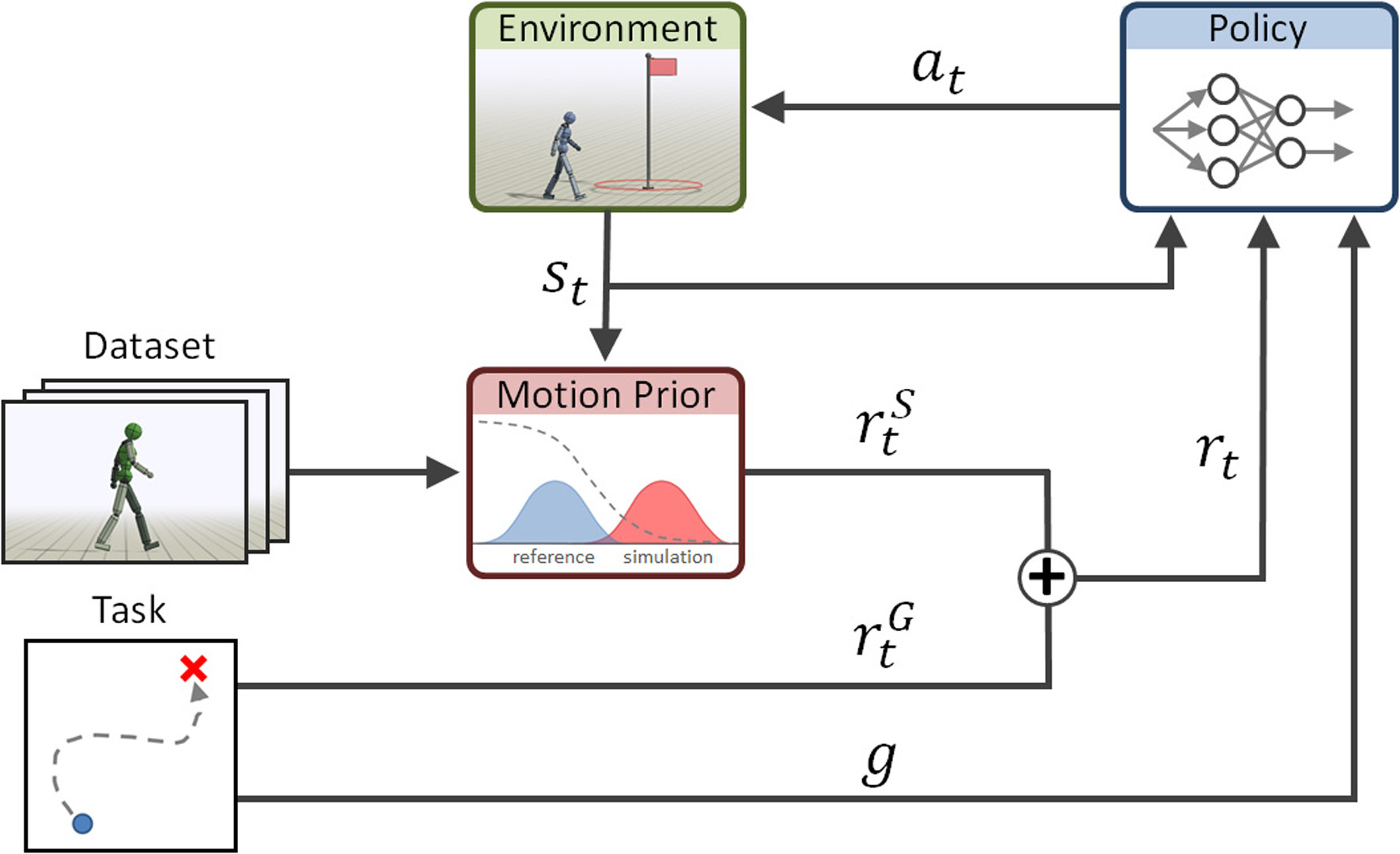

Synthesizing graceful and life-like behaviors for physically simulated characters has been a fundamental challenge in computer animation. Data-driven methods that leverage motion tracking are a prominent class of techniques for producing high fidelity motions for a wide range of behaviors. However, the effectiveness of these tracking-based methods often hinges on carefully designed objective functions, and when applied to large and diverse motion datasets, these methods require significant additional machinery to select the appropriate motion for the character to track in a given scenario. In this work, we propose to obviate the need to manually design imitation objectives and mechanisms for motion selection by utilizing a fully automated approach based on adversarial imitation learning. High-level task objectives that the character should perform can be specified by relatively simple reward functions, while the low-level style of the character’s behaviors can be specified by a dataset of unstructured motion clips, without any explicit clip selection or sequencing. For example, a character traversing an obstacle course might utilize a task-reward that only considers forward progress, while the dataset contains clips of relevant behaviors such as running, jumping, and rolling. These motion clips are used to train an adversarial motion prior, which specifies style-rewards for training the character through reinforcement learning (RL). The adversarial RL procedure automatically selects which motion to perform, dynamically interpolating and generalizing from the dataset. Our system produces high-quality motions that are comparable to those achieved by state-of-the-art tracking-based techniques, while also being able to easily accommodate large datasets of unstructured motion clips. Composition of disparate skills emerges automatically from the motion prior, without requiring a high-level motion planner or other task-specific annotations of the motion clips. We demonstrate the effectiveness of our framework on a diverse cast of complex simulated characters and a challenging suite of motor control tasks.
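
The abstract describes two reward signals: a simple task-reward and a style-reward supplied by the adversarial motion prior. The sketch below illustrates one way such signals could be combined into a single RL reward. The abstract gives no formulas, so everything here is an assumption made for illustration: the function names `style_reward` and `combined_reward`, the convention that the discriminator scores dataset-like transitions near +1 and policy-like transitions near -1, the least-squares-style mapping to a bounded reward, and the equal weights.

```python
def style_reward(disc_score: float) -> float:
    """Map a discriminator score to a style reward in [0, 1].

    Assumption: the discriminator is trained to output about +1 for
    transitions that look like the motion dataset and about -1 for
    transitions produced by the policy. The quadratic mapping below is
    an illustrative choice, not taken from the abstract.
    """
    return max(0.0, 1.0 - 0.25 * (disc_score - 1.0) ** 2)


def combined_reward(task_reward: float,
                    disc_score: float,
                    w_task: float = 0.5,
                    w_style: float = 0.5) -> float:
    """Weighted sum of a task reward and the style reward.

    The weights are hypothetical defaults; in practice they would be
    tuned per task.
    """
    return w_task * task_reward + w_style * style_reward(disc_score)


if __name__ == "__main__":
    # A transition the discriminator cannot tell apart from the dataset
    # earns the full style reward; a clearly "fake" one earns none.
    print(style_reward(1.0), style_reward(-1.0))
    # Forward-progress task reward of 0.4 with an ambiguous score of 0.
    print(combined_reward(0.4, 0.0))
```

With this shape, the policy is paid for task progress only to the extent that its motion also resembles the dataset, which is the trade-off the abstract describes between high-level task objectives and low-level style.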
