“Active refocusing of images and videos” by Moreno-Noguer, Belhumeur and Nayar

Title:

- Active refocusing of images and videos

Presenter(s)/Author(s):

- Moreno-Noguer, Belhumeur and Nayar

Abstract:

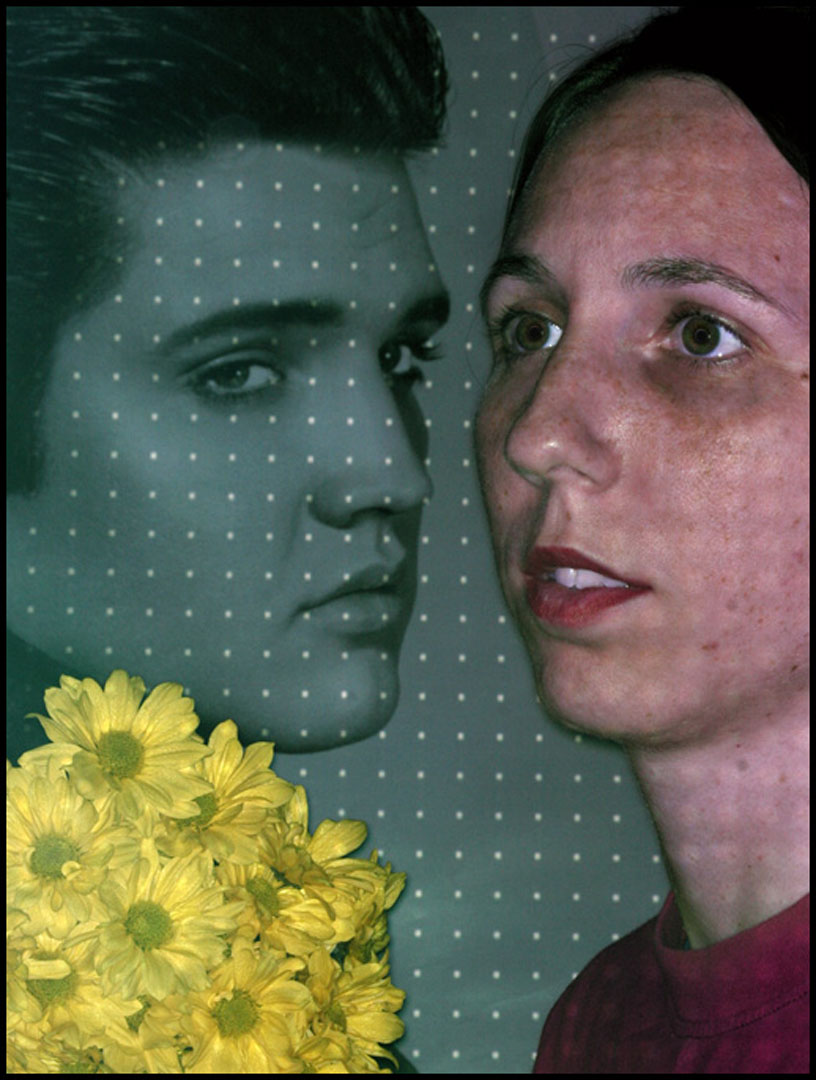

We present a system for refocusing images and videos of dynamic scenes using a novel, single-view depth estimation method. Our method for obtaining depth is based on the defocus of a sparse set of dots projected onto the scene. In contrast to other active illumination techniques, the projected pattern of dots can be removed from each captured image and its brightness easily controlled in order to avoid under- or over-exposure. The depths corresponding to the projected dots and a color segmentation of the image are used to compute an approximate depth map of the scene with clean region boundaries. The depth map is used to refocus the acquired image after the dots are removed, simulating realistic depth of field effects. Experiments on a wide variety of scenes, including close-ups and live action, demonstrate the effectiveness of our method.
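
The final step described in the abstract, refocusing an image from an approximate depth map, can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a thin-lens camera model for the blur-circle diameter, a grayscale image, and a simple box filter applied per depth layer, which ignores occlusion effects at region boundaries. The function names and the parameters `focus_dist`, `focal_len`, `aperture`, and `px_per_unit` are hypothetical and chosen only for illustration.

```python
import numpy as np

def blur_diameter(depth, focus_dist, focal_len, aperture):
    """Thin-lens circle-of-confusion diameter, in the same length unit as the inputs."""
    depth = np.asarray(depth, dtype=float)
    return (aperture * focal_len * np.abs(depth - focus_dist)
            / (depth * (focus_dist - focal_len)))

def box_blur(img, radius):
    """Mean filter over a (2r+1) x (2r+1) window with edge padding (assumed kernel)."""
    if radius == 0:
        return img.astype(float)
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def refocus(img, depth_map, focus_dist, focal_len, aperture, px_per_unit):
    """Blur each pixel by an amount that depends on its depth.

    px_per_unit converts the blur diameter from scene units to pixels.
    """
    diam = blur_diameter(depth_map, focus_dist, focal_len, aperture)
    radii = np.rint(0.5 * diam * px_per_unit).astype(int)
    out = np.zeros(img.shape, dtype=float)
    # One blurred copy per distinct radius; pixels pick the copy for their depth.
    for r in np.unique(radii):
        mask = radii == r
        out[mask] = box_blur(img, int(r))[mask]
    return out

# Toy scene: a checkerboard whose left half lies on the focal plane (1.0)
# and whose right half lies farther away (2.0).
img = (np.indices((8, 8)).sum(axis=0) % 2).astype(float)
depth_map = np.full((8, 8), 1.0)
depth_map[:, 4:] = 2.0
result = refocus(img, depth_map, focus_dist=1.0, focal_len=0.05,
                 aperture=0.025, px_per_unit=3000.0)
```

In this toy scene the left half is returned unchanged, while the right half is smoothed with a one-pixel radius. A more faithful renderer would use a disc-shaped kernel and handle occlusions between depth layers.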
