“A deep learning framework for character motion synthesis and editing”

Title:

- A deep learning framework for character motion synthesis and editing

Session/Category Title: PLANTS & HUMANS

Abstract:

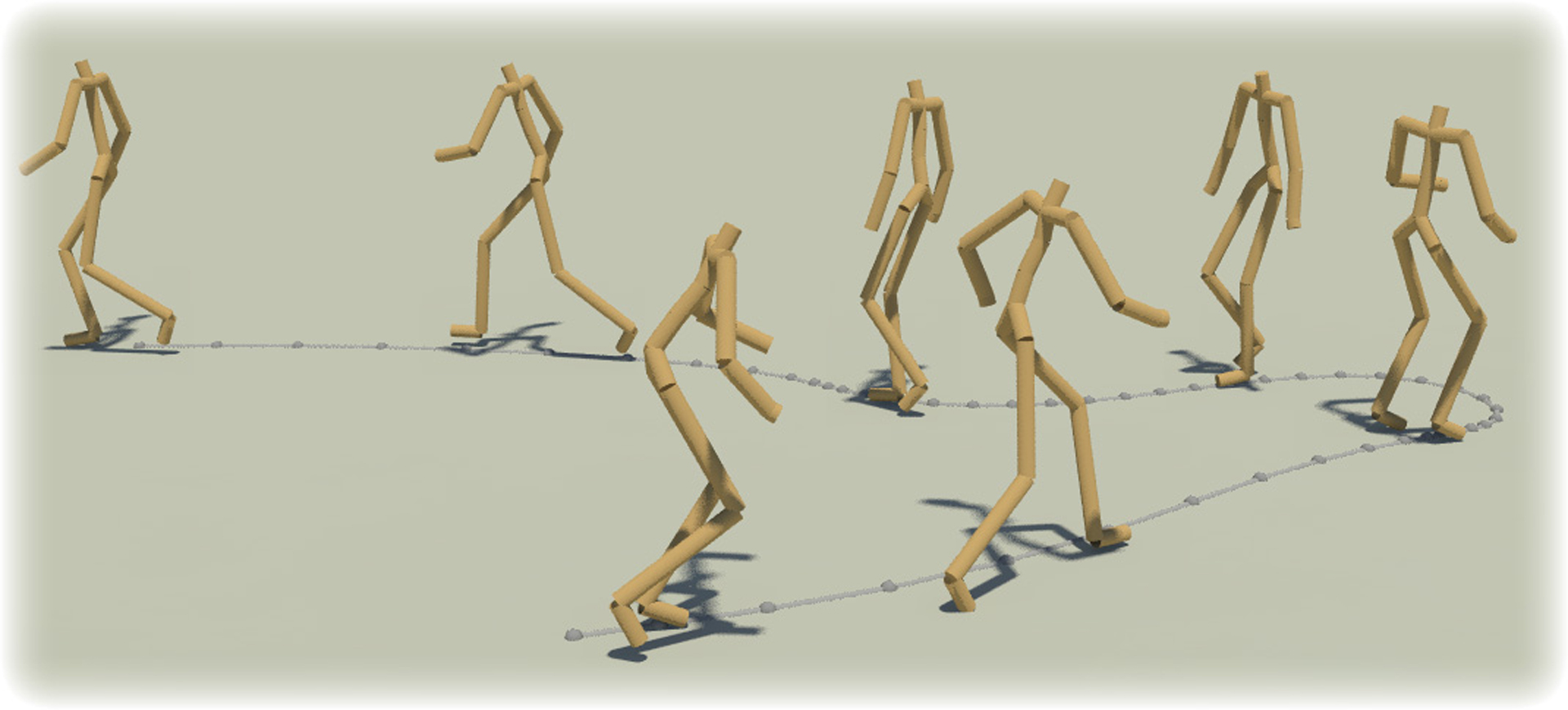

We present a framework for synthesizing character movements from high-level parameters such that the produced movements respect the manifold of human motion, which is learned from a large motion capture dataset. The learned motion manifold, given by the hidden units of a convolutional autoencoder, represents motion data as sparse components that can be combined to produce a wide range of complex movements. To map from high-level parameters to the motion manifold, we stack a deep feedforward neural network on top of the trained autoencoder. This network is trained to produce realistic motion sequences from parameters such as a curve over the terrain that the character should follow, or a target location for punching and kicking. The feedforward control network and the motion manifold are trained independently, so the user can easily switch between feedforward networks according to the desired interface without re-training the motion manifold. Once motion is generated, it can be edited by performing optimization in the space of the motion manifold. This allows kinematic constraints to be imposed, or the style of the motion to be transformed, while ensuring that the edited motion remains natural. As a result, the system can produce smooth, high-quality motion sequences without any manual pre-processing of the training data.
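The idea of editing motion by optimizing in the space of the motion manifold can be illustrated with a minimal sketch. This is not the authors' implementation: the decoder here is a small linear 1-D convolution over time with random weights standing in for a trained convolutional autoencoder, and every name in it (`decode`, `constraint_loss`, `edit_in_hidden_space`, the `constraints` dictionary) is hypothetical. It only shows the general pattern of holding the decoder fixed and running gradient descent on the hidden units until the decoded motion meets a simple kinematic constraint.

```python
import random

def decode(hidden, filters):
    """Toy linear decoder (assumption): zero-padded 1-D convolution over time.
    hidden: frames x n_hidden, filters: width x n_hidden x n_dof."""
    width, pad = len(filters), len(filters) // 2
    frames, n_dof = len(hidden), len(filters[0][0])
    out = [[0.0] * n_dof for _ in range(frames)]
    for t in range(frames):
        for k in range(width):
            s = t + k - pad  # hidden frame that feeds output frame t via tap k
            if 0 <= s < frames:
                for i, h in enumerate(hidden[s]):
                    for j in range(n_dof):
                        out[t][j] += h * filters[k][i][j]
    return out

def constraint_loss(hidden, filters, constraints):
    """Half the squared error over the constrained (frame, dof) entries."""
    motion = decode(hidden, filters)
    return 0.5 * sum((motion[t][j] - v) ** 2 for (t, j), v in constraints.items())

def loss_gradient(hidden, filters, constraints):
    """Analytic gradient of constraint_loss with respect to the hidden units."""
    motion = decode(hidden, filters)
    pad, frames = len(filters) // 2, len(hidden)
    grad = [[0.0] * len(hidden[0]) for _ in range(frames)]
    for (t, j), v in constraints.items():
        residual = motion[t][j] - v
        for k in range(len(filters)):
            s = t + k - pad
            if 0 <= s < frames:
                for i in range(len(hidden[0])):
                    grad[s][i] += residual * filters[k][i][j]
    return grad

def edit_in_hidden_space(hidden, filters, constraints, step=0.02, iters=200):
    """Gradient descent on a copy of the hidden units; the decoder stays fixed."""
    hidden = [row[:] for row in hidden]
    for _ in range(iters):
        grad = loss_gradient(hidden, filters, constraints)
        for s, row in enumerate(grad):
            for i, g in enumerate(row):
                hidden[s][i] -= step * g
    return hidden

random.seed(0)
frames, n_hidden, n_dof, width = 8, 4, 3, 3
filters = [[[random.uniform(-0.5, 0.5) for _ in range(n_dof)]
            for _ in range(n_hidden)] for _ in range(width)]
hidden = [[random.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(frames)]
# Hypothetical constraints: pin one output coordinate at two separate frames.
constraints = {(2, 0): 0.0, (5, 1): 1.0}

before = constraint_loss(hidden, filters, constraints)
edited = edit_in_hidden_space(hidden, filters, constraints)
after = constraint_loss(edited, filters, constraints)
print("constraint loss before:", before, "after:", after)
```

Because only the hidden units change, the edited motion is always something the decoder can produce, which is the intuition behind keeping edits close to natural motion. A trained, nonlinear decoder would need automatic differentiation rather than the hand-written gradient used here.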
