“4D frequency analysis of computational cameras for depth of field extension” by Levin, Hasinoff, Durand, Freeman and Green

Conference:

Type(s):

Title:

- 4D frequency analysis of computational cameras for depth of field extension

Presenter(s)/Author(s):

- Levin, Hasinoff, Durand, Freeman and Green

Abstract:

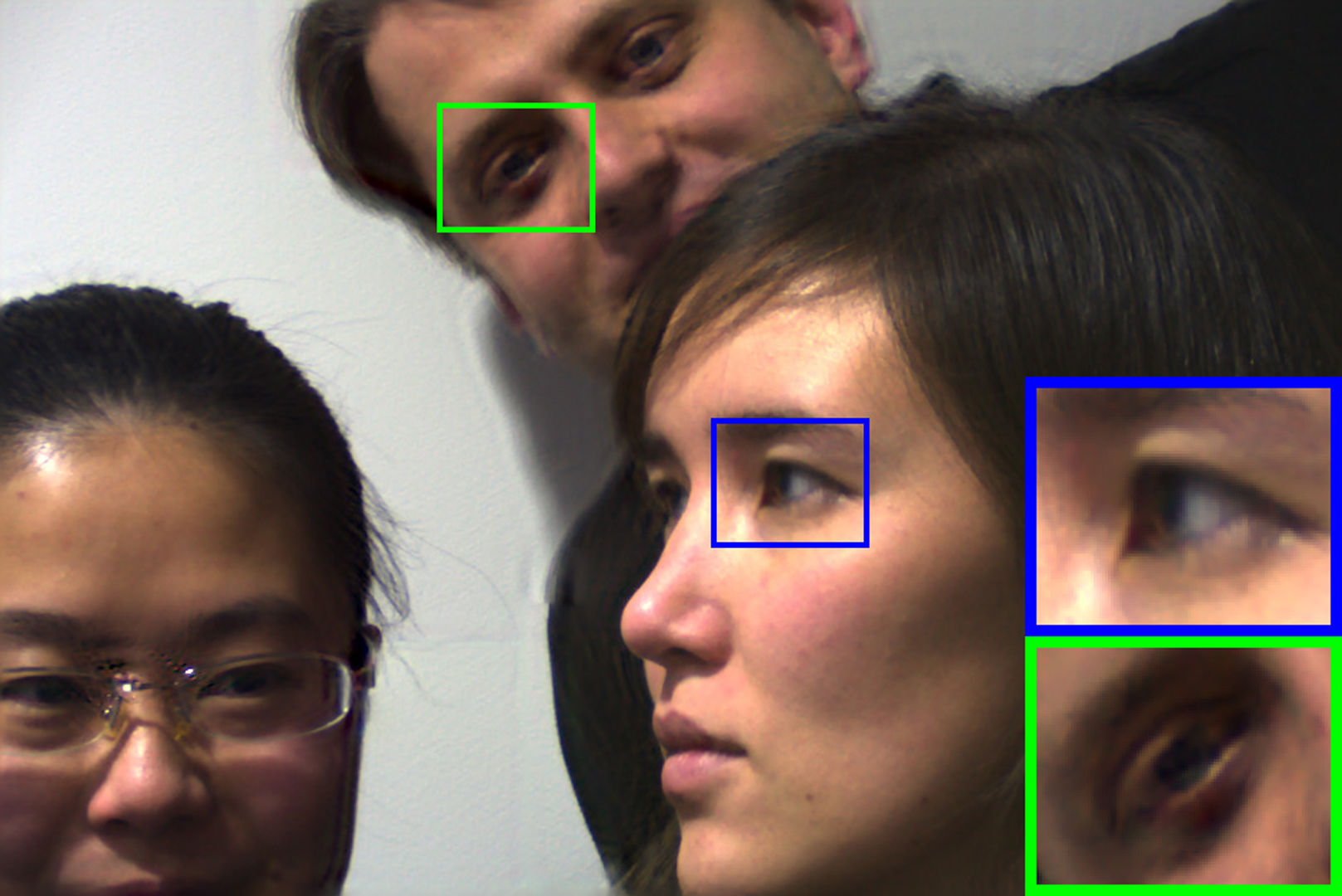

Depth of field (DOF), the range of scene depths that appear sharp in a photograph, poses a fundamental tradeoff in photography—wide apertures are important to reduce imaging noise, but they also increase defocus blur. Recent advances in computational imaging modify the acquisition process to extend the DOF through deconvolution. Because deconvolution quality is a tight function of the frequency power spectrum of the defocus kernel, designs with high spectra are desirable. In this paper we study how to design effective extended-DOF systems, and show an upper bound on the maximal power spectrum that can be achieved. We analyze defocus kernels in the 4D light field space and show that in the frequency domain, only a low-dimensional 3D manifold contributes to focus. Thus, to maximize the defocus spectrum, imaging systems should concentrate their limited energy on this manifold. We review several computational imaging systems and show either that they spend energy outside the focal manifold or do not achieve a high spectrum over the DOF. Guided by this analysis we introduce the lattice-focal lens, which concentrates energy at the low-dimensional focal manifold and achieves a higher power spectrum than previous designs. We have built a prototype lattice-focal lens and present extended depth of field results.
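The abstract's claim that deconvolution quality follows the kernel's power spectrum can be illustrated with a small numerical sketch. The code below is not from the paper. It assumes a 1D box-shaped defocus kernel, white Gaussian noise, and a flat signal prior, and it uses the standard per-frequency expected error of Wiener deconvolution. The function names and the values of `noise_var` and `signal_var` are hypothetical choices made for illustration.

```python
import numpy as np

def box_kernel(width, n=256):
    """1D box defocus kernel of the given width, unit sum, zero-padded to n samples."""
    k = np.zeros(n)
    k[:width] = 1.0 / width
    return k

def power_spectrum(kernel):
    """Squared magnitude of the kernel's discrete Fourier transform."""
    return np.abs(np.fft.fft(kernel)) ** 2

def expected_wiener_error(kernel, noise_var=1e-4, signal_var=1.0):
    """Mean expected squared error of Wiener deconvolution over all frequencies.

    Assumes (illustration only) a flat signal prior with variance signal_var
    and white noise with variance noise_var at every frequency.
    """
    p = power_spectrum(kernel)
    per_freq = signal_var * noise_var / (p * signal_var + noise_var)
    return per_freq.mean()

# Wider blur -> lower power spectrum -> higher expected reconstruction error.
errors = {w: expected_wiener_error(box_kernel(w)) for w in (1, 5, 15)}
for w, e in errors.items():
    print(f"blur width {w:2d}: expected error {e:.5f}")
```

Under these assumptions the expected error at each frequency falls as the kernel's power spectrum rises, which is why a design that keeps the spectrum high across the whole depth range is easier to deconvolve.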

References:

1. Adams, A., and Levoy, M. 2007. General linear cameras with finite aperture. In EGSR.

2. Agarwala, A., Dontcheva, M., Agrawala, M., Drucker, S., Colburn, A., Curless, B., Salesin, D., and Cohen, M. 2004. Interactive digital photomontage. In SIGGRAPH.

3. Ben-Eliezer, E., Zalevsky, Z., Marom, E., and Konforti, N. 2005. Experimental realization of an imaging system with an extended depth of field. Applied Optics, 2792–2798.

4. Brenner, K., Lohmann, A., and Casteneda, J. O. 1983. The ambiguity function as a polar display of the OTF. Opt. Commun. 44, 323–326.

5. Dowski, E., and Cathey, W. 1995. Extended depth of field through wavefront coding. Applied Optics 34, 1859–1866.

6. FitzGerrell, A. R., Dowski, E., and Cathey, W. 1997. Defocus transfer function for circularly symmetric pupils. Applied Optics 36, 5796–5804.

7. George, N., and Chi, W. 2003. Computational imaging with the logarithmic asphere: theory. J. Opt. Soc. Am. A 20, 2260–2273.

8. Goodman, J. W. 1968. Introduction to Fourier Optics. McGraw-Hill Book Company.

9. Gu, X., Gortler, S. J., and Cohen, M. F. 1997. Polyhedral geometry and the two-plane parameterization. In EGSR.

10. Hasinoff, S., and Kutulakos, K. 2008. Light-efficient photography. In ECCV.

11. Hausler, G. 1972. A method to increase the depth of focus by two step image processing. Optics Communications, 38–42.

12. Horn, B. K. P. 1968. Focusing. Tech. Rep. AIM-160, Massachusetts Institute of Technology.

13. Levin, A., Fergus, R., Durand, F., and Freeman, W. 2007. Image and depth from a conventional camera with a coded aperture. In SIGGRAPH.

14. Levin, A., Freeman, W., and Durand, F. 2008. Understanding camera trade-offs through a Bayesian analysis of light field projections. In ECCV.

15. Levin, A., Freeman, W., and Durand, F. 2008. Understanding camera trade-offs through a Bayesian analysis of light field projections. MIT CSAIL TR 2008-049.

16. Levin, A., Sand, P., Cho, T. S., Durand, F., and Freeman, W. T. 2008. Motion invariant photography. In SIGGRAPH.

17. Levin, A., Hasinoff, S., Green, P., Durand, F., and Freeman, W. 2009. 4D frequency analysis of computational cameras for depth of field extension. MIT CSAIL TR 2009-019.

18. Levin, A., Weiss, Y., Durand, F., and Freeman, W. 2009. Understanding and evaluating blind deconvolution algorithms. In CVPR.

19. Levoy, M., and Hanrahan, P. M. 1996. Light field rendering. In SIGGRAPH.

20. Nagahara, H., Kuthirummal, S., Zhou, C., and Nayar, S. 2008. Flexible depth of field photography. In ECCV.

21. Ng, R. 2005. Fourier slice photography. In SIGGRAPH.

22. Ogden, J., Adelson, E., Bergen, J. R., and Burt, P. 1985. Pyramid-based computer graphics. RCA Engineer 30, 5, 4–15.

23. Papoulis, A. 1974. Ambiguity function in Fourier optics. Journal of the Optical Society of America A 64, 779–788.

24. Rihaczek, A. W. 1969. Principles of high-resolution radar. McGraw-Hill.

25. Veeraraghavan, A., Raskar, R., Agrawal, A., Mohan, A., and Tumblin, J. 2007. Dappled photography: Mask enhanced cameras for heterodyned light fields and coded aperture refocusing. In SIGGRAPH.

26. Zhang, Z., and Levoy, M. 2009. Wigner distributions and how they relate to the light field. In ICCP.