“MaD: Mapping by Demonstration for Continuous Sonification” by Françoise, Schnell and Bevilacqua

Conference:

- SIGGRAPH 2014

Type(s):

Entry Number: 17

Title:

- MaD: Mapping by Demonstration for Continuous Sonification

Presenter(s):

Description:

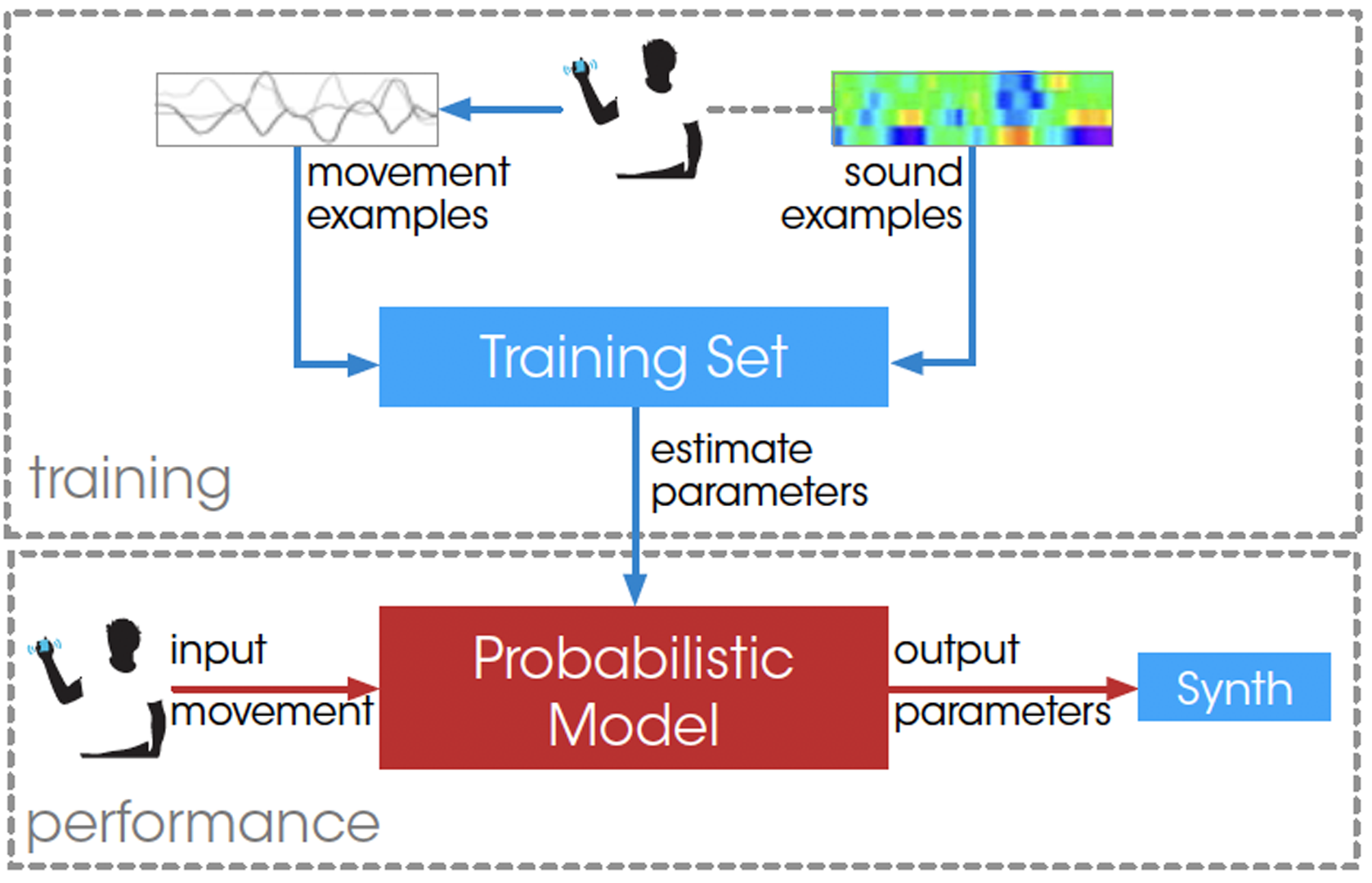

Gesture has become ubiquitous as an input modality in computer systems, through multi-touch interfaces, cameras, or inertial measurement units. Whereas gesture control often relies on the visual modality for feedback, sound feedback has been significantly less explored. Nevertheless, sound can provide both discrete and continuous feedback on gestures and on the results of their actions. We present a system that significantly simplifies and speeds up the design of continuous sonification. Our approach is based on the concept of mapping by demonstration, a methodology that enables designers to create motion-sound relationships intuitively, avoiding the complex programming usually required by common approaches. Our system relies on multimodal machine learning techniques combined with hybrid sound synthesis. We believe that this system opens new directions in the design of continuous motion-sound interactions. We developed prototypes in several fields: performing arts, gaming, and sound-guided rehabilitation.
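To make the idea of mapping by demonstration more concrete, here is a minimal sketch in Python, assuming NumPy is available. The designer records a demonstration that pairs motion features with the sound-synthesis parameters heard at the same moments. A model of the joint motion-sound distribution is then queried with new motion to produce sound parameters. As a deliberate simplification, the sketch fits a single joint Gaussian and returns its conditional mean (Gaussian regression with one component). This is not the authors' model, and the function names, the motion feature (hand height) and the sound parameter (filter cutoff) are hypothetical choices for illustration.

```python
import numpy as np


def fit_joint_gaussian(motion, sound):
    """Fit one Gaussian over concatenated [motion, sound] demonstration frames.

    motion: array of shape (T, Dm); sound: array of shape (T, Ds).
    Simplified stand-in for a multimodal model, not the MaD implementation.
    """
    joint = np.hstack([motion, sound])            # shape (T, Dm + Ds)
    mean = joint.mean(axis=0)
    cov = np.cov(joint, rowvar=False)
    cov += 1e-6 * np.eye(cov.shape[0])            # small ridge for numerical stability
    return mean, cov, motion.shape[1]


def map_motion_to_sound(model, x):
    """Return E[sound | motion = x] under the joint Gaussian."""
    mean, cov, dm = model
    mu_m, mu_s = mean[:dm], mean[dm:]
    s_mm = cov[:dm, :dm]                          # motion-motion covariance
    s_sm = cov[dm:, :dm]                          # sound-motion cross-covariance
    return mu_s + s_sm @ np.linalg.solve(s_mm, x - mu_m)


if __name__ == "__main__":
    # Hypothetical demonstration: hand height rises from 0 to 1 while the
    # designer sweeps a filter cutoff from 200 Hz to 2000 Hz.
    t = np.linspace(0.0, 1.0, 101)
    motion = t[:, None]
    sound = (200.0 + 1800.0 * t)[:, None]
    model = fit_joint_gaussian(motion, sound)
    # Query with a new hand height; prints a cutoff close to 650 Hz.
    print(map_motion_to_sound(model, np.array([0.25])))
```

A single Gaussian can only capture a linear motion-sound relationship; nonlinear or time-dependent mappings would typically call for richer models such as Gaussian mixtures or hidden Markov models.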

Acknowledgements:

We acknowledge support from the ANR project Legos (11-BS02-012) and the Labex SMART (ANR-11-LABX-65).