“GauGAN: Semantic Image Synthesis with Spatially Adaptive Normalization” by Park, Liu, Wang and Zhu

Conference:

- SIGGRAPH 2019

Type(s):

E-Tech Type(s):

- Adaptive Technologies

Entry Number: 02

Title:

- GauGAN: Semantic Image Synthesis with Spatially Adaptive Normalization

Developer(s):

Description:

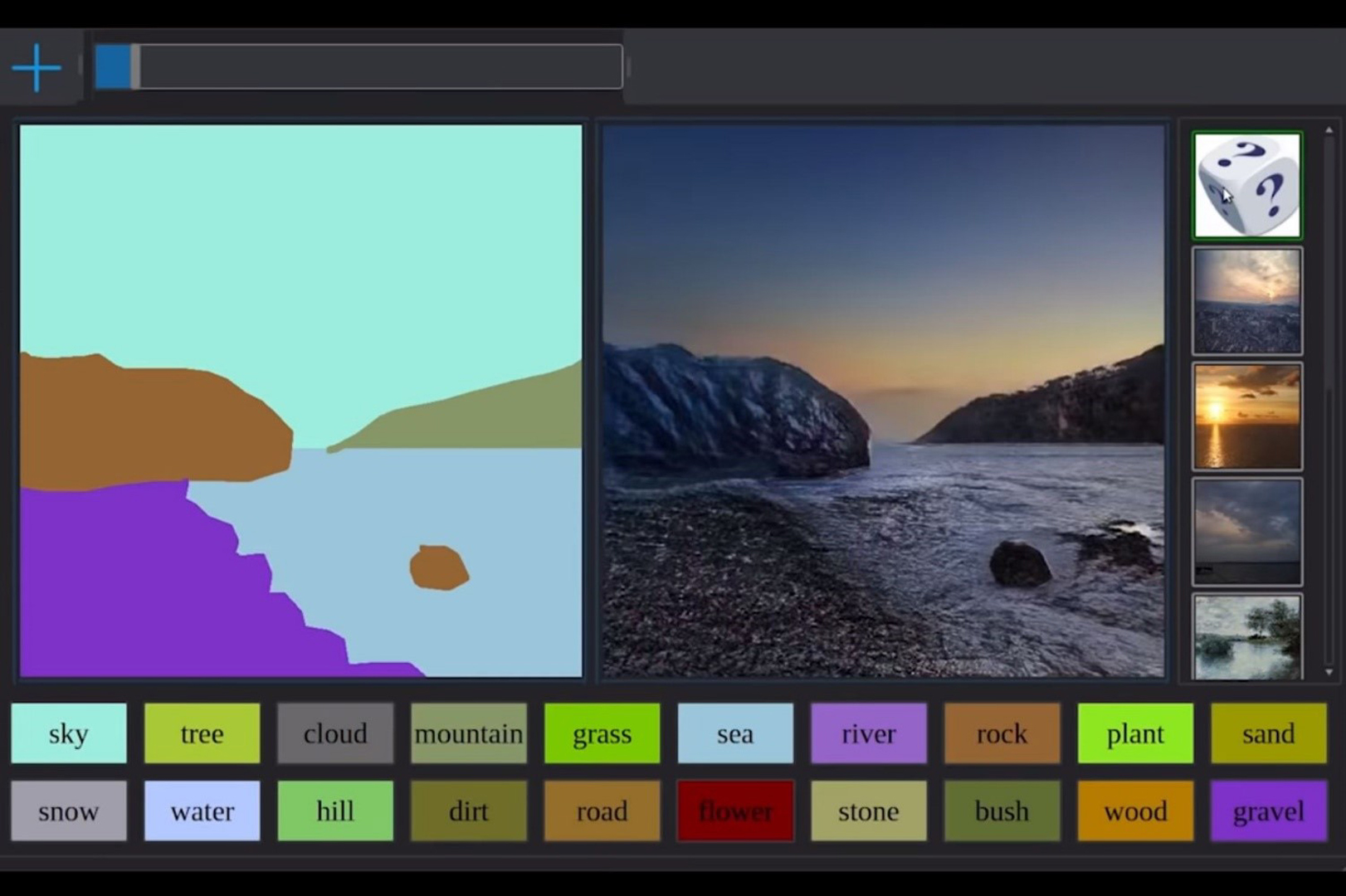

Won the Audience Choice and Best in Show awards.

We propose GauGAN, a GAN-based image synthesis model that generates photorealistic images from an input semantic layout. It is built on spatially adaptive normalization, a simple but effective normalization layer. Previous methods feed the semantic layout directly into the deep network as input, where it is processed through stacks of convolution, normalization, and nonlinearity layers. We show that this is suboptimal because the normalization layers tend to “wash away” semantic information. To address this issue, we propose using the input layout to modulate the activations in the normalization layers through a spatially adaptive, learned transformation. Our proposed method outperforms previous methods by a large margin. Furthermore, the new method extends naturally to controlling the style of the synthesized images. Given a style guide image, our style encoder network captures the style in a latent code, which our image generator network combines with the semantic layout via spatially adaptive normalization to generate a photorealistic image that respects both the style of the guide image and the content of the semantic layout. Our method will enable people without drawing skills to express their imagination effectively. At inference time, GauGAN is a simple convolutional neural network that runs in real time on most modern GPUs. GauGAN is one of several recent research efforts to advance GANs for real-time image rendering. We believe this work is of interest to the SIGGRAPH and real-time communities.
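The core idea of spatially adaptive normalization can be illustrated with a small sketch. It is not the authors' implementation: it assumes a single image, plain nested lists instead of tensors, and per-class lookup tables (`gamma_table`, `beta_table`, both hypothetical names) standing in for the learned transformation that maps the layout to a scale and a shift at every pixel. In the real model that mapping is learned; here the tables are simply given.

```python
import math


def spade_normalize(x, labels, gamma_table, beta_table, eps=1e-5):
    """Sketch of spatially adaptive normalization for one image.

    x            -- list of C channels, each an H x W nested list of floats
    labels       -- H x W nested list of integer class ids (the semantic layout)
    gamma_table  -- gamma_table[k][c]: scale for class k, channel c (assumed given)
    beta_table   -- beta_table[k][c]: shift for class k, channel c (assumed given)
    """
    out = []
    for c, channel in enumerate(x):
        # Parameter-free normalization: per-channel mean and variance.
        values = [v for row in channel for v in row]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        inv_std = 1.0 / math.sqrt(var + eps)

        out_channel = []
        for i, row in enumerate(channel):
            out_row = []
            for j, v in enumerate(row):
                k = labels[i][j]  # class of this pixel in the layout
                normalized = (v - mean) * inv_std
                # Scale and shift depend on the pixel's label, so they vary
                # across the image instead of being one value per channel.
                out_row.append(gamma_table[k][c] * normalized + beta_table[k][c])
            out_channel.append(out_row)
        out.append(out_channel)
    return out


# A constant activation map normalizes to all zeros, which is how ordinary
# normalization can "wash away" information. The label-dependent shift
# restores the layout.
flat = [[[3.0, 3.0], [3.0, 3.0]]]   # one channel, 2 x 2
layout = [[0, 0], [1, 1]]           # top row: class 0, bottom row: class 1
gamma = [[1.0], [2.0]]              # illustrative values only
beta = [[0.5], [-0.5]]
restored = spade_normalize(flat, layout, gamma, beta)
```

In this toy case `restored` differs between the top and bottom rows even though the input activations were identical everywhere, because the shift is selected by the layout rather than shared across the whole channel.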