“Better Face Communication” by Morishima

Notice: Pod Template PHP code has been deprecated, please use WP Templates instead of embedding PHP. has been deprecated since Pods version 2.3 with no alternative available. in /data/siggraph/websites/history/wp-content/plugins/pods/includes/general.php on line 518

Conference:

- SIGGRAPH 1995

-

More from SIGGRAPH 1995:

Notice: Array to string conversion in /data/siggraph/websites/history/wp-content/plugins/siggraph-archive-plugin/src/next_previous/source.php on line 345

Notice: Array to string conversion in /data/siggraph/websites/history/wp-content/plugins/siggraph-archive-plugin/src/next_previous/source.php on line 345

Type(s):

Title:

- Better Face Communication

Program Title:

- Interactive Communities

Presenter(s):

Collaborator(s):

Project Affiliation:

- University of Toronto and Seikei University

Description:

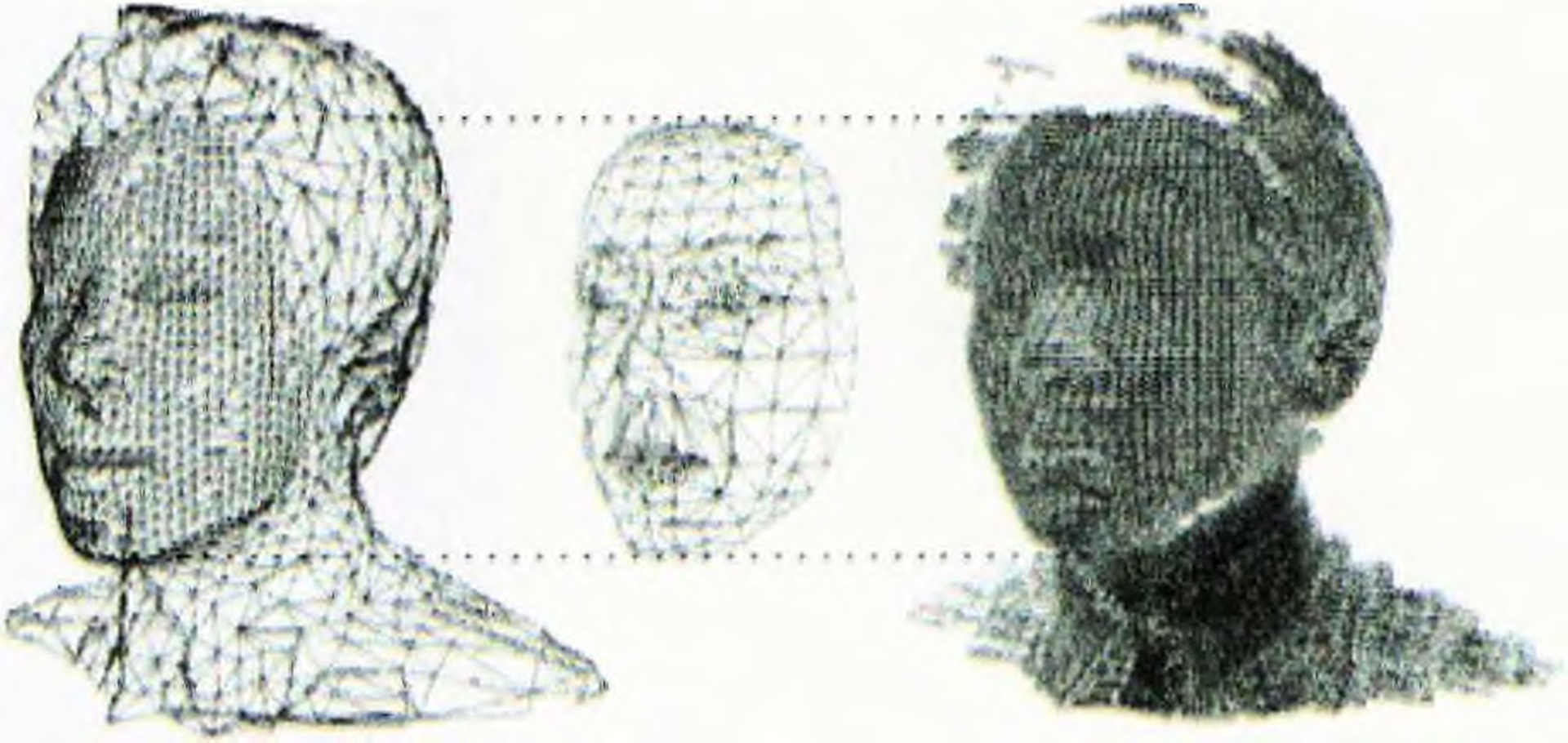

Better Face Communication presents visualization of a virtual face-to-face communication environment. A synthesized face is displayed with several kinds of expression, which echo words spoken in real time. The virtual face is natural and can be updated according to the participant’s image. Lip motion is also synchronized with the participant’s voice. Participants can converse with themselves or others in this environment.

In the model-making process, the image and training voice are recorded by camera and microphone. After adjustment for the feature positions along the outline of each programmed item on the face, a personal generic model is constructed, and texture is captured from the front image of the participant. At the same time, the participant can speak a few vowels into a microphone for the voice-training session.

In the voice analysis process, spectrum features are calculated frame by frame from the voice input. These parameters are applied to the input layer units of a neural network. Image feature parameters are obtained at the output layer units at 30 cycles per second. Mouth shape and jaw position are deformed by these parameters in this “media conversion” process.

In the image synthesis process, the 3D model is modified by several parameters related to facial expression and lip motion, and texture mapping is performed to display the synthesized face image. A 4000-polygon model can be synthesized faster than 30 frames per second by the Onyx Reality Engine2. Except for the mouth shape and jaw position, facial expression is applied manually. Image synthesis and voice analysis are performed synchronously through ethernet.

In the demonstration, communication and collaboration between the virtual operator and the participant control facial expressions. After a few minutes of training, the participant learns how to control facial expressions and lip movement with a pointing device and voice input.