“The Mouthesizer: A Facial Gesture Musical Interface” by Lyons, Haehnel and Tetsutani

Conference:

Title:

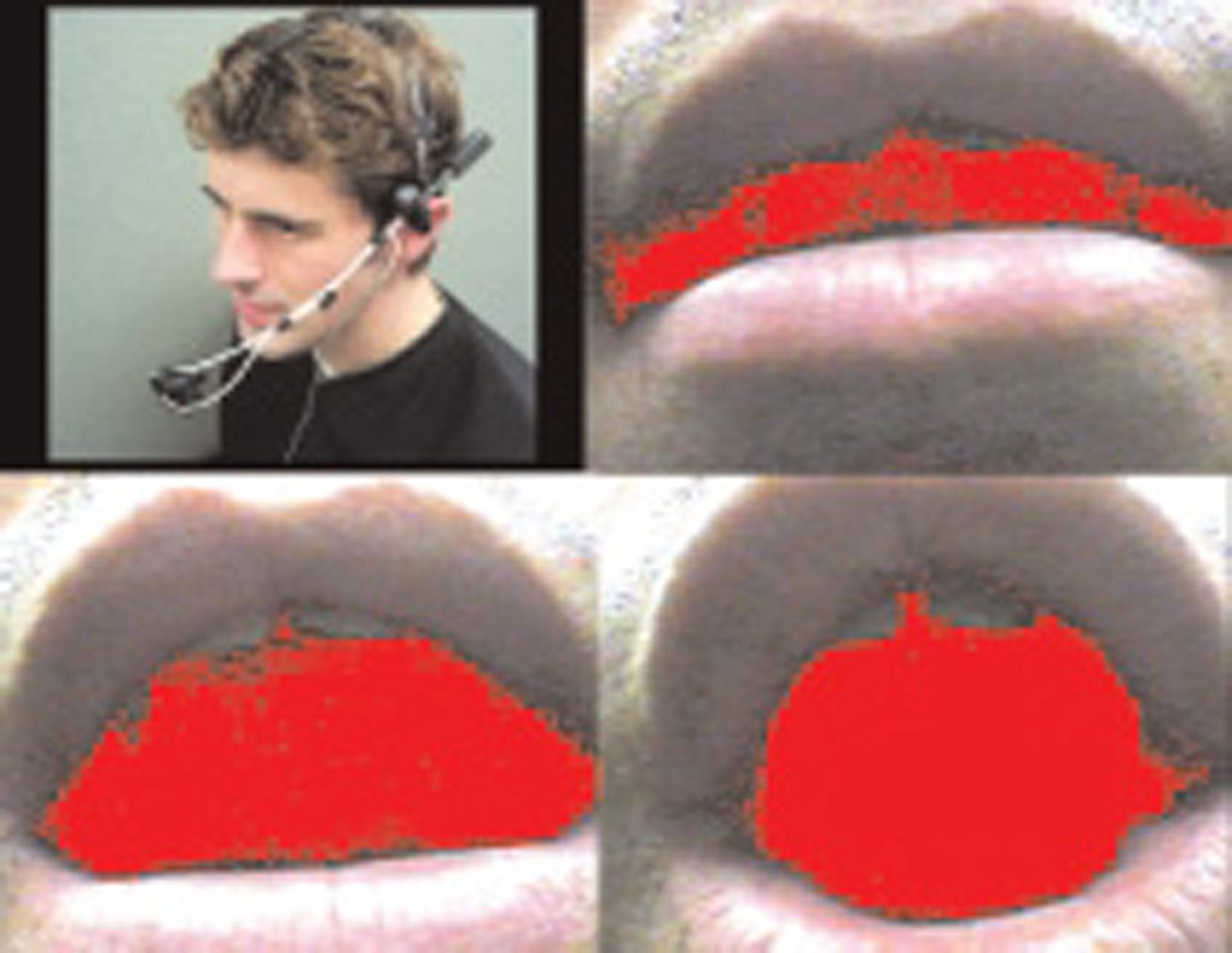

- The Mouthesizer: A Facial Gesture Musical Interface

Session/Category Title: Narrative Gestures

Presenter(s)/Author(s):

Interest Area:

- Technical

Abstract:

In this technical sketch, we introduce a new interaction paradigm: using facial gestures and expressions to control musical sound. The Mouthesizer, a prototype of this general concept, uses a miniature head-mounted camera to acquire video of the mouth region, extracts the mouth shape with a simple computer vision algorithm, and converts the shape parameters to MIDI commands that control a synthesizer or musical effects device.
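The last stage of that pipeline, turning shape parameters into MIDI commands, can be illustrated with a minimal sketch. It is not the authors' implementation: the choice of mouth width and height as the shape parameters, the pixel calibration ranges, the controller numbers, and every function name are assumptions made for illustration. Only the Control Change byte layout (status byte `0xB0` plus channel, followed by a controller number and a value, each in 0 to 127) comes from the MIDI standard.

```python
# Hypothetical calibration: smallest and largest mouth size in pixels.
WIDTH_RANGE = (20.0, 80.0)
HEIGHT_RANGE = (0.0, 60.0)

# Arbitrary controller numbers chosen for illustration only.
CC_WIDTH = 1
CC_HEIGHT = 74


def scale_to_midi(value, lo, hi):
    """Linearly map value from [lo, hi] to an integer in [0, 127], clamping."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    clamped = min(max(value, lo), hi)
    return round((clamped - lo) / (hi - lo) * 127)


def control_change(channel, controller, value):
    """Build a 3-byte MIDI Control Change message."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15")
    if not 0 <= controller <= 127 or not 0 <= value <= 127:
        raise ValueError("controller and value must be in 0..127")
    return bytes([0xB0 | channel, controller, value])


def mouth_to_midi(width_px, height_px, channel=0):
    """Convert one frame's mouth width and height into two CC messages."""
    return [
        control_change(channel, CC_WIDTH, scale_to_midi(width_px, *WIDTH_RANGE)),
        control_change(channel, CC_HEIGHT, scale_to_midi(height_px, *HEIGHT_RANGE)),
    ]


if __name__ == "__main__":
    # A wide, half-open mouth; print the raw bytes as hex.
    for message in mouth_to_midi(70.0, 30.0):
        print(message.hex(" "))
```

In a real system, the resulting bytes would be written to a MIDI output port once per video frame, and the calibration ranges would be measured for each performer.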

References:

1. Wanderley, M. & Battier, M., eds. (2000). Trends in gestural control of music, CD-ROM. IRCAM, Paris.

2. Cutler, M., Robair, G., & Bean. (2000). The outer limits: a survey of unconventional musical input devices. Electronic Musician, August 2000: 50–72.