“Gazecoppet: hierarchical gaze-communication in ambient space” by Yonezawa, Yamazoe, Utsumi and Abe

Conference:

Type(s):

Title:

- Gazecoppet: hierarchical gaze-communication in ambient space

Presenter(s)/Author(s):

- Yonezawa, Yamazoe, Utsumi and Abe

Abstract:

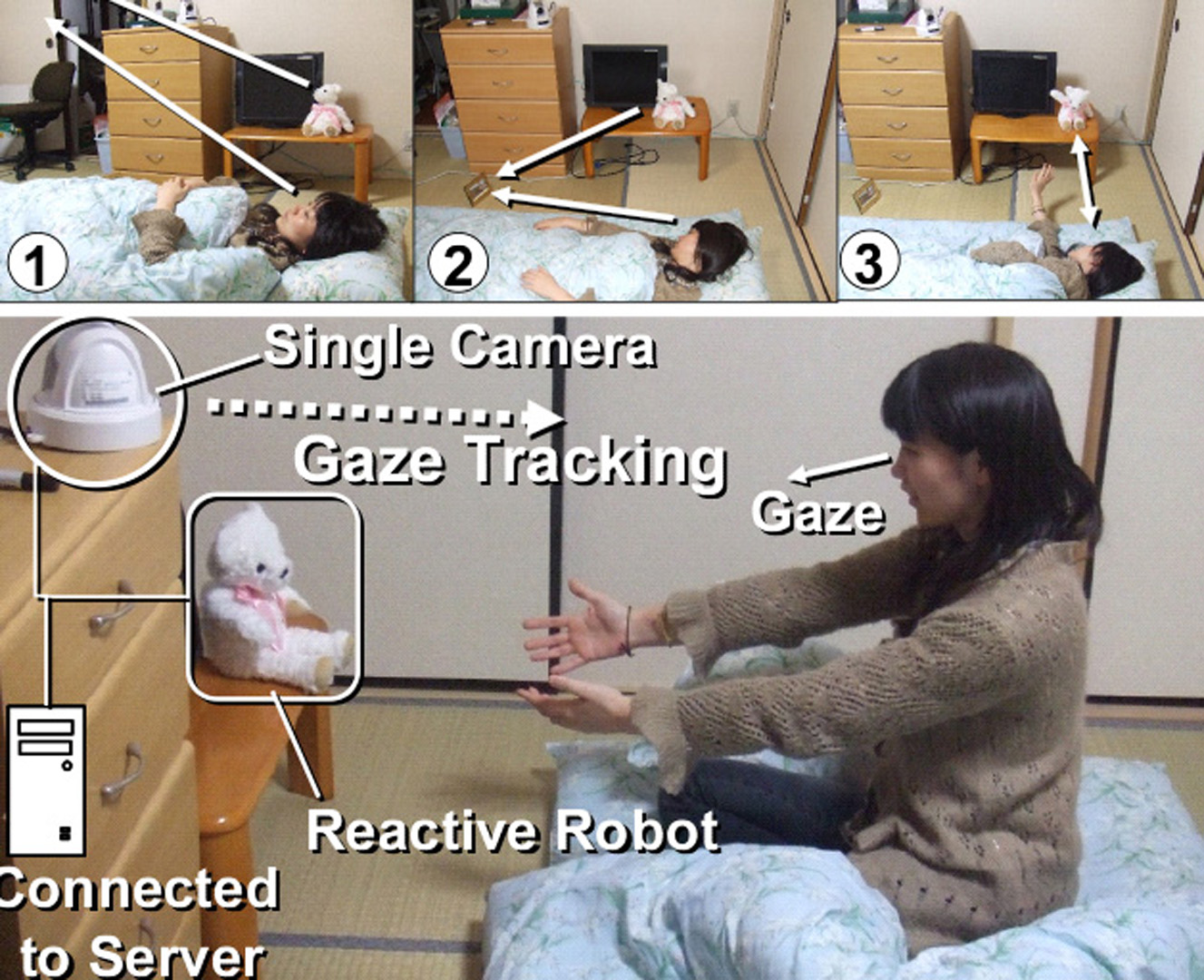

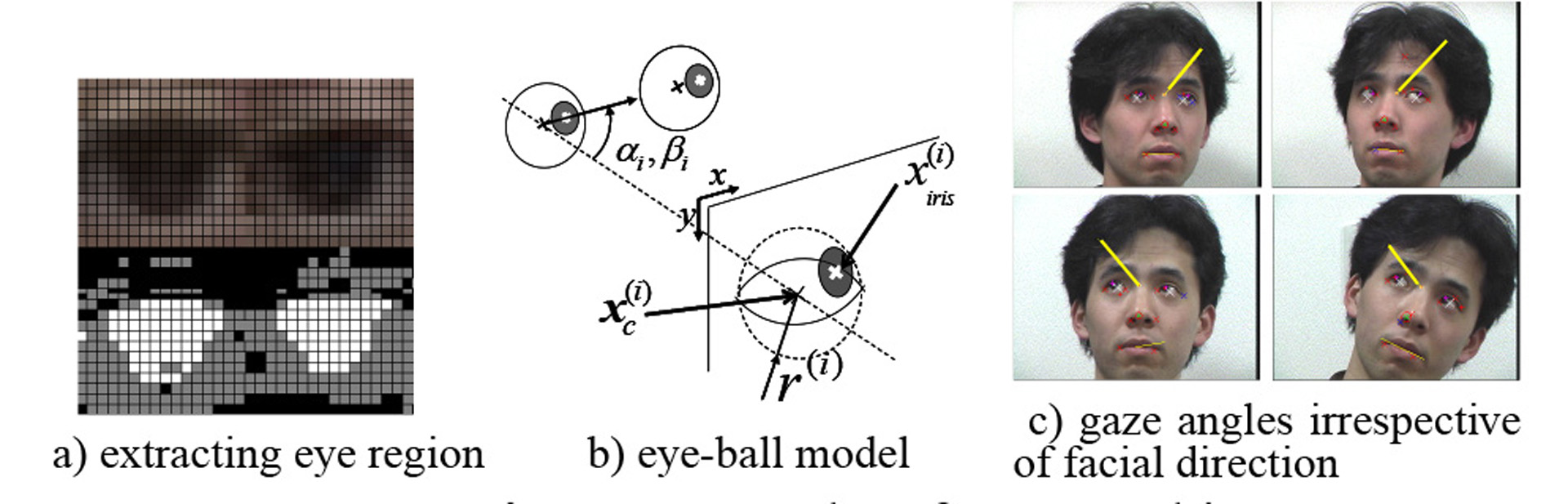

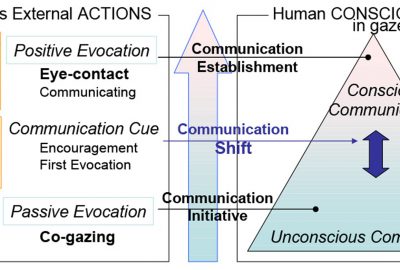

This research aims to naturally evoke human-robot communication in ambient space based on a hierarchical model of gaze-communication. The interactive ambient space is built from our remote gaze-tracking technology, which is based on image analysis, and our gaze-reactive robot system. A single remote camera detects the user’s gaze in unrestricted situations by using eyeball estimation. The robot’s gaze reacts in two ways, according to the user’s conscious or unconscious gaze: 1) “positive evocation” through direct eye contact with multimodal reactions, and 2) “passive evocation” through indirect co-gazing (watching a common object or place).
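The two reaction levels described above can be illustrated with a minimal sketch. This is not the authors' implementation: the names `GazeTarget`, `Reaction`, and `choose_reaction` are hypothetical, the sketch assumes the gaze tracker has already classified where the user is looking, and it does not model the conscious/unconscious distinction.

```python
from enum import Enum


class GazeTarget(Enum):
    """Hypothetical classification of the user's estimated gaze target."""
    ROBOT = "robot"      # the user is looking at the robot
    OBJECT = "object"    # the user is looking at some object or place
    NONE = "none"        # no usable gaze estimate


class Reaction(Enum):
    """Hypothetical robot reactions, one per level of the abstract's model."""
    EYE_CONTACT = "eye_contact"  # "positive evocation": direct eye contact
    CO_GAZE = "co_gaze"          # "passive evocation": look at the same target
    IDLE = "idle"                # assumption: do nothing without a gaze estimate


def choose_reaction(target: GazeTarget) -> Reaction:
    """Map the user's gaze target to a robot reaction (illustrative only)."""
    if target is GazeTarget.ROBOT:
        return Reaction.EYE_CONTACT
    if target is GazeTarget.OBJECT:
        return Reaction.CO_GAZE
    return Reaction.IDLE
```

In this sketch, a user looking at the robot triggers eye contact, while a user looking elsewhere triggers co-gazing. The multimodal reactions mentioned in the abstract would attach to the `EYE_CONTACT` branch.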

References:

1. A. Kendon. “Some functions of gaze-direction in social interaction.” Acta Psychologica, Vol. 26, pp. 22–63, 1967.

2. H. Kojima. “Infanoid: A babybot that explores the social environment.” In Socially Intelligent Agents, K. Dautenhahn, A. H. Bond, L. Canamero, and B. Edmonds (eds.), Kluwer Academic Publishers, pp. 157–164, 2002.

3. A. Santella, M. Agrawala, D. DeCarlo, D. Salesin, and M. Cohen, “Gaze-Based Interaction for Semi-Automatic Photo Cropping.” In CHI 2006, pp. 771–780, 2006.

4. D. Li, J. Babcock, and D. J. Parkhurst, “openEyes: A low-cost head-mounted eye-tracking solution.” Proc. ACM Eye Tracking Research and Applications Symposium, 2006.

5. SensoMotoric Instruments, “3D-VOG.” http://www.smi.de/3d/index.htm

Additional Images:

- 2007 Posters: Yonezawa_Gazecoppet: Hierarchical Gaze-communication in Ambient Space