“Local anatomically-constrained facial performance retargeting” by Chandran, Ciccone, Gross and Bradley

Title:

- Local anatomically-constrained facial performance retargeting

Abstract:

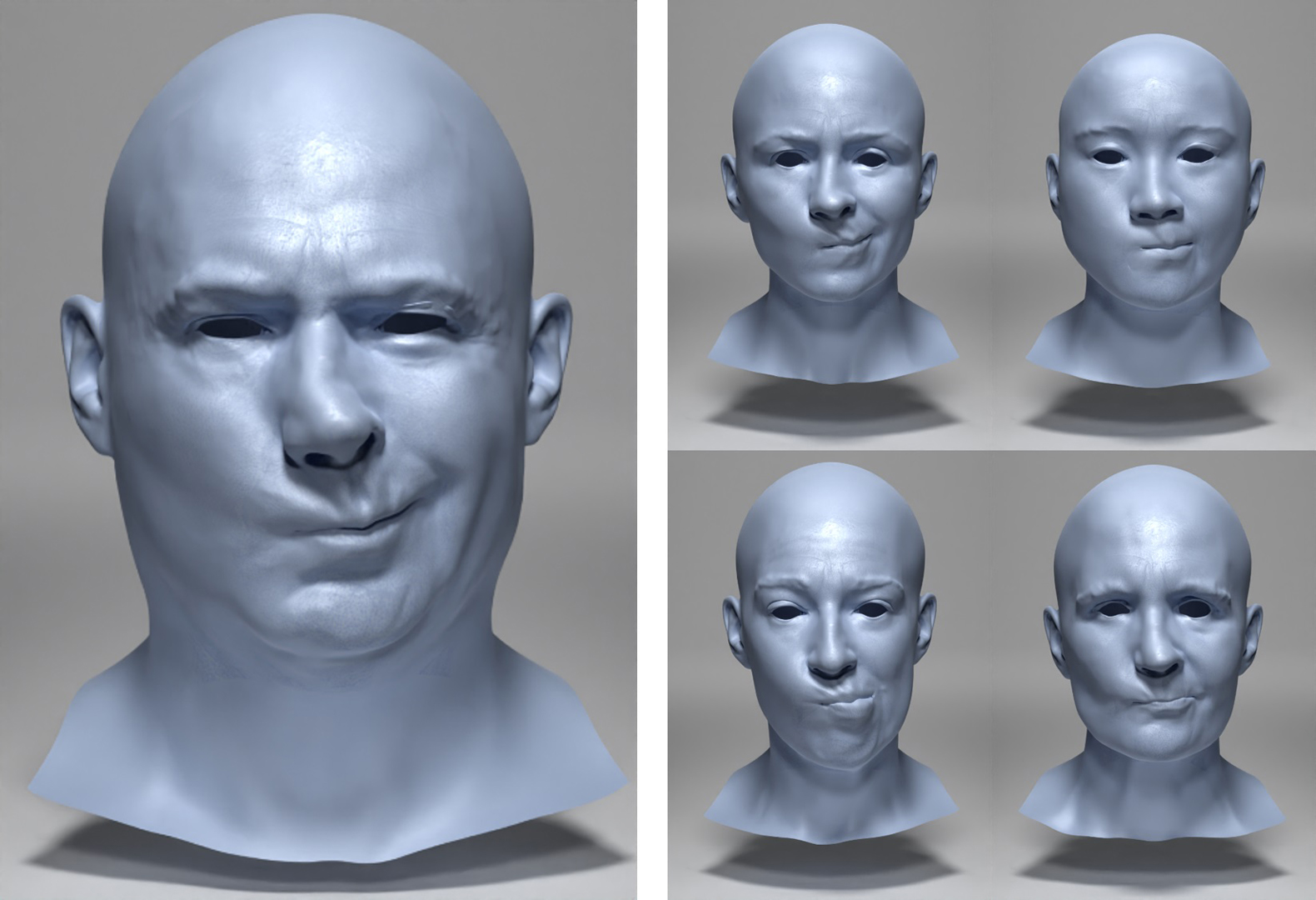

Generating realistic facial animation for CG characters and digital doubles is one of the hardest tasks in animation. A typical production workflow involves capturing the performance of a real actor using mo-cap technology and transferring the captured motion to the target digital character. This process, known as retargeting, has been used for over a decade. It typically relies either on large blendshape rigs that are expensive to create, or on direct deformation transfer algorithms that operate on individual geometric elements and are prone to artifacts. We present a new method for high-fidelity offline facial performance retargeting that is neither expensive nor artifact-prone. Our two-step method first transfers local expression details to the target, and then performs a global face surface prediction that uses anatomical constraints to stay in the feasible shape space of the target character. Our method also offers artists familiar blendshape controls for making fine adjustments to the retargeted animation. As such, our method is ideally suited for the complex task of human-to-human 3D facial performance retargeting, where the quality bar is extremely high in order to avoid the uncanny valley, while also being applicable to more common human-to-creature settings. We demonstrate the superior performance of our method over traditional deformation transfer algorithms, and show that it achieves quality comparable to current blendshape-based techniques used in production while requiring significantly fewer input shapes at setup time. A detailed user study corroborates the realistic and artifact-free animations generated by our method in comparison to existing techniques.
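The two-step structure described in the abstract (a local transfer followed by a global surface prediction) can be illustrated with a toy sketch. This is not the authors' method. It assumes vertex correspondence between actor and character, a small set of corresponding shapes on both (in the spirit of the blendshape controls mentioned above), and fixed overlapping patches. Per-patch least squares stands in for the local transfer, and plain averaging of overlapping patches stands in for the global prediction, with no anatomical constraints at all. All function names and data below are hypothetical.

```python
# Hypothetical toy sketch of a local-then-global retargeting pipeline.
# It is NOT the paper's algorithm: no anatomical constraints are modeled.
from typing import List, Sequence, Tuple

Vector = List[float]


def solve_least_squares(columns: Sequence[Vector], target: Vector,
                        reg: float = 1e-9) -> Vector:
    """Return w minimizing ||sum_k w[k] * columns[k] - target||^2.

    Solves the normal equations (A^T A + reg * I) w = A^T b with Gauss-Jordan
    elimination; the small ridge term `reg` keeps the system non-singular.
    """
    k = len(columns)
    ata = [[sum(a * b for a, b in zip(columns[i], columns[j])) + (reg if i == j else 0.0)
            for j in range(k)] for i in range(k)]
    atb = [sum(a * b for a, b in zip(columns[i], target)) for i in range(k)]
    m = [row + [rhs] for row, rhs in zip(ata, atb)]  # augmented matrix
    for col in range(k):
        # Partial pivoting: bring the largest remaining entry onto the diagonal.
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(k):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][k] / m[i][i] for i in range(k)]


def local_transfer(src_neutral: Vector, src_shapes: Sequence[Vector], src_expr: Vector,
                   tgt_neutral: Vector, tgt_shapes: Sequence[Vector],
                   patches: Sequence[List[int]]) -> List[Tuple[List[int], Vector]]:
    """Step 1 (stand-in): per patch, fit the actor's local displacement as a
    combination of source shape deltas, then replay those weights on the
    corresponding target shape deltas."""
    predictions = []
    for patch in patches:
        src_deltas = [[s[i] - src_neutral[i] for i in patch] for s in src_shapes]
        observed = [src_expr[i] - src_neutral[i] for i in patch]
        weights = solve_least_squares(src_deltas, observed)
        values = [tgt_neutral[i] + sum(w * (t[i] - tgt_neutral[i])
                                       for w, t in zip(weights, tgt_shapes))
                  for i in patch]
        predictions.append((list(patch), values))
    return predictions


def global_blend(tgt_neutral: Vector,
                 predictions: Sequence[Tuple[List[int], Vector]]) -> Vector:
    """Step 2 (simplified stand-in): average overlapping patch predictions per
    coordinate. Coordinates covered by no patch keep their neutral value."""
    total = [0.0] * len(tgt_neutral)
    count = [0] * len(tgt_neutral)
    for patch, values in predictions:
        for i, v in zip(patch, values):
            total[i] += v
            count[i] += 1
    return [total[i] / count[i] if count[i] else tgt_neutral[i]
            for i in range(len(tgt_neutral))]


if __name__ == "__main__":
    # Toy data: 4 scalar coordinates, 2 corresponding shapes per character.
    src_neutral = [0.0, 0.0, 0.0, 0.0]
    src_shapes = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
    src_expr = [0.5, 0.5, 0.2, 0.2]  # one captured actor frame
    tgt_neutral = [10.0, 10.0, 10.0, 10.0]
    tgt_shapes = [[12.0, 12.0, 10.0, 10.0], [10.0, 10.0, 13.0, 13.0]]
    patches = [[0, 1, 2], [1, 2, 3]]  # two overlapping patches
    preds = local_transfer(src_neutral, src_shapes, src_expr,
                           tgt_neutral, tgt_shapes, patches)
    print([round(v, 6) for v in global_blend(tgt_neutral, preds)])
    # prints [11.0, 11.0, 10.6, 10.6]
```

The averaging in `global_blend` marks where the abstract's anatomically constrained global prediction would go; a faithful version would replace it with a solve that keeps the result within the target character's feasible shape space.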
