“Deep reflectance fields: high-quality facial reflectance field inference from color gradient illumination” by Meka, Häne, Pandey, Zollhöfer, Fanello, et al. …

Conference:

Type(s):

Title:

- Deep reflectance fields: high-quality facial reflectance field inference from color gradient illumination

Session/Category Title:

- Relighting and View Synthesis

Presenter(s)/Author(s):

- Abhimitra Meka

- Christian Häne

- Rohit Pandey

- Michael Zollhöfer

- Sean Ryan Fanello

- Graham Fyffe

- Adarsh Kowdle

- Xueming Yu

- Jay Busch

- Jason Dourgarian

- Peter Denny

- Sofien Bouaziz

- Peter Lincoln

- Matt Whalen

- Geoff Harvey

- Jonathan Taylor

- Shahram Izadi

- Andrea Tagliasacchi

- Paul E. Debevec

- Christian Theobalt

- Julien P. C. Valentin

- Christoph Rhemann

Abstract:

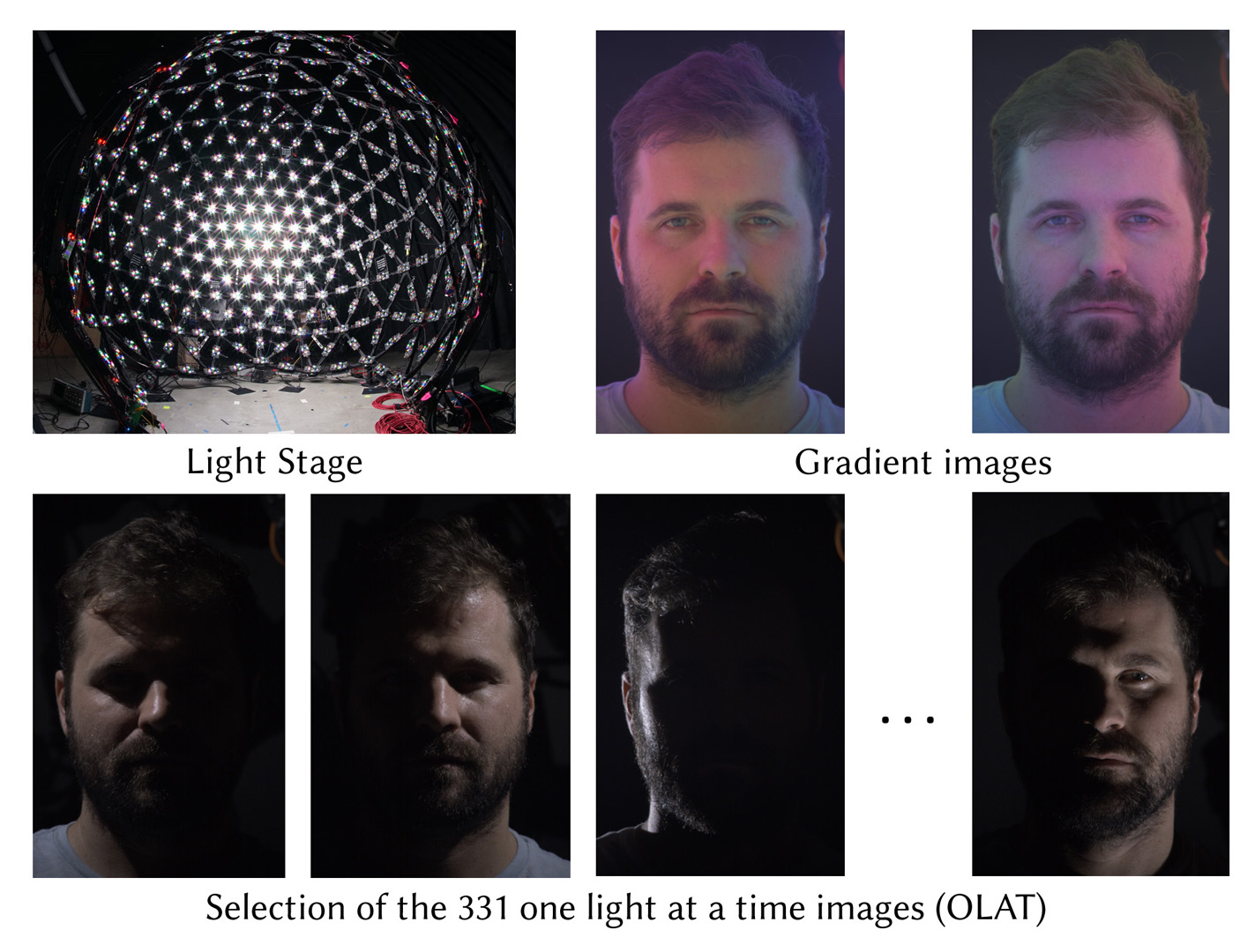

We present a novel technique to relight images of human faces by learning a model of facial reflectance from a database of 4D reflectance field data of several subjects in a variety of expressions and viewpoints. Using our learned model, a face can be relit in arbitrary illumination environments using only two original images recorded under spherical color gradient illumination. The output of our deep network indicates that the color gradient images contain the information needed to estimate the full 4D reflectance field, including specular reflections and high-frequency details. While capturing spherical color gradient illumination still requires a special lighting setup, the reduction to just two illumination conditions allows the technique to be applied to dynamic facial performance capture. We show side-by-side comparisons that demonstrate that the proposed system outperforms state-of-the-art techniques in both realism and speed.
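To make "relit in arbitrary illumination environments" concrete, the following is a minimal sketch of classic image-based relighting from a reflectance field. It is not the paper's network. It assumes the reflectance field is already available as a stack of one-light-at-a-time (OLAT) images, one per light direction, and that the target environment has been sampled to one RGB color per light. Because light transport is linear, the relit image is the per-channel weighted sum of the OLAT images. The function name `relight` and the toy data are hypothetical, and plain nested lists are used only to keep the example dependency-free.

```python
from typing import List, Sequence

Pixel = List[float]          # [r, g, b]
Image = List[List[Pixel]]    # image[y][x] -> pixel


def relight(olat_images: Sequence[Image],
            light_colors: Sequence[Sequence[float]]) -> Image:
    """Relight by superposition.

    Sums the OLAT images, each scaled per color channel by the
    environment color sampled in that light's direction.
    """
    if len(olat_images) != len(light_colors):
        raise ValueError("need one RGB weight per OLAT image")
    height, width = len(olat_images[0]), len(olat_images[0][0])
    out = [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]
    for image, color in zip(olat_images, light_colors):
        for y in range(height):
            for x in range(width):
                for c in range(3):
                    out[y][x][c] += color[c] * image[y][x][c]
    return out


# Hypothetical toy data: two lights and a 1x2-pixel image.
olat = [
    [[[1.0, 0.5, 0.25], [0.0, 0.0, 0.0]]],  # light 0 reaches only the left pixel
    [[[0.0, 0.0, 0.0], [0.5, 0.5, 0.5]]],   # light 1 reaches only the right pixel
]
# Environment: white light from direction 0, blue light from direction 1.
environment = [[1.0, 1.0, 1.0], [0.0, 0.0, 2.0]]
relit = relight(olat, environment)
```

In this sketch the left pixel keeps its light-0 appearance and the right pixel turns blue, since only the blue channel of light 1 is non-zero. According to the abstract, the paper's contribution is inferring the reflectance field from two color gradient images; a weighted sum like this one is only the standard way such a field can then be used for relighting.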
