“Self-Cam: feedback from what would be your social partner” by Teeters, El Kaliouby and Picard

Conference:

Type(s):

Title:

- Self-Cam: feedback from what would be your social partner

Presenter(s)/Author(s):

- Teeters
- El Kaliouby
- Picard

Abstract:

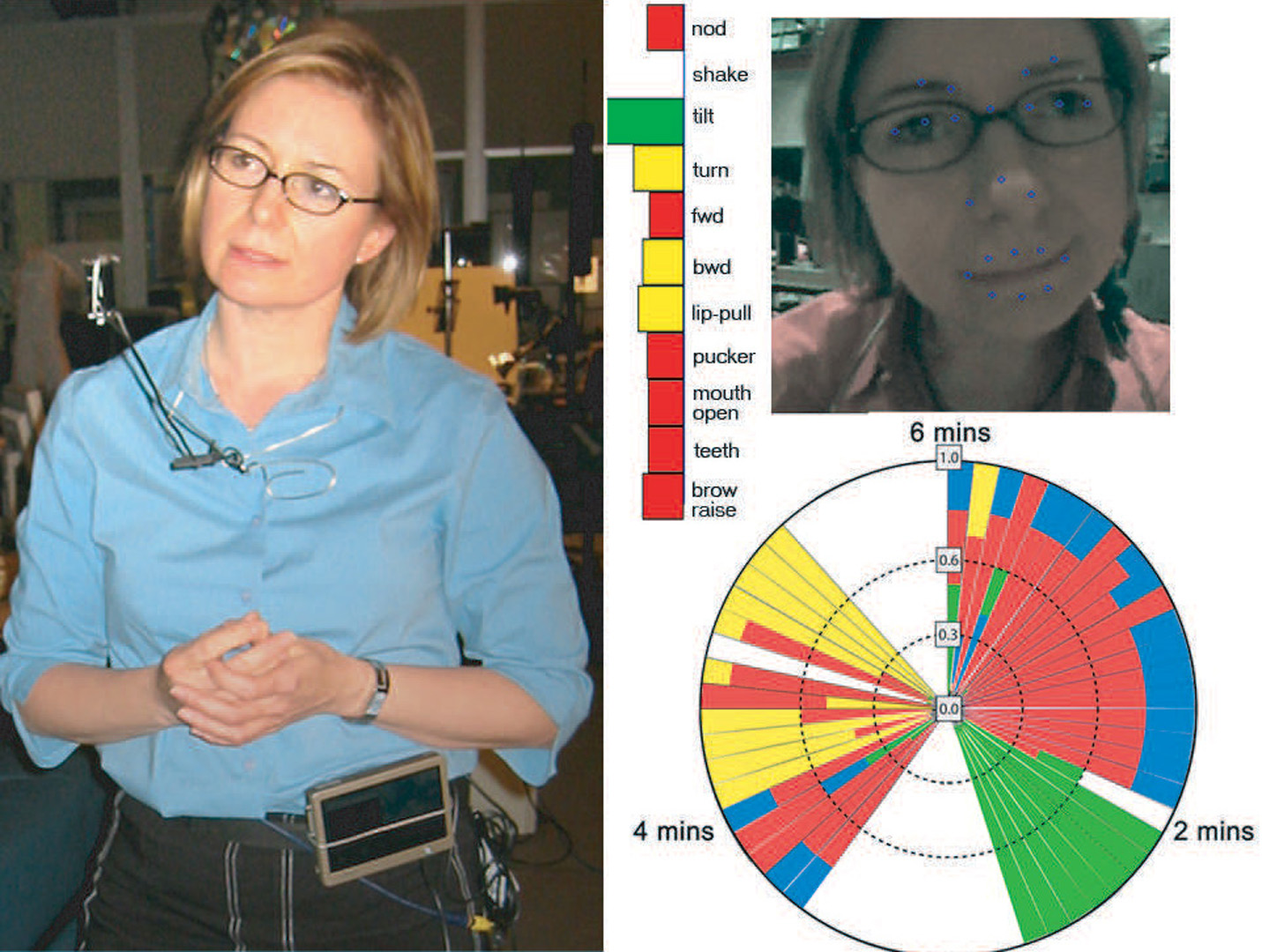

We present Self-Cam, a novel wearable camera system that analyzes its wearer's facial expressions and head gestures in real time and infers six underlying affective-cognitive states of mind: agreeing, disagreeing, interested, confused, concentrating, and thinking. A graphical interface summarizes the wearer’s states over time. The system allows you to explore what others may see in your face during natural interactions, e.g., “I looked ‘agreeing’ a lot when I spoke with that researcher I admire.” Self-Cams will be available for SIGGRAPH attendees to explore this technology’s novel point of view and gain insight into what their facial expressions reveal during natural human interaction.

References:

1. EyeTap (2004). http://www.eyetap.org.

2. El Kaliouby, R. (2005). Mind-Reading Machines: Automated Inference of Complex Mental States. PhD thesis, University of Cambridge, Computer Laboratory.