“Virtual Acoustic Environments: The Convolvotron” by Wenzel

Title:

- Virtual Acoustic Environments: The Convolvotron

Program Title:

- Demonstrations and Displays

Description:

Because acoustic modeling is computationally demanding, only free-field environments (spaces without echoes) have so far been simulated interactively in real time. The Convolvotron, an illustration of the acoustic design studio of the future, is a new development in the simulation of 3D audio systems and environments: it extends real-time interaction to a room with echoes.

This real-time interactive simulation of a simple room allows users to manipulate various environmental characteristics that affect sound quality. For example, while listening through headphones, users can hear changes in sound quality as they expand and contract the walls of a virtual room, move around the room, or change the position of a virtual musical instrument. By designing an artificial concert space, exploring the acoustic consequences of the design, and creating a new orchestral arrangement with a “live spatial mix” of individual musical tracks, the participant becomes the “architect” or “recording engineer.” The environmental parameters of the design are controlled with a mouse and an interactive, two-dimensional plan view, while a three-dimensional “window” on the space is provided by a Fake Space Labs desktop BOOM viewer with real-time, stereoscopic computer graphics.

Human aural perception presents interesting challenges to simulation developers. As sound propagates from a loudspeaker to a listener’s ears, reflection and diffraction effects alter the sound in subtle ways. Cues to the left-right dimension are based primarily on interaural differences in intensity and time of arrival, which arise because the two ears are horizontally separated. Other effects, resulting from the interaction of incoming sound waves with each person’s outer ear structures (the pinnae), depend on frequency and vary greatly with the direction of the sound source.
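The interaural time cue can be illustrated with a textbook approximation. The sketch below uses Woodworth’s rigid-sphere formula, which is not part of the original description (the Convolvotron captures these cues implicitly in measured HRTFs); the head radius and speed of sound are assumed typical values.

```python
import math

# Assumed constants (not from the original text): a typical adult head
# radius and the speed of sound in air at roughly room temperature.
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def woodworth_itd(azimuth_deg: float,
                  radius_m: float = HEAD_RADIUS_M,
                  c: float = SPEED_OF_SOUND_M_S) -> float:
    """Interaural time difference (seconds) for a distant source.

    Woodworth's rigid-sphere approximation: ITD = (a / c) * (theta + sin(theta)),
    valid for azimuths in [-90, 90] degrees (0 = straight ahead,
    positive = toward the right ear, giving a positive ITD).
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must lie in [-90, 90] degrees for this model")
    theta = math.radians(azimuth_deg)
    return (radius_m / c) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        print(f"{az:>3} deg -> ITD = {woodworth_itd(az) * 1e6:6.1f} microseconds")
```

With these assumed constants the largest delay, for a source directly to one side, is about 0.66 ms. A spherical head gives identical delays for mirror-image front and back positions, one reason timing cues alone cannot resolve front from back.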

It is clear that listeners use these kinds of frequency-dependent effects to discriminate one location from another, especially in the vertical dimension, and the effects appear to be critical for achieving externalization (the “outside-the-head” sensation). Recent research indicates that all of these effects can be expressed as a single filtering operation analogous to the effects of a graphic equalizer in a stereo system. The exact nature of this filter can be measured by a simple experiment in which a single, very short sound impulse, or click, is produced by a loudspeaker at a particular location. The acoustic shaping by the two ears in both intensity and phase (the Head-Related Transfer Function, or HRTF) is then measured by recording the output of small probe microphones placed inside the listener’s ear canals. If both ears are measured simultaneously, the responses, taken together as a pair of filters, include an estimate of the interaural difference cues.
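The filtering operation can be sketched as convolution of a mono source with a pair of head-related impulse responses (HRIRs, the time-domain form of the HRTF). This is a minimal pure-Python sketch: the toy HRIR values are hypothetical, and a real system would use measured responses and fast (FFT-based or dedicated DSP) convolution. Feeding in a single click reproduces each ear’s impulse response, which is why the click measurement captures the filter.

```python
from typing import List, Sequence, Tuple


def convolve(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Direct-form linear convolution; output length is len(signal) + len(kernel) - 1."""
    if not signal or not kernel:
        return []
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(kernel):
            out[i + j] += x * h
    return out


def render_binaural(mono: Sequence[float],
                    hrir_left: Sequence[float],
                    hrir_right: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Filter one mono source through a left/right HRIR pair for headphone playback."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical toy HRIRs for a source off to the listener's right: the right ear
# hears the sound first and louder; the left ear hears it two samples later,
# attenuated and slightly smeared. Measured HRIRs have far more taps.
HRIR_RIGHT = [0.9, 0.3, 0.1]
HRIR_LEFT = [0.0, 0.0, 0.5, 0.2, 0.1]

if __name__ == "__main__":
    click = [1.0, 0.0, 0.0, 0.0]  # a unit impulse, like the measurement click
    left, right = render_binaural(click, HRIR_LEFT, HRIR_RIGHT)
    print("left: ", left)
    print("right:", right)
```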

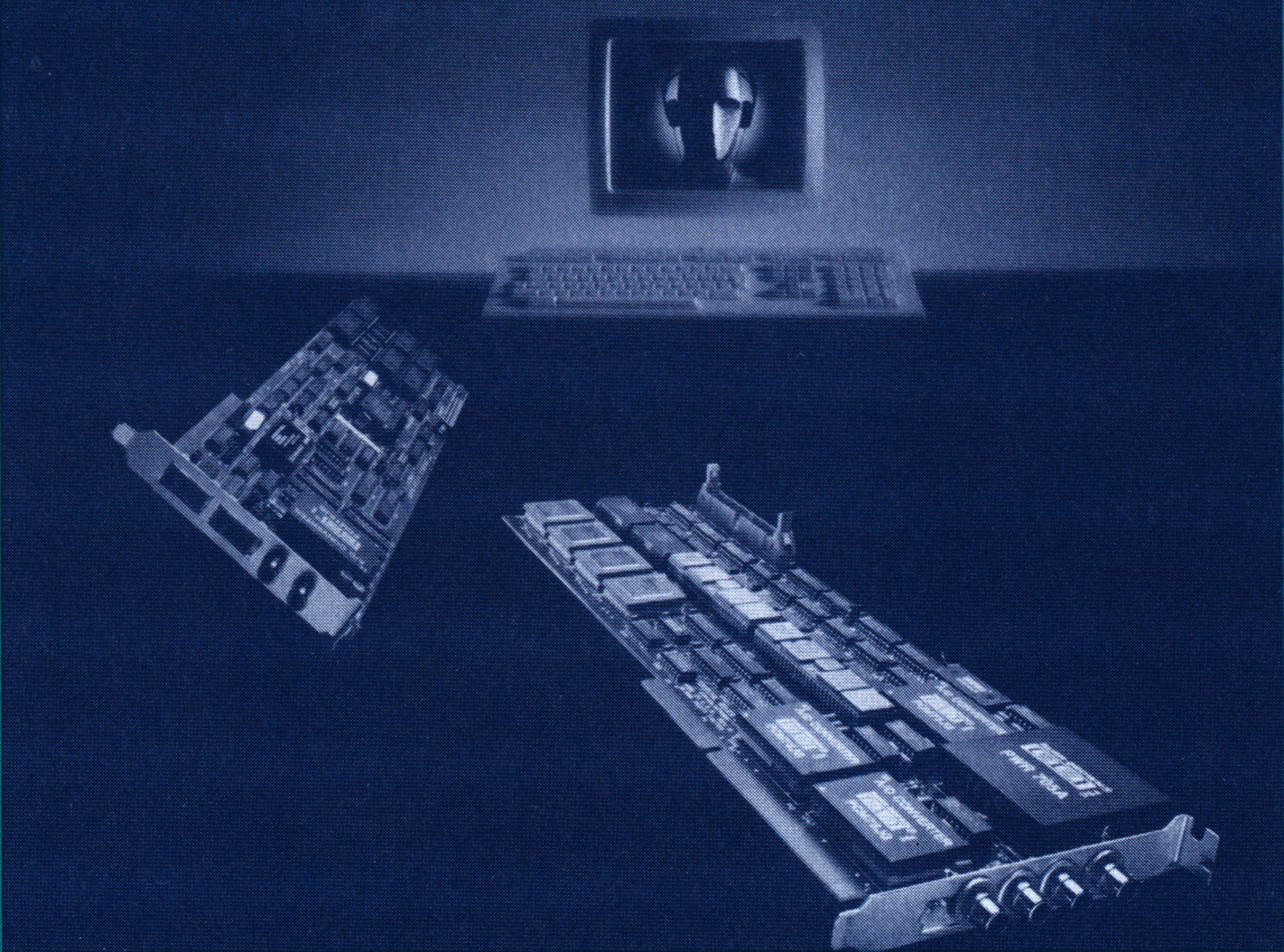

In The Convolvotron’s signal-processing system, designed by Crystal River Engineering in collaboration with NASA Ames, externalized, three-dimensional sound cues are synthesized over headphones using filters based on anechoic (echoless) measurements of the HRTF for many different locations. Multiple independent sources can be generated simultaneously in real time. Smooth, artifact-free motion trajectories, and static locations at finer resolution than the measured HRTFs, are simulated by four-way linear interpolation between measurements, and sources are stabilized in virtual space relative to the listener using a head-tracking system or the BOOM viewer. Because the HRTFs are measured from individual people, the system in effect allows one to “listen through someone else’s ears.”
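The four-way interpolation and head-tracking steps might look roughly like the following. This is a sketch under stated assumptions, not Crystal River’s implementation: it assumes HRIR pairs stored on a regular azimuth/elevation grid (the 30 and 18 degree spacings are hypothetical), blends the four surrounding pairs bilinearly tap by tap, and subtracts the tracked head yaw so sources stay fixed in the world. Azimuth is measured clockwise (toward the right) from straight ahead.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical measurement grid spacing; the real grid is not given in the text.
AZ_STEP = 30.0
EL_STEP = 18.0

HrirPair = Tuple[List[float], List[float]]  # (left, right) impulse responses


def head_relative_azimuth(source_az_deg: float, head_yaw_deg: float) -> float:
    """Source azimuth relative to the listener's nose, wrapped to [0, 360).

    Subtracting the tracked head yaw keeps the source fixed in the world:
    turning right by 30 deg moves a frontal source to 330 deg (30 deg left).
    """
    return (source_az_deg - head_yaw_deg) % 360.0


def _lerp(a: List[float], b: List[float], t: float) -> List[float]:
    """Tap-by-tap linear blend of two impulse responses."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolate_hrir(grid: Dict[Tuple[float, float], HrirPair],
                     az_deg: float, el_deg: float) -> HrirPair:
    """Bilinear ("four-way") blend of the four measured HRIR pairs around (az, el).

    Raises KeyError if any of the four neighbouring grid points is missing.
    """
    az_deg %= 360.0
    az0 = math.floor(az_deg / AZ_STEP) * AZ_STEP
    el0 = math.floor(el_deg / EL_STEP) * EL_STEP
    az1 = (az0 + AZ_STEP) % 360.0  # wrap around behind the listener
    el1 = el0 + EL_STEP
    ta = (az_deg - az0) / AZ_STEP  # fractional position between az0 and az1
    te = (el_deg - el0) / EL_STEP  # fractional position between el0 and el1

    result = []
    for ear in (0, 1):  # 0 = left, 1 = right
        low = _lerp(grid[(az0, el0)][ear], grid[(az1, el0)][ear], ta)
        high = _lerp(grid[(az0, el1)][ear], grid[(az1, el1)][ear], ta)
        result.append(_lerp(low, high, te))
    return result[0], result[1]
```

Blending impulse responses whose onsets differ in time can blur the interaural delay between grid points, so denser measurement grids or separate handling of the delay are common refinements.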

While the first Convolvotron implementation simulated only the direct paths from up to four virtual sources to the listener, it offered a high degree of interactivity. To provide more realistic cues and reduce perceptual errors such as front-back reversals and failures of externalization, it is desirable to bring the same level of interactivity to more complex acoustic environments.

The current implementation is based on the image model for simulating room characteristics and uses synthetic early reflections in a kind of “ray-tracing” algorithm. The presence of a reflecting surface, such as a wall, is simulated by placing a delayed “image” source behind the surface to account for the reflection of the source signal (as if a virtual loudspeaker were mounted behind the wall). What the listener hears is the superposition of the direct path from the source with the image sources coming from all the reflectors in the environment. Arbitrary source, reflector, and listener positions with variable listener orientation are possible. Overall reflection properties, such as high-frequency absorption by surfaces, can also be simulated.
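A first-order image-source calculation can be sketched for a rectangular room. The box geometry, the single frequency-independent reflection coefficient, the sample rate, and the speed of sound are assumptions chosen for brevity (the text describes arbitrary reflector positions and frequency-dependent absorption). Each image contributes a delayed, attenuated copy of the source, and superposing them gives a mono room impulse response; in a binaural renderer each arrival would additionally be filtered with the HRTF pair for its direction.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
SPEED_OF_SOUND_M_S = 343.0  # assumed value


def first_order_images(source: Vec3, room: Vec3) -> List[Vec3]:
    """Mirror the source across each of the six walls of a box room.

    The room spans [0, Lx] x [0, Ly] x [0, Lz]. Reflecting across the wall at
    coordinate w maps that coordinate p to 2w - p.
    """
    images = []
    for axis in range(3):
        for wall in (0.0, room[axis]):
            p = list(source)
            p[axis] = 2.0 * wall - p[axis]
            images.append((p[0], p[1], p[2]))
    return images


def arrivals(source: Vec3, listener: Vec3, room: Vec3,
             reflection_coeff: float = 0.8,
             c: float = SPEED_OF_SOUND_M_S) -> List[Tuple[float, float]]:
    """(delay in seconds, gain) for the direct path plus six first-order reflections.

    Gain follows 1/r spherical spreading; each reflection is further scaled by
    one assumed, frequency-independent wall reflection coefficient.
    Results are sorted by delay, so the direct path comes first.
    """
    paths = [(source, 1.0)] + [(img, reflection_coeff)
                               for img in first_order_images(source, room)]
    result = []
    for pos, wall_gain in paths:
        r = math.dist(pos, listener)
        result.append((r / c, wall_gain / max(r, 1e-3)))
    return sorted(result)


def impulse_response(paths: List[Tuple[float, float]],
                     sample_rate: int = 44100) -> List[float]:
    """Superpose all arrivals into one impulse response (delays rounded to samples)."""
    length = int(round(max(d for d, _ in paths) * sample_rate)) + 1
    h = [0.0] * length
    for delay, gain in paths:
        h[int(round(delay * sample_rate))] += gain
    return h


if __name__ == "__main__":
    room = (10.0, 8.0, 3.0)
    for delay, gain in arrivals((2.0, 3.0, 1.5), (6.0, 4.0, 1.5), room):
        print(f"delay {delay * 1000:6.2f} ms   gain {gain:.3f}")
```

Moving a wall, the source, or the listener simply changes the image positions, so the whole set of delays and gains can be recomputed each frame, which is what makes this model attractive for interactive use.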

The human performance advantages of systems such as The Convolvotron include the ability to monitor information from all possible directions (not just the direction of gaze), enhanced intelligibility and segregation of multiple sound streams, and, in multi-sensory systems, information reinforcement combined with a greater sense of presence or realism. Just as a movie with sound is much more compelling and informationally rich than a silent film, computer interfaces can be enhanced by an appropriate three-dimensional “sound track.”

In addition to simulation of acoustic environments for architectural design, music recording, and entertainment, possible applications include air traffic control and cockpit displays for aviation; applications involving encoded sound, such as the acoustic “visualization” of multi-dimensional data and alternative interfaces for the blind; and other telepresence environments, including advanced teleconferencing, shared electronic workspaces, and monitoring telerobotic activities in hazardous situations.

Other Information:

Sound Design: Earwax Productions

Hardware: Crystal River Engineering Convolvotron, Fake Space Labs BOOM 2 viewer, Silicon Graphics workstations

Application: Architectural acoustics

Type of System: Player, single-user

Interaction Class: Immersive, inclusive

Acknowledgements:

The authors gratefully acknowledge the important contributions of Fake Space Labs, Telepresence Research, and Phil Stone (Sterling Software, NASA Ames) for the visual displays in this demonstration.