“Real-time expression transfer for facial reenactment”

Title:

- Real-time expression transfer for facial reenactment

Session/Category Title: Faces and Characters

Abstract:

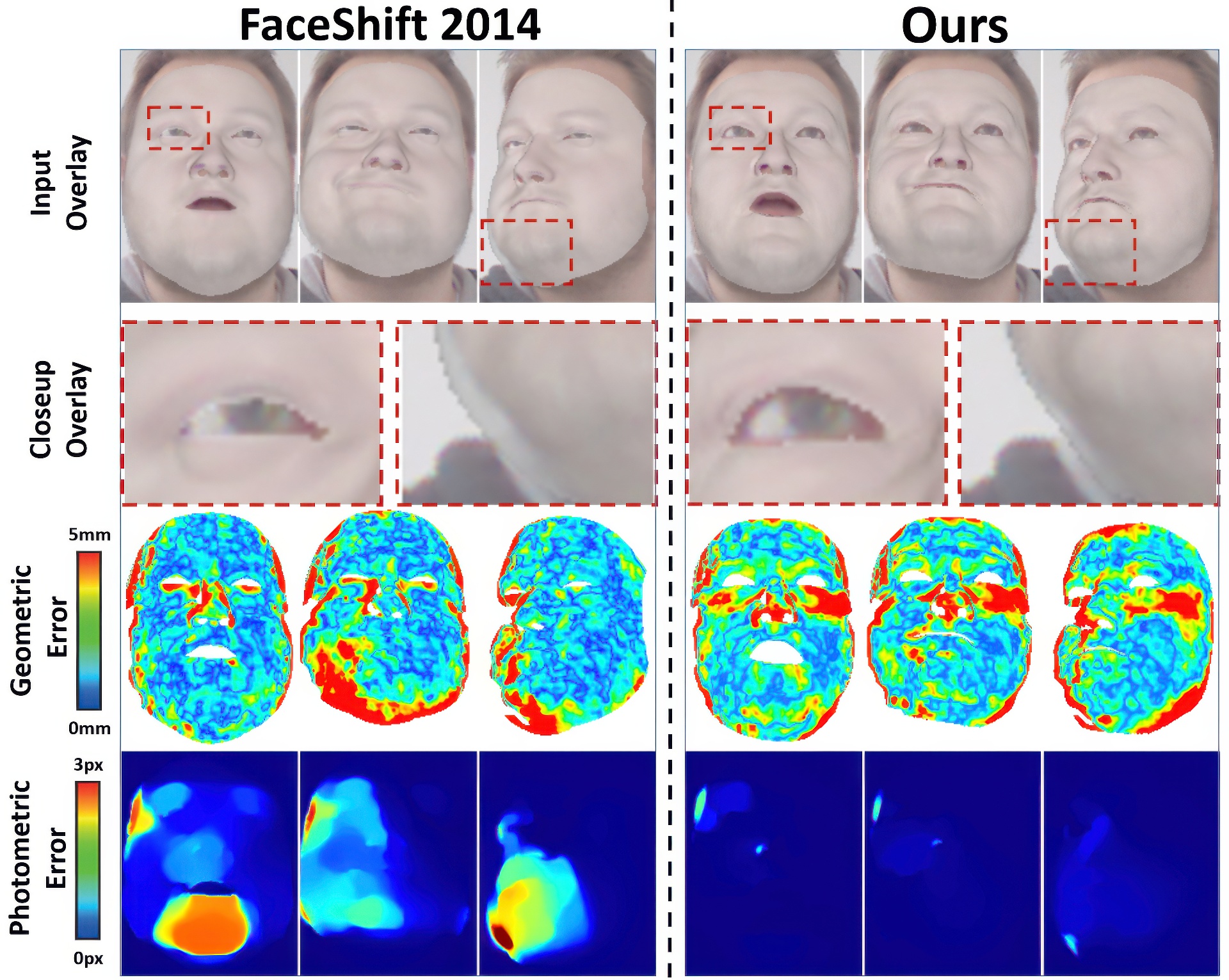

We present a method for the real-time transfer of facial expressions from an actor in a source video to an actor in a target video, enabling ad-hoc control of the target actor's facial expressions. The novelty of our approach lies in the transfer and photorealistic re-rendering of facial deformations and detail into the target video, such that the newly synthesized expressions are virtually indistinguishable from a real video. To achieve this, we accurately capture the facial performances of the source and target subjects in real time using a commodity RGB-D sensor. For each frame, we jointly fit a parametric model for identity, expression, and skin reflectance to the input color and depth data, and also reconstruct the scene lighting. For expression transfer, we compute the difference between the source and target expressions in parameter space and modify the target parameters to match the source expressions. A major challenge is the convincing re-rendering of the synthesized target face into the corresponding video stream. This requires careful consideration of the lighting and shading design, both of which must correspond to the real-world environment. We demonstrate our method in a live setup, where we modify a video conference feed such that the facial expressions of a different person (e.g., a translator) are matched in real time.
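The parameter-space transfer step can be illustrated with a minimal sketch. It assumes a linear face model in which geometry is a mean shape plus identity and expression bases weighted by coefficient vectors. The abstract does not specify the model's exact form, so `FaceParams`, `transfer_expression`, `synthesize`, and the `strength` parameter are hypothetical names introduced here for illustration only, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]


@dataclass
class FaceParams:
    """Hypothetical per-frame parameters of a linear face model (an assumption)."""
    identity: Vector    # shape coefficients, fixed per person
    expression: Vector  # expression coefficients, vary per frame


def transfer_expression(source: FaceParams, target: FaceParams,
                        strength: float = 1.0) -> FaceParams:
    """Move the target's expression coefficients toward the source's.

    strength=1.0 fully matches the source expression; the target's
    identity coefficients are left untouched.
    """
    if len(source.expression) != len(target.expression):
        raise ValueError("expression vectors must share one basis")
    # Difference between source and target expressions in parameter space.
    delta = [s - t for s, t in zip(source.expression, target.expression)]
    new_expr = [t + strength * d for t, d in zip(target.expression, delta)]
    return FaceParams(identity=list(target.identity), expression=new_expr)


def synthesize(mean: Vector, id_basis: List[Vector], exp_basis: List[Vector],
               params: FaceParams) -> Vector:
    """Evaluate mean + id_basis @ identity + exp_basis @ expression."""
    out = list(mean)
    for i in range(len(out)):
        out[i] += sum(b * a for b, a in zip(id_basis[i], params.identity))
        out[i] += sum(b * d for b, d in zip(exp_basis[i], params.expression))
    return out
```

Calling `transfer_expression(source, target)` returns parameters that keep the target's identity but carry the source's expression; passing the result to `synthesize` with the model bases would give the modified geometry. Re-rendering that geometry under the reconstructed lighting is a separate step not covered by this sketch.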
