“VR facial animation via multiview image translation” by Wei, Saragih, Simon, Harley, Lombardi, et al. …

Title:

- VR facial animation via multiview image translation

Session/Category Title:

- Neural Rendering

Abstract:

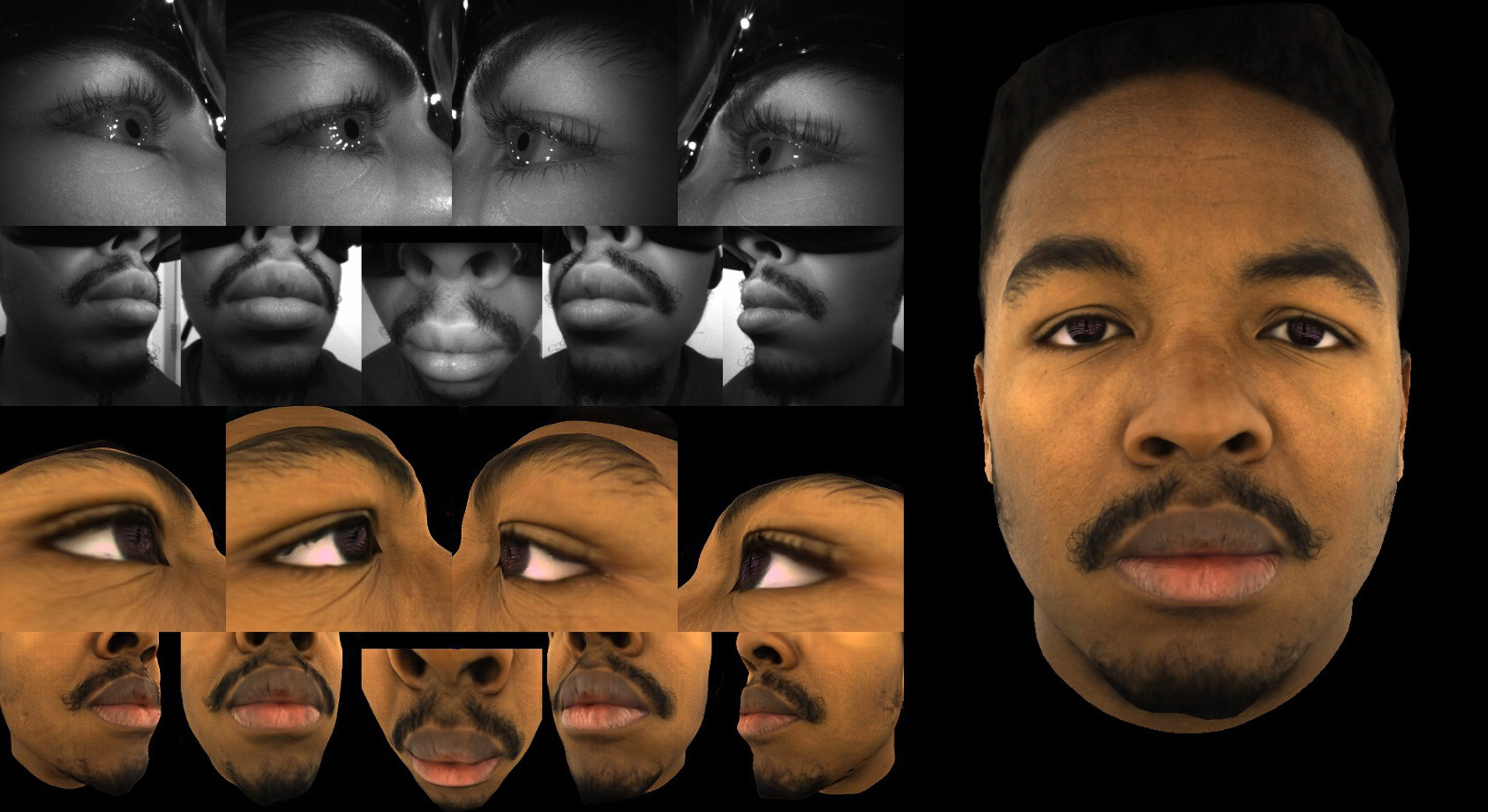

A key promise of Virtual Reality (VR) is the possibility of remote social interaction that is more immersive than any prior telecommunication medium. However, existing social VR experiences are mediated by inauthentic digital representations of the user (i.e., stylized avatars). These stylized representations have limited the adoption of social VR applications in precisely those cases where immersion is most necessary (e.g., professional interactions and intimate conversations). In this work, we present a bidirectional system that can animate avatar heads bearing the full likeness of both users, using consumer-friendly headset-mounted cameras (HMCs). There are two main challenges in doing this: unaccommodating camera views and the image-to-avatar domain gap. We address both challenges by leveraging constraints imposed by multiview geometry to establish precise image-to-avatar correspondences, which are then used to learn an end-to-end model for real-time tracking. We present designs for a training HMC, aimed at data collection and model building, and a tracking HMC for use during interactions in VR. Correspondences between the avatar and the HMC-acquired images are found automatically through self-supervised multiview image translation, which requires neither manual annotation nor one-to-one correspondence between domains. We evaluate the system on a variety of users and demonstrate significant improvements over prior work.
