“Volumetric appearance stylization with stylizing kernel prediction network” by Guo, Li, Zong, Liu, He, et al. …

Title:

- Volumetric appearance stylization with stylizing kernel prediction network

Abstract:

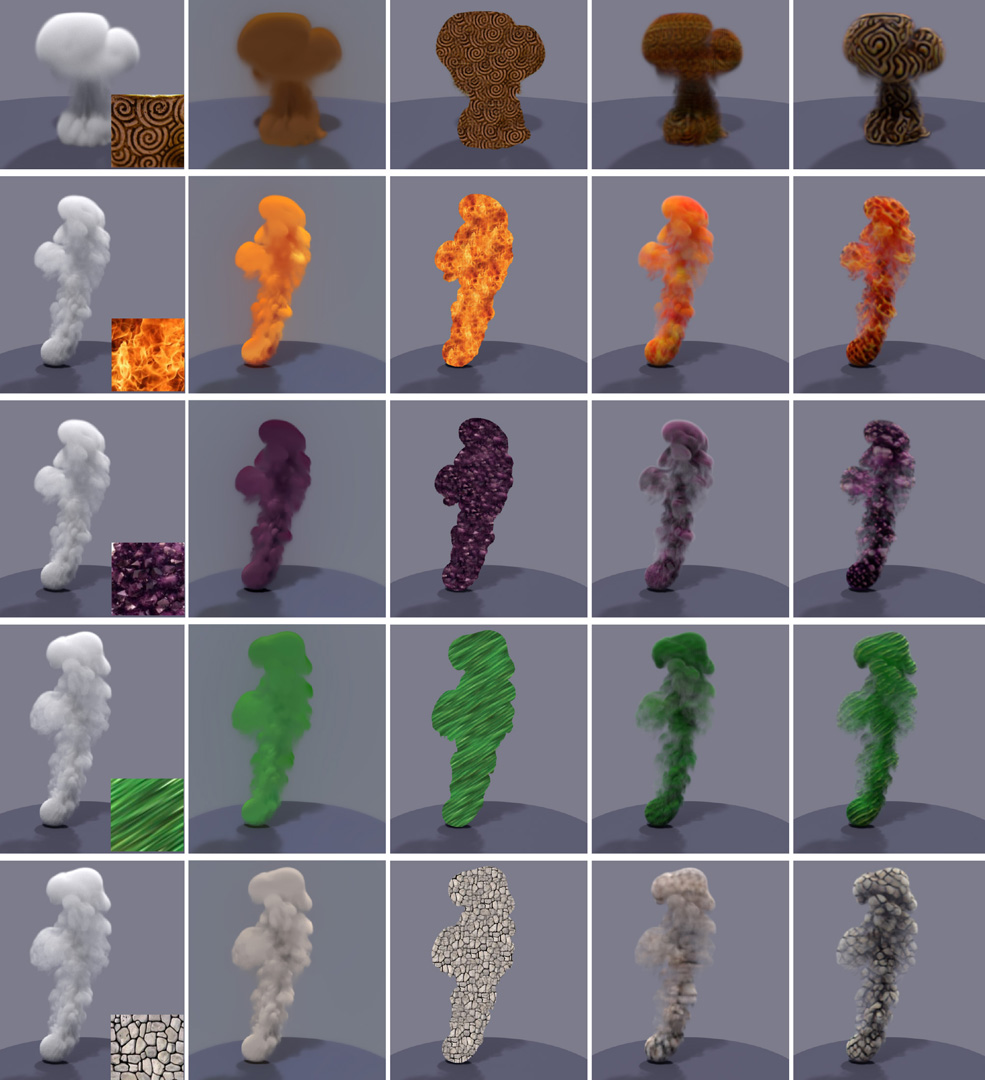

This paper aims to efficiently construct a volume of heterogeneous single-scattering albedo for a given medium that leads to a desired color appearance. We achieve this goal by formulating it as a volumetric style transfer problem in which an input 3D density volume is stylized using color features extracted from a reference 2D image. Unlike existing algorithms that require cumbersome iterative optimization, our method leverages a feed-forward deep neural network with multiple well-designed modules. At the core of our network is a stylizing kernel predictor (SKP) that extracts multi-scale feature maps from a 2D style image and predicts a handful of stylizing kernels as a highly non-linear combination of the feature maps. Each group of stylizing kernels represents a specific style. A volume autoencoder (VolAE) is designed and jointly learned with the SKP to transform a density volume into an albedo volume based on these stylizing kernels. Since the autoencoder does not encode any style information, it can generate different albedo volumes with a wide range of appearances once training is completed. Additionally, a hybrid multi-scale loss function is used to learn plausible color features and guarantee temporal coherence for time-evolving volumes. Through comprehensive experiments, we validate the effectiveness of our method and show its superiority by comparing it against state-of-the-art methods. We show that with our method a novice user can easily create a diverse set of realistic translucent effects for 3D models (either static or dynamic) without any cumbersome parameter tuning.
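The data flow described in the abstract (style image → predicted kernels → style-agnostic volume transform → albedo volume) can be illustrated with a toy sketch. This is not the paper's network: the learned SKP is replaced here by hand-written per-channel colour statistics, the VolAE by a single 1×1×1 affine map per voxel, and the names `predict_kernels` and `stylize` are hypothetical. The only point it makes is that the volume transform holds no style information, so swapping the style image changes the output without touching the transform.

```python
from statistics import mean, pstdev

def predict_kernels(style_pixels):
    """Toy stand-in for a learned kernel predictor (assumption, not the SKP):
    returns one (weight, bias) pair per RGB channel from the style image."""
    kernels = []
    for c in range(3):
        values = [p[c] for p in style_pixels]
        kernels.append((pstdev(values), mean(values)))
    return kernels

def stylize(density, kernels):
    """Apply the per-channel 1x1x1 kernels to every voxel of a nested-list
    density volume; returns an RGB albedo volume clamped to [0, 1]."""
    flat = [d for plane in density for row in plane for d in row]
    mu = mean(flat)
    sigma = pstdev(flat) or 1.0  # avoid division by zero for constant volumes

    def voxel(d):
        z = (d - mu) / sigma  # normalised density
        return tuple(min(1.0, max(0.0, w * z + b)) for w, b in kernels)

    return [[[voxel(d) for d in row] for row in plane] for plane in density]

# Made-up 2x2x2 density volume and two made-up 3-pixel "style images".
density = [[[0.0, 0.2], [0.4, 0.6]], [[0.8, 1.0], [0.5, 0.5]]]
warm = [(0.9, 0.3, 0.1), (0.7, 0.2, 0.1), (0.8, 0.4, 0.2)]
cool = [(0.1, 0.3, 0.9), (0.2, 0.4, 0.7), (0.1, 0.2, 0.8)]

albedo_warm = stylize(density, predict_kernels(warm))
albedo_cool = stylize(density, predict_kernels(cool))
```

The same `stylize` function serves both style images; only the predicted kernels differ, which mirrors the abstract's remark that one trained autoencoder can produce many appearances.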
