“Voice puppetry” by Brand

Conference:

Type(s):

Title:

- Voice puppetry

Presenter(s)/Author(s):

Abstract:

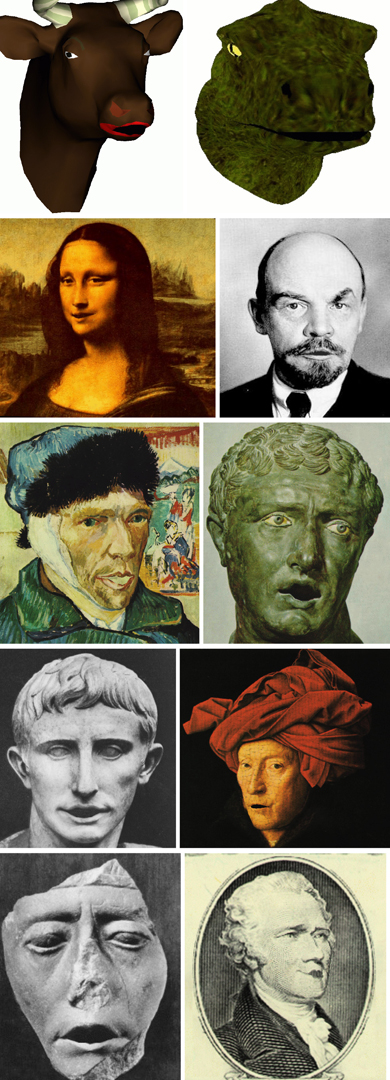

We introduce a method for predicting a control signal from another related signal, and apply it to voice puppetry: Generating full facial animation from expressive information in an audio track. The voice puppet learns a facial control model from computer vision of real facial behavior, automatically incorporating vocal and facial dynamics such as co-articulation. Animation is produced by using audio to drive the model, which induces a probability distribution over the manifold of possible facial motions. We present a linear-time closed-form solution for the most probable trajectory over this manifold. The output is a series of facial control parameters, suitable for driving many different kinds of animation ranging from video-realistic image warps to 3D cartoon characters.

References:

1. J.E. Ball and D.T. Ling. Spoken language processing in the Persona conversational assistant. In Proc. ESCA Workshop on Spoken Dialogue Systems, 1995.

2. L. Baum. An inequality and associated maximization technique in statistical estimation of probabilistic functions of Markov processes. Inequalities, 3:1-8, 1972.

3. C. Benoit, C. Abry, M.-A. Cathiard, T. Guiard-Marigny, and T. Lallouache. Read my lips: Where? How? When? And so.. What? In 8th Int. Congress on Event Perception and Action, Marseille, France, July 1995. Springer-Verlag.

4. M. Brand. Structure discovery in conditional probability models via an entropic prior and parameter extinction. Neural Computation (accepted 8/98), October 1997.

5. M. Brand. Pattern discovery via entropy minimization. In Proc. Artificial Intelligence and Statistics #7, Morgan Kaufmann Publishers. January 1999.

6. M. Brand. Shadow puppetry. Submitted to Int. Conf. on Computer Vision, ICCV ‘ 99, 1999.

7. C. Bregler, M. Covell, and M. Slaney. Video Rewrite: Driving visual speech with audio. In Proc. ACM SIGGRAPH ’97, 1997.

8. T. Chen and R. Rao. Audio-visual interaction in nultimedia communication. In Proc. ICASSP ‘ 97, 1997.

9. M.M. Cohen and D.W. Massaro. Modeling coarticulation in synthetic visual speech. In N.M. Thalmann and D. Thalmann, editors, Models and Techniques in Computer Animation. Springer-Verlag, 1993.

10. S. Curinga, F. Lavagetto, and F. Vignoli. Lip movement sythesis using time delay neural networks. In Proc. EUSIPCO ‘ 96, 1996.

11. E Ekman and W.V. Friesen. Manual for the Facial Action Coding System. Consulting Psychologists Press, Inc., Palo Alto, CA, 1978.

12. T. Ezzat and T. Poggio. MikeTalk: A talking facial display based on morphing visemes. In Proc. Computer Animation Conference, June 1998.

13. G.D. Forney. The Viterbi algorithm. Proc. IEEE, 6:268-278, 1973.

14. G.H. Golub and C.F. van Loan. Matrix Computations. Johns Hopkins, 1996. 3rd edition.

15. G. Hager and K. Toyama. The XVision system: A generalpurpose substrate for portable real-time vision applications. Computer Vision and Image Understanding, 69(1) pp. 23-37. 1997.

16. H. Hermansky and N. Morgan. RASTA processing of speech. IEEE Transactions on Speech and Audio Processing, 2(4):578-589, October 1994.

17. I. Katunobu and O. Hasegawa. An active multimodal interaction system. In Proc. ESCA Workshop on Spoken Dialogue Systems, 1995.

18. J.E Lewis. Automated lip-sync: Background and techniques. J. Visualization and Computer Animation, 2:118-122, 1991.

19. D.F. McAllister, R.D. Rodman, and D.L. Bitzer. Speaker independence in lip synchronization. In Proc. CompuGraphics ‘ 97, December 1997.

20. H. McGurk and J. MacDonald. Hearing lips and seeing voices. Nature, 264:746-748, 1976.

21. K. Stevens (MIT). Personal communication., 1998.

22. S. Morishima and H. Harashima. A media conversion from speech to facial image for intelligent man-machine interface. IEEE J. Selected Areas in Communications, 4:594-599, 1991.

23. F.I. Parke. A parametric model for human faces. Technical Report UTEC-CSc-75-047, University of Utah, 1974.

24. F.I. Parke. A model for human faces that allows speech synchronized animation. J. Computers and Graphics, 1(1): 1- 4, 1975.

25. M. Rydfalk. CANDIDE, a parameterised face. Technical Report LiTH-ISY-I-0866, Department of Electrical Engineering, Link/Sping University, Sweden, October 1987. Java demo available at http://www.bk.isy.liu.se/candide/candemo.html.

26. L.K. Saul and M.I. Jordan. A variational principle for model-based interpolation. Technical report, MIT Center for Biological and Computational Learning, 1996.

27. E.F. Walther. Lipreading. Nelson-Hall Inc., Chicago, 1982.

28. K. Waters and T. Levergood. DECface: A system for synthetic face applications. Multimedia Tools and Applications, 1:349- 366, 1995.

29. E. Yamamoto, S. Nakamura, and K. Shikano. Lip movement synthesis from speech based on hidden Markov models. In Proc. Int. Conf. on automatic face and gesture recognition, FG ’98, pages 154-159, Nara, Japan, 1998. IEEE Computer Society.