“Videoscapes: exploring sparse, unstructured video collections” by Tompkin, Kim, Kautz and Theobalt

Title:

- Videoscapes: exploring sparse, unstructured video collections

Presenter(s)/Author(s):

- Tompkin, Kim, Kautz and Theobalt

Abstract:

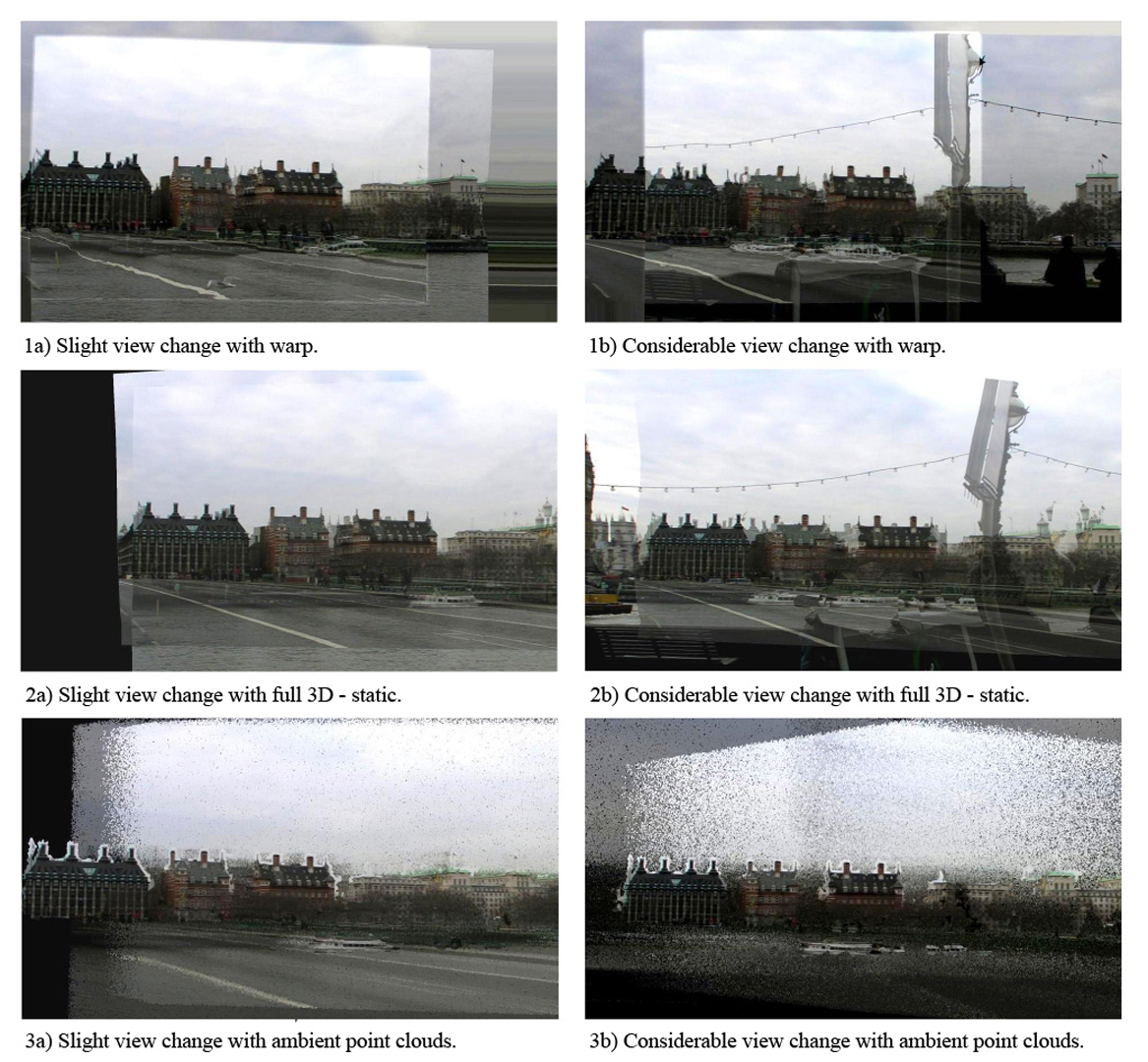

The abundance of mobile devices and digital cameras with video capture makes it easy to obtain large collections of video clips that contain the same location, environment, or event. However, such an unstructured collection is difficult to comprehend and explore. We propose a system that analyzes collections of unstructured but related video data to create a Videoscape: a data structure that enables interactive exploration of video collections by visually navigating, spatially and/or temporally, between different clips. We automatically identify transition opportunities, or portals. From these portals, we construct the Videoscape, a graph whose edges are video clips and whose nodes are portals between clips. Once structured, the videos can be explored interactively by walking the graph or by using a geographic map. Given this system, we gauge preference for different video transition styles in a user study, and derive heuristics that automatically choose an appropriate transition style. We evaluate our system using three further user studies, which allow us to conclude that Videoscapes provides significant benefits over related methods. Our system leads to previously unseen ways of interactive spatio-temporal exploration of casually captured videos, and we demonstrate this on several video collections.
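The graph described in the abstract (portals as nodes, video clips as edges) can be illustrated with a minimal sketch. This is not the authors' implementation. The names `ClipSegment`, `Videoscape`, `add_segment`, `segments_from`, and `walk`, the frame-range fields, and the sample file names are all hypothetical. The sketch also assumes that the start and end of each video act as nodes alongside portals.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ClipSegment:
    """An edge: a stretch of one video between two nodes (hypothetical fields)."""
    video: str
    start_frame: int
    end_frame: int
    src: str  # node where the segment begins (a portal or a video start)
    dst: str  # node where the segment ends (a portal or a video end)


class Videoscape:
    """Directed multigraph: nodes are portals, edges are clip segments."""

    def __init__(self):
        self._out = defaultdict(list)  # node name -> outgoing segments

    def add_segment(self, segment):
        self._out[segment.src].append(segment)

    def segments_from(self, node):
        # Copy the list so callers cannot mutate the graph by accident.
        return list(self._out.get(node, []))

    def walk(self, start, choices):
        """Follow one outgoing segment per step; choices[i] picks the option at step i."""
        path = []
        node = start
        for choice in choices:
            options = self.segments_from(node)
            if not options:
                break  # dead end: no clip leaves this node
            segment = options[choice]
            path.append(segment)
            node = segment.dst
        return path


# Two videos that share one portal (assumed sample data).
scape = Videoscape()
scape.add_segment(ClipSegment("clip_a.mp4", 0, 120, "start_a", "portal_1"))
scape.add_segment(ClipSegment("clip_a.mp4", 120, 300, "portal_1", "end_a"))
scape.add_segment(ClipSegment("clip_b.mp4", 45, 200, "portal_1", "end_b"))

# Watch clip_a up to the portal, then switch to clip_b there.
tour = scape.walk("start_a", [0, 1])
tour_videos = [segment.video for segment in tour]
```

Here a viewer starts in `clip_a.mp4`, reaches `portal_1`, and chooses the second outgoing segment, which continues in `clip_b.mp4`. Storing clips on edges keeps each node a pure decision point, which matches the abstract's description of exploring the collection by walking the graph.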
