“Text-based editing of talking-head video” by Fried, Tewari, Zollhöfer, Finkelstein, Shechtman, et al. …

Conference:

Type(s):

Title:

- Text-based editing of talking-head video

Session/Category Title:

- Neural Rendering

Presenter(s)/Author(s):

Abstract:

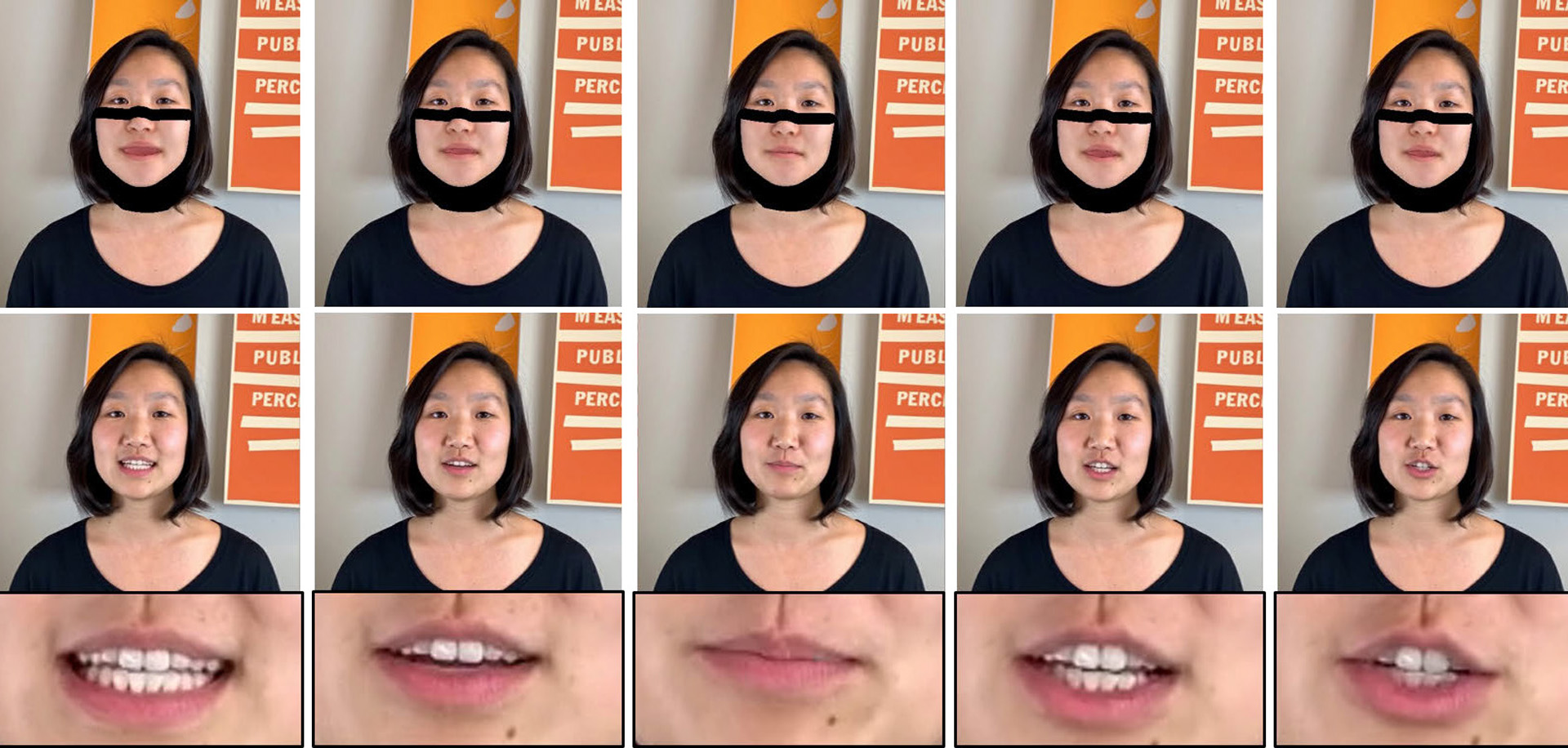

Editing talking-head video to change the speech content or to remove filler words is challenging. We propose a novel method to edit talking-head video based on its transcript to produce a realistic output video in which the dialogue of the speaker has been modified, while maintaining a seamless audio-visual flow (i.e. no jump cuts). Our method automatically annotates an input talking-head video with phonemes, visemes, 3D face pose and geometry, reflectance, expression and scene illumination per frame. To edit a video, the user has to only edit the transcript, and an optimization strategy then chooses segments of the input corpus as base material. The annotated parameters corresponding to the selected segments are seamlessly stitched together and used to produce an intermediate video representation in which the lower half of the face is rendered with a parametric face model. Finally, a recurrent video generation network transforms this representation to a photorealistic video that matches the edited transcript. We demonstrate a large variety of edits, such as the addition, removal, and alteration of words, as well as convincing language translation and full sentence synthesis.

References:

1. Annosoft. 2008. Lipsync Tool. (2008). http://www.annosoft.com/docs/Visemes17.htmlGoogle Scholar

2. Hadar Averbuch-Elor, Daniel Cohen-Or, Johannes Kopf, and Michael F. Cohen. 2017. Bringing Portraits to Life. ACM Transactions on Graphics (SIGGRAPH Asia) 36, 6 (November 2017), 196:1–13. Google ScholarDigital Library

3. Aayush Bansal, Shugao Ma, Deva Ramanan, and Yaser Sheikh. 2018. Recycle-GAN: Unsupervised Video Retargeting. In ECCV.Google Scholar

4. Floraine Berthouzoz, Wilmot Li, and Maneesh Agrawala. 2012. Tools for Placing Cuts and Transitions in Interview Video. ACM Trans. Graph. 31, 4, Article 67 (July 2012), 8 pages. Google ScholarDigital Library

5. Volker Blanz, Kristina Scherbaum, Thomas Vetter, and Hans-Peter Seidel. 2004. Exchanging Faces in Images. Computer Graphics Forum (Eurographics) 23, 3 (September 2004), 669–676.Google ScholarCross Ref

6. Volker Blanz and Thomas Vetter. 1999. A Morphable Model for the Synthesis of 3D Faces. In Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH). 187–194. Google ScholarDigital Library

7. James Booth, Anastasios Roussos, Allan Ponniah, David Dunaway, and Stefanos Zafeiriou. 2018. Large Scale 3D Morphable Models. International Journal of Computer Vision 126, 2 (April 2018), 233–254. Google ScholarDigital Library

8. Christoph Bregler, Michele Covell, and Malcolm Slaney. 1997. Video Rewrite: Driving Visual Speech with Audio. In Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’97). ACM Press/Addison-Wesley Publishing Co., New York, NY, USA, 353–360. Google ScholarDigital Library

9. Chen Cao, Derek Bradley, Kun Zhou, and Thabo Beeler. 2015. Real-time High-fidelity Facial Performance Capture. ACM Transactions on Graphics (SIGGRAPH) 34, 4 (July 2015), 46:1–9. Google ScholarDigital Library

10. Caroline Chan, Shiry Ginosar, Tinghui Zhou, and Alexei A. Efros. 2018. Everybody Dance Now. arXiv e-prints (August 2018). arXiv:1808.07371Google Scholar

11. Yao-Jen Chang and Tony Ezzat. 2005. Transferable Videorealistic Speech Animation. In Symposium on Computer Animation (SCA). 143–151. Google ScholarDigital Library

12. Qifeng Chen and Vladlen Koltun. 2017. Photographic Image Synthesis with Cascaded Refinement Networks. In International Conference on Computer Vision (ICCV). 1520–1529.Google Scholar

13. Pengfei Dou, Shishir K. Shah, and Ioannis A. Kakadiaris. 2017. End-To-End 3D Face Reconstruction With Deep Neural Networks. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

14. Pif Edwards, Chris Landreth, Eugene Fiume, and Karan Singh. 2016. JALI: An Animator-centric Viseme Model for Expressive Lip Synchronization. ACM Trans. Graph. 35, 4, Article 127 (July 2016), 11 pages. Google ScholarDigital Library

15. Tony Ezzat, Gadi Geiger, and Tomaso Poggio. 2002. Trainable Videorealistic Speech Animation. ACM Transactions on Graphics (SIGGRAPH) 21, 3 (July 2002), 388–398. Google ScholarDigital Library

16. Graham Fyffe, Andrew Jones, Oleg Alexander, Ryosuke Ichikari, and Paul Debevec. 2014. Driving High-Resolution Facial Scans with Video Performance Capture. ACM Transactions on Graphics 34, 1 (December 2014), 8:1–14. Google ScholarDigital Library

17. J. S. Garofolo, L. F. Lamel, W. M. Fisher, J. G. Fiscus, D. S. Pallett, and N. L. Dahlgren. 1993. DARPA TIMIT Acoustic Phonetic Continuous Speech Corpus CDROM. (1993). http://www.ldc.upenn.edu/Catalog/LDC93S1.htmlGoogle Scholar

18. Pablo Garrido, Levi Valgaerts, Ole Rehmsen, Thorsten Thormaehlen, Patrick Pérez, and Christian Theobalt. 2014. Automatic Face Reenactment. In CVPR. 4217–4224. Google ScholarDigital Library

19. Pablo Garrido, Levi Valgaerts, Hamid Sarmadi, Ingmar Steiner, Kiran Varanasi, Patrick Pérez, and Christian Theobalt. 2015. VDub: Modifying Face Video of Actors for Plausible Visual Alignment to a Dubbed Audio Track. Computer Graphics Forum (Eurographics) 34, 2 (May 2015), 193–204. Google ScholarDigital Library

20. Pablo Garrido, Michael Zollhöfer, Dan Casas, Levi Valgaerts, Kiran Varanasi, Patrick Pérez, and Christian Theobalt. 2016. Reconstruction of Personalized 3D Face Rigs from Monocular Video. ACM Transactions on Graphics 35, 3 (June 2016), 28:1–15. Google ScholarDigital Library

21. Jiahao Geng, Tianjia Shao, Youyi Zheng, Yanlin Weng, and Kun Zhou. 2018. Warp-guided GANs for Single-photo Facial Animation. In SIGGRAPH Asia 2018 Technical Papers (SIGGRAPH Asia ’18). ACM, New York, NY, USA, Article 231, 231:1–231:12 pages. Google ScholarDigital Library

22. Kyle Genova, Forrester Cole, Aaron Maschinot, Aaron Sarna, Daniel Vlasic, and William T. Freeman. 2018. Unsupervised Training for 3D Morphable Model Regression. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

23. Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative Adversarial Nets. In Advances in Neural Information Processing Systems. Google ScholarDigital Library

24. Y. Guo, J. Zhang, J. Cai, B. Jiang, and J. Zheng. 2018. CNN-based Real-time Dense Face Reconstruction with Inverse-rendered Photo-realistic Face Images. IEEE Transactions on Pattern Analysis and Machine Intelligence (2018), 1–1.Google Scholar

25. Andrew J Hunt and Alan W Black. 1996. Unit selection in a concatenative speech synthesis system using a large speech database. In Acoustics, Speech, and Signal Processing, 1996. ICASSP-96. Conference Proceedings., 1996 IEEE International Conference on, Vol. 1. IEEE, 373–376. Google ScholarDigital Library

26. IBM. 2016. IBM Speech to Text Service. https://www.ibm.com/smarterplanet/us/en/ibmwatson/developercloud/doc/speech-to-text/. (2016). Accessed 2016-12-17.Google Scholar

27. Alexandru Eugen Ichim, Sofien Bouaziz, and Mark Pauly. 2015. Dynamic 3D Avatar Creation from Hand-held Video Input. ACM Transactions on Graphics (SIGGRAPH) 34, 4 (July 2015), 45:1–14. Google ScholarDigital Library

28. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In Conference on Computer Vision and Pattern Recognition (CVPR). 5967–5976.Google Scholar

29. Zeyu Jin, Gautham J Mysore, Stephen Diverdi, Jingwan Lu, and Adam Finkelstein. 2017. VoCo: text-based insertion and replacement in audio narration. ACM Transactions on Graphics (TOG) 36, 4 (2017), 96. Google ScholarDigital Library

30. Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2018. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In International Conference on Learning Representations (ICLR).Google Scholar

31. Ira Kemelmacher-Shlizerman. 2013. Internet-Based Morphable Model. In International Conference on Computer Vision (ICCV). 3256–3263. Google ScholarDigital Library

32. Ira Kemelmacher-Shlizerman, Aditya Sankar, Eli Shechtman, and Steven M. Seitz. 2010. Being John Malkovich. In European Conference on Computer Vision (ECCV). 341–353. Google ScholarDigital Library

33. Hyeongwoo Kim, Pablo Garrido, Ayush Tewari, Weipeng Xu, Justus Thies, Matthias Nießner, Patrick Pérez, Christian Richardt, Michael Zollhöfer, and Christian Theobalt. 2018a. Deep Video Portraits. ACM Transactions on Graphics (TOG) 37, 4 (2018), 163. Google ScholarDigital Library

34. H. Kim, P. Garrido, A. Tewari, W. Xu, J. Thies, N. Nießner, P. Pérez, C. Richardt, M. Zollhöfer, and C. Theobalt. 2018b. Deep Video Portraits. ACM Transactions on Graphics 2018 (TOG) (2018). Google ScholarDigital Library

35. Mackenzie Leake, Abe Davis, Anh Truong, and Maneesh Agrawala. 2017. Computational Video Editing for Dialogue-driven Scenes. ACM Trans. Graph. 36, 4, Article 130 (July 2017), 14 pages. Google ScholarDigital Library

36. Vladimir I Levenshtein. 1966. Binary codes capable of correcting deletions, insertions, and reversals. In Soviet physics doklady, Vol. 10. 707–710.Google Scholar

37. Kai Li, Qionghai Dai, Ruiping Wang, Yebin Liu, Feng Xu, and Jue Wang. 2014. A Data-Driven Approach for Facial Expression Retargeting in Video. IEEE Transactions on Multimedia 16, 2 (February 2014), 299–310. Google ScholarDigital Library

38. Kang Liu and Joern Ostermann. 2011. Realistic facial expression synthesis for an image-based talking head. In International Conference on Multimedia and Expo (ICME). Google ScholarDigital Library

39. L. Liu, W. Xu, M. Zollhoefer, H. Kim, F. Bernard, M. Habermann, W. Wang, and C. Theobalt. 2018. Neural Animation and Reenactment of Human Actor Videos. ArXiv e-prints (September 2018). arXiv:1809.03658Google Scholar

40. Zicheng Liu, Ying Shan, and Zhengyou Zhang. 2001. Expressive Expression Mapping with Ratio Images. In Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH). 271–276. Google ScholarDigital Library

41. Ricardo Martin-Brualla, Rohit Pandey, Shuoran Yang, Pavel Pidlypenskyi, Jonathan Taylor, Julien Valentin, Sameh Khamis, Philip Davidson, Anastasia Tkach, Peter Lincoln, Adarsh Kowdle, Christoph Rhemann, Dan B Goldman, Cem Keskin, Steve Seitz, Shahram Izadi, and Sean Fanello. 2018. LookinGood: Enhancing Performance Capture with Real-time Neural Re-rendering. ACM Trans. Graph. 37, 6, Article 255 (December 2018), 14 pages. Google ScholarDigital Library

42. Wesley Mattheyses, Lukas Latacz, and Werner Verhelst. 2010. Optimized photorealistic audiovisual speech synthesis using active appearance modeling. In Auditory-Visual Speech Processing. 8–1.Google Scholar

43. Mehdi Mirza and Simon Osindero. 2014. Conditional Generative Adversarial Nets. (2014). https://arxiv.org/abs/1411.1784 arXiv:1411.1784.Google Scholar

44. Koki Nagano, Jaewoo Seo, Jun Xing, Lingyu Wei, Zimo Li, Shunsuke Saito, Aviral Agarwal, Jens Fursund, and Hao Li. 2018. paGAN: Real-time Avatars Using Dynamic Textures. In SIGGRAPH Asia 2018 Technical Papers (SIGGRAPH Asia ’18). ACM, New York, NY, USA, Article 258, 12 pages. Google ScholarDigital Library

45. Robert Ochshorn and Max Hawkins. 2016. Gentle: A Forced Aligner. https://lowerquality.com/gentle/. (2016). Accessed 2018-09-25.Google Scholar

46. Kyle Olszewski, Zimo Li, Chao Yang, Yi Zhou, Ronald Yu, Zeng Huang, Sitao Xiang, Shunsuke Saito, Pushmeet Kohli, and Hao Li. 2017. Realistic Dynamic Facial Textures from a Single Image using GANs. In International Conference on Computer Vision (ICCV). 5439–5448.Google ScholarCross Ref

47. Amy Pavel, Dan B Goldman, Björn Hartmann, and Maneesh Agrawala. 2016. VidCrit: Video-based Asynchronous Video Review. In Proc. of UIST. ACM, 517–528. Google ScholarDigital Library

48. Amy Pavel, Colorado Reed, Björn Hartmann, and Maneesh Agrawala. 2014. Video Digests: A Browsable, Skimmable Format for Informational Lecture Videos. In Proc. of UIST. 573–582. Google ScholarDigital Library

49. Alec Radford, Luke Metz, and Soumith Chintala. 2016. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. In International Conference on Learning Representations (ICLR).Google Scholar

50. Elad Richardson, Matan Sela, and Ron Kimmel. 2016. 3D Face Reconstruction by Learning from Synthetic Data. In International Conference on 3D Vision (3DV). 460–469.Google ScholarCross Ref

51. Elad Richardson, Matan Sela, Roy Or-El, and Ron Kimmel. 2017. Learning Detailed Face Reconstruction from a Single Image. In Conference on Computer Vision and Pattern Recognition (CVPR). 5553–5562.Google ScholarCross Ref

52. Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). 234–241.Google Scholar

53. Joseph Roth, Yiying Tong Tong, and Xiaoming Liu. 2017. Adaptive 3D Face Reconstruction from Unconstrained Photo Collections. IEEE Transactions on Pattern Analysis and Machine Intelligence 39, 11 (November 2017), 2127–2141.Google ScholarDigital Library

54. Steve Rubin, Floraine Berthouzoz, Gautham J Mysore, Wilmot Li, and Maneesh Agrawala. 2013. Content-based tools for editing audio stories. In Proceedings of the 26th annual ACM symposium on User interface software and technology. 113–122. Google ScholarDigital Library

55. Matan Sela, Elad Richardson, and Ron Kimmel. 2017. Unrestricted Facial Geometry Reconstruction Using Image-to-Image Translation. In International Conference on Computer Vision (ICCV). 1585–1594.Google Scholar

56. Jonathan Shen, Ruoming Pang, Ron J Weiss, Mike Schuster, Navdeep Jaitly, Zongheng Yang, Zhifeng Chen, Yu Zhang, Yuxuan Wang, Rj Skerrv-Ryan, et al. 2018. Natural tts synthesis by conditioning wavenet on mel spectrogram predictions. In ICASSP. IEEE, 4779–4783.Google Scholar

57. Fuhao Shi, Hsiang-Tao Wu, Xin Tong, and Jinxiang Chai. 2014. Automatic Acquisition of High-fidelity Facial Performances Using Monocular Videos. ACM Transactions on Graphics (SIGGRAPH Asia) 33, 6 (November 2014), 222:1–13. Google ScholarDigital Library

58. Hijung Valentina Shin, Wilmot Li, and Frédo Durand. 2016. Dynamic Authoring of Audio with Linked Scripts. In Proc. of UIST. 509–516. Google ScholarDigital Library

59. Qianru Sun, Ayush Tewari, Weipeng Xu, Mario Fritz, Christian Theobalt, and Bernt Schiele. 2018. A Hybrid Model for Identity Obfuscation by Face Replacement. In European Conference on Computer Vision (ECCV).Google ScholarCross Ref

60. Supasorn Suwajanakorn, Steven M. Seitz, and Ira Kemelmacher-Shlizerman. 2017. Synthesizing Obama: Learning Lip Sync from Audio. ACM Trans. Graph. 36, 4, Article 95 (July 2017), 13 pages. Google ScholarDigital Library

61. Sarah Taylor, Taehwan Kim, Yisong Yue, Moshe Mahler, James Krahe, Anastasio Garcia Rodriguez, Jessica Hodgins, and Iain Matthews. 2017. A Deep Learning Approach for Generalized Speech Animation. ACM Trans. Graph. 36, 4, Article 93 (July 2017), 11 pages. Google ScholarDigital Library

62. Ayush Tewari, Michael Zollhöfer, Florian Bernard, Pablo Garrido, Hyeongwoo Kim, Patrick Perez, and Christian Theobalt. 2018a. High-Fidelity Monocular Face Reconstruction based on an Unsupervised Model-based Face Autoencoder. IEEE Transactions on Pattern Analysis and Machine Intelligence (2018), 1–1.Google Scholar

63. Ayush Tewari, Michael Zollhöfer, Pablo Garrido, Florian Bernard, Hyeongwoo Kim, Patrick Pérez, and Christian Theobalt. 2018b. Self-supervised Multi-level Face Model Learning for Monocular Reconstruction at over 250 Hz. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref

64. Ayush Tewari, Michael Zollhöfer, Hyeongwoo Kim, Pablo Garrido, Florian Bernard, Patrick Pérez, and Christian Theobalt. 2017. MoFA: Model-based Deep Convolutional Face Autoencoder for Unsupervised Monocular Reconstruction. In ICCV. 3735–3744.Google Scholar

65. Justus Thies, Michael Zollhöfer, Marc Stamminger, Christian Theobalt, and Matthias Nießner. 2016. Face2Face: Real-Time Face Capture and Reenactment of RGB Videos. In Conference on Computer Vision and Pattern Recognition (CVPR). 2387–2395.Google ScholarDigital Library

66. Anh Tuan Tran, Tal Hassner, Iacopo Masi, and Gerard Medioni. 2017. Regressing Robust and Discriminative 3D Morphable Models with a very Deep Neural Network. In Conference on Computer Vision and Pattern Recognition (CVPR). 1493–1502.Google ScholarCross Ref

67. Anh Truong, Floraine Berthouzoz, Wilmot Li, and Maneesh Agrawala. 2016. Quickcut: An interactive tool for editing narrated video. In Proc. of UIST. 497–507. Google ScholarDigital Library

68. Aäron Van Den Oord, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew W Senior, and Koray Kavukcuoglu. 2016. WaveNet: A generative model for raw audio. In SSW. 125.Google Scholar

69. Daniel Vlasic, Matthew Brand, Hanspeter Pfister, and Jovan Popović. 2005. Face Transfer with Multilinear Models. ACM Transactions on Graphics (SIGGRAPH) 24, 3 (July 2005), 426–433. Google ScholarDigital Library

70. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Guilin Liu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018a. Video-to-Video Synthesis. In Advances in Neural Information Processing Systems (NeurIPS). Google ScholarDigital Library

71. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018b. High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs. In CVPR.Google Scholar

72. O. Wiles, A.S. Koepke, and A. Zisserman. 2018. X2Face: A network for controlling face generation by using images, audio, and pose codes. In European Conference on Computer Vision.Google Scholar

73. Jiahong Yuan and Mark Liberman. 2008. Speaker identification on the SCOTUS corpus. The Journal of the Acoustical Society of America 123, 5 (2008), 3878–3878.Google ScholarCross Ref

74. Heiga Zen, Keiichi Tokuda, and Alan W Black. 2009. Statistical parametric speech synthesis. speech communication 51, 11 (2009), 1039–1064. Google ScholarDigital Library

75. Yang Zhou, Zhan Xu, Chris Landreth, Evangelos Kalogerakis, Subhransu Maji, and Karan Singh. 2018. Visemenet: Audio-driven Animator-centric Speech Animation. ACM Trans. Graph. 37, 4, Article 161 (July 2018), 161:1–161:10 pages. Google ScholarDigital Library

76. M. Zollhöfer, J. Thies, P. Garrido, D. Bradley, T. Beeler, P. Pérez, M. Stamminger, M. Nießner, and C. Theobalt. 2018. State of the Art on Monocular 3D Face Reconstruction, Tracking, and Applications. Computer Graphics Forum (Eurographics State of the Art Reports 2018) 37, 2 (2018).Google Scholar