“Synthetic depth-of-field with a single-camera mobile phone” by Wadhwa, Garg, Feldman, Kanazawa, Carroll, et al. …

Title:

- Synthetic depth-of-field with a single-camera mobile phone

Session/Category Title: Computational Photos and Videos

Presenter(s)/Author(s):

- Neal Wadhwa

- Rahul Garg

- Bryan E. Feldman

- Nori Kanazawa

- Robert Carroll

- Yair Movshovitz-Attias

- Jonathan T. Barron

- Yael Pritch

- Marc Levoy

Entry Number: 64

Abstract:

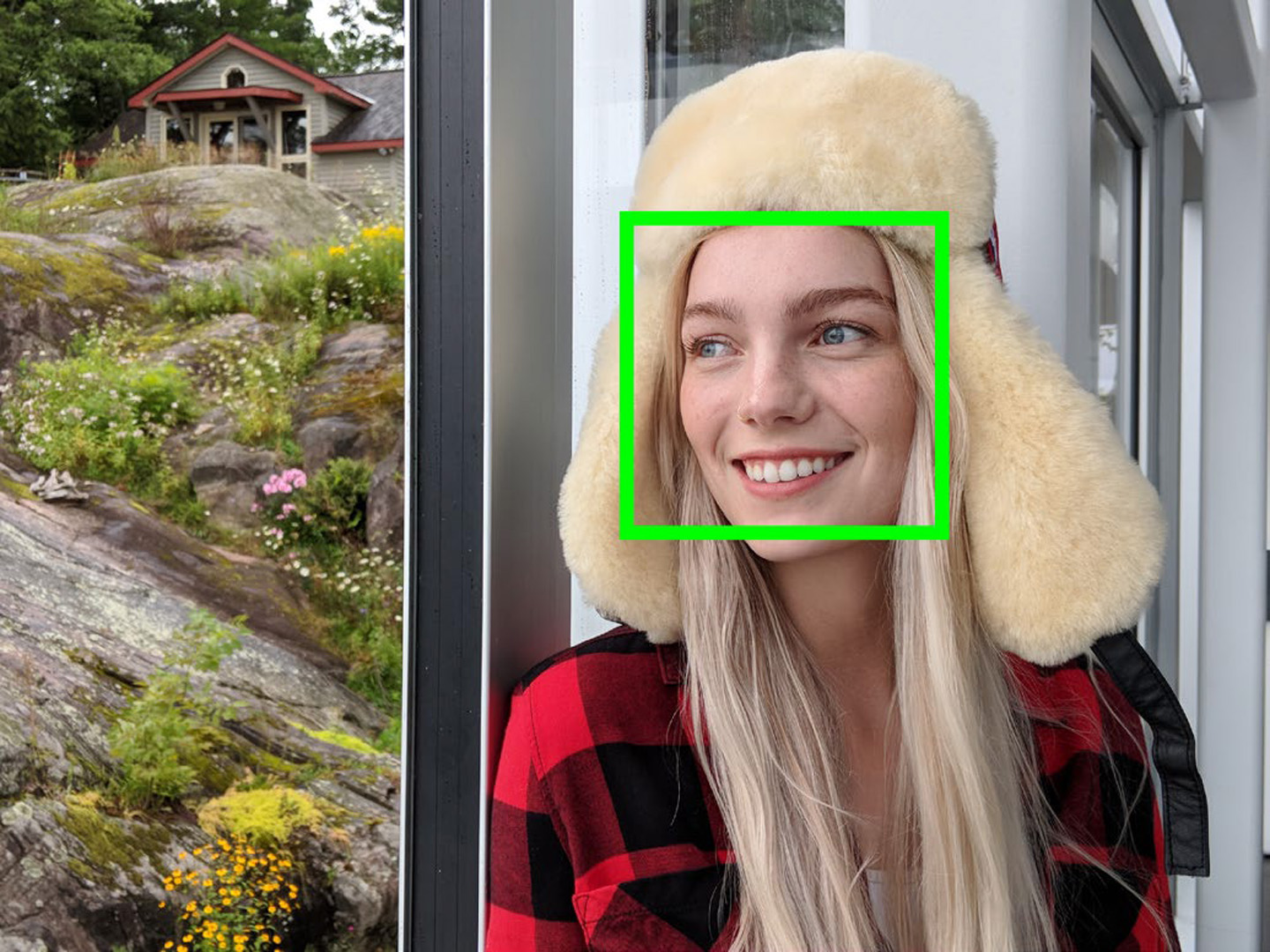

Shallow depth-of-field is commonly used by photographers to isolate a subject from a distracting background. However, standard cell phone cameras cannot produce such images optically, as their short focal lengths and small apertures capture nearly all-in-focus images. We present a system to computationally synthesize shallow depth-of-field images with a single mobile camera and a single button press. If the image is of a person, we use a person segmentation network to separate the person and their accessories from the background. If available, we also use dense dual-pixel auto-focus hardware, effectively a 2-sample light field with an approximately 1 millimeter baseline, to compute a dense depth map. These two signals are combined and used to render a defocused image. Our system can process a 5.4 megapixel image in 4 seconds on a mobile phone, is fully automatic, and is robust enough to be used by non-experts. The modular nature of our system allows it to degrade naturally in the absence of a dual-pixel sensor or a human subject.
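The abstract names three stages: estimate depth from the two dual-pixel views, combine it with a person segmentation mask, and render a defocused image. The NumPy sketch below shows only that data flow, and its simplifications are our assumptions, not the paper's method. It uses integer-pixel block matching, whereas real dual-pixel disparities are sub-pixel because of the roughly 1 mm baseline. It applies a hypothetical fusion rule that forces masked (person) pixels to stay sharp. It renders with a naive per-pixel disk blur. The function names `dual_pixel_disparity`, `blur_radius`, and `render_defocus` and every parameter value are hypothetical.

```python
import numpy as np


def box_sum(img, half):
    """Sum over a (2*half+1)^2 window around each pixel, via an integral image."""
    k = 2 * half + 1
    padded = np.pad(img, half, mode="edge")
    c = np.cumsum(np.cumsum(padded, axis=0), axis=1)
    c = np.pad(c, ((1, 0), (1, 0)))  # leading zero row/column
    return c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]


def dual_pixel_disparity(left, right, max_shift=2, half_window=2):
    """Per-pixel integer shift s such that np.roll(right, s, axis=1) best matches left.

    Assumption: brute-force SSD block matching over horizontal shifts; np.roll
    wraps at the image border, which is acceptable only for a toy example.
    """
    left = left.astype(np.float64)
    right = right.astype(np.float64)
    shifts = np.arange(-max_shift, max_shift + 1)
    costs = np.stack([
        box_sum((left - np.roll(right, s, axis=1)) ** 2, half_window) for s in shifts
    ])
    return shifts[np.argmin(costs, axis=0)]


def blur_radius(disparity, person_mask, focus_disparity, scale=1.5, max_radius=4):
    """Hypothetical fusion rule: blur grows with |disparity - focus|; people stay sharp."""
    radius = np.rint(scale * np.abs(disparity - focus_disparity))
    radius = np.clip(radius, 0, max_radius).astype(int)
    radius[person_mask > 0.5] = 0
    return radius


def disk_blur(img, r):
    """Mean of img over a disk of radius r (edge-padded gather)."""
    img = img.astype(np.float64)
    if r == 0:
        return img
    h, w = img.shape
    padded = np.pad(img, r, mode="edge")
    acc = np.zeros((h, w))
    n = 0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            if dy * dy + dx * dx <= r * r:
                acc += padded[r + dy:r + dy + h, r + dx:r + dx + w]
                n += 1
    return acc / n


def render_defocus(img, radius):
    """For each pixel, take the image blurred with that pixel's disk radius."""
    out = np.empty(img.shape, dtype=np.float64)
    for r in np.unique(radius):
        sel = radius == r
        out[sel] = disk_blur(img, int(r))[sel]
    return out


# Toy usage: a synthetic 1-pixel shift stands in for the dual-pixel views.
rng = np.random.default_rng(0)
left = rng.random((32, 32))
right = np.roll(left, -1, axis=1)
disp = dual_pixel_disparity(left, right)
mask = np.zeros((32, 32))
mask[8:24, 8:24] = 1.0  # stand-in for a person segmentation mask
radius = blur_radius(disp, mask, focus_disparity=0)
out = render_defocus(left, radius)
```

Because this gather-style renderer ignores occlusion, sharp foreground colors can bleed into the blurred background near the mask boundary. A production renderer would need to handle that, and it would also use sub-pixel disparity and an edge-aware fusion of the two signals rather than a hard override.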
