“Scalable inside-out image-based rendering”

Conference:

Type(s):

Title:

- Scalable inside-out image-based rendering

Session/Category Title:

- High Resolution

Presenter(s)/Author(s):

Abstract:

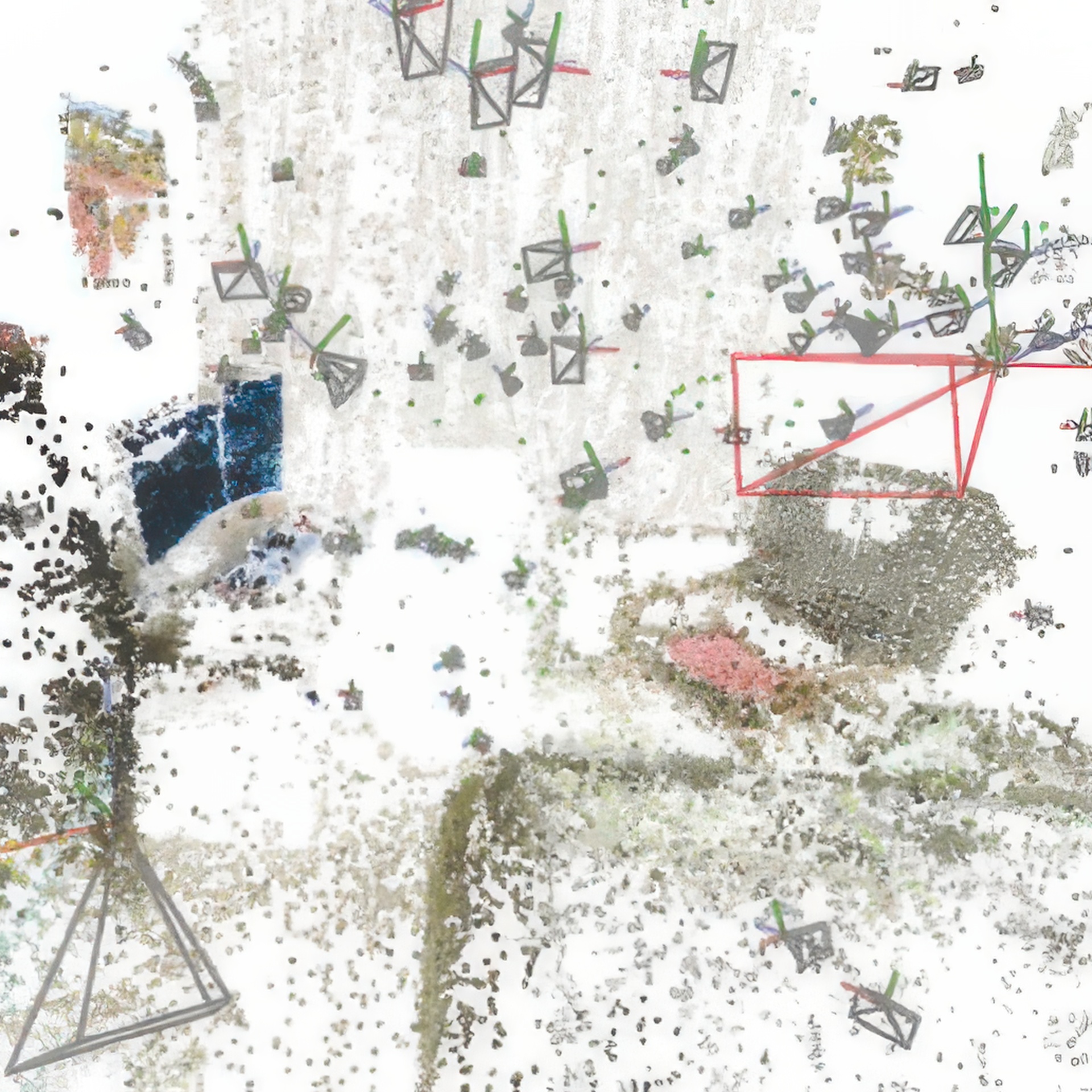

Our aim is to give users real-time free-viewpoint rendering of real indoor scenes, captured with off-the-shelf equipment such as a high-quality color camera and a commodity depth sensor. Image-based Rendering (IBR) can provide the realistic imagery required at real-time speed. For indoor scenes, however, two challenges are especially prominent. First, the reconstructed 3D geometry must be compact, but faithful enough to respect occlusion relationships when viewed up close. Second, man-made materials call for view-dependent texturing, but using too many input photographs reduces performance. We customize a typical RGB-D 3D surface reconstruction pipeline to produce a coarse global 3D surface, and local, per-view geometry for each input image. Our tiled IBR preserves quality by economizing on the expected contributions that entire groups of input pixels make to a final image. The two components are designed to work together, giving real-time performance while hardly sacrificing quality. Testing on a variety of challenging scenes shows that our inside-out IBR scales favorably with the number of input images.

References:

1. Aliaga, D. G., Funkhouser, T., Yanovsky, D., and Carlbom, I. 2002. Sea of images. In Vis., IEEE, 331–338.

2. Arikan, M., Preiner, R., Scheiblauer, C., Jeschke, S., and Wimmer, M. 2014. Large-scale point-cloud visualization through localized textured surface reconstruction. IEEE Trans. Vis. Comput. Graphics 20, 9, 1280–1292.

3. Arikan, M., Preiner, R., and Wimmer, M. 2016. Multi-depth-map raytracing for efficient large-scene reconstruction. IEEE Trans. Vis. Comput. Graphics 22, 2, 1127–1137.

4. Bahmutov, G., Popescu, V., and Mudure, M. 2006. Efficient large scale acquisition of building interiors. Comp. Graph. Forum 25, 3, 655–662.

5. Bhat, P., Zitnick, C. L., Snavely, N., Agarwala, A., Agrawala, M., Cohen, M., Curless, B., and Kang, S. B. 2007. Using photographs to enhance videos of a static scene. In EGSR, Eurographics Association, 327–338.

6. Birklbauer, C., Opelt, S., and Bimber, O. 2013. Rendering gigaray light fields. Comp. Graph. Forum 32, 2, 469–478.

7. Buehler, C., Bosse, M., McMillan, L., Gortler, S., and Cohen, M. 2001. Unstructured lumigraph rendering. In SIGGRAPH, ACM, 425–432.

8. Chaurasia, G., Duchene, S., Sorkine-Hornung, O., and Drettakis, G. 2013. Depth synthesis and local warps for plausible image-based navigation. ACM Trans. Graph. 32, 3, 30:1–30:12.

9. Chen, W.-C., Bouguet, J.-Y., Chu, M. H., and Grzeszczuk, R. 2002. Light field mapping: efficient representation and hardware rendering of surface light fields. ACM Trans. Graph. 21, 3, 447–456.

10. Choi, S., Zhou, Q.-Y., and Koltun, V. 2015. Robust reconstruction of indoor scenes. In CVPR, IEEE, 5556–5565.

11. Choi, S., Zhou, Q.-Y., Miller, S., and Koltun, V. 2016. A large dataset of object scans. arXiv 1602.02481.

12. Dai, A., Niessner, M., Zollhöfer, M., Izadi, S., and Theobalt, C. 2016. BundleFusion: Real-time globally consistent 3D reconstruction using online surface re-integration. arXiv 1604.01093.

13. Davis, A., Levoy, M., and Durand, F. 2012. Unstructured light fields. Comp. Graph. Forum 31, 2, 305–314.

14. Debevec, P., Yu, Y., and Borshukov, G. 1998. Efficient view-dependent image-based rendering with projective texture-mapping. In Rendering Workshop, Springer, 105–116.

15. Eisemann, M., De Decker, B., Magnor, M., Bekaert, P., De Aguiar, E., Ahmed, N., Theobalt, C., and Sellent, A. 2008. Floating textures. Comp. Graph. Forum 27, 2, 409–418.

16. Fehn, C. 2004. Depth-image-based rendering (DIBR), compression, and transmission for a new approach on 3D-TV. In SPIE 5291, 93–104.

17. Fitzgibbon, A., Wexler, Y., and Zisserman, A. 2005. Image-based rendering using image-based priors. Int. J. Comput. Vision 63, 2, 141–151.

18. Flynn, J., Neulander, I., Philbin, J., and Snavely, N. 2016. DeepStereo: Learning to predict new views from the world’s imagery. In CVPR, IEEE, 5515–5524.

19. Furukawa, Y., and Ponce, J. 2010. Accurate, dense, and robust multiview stereopsis. IEEE Trans. Pattern Anal. Mach. Intell. 32, 8, 1362–1376.

20. Furukawa, Y., Curless, B., Seitz, S. M., and Szeliski, R. 2009. Reconstructing building interiors from images. In ICCV, IEEE, 80–87.

21. Galliani, S., Lasinger, K., and Schindler, K. 2015. Massively parallel multiview stereopsis by surface normal diffusion. In ICCV, IEEE, 873–881.

22. Garland, M., and Heckbert, P. S. 1997. Surface simplification using quadric error metrics. In SIGGRAPH, ACM, 209–216.

23. Goesele, M., Snavely, N., Curless, B., Hoppe, H., and Seitz, S. M. 2007. Multi-view stereo for community photo collections. In ICCV, IEEE, 1–8.

24. Goesele, M., Ackermann, J., Fuhrmann, S., Haubold, C., Klowsky, R., Steedly, D., and Szeliski, R. 2010. Ambient point clouds for view interpolation. ACM Trans. Graph. 29, 4, 95:1–95:6.

25. Goldlücke, B., Aubry, M., Kolev, K., and Cremers, D. 2014. A super-resolution framework for high-accuracy multiview reconstruction. Int. J. Comput. Vision 106, 2, 172–191.

26. Gortler, S. J., Grzeszczuk, R., Szeliski, R., and Cohen, M. F. 1996. The lumigraph. In SIGGRAPH, ACM, 43–54.

27. Henry, P., Krainin, M., Herbst, E., Ren, X., and Fox, D. 2012. RGB-D mapping: Using Kinect-style depth cameras for dense 3D modeling of indoor environments. Int. J. Rob. Res. 31, 5, 647–663.

28. Kazhdan, M., and Hoppe, H. 2013. Screened poisson surface reconstruction. ACM Trans. Graph. 32, 3, 29:1–29:13.

29. Kopf, J., Langguth, F., Scharstein, D., Szeliski, R., and Goesele, M. 2013. Image-based rendering in the gradient domain. ACM Trans. Graph. 32, 6, 199:1–199:9.

30. Lensch, H. P., Heidrich, W., and Seidel, H.-P. 2001. A silhouette-based algorithm for texture registration and stitching. Graph. Models 63, 4, 245–262.

31. Lensch, H., Kautz, J., Goesele, M., Heidrich, W., and Seidel, H.-P. 2003. Image-based reconstruction of spatial appearance and geometric detail. ACM Trans. Graph. 22, 2, 234–257.

32. Levoy, M., and Hanrahan, P. 1996. Light field rendering. In SIGGRAPH, ACM, 31–42.

33. Ma, Z., He, K., Wei, Y., Sun, J., and Wu, E. 2013. Constant time weighted median filtering for stereo matching and beyond. In ICCV, IEEE, 49–56.

34. Matusik, W., Buehler, C., Raskar, R., Gortler, S. J., and McMillan, L. 2000. Image-based visual hulls. In SIGGRAPH, ACM, 369–374.

35. McMillan, L., and Bishop, G. 1995. Plenoptic modeling: An image-based rendering system. In SIGGRAPH, ACM, 39–46.

36. Moulon, P., Monasse, P., and Marlet, R. 2013. Adaptive structure from motion with a contrario model estimation. In ACCV, Springer, 257–270.

37. Nair, R., Ruhl, K., Lenzen, F., Meister, S., Schäfer, H., Garbe, C. S., Eisemann, M., Magnor, M., and Kondermann, D. 2013. A survey on time-of-flight stereo fusion. In Time-of-Flight and Depth Imaging. Sensors, Algorithms, and Applications. Springer, 105–127.

38. Newcombe, R. A., Izadi, S., Hilliges, O., Molyneaux, D., Kim, D., Davison, A. J., Kohli, P., Shotton, J., Hodges, S., and Fitzgibbon, A. 2011. KinectFusion: Real-time dense surface mapping and tracking. In ISMAR, IEEE, 127–136.

39. Niessner, M., Zollhöfer, M., Izadi, S., and Stamminger, M. 2013. Real-time 3D reconstruction at scale using voxel hashing. ACM Trans. Graph. 32, 6, 169:1–169:11.

40. Olsson, O., and Assarsson, U. 2011. Tiled shading. J. Graphics, GPU, and Game Tools 15, 4, 235–251.

41. Ortiz-Cayon, R., Djelouah, A., and Drettakis, G. 2015. A Bayesian approach for selective image-based rendering using superpixels. In 3DV, IEEE, 469–477.

42. Pujades, S., Devernay, F., and Goldluecke, B. 2014. Bayesian view synthesis and image-based rendering principles. In CVPR, IEEE, 3906–3913.

43. Sankar, A., and Seitz, S. 2012. Capturing indoor scenes with smartphones. In UIST, ACM, 403–412.

44. Shum, H.-Y., Chan, S.-C., and Kang, S. B. 2008. Image-based rendering. Springer.

45. Sinha, S. N., Steedly, D., and Szeliski, R. 2009. Piecewise planar stereo for image-based rendering. In ICCV, IEEE, 1881–1888.

46. Stich, T., Linz, C., Wallraven, C., Cunningham, D., and Magnor, M. 2011. Perception-motivated interpolation of image sequences. ACM Trans. Applied Perception 8, 2, 11:1–11:25.

47. Uyttendaele, M., Criminisi, A., Kang, S. B., Winder, S., Szeliski, R., and Hartley, R. 2004. Image-based interactive exploration of real-world environments. IEEE Comput. Graph. Appl. 24, 3, 52–63.

48. Vanhoey, K., Sauvage, B., Génevaux, O., Larue, F., and Dischler, J.-M. 2013. Robust fitting on poorly sampled data for surface light field rendering and image relighting. Comp. Graph. Forum 32, 6, 101–112.

49. Vázquez, P.-P., Feixas, M., Sbert, M., and Heidrich, W. 2003. Automatic view selection using viewpoint entropy and its application to image-based modelling. Comp. Graph. Forum 22, 4, 689–700.

50. Waechter, M., Moehrle, N., and Goesele, M. 2014. Let there be color! Large-scale texturing of 3D reconstructions. In ECCV, Springer, 836–850.

51. Wei, J., Resch, B., and Lensch, H. P. 2014. Multi-view depth map estimation with cross-view consistency. In BMVC, BMVA Press.

52. Wood, D. N., Azuma, D. I., Aldinger, K., Curless, B., Duchamp, T., Salesin, D. H., and Stuetzle, W. 2000. Surface light fields for 3D photography. In SIGGRAPH, ACM, 287–296.

53. Xiao, J., Owens, A., and Torralba, A. 2013. SUN3D: A database of big spaces reconstructed using SfM and object labels. In ICCV, IEEE, 1625–1632.

54. Zhou, Q.-Y., and Koltun, V. 2014. Color map optimization for 3D reconstruction with consumer depth cameras. ACM Trans. Graph. 33, 4, 155:1–155:10.

55. Zhou, Q.-Y., Miller, S., and Koltun, V. 2013. Elastic fragments for dense scene reconstruction. In ICCV, IEEE, 473–480.

56. Zitnick, C. L., and Kang, S. B. 2007. Stereo for image-based rendering using image over-segmentation. Int. J. Comput. Vision 75, 1, 49–65.