“Scalable image-based indoor scene rendering with reflections” by Xu, Wu, Zu, Huang, Yang, et al. …

Conference:

Type(s):

Title:

- Scalable image-based indoor scene rendering with reflections

Presenter(s)/Author(s):

Abstract:

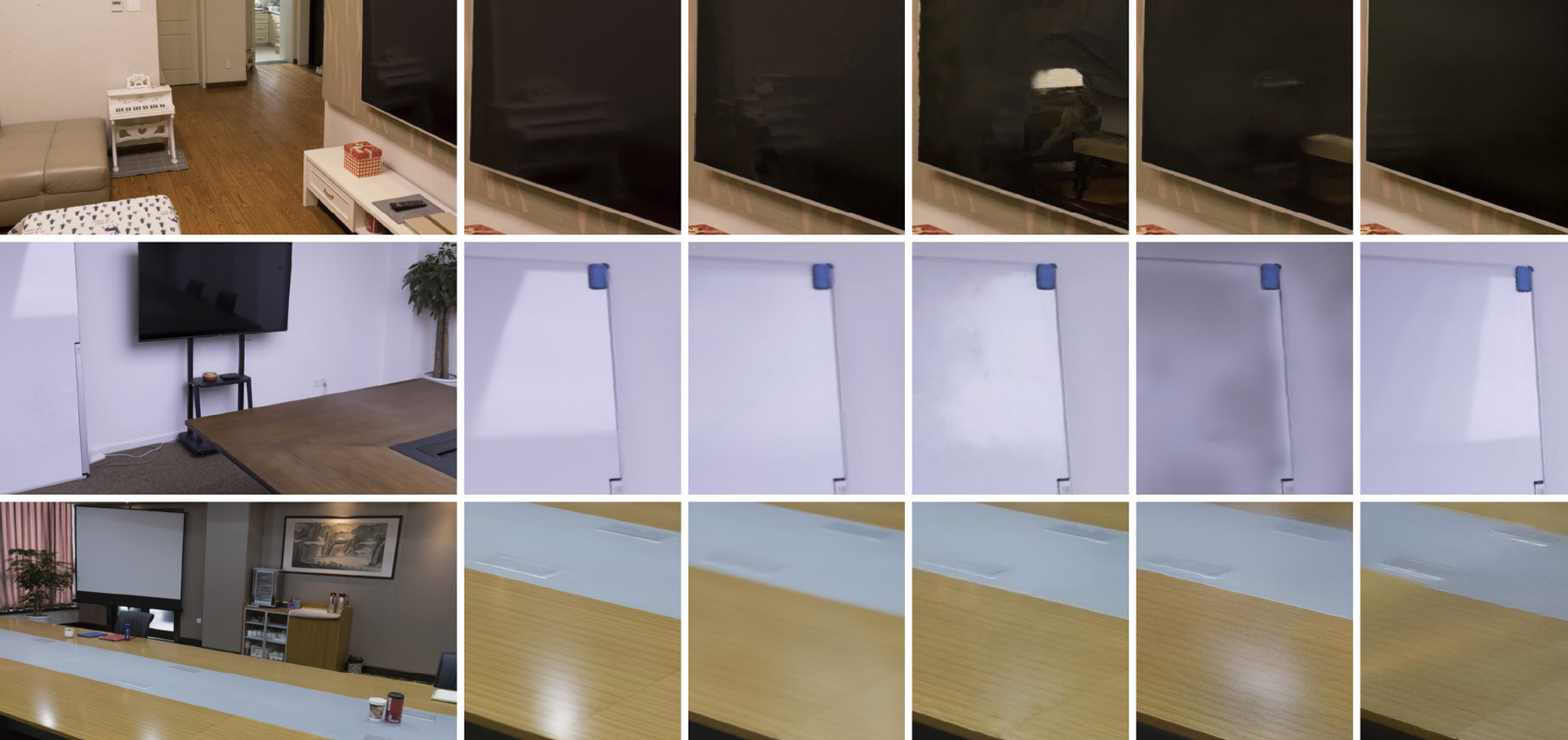

This paper proposes a novel scalable image-based rendering (IBR) pipeline for indoor scenes with reflections. We make substantial progress towards three sub-problems in IBR, namely, depth and reflection reconstruction, view selection for temporally coherent view-warping, and smooth rendering refinements. First, we introduce a global-mesh-guided alternating optimization algorithm that robustly extracts a two-layer geometric representation. The front and back layers encode the RGB-D reconstruction and the reflection reconstruction, respectively. This representation minimizes the image composition error under novel views, enabling accurate renderings of reflections. Second, we introduce a novel approach to select adjacent views and compute blending weights for smooth and temporal coherent renderings. The third contribution is a supersampling network with a motion vector rectification module that refines the rendering results to improve the final output’s temporal coherence. These three contributions together lead to a novel system that produces highly realistic rendering results with various reflections. The rendering quality outperforms state-of-the-art IBR or neural rendering algorithms considerably.

References:

1. S. Agarwal, K. Mierle, and Others. 2010. Ceres Solver. http://ceres-solver.org.Google Scholar

2. M. Broxton, J. Flynn, R. Overbeck, D. Erickson, P. Hedman, M. Duvall, J. Dourgarian, J. Busch, M. Whalen, and P. Debevec. 2020. Immersive Light Field Video with a Layered Mesh Representation. ACM Trans. Graph. 39, 4 (2020), 15.Google ScholarDigital Library

3. C. Buehler, M. Bosse, L. McMillan, S. Gortler, and M. Cohen. 2001. Unstructured lumigraph rendering. In ACM Trans. Graph. 425–432.Google Scholar

4. J. Caballero, C. Ledig, A. P. Aitken, A. Acosta, J. Totz, Z. Wang, and W. Shi. 2017. Real-Time Video Super-Resolution with Spatio-Temporal Networks and Motion Compensation. In CVPR, IEEE. 2848–2857.Google Scholar

5. CapturingReality. 2016. Reality capture, http://capturingreality.com.Google Scholar

6. C. R. A. Chaitanya, A. S. Kaplanyan, C. Schied, M. Salvi, A. Lefohn, D. Nowrouzezahrai, and T. Aila. 2017. Interactive Reconstruction of Monte Carlo Image Sequences Using a Recurrent Denoising Autoencoder. ACM Trans. Graph. 36, 4, Article 98 (2017), 12 pages.Google ScholarDigital Library

7. G. Chaurasia, S. Duchene, O. Sorkine-Hornung, and G. Drettakis. 2013. Depth synthesis and local warps for plausible image-based navigation. ACM Trans. Graph. 32, 3 (2013), 1–12.Google ScholarDigital Library

8. G. Chaurasia, O. Sorkine-Hornung, and G. Drettakis. 2011. Silhouette-Aware Warping for Image-Based Rendering. In Computer Graphics Forum, Vol. 30. 1223–1232.Google ScholarDigital Library

9. S. E. Chen and L. Williams. 1993. View Interpolation for Image Synthesis. In SIGGRAPH, ACM. 279–288.Google Scholar

10. P. E. Debevec, C. J. Taylor, and J. Malik. 1996. Modeling and rendering architecture from photographs: A hybrid geometry-and image-based approach. In SIGGRAPH, ACM. 11–20.Google Scholar

11. M. Desbrun, M. Meyer, P. Schröder, and A. H. Barr. 1999. Implicit Fairing of Irregular Meshes Using Diffusion and Curvature Flow. In SIGGRAPH, ACM. 317–324.Google Scholar

12. P. Dollár and C. L. Zitnick. 2015. Fast Edge Detection Using Structured Forests. IEEE Trans. PAMI 37, 8 (2015), 1558–1570.Google ScholarCross Ref

13. C. Dong, C. C. Loy, K. He, and X. Tang. 2014. Learning a deep convolutional network for image super-resolution. In ECCV, Springer. 184–199.Google Scholar

14. S. Dong, K. Xu, Q. Y. Zhou, A. Tagliasacchi, S. Xin, M. Nießner, and B. Chen. 2019. Multi-Robot Collaborative Dense Scene Reconstruction. ACM Trans. Graph. 38, 4, Article 84 (2019), 16 pages.Google ScholarDigital Library

15. A. Edelsten, P. Jukarainen, and A. Patney. 2019. Truly next-gen: Adding deep learning to games and graphics. In In NVIDIA Sponsored Sessions (Game Developers Conference).Google Scholar

16. J. Flynn, M. Broxton, P. Debevec, M. DuVall, G. Fyffe, R. Overbeck, N. Snavely, and R. Tucker. 2019. Deepview: View synthesis with learned gradient descent. In CVPR, IEEE. 2367–2376.Google Scholar

17. J. Flynn, I. Neulander, J. Philbin, and N. Snavely. 2016. Deepstereo: Learning to predict new views from the world’s imagery. In CVPR, IEEE. 5515–5524.Google Scholar

18. D. Fuoli, S. Gu, and R. Timofte. 2019. Efficient Video Super-Resolution through Recurrent Latent Space Propagation. In ICCV, IEEE Workshop. 3476–3485.Google Scholar

19. Y. Furukawa, B. Curless, S. M. Seitz, and R. Szeliski. 2009. Reconstructing building interiors from images. In ICCV, IEEE. 80–87.Google Scholar

20. Y. Furukawa and J. Ponce. 2010. Accurate, Dense, and Robust Multiview Stereopsis. IEEE Trans. PAMI 32, 8 (2010), 1362–1376.Google ScholarDigital Library

21. M. Garland and P. S. Heckbert. 1997. Surface Simplification Using Quadric Error Metrics. In SIGGRAPH, ACM. 209–216.Google Scholar

22. M. Goesele, J. Ackermann, S. Fuhrmann, C. Haubold, R. Klowsky, D. Steedly, and R. Szeliski. 2010. Ambient Point Clouds for View Interpolation. In SIGGRAPH, ACM. Article 95, 6 pages.Google Scholar

23. M. Goesele, N. Snavely, B. Curless, H. Hoppe, and S. M. Seitz. 2007. Multi-View Stereo for Community Photo Collections. In ICCV, IEEE. 1–8.Google Scholar

24. S. J. Gortler, R. Grzeszczuk, R. Szeliski, and M. F. Cohen. 1996. The lumigraph. In SIGGRAPH, ACM. 43–54.Google Scholar

25. X. Guo, X. Cao, and Y. Ma. 2014. Robust separation of reflection from multiple images. In CVPR, IEEE. 2187–2194.Google Scholar

26. M. Haris, G. Shakhnarovich, and N. Ukita. 2019. Recurrent Back-Projection Network for Video Super-Resolution. In CVPR, IEEE. 3892–3901.Google Scholar

27. R. I. Hartley and A. Zisserman. 2004. Multiple View Geometry in Computer Vision (second ed.). Cambridge University Press, ISBN: 0521540518.Google Scholar

28. J. He, S. Zhang, M. Yang, Y. Shan, and T. Huang. 2019. BDCN: Bi-Directional Cascade Network for Perceptual Edge Detection. In CVPR, IEEE. 3828–3837.Google Scholar

29. P. Hedman, S. Alsisan, R. Szeliski, and J. Kopf. 2017. Casual 3D Photography. ACM Trans. Graph. 36, 6, Article 234 (2017), 15 pages.Google ScholarDigital Library

30. P. Hedman, J. Philip, T. Price, J. M. Frahm, G. Drettakis, and G. Brostow. 2018. Deep blending for free-viewpoint image-based rendering. ACM Trans. Graph. 37, 6 (2018), 1–15.Google ScholarDigital Library

31. P. Hedman, T. Ritschel, G. Drettakis, and G. Brostow. 2016. Scalable inside-out image-based rendering. ACM Trans. Graph. 35, 6 (2016), 1–11.Google ScholarDigital Library

32. H. Hirschmuller. 2008. Stereo Processing by Semiglobal Matching and Mutual Information. IEEE Trans. PAMI 30, 2 (2008), 328–341.Google ScholarDigital Library

33. A. Hosni, C. Rhemann, M. Bleyer, C. Rother, and M. Gelautz. 2011. Fast cost-volume filtering for visual correspondence and beyond. In CVPR, IEEE. 3017–3024.Google Scholar

34. T. Igarashi, T. Moscovich, and J. F. Hughes. 2005. As-rigid-as-possible shape manipulation. ACM Trans. Graph. 24, 3 (2005), 1134–1141.Google ScholarDigital Library

35. J. Kopf, F. Langguth, D. Scharstein, R. Szeliski, and M. Goesele. 2013. Image-based rendering in the gradient domain. ACM Trans. Graph. 32, 6 (2013), 1–9.Google ScholarDigital Library

36. C. Ledig, L. Theis, F. Huszar, J. Caballero, A. Cunningham, A. Acosta, A. Aitken, A. Tejani, J. Totz, and Z. Wang. 2017. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In CVPR, IEEE. 105–114.Google Scholar

37. M. Levoy and P. Hanrahan. 1996. Light field rendering. In SIGGRAPH, ACM. 31–42.Google Scholar

38. C. Li, Y. Yang, K. He, S. Lin, and J. E. Hopcroft. 2020. Single Image Reflection Removal through Cascaded Refinement. In CVPR, IEEE. 3565–3574.Google Scholar

39. Y. Li and M. S. Brown. 2013. Exploiting Reflection Change for Automatic Reflection Removal. In ICCV, IEEE.Google Scholar

40. D. B. Lindell, J. N. P. Martel, and G. Wetzstein. 2020. AutoInt: Automatic Integration for Fast Neural Volume Rendering. arXiv preprint arXiv:2012.01714 (2020).Google Scholar

41. L. Liu, J. Gu, K. Z. Lin, T. S. Chua, and C. Theobalt. 2020a. Neural Sparse Voxel Fields. NeurIPS (2020).Google Scholar

42. Y. L. Liu, W. S. Lai, M. H. Yang, Y. Y. Chuang, and J. B. Huang. 2020b. Learning to See Through Obstructions. In CVPR, IEEE. 14215–14224.Google Scholar

43. S. Lombardi, T. Simon, J. Saragih, G. Schwartz, A. Lehrmann, and Y. Sheikh. 2019. Neural Volumes: Learning Dynamic Renderable Volumes from Images. ACM Trans. Graph. 38, 4CD (2019), 65.1–65.14.Google ScholarDigital Library

44. W. Matusik, C. Buehler, R. Raskar, S. J. Gortler, and L. McMillan. 2000. Image-Based Visual Hulls. In SIGGRAPH, ACM. 6.Google Scholar

45. W. Matusik, H. Pfister, A. Ngan, P. Beardsley, R. Ziegler, and L. Mcmillan. 2002. Image-Based 3D Photography Using Opacity Hulls. ACM Trans. Graph. 21, 3 (2002), 427–437.Google ScholarDigital Library

46. M. Meshry, D. B. Goldman, S. Khamis, H. Hoppe, R. Pandey, N. Snavely, and R. Martin-Brualla. 2019. Neural rerendering in the wild. In CVPR, IEEE. 6878–6887.Google Scholar

47. B. Mildenhall, P. P. Srinivasan, R. Ortiz-Cayon, N. K. Kalantari, R. Ramamoorthi, R. Ng, and A. Kar. 2019. Local light field fusion: Practical view synthesis with prescriptive sampling guidelines. ACM Trans. Graph. 38, 4 (2019), 1–14.Google ScholarDigital Library

48. B. Mildenhall, P. P. Srinivasan, M. Tancik, J. T. Barron, R. Ramamoorthi, and N. Ren. 2020. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In ECCV, Springer.Google Scholar

49. R. A. Newcombe, S. Izadi, O. Hilliges, D. Molyneaux, and A. W. Fitzgibbon. 2011. Kinectfusion: Real-time dense surface mapping and tracking. In 2011 10th IEEE international symposium on mixed and augmented reality. IEEE, 127–136.Google Scholar

50. Nvidia. 2017–2018. Nvidia Corporation. TensorRT. https://developer.nvidia.com/tensorrt.Google Scholar

51. R. Ortiz-Cayon, A. Djelouah, and G. Drettakis. 2015. A Bayesian Approach for Selective Image-Based Rendering Using Superpixels. In 2015 International Conference on 3D Vision. 469–477.Google Scholar

52. E. Penner and L. Zhang. 2017. Soft 3D reconstruction for view synthesis. ACM Trans. Graph. 36, 6 (2017), 1–11.Google ScholarDigital Library

53. N. C. Rakotonirina and A. Rasoanaivo. 2020. ESRGAN+: Further Improving Enhanced Super-Resolution Generative Adversarial Network. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 3637–3641.Google Scholar

54. J. Revaud, P. Weinzaepfel, Z. Harchaoui, and C. Schmid. 2015. Epicflow: Edge-preserving interpolation of correspondences for optical flow. In CVPR, IEEE. 1164–1172.Google Scholar

55. G. Riegler and V. Koltun. 2020. Free View Synthesis. In ECCV, Springer.Google Scholar

56. G. Riegler and V. Koltun. 2021. Stable View Synthesis. In CVPR, IEEE.Google Scholar

57. S. Rodriguez, S. Prakash, P. Hedman, and G. Drettakis. 2020. Image-Based Rendering of Cars using Semantic Labels and Approximate Reflection Flow. Proc. ACM Comput. Graph. Interact. 3 (2020).Google Scholar

58. M. S. Sajjadi, Vemulapalli, and M. R., Brown. 2018. Frame-Recurrent Video Super-Resolution. In CVPR, IEEE. 6626–6634.Google Scholar

59. J. L. Schonberger and J. M. Frahm. 2016. Structure-from-Motion Revisited. In CVPR, IEEE. 4104–4113.Google Scholar

60. J. L. Schönberger, E. Zheng, J. M. Frahm, and M. Pollefeys. 2016. Pixelwise View Selection for Unstructured Multi-View Stereo. In ECCV, Springer, Vol. 9907. 501–518.Google Scholar

61. J. Shade, S. Gortler, L. He, and R. Szeliski. 1998. Layered depth images. In SIGGRAPH, ACM. 231–242.Google Scholar

62. H. Y. Shum and S. B. Kang. 2000. A Review of Image-based Rendering Techniques. Technical Report. Microsoft.Google Scholar

63. S. N. Sinha, J. Kopf, M. Goesele, D. Scharstein, and R. Szeliski. 2012. Image-based rendering for scenes with reflections. ACM Trans. Graph. 31, 4 (2012), 1–10.Google ScholarDigital Library

64. S. N. Sinha, D. Steedly, and R. Szeliski. 2009. Piecewise planar stereo for image-based rendering. In ICCV, IEEE. 1881-1888.Google Scholar

65. V. Sitzmann, M. Zollhöfer, and G. Wetzstein. 2019. Scene representation networks: Continuous 3d-structure-aware neural scene representations. In Advances in Neural Information Processing Systems. 1121–1132.Google Scholar

66. P. P. Srinivasan, R. Tucker, J. T. Barron, R. Ramamoorthi, R. Ng, and N. Snavely. 2019. Pushing the boundaries of view extrapolation with multiplane images. In CVPR, IEEE. 175–184.Google Scholar

67. R. Szeliski. 2006. Image Alignment and Stitching: A Tutorial. MSR-TR-2004-92.Google Scholar

68. X. Tao, H. Gao, R. Liao, J. Wang, and J. Jia. 2017. Detail-Revealing Deep Video Super-Resolution. In ICCV, IEEE. 4482–4490.Google Scholar

69. N. Tatarchuk, B. Karis, M. Drobot, N. Schulz, J. Charles, and T. Mader. 2014. Advances in Real-Time Rendering in Games, Part I (Full Text Not Available). In ACM SIGGRAPH 2014 Courses. Article 10, 1 pages.Google Scholar

70. A. Tewari, O. Fried, J. Thies, V. Sitzmann, S. Lombardi, K. Sunkavalli, R. Martin-Brualla, T. Simon, J. Saragih, M. Nießner, R. Pandey, S. Fanello, G. Wetzstein, J.-Y. Zhu, C. Theobalt, M. Agrawala, E. Shechtman, D. B Goldman, and M. Zollhfer. 2020. State of the Art on Neural Rendering. Computer Graphics Forum 39, 2 (2020), 701–727.Google ScholarCross Ref

71. J. Thies, M. Zollhöfer, and M. Nießner. 2019a. Deferred Neural Rendering: Image Synthesis Using Neural Textures. ACM Trans. Graph. 38, 4, Article 66 (July 2019), 12 pages.Google ScholarDigital Library

72. J. Thies, M. Zollhöfer, and M. Nießner. 2019b. Deferred neural rendering: Image synthesis using neural textures. ACM Trans. Graph. 38, 4 (2019), 1–12.Google ScholarDigital Library

73. X. Wang, K. Chan, K. Yu, C. Dong, and C. C. Loy. 2019. EDVR: Video Restoration With Enhanced Deformable Convolutional Networks. In CVPR, IEEE Workshop. 1954–1963.Google Scholar

74. Z. Wang, J. Chen, and S. C. H Hoi. 2020. Deep Learning for Image Super-resolution: A Survey. IEEE Trans. PAMI (2020), 1–1.Google ScholarCross Ref

75. T. Whelan, M. Goesele, S. J. Lovegrove, J. Straub, S. Green, R. Szeliski, S. Butterfield, S. Verma, R. A. Newcombe, M. Goesele, et al. 2018. Reconstructing scenes with mirror and glass surfaces. ACM Trans. Graph. 37, 4 (2018), 102–1.Google ScholarDigital Library

76. D. N. Wood, D. I. Azuma, K. Aldinger, B. Curless, T. Duchamp, D. H. Salesin, and W. Stuetzle. 2000. Surface light fields for 3D photography. In SIGGRAPH, ACM. 287–296.Google Scholar

77. L. Xiao, S. Nouri, M. Chapman, A. Fix, D. Lanman, and A. Kaplanyan. 2020. Neural supersampling for real-time rendering. ACM Trans. Graph. 39, 4 (2020), 142–1.Google ScholarDigital Library

78. K. Xu, L. Zheng, Z. Yan, G. Yan, E. Zhang, M. Niessner, O. Deussen, D. Cohen-Or, and H. Huang. 2017. Autonomous Reconstruction of Unknown Indoor Scenes Guided by Time-Varying Tensor Fields. ACM Trans. Graph. 36, 6 (2017), 15.Google ScholarDigital Library

79. Z. Xu, S. Bi, K. Sunkavalli, S. Hadap, H. Su, and R. Ramamoorthi. 2019. Deep view synthesis from sparse photometric images. ACM Trans. Graph. 38, 4 (2019), 1–13.Google ScholarDigital Library

80. T. Xue, M. Rubinstein, C. Liu, and W. T. Freeman. 2015. A computational approach for obstruction-free photography. ACM Trans. Graph. 34, 4 (2015), 1–11.Google ScholarDigital Library

81. J. Yang, D. Gong, L. Liu, and Q. Shi. 2018. Seeing deeply and bidirectionally: A deep learning approach for single image reflection removal. In ECCV, Springer. 654–669.Google Scholar

82. C. Zhang and T. Chen. 2003. A survey on image-based rendering. Signal Processing Image Communication 19 (2003), 1–28.Google ScholarCross Ref

83. T. Zhou, R. Tucker, J. Flynn, G. Fyffe, and N. Snavely. 2018. Stereo magnification: Learning view synthesis using multiplane images. In SIGGRAPH, ACM.Google Scholar