“Robust task-based control policies for physics-based characters”

Title:

- Robust task-based control policies for physics-based characters

Session/Category Title:

- Character animation

Abstract:

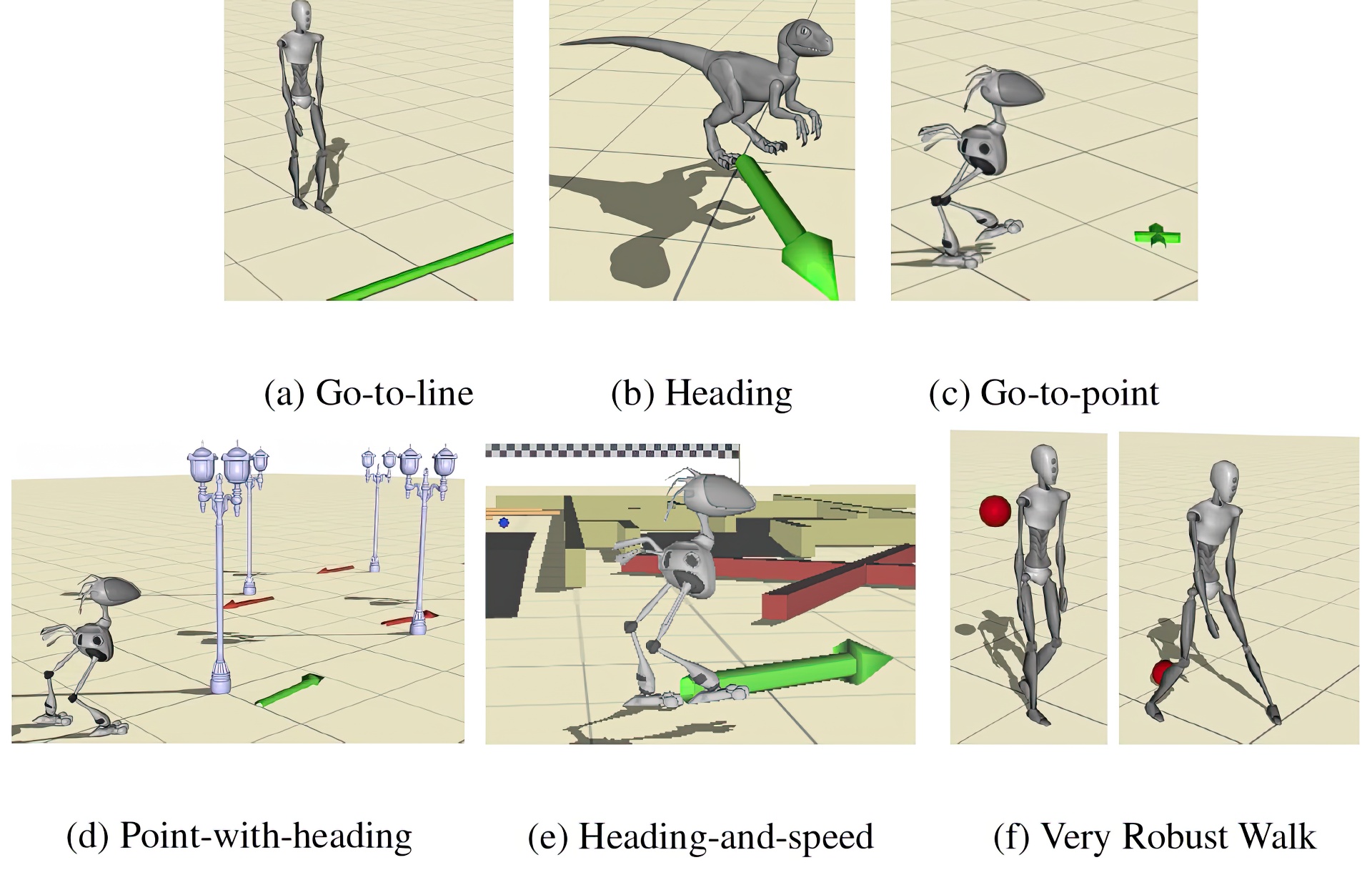

We present a method for precomputing robust task-based control policies for physically simulated characters. This allows for characters that can demonstrate skill and purpose in completing a given task, such as walking to a target location, while physically interacting with the environment in significant ways. As input, the method assumes an abstract action vocabulary consisting of balance-aware, step-based controllers. A novel constrained state exploration phase is first used to define a character dynamics model as well as a finite volume of character states over which the control policy will be defined. An optimized control policy is then computed using reinforcement learning. The final policy spans the cross-product of the character state and task state, and is more robust than the controllers it is constructed from. We demonstrate real-time results for six locomotion-based tasks and on three highly varied bipedal characters. We further provide a game-scenario demonstration.
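As a rough illustration of what a policy defined over the cross-product of character state and task state can look like, the following minimal sketch runs tabular value iteration on a toy problem. It is not the paper's method: the three-valued gait index, the integer distance-to-target task state, the three-action vocabulary, the dynamics in `step`, the reward, and the discount `GAMMA` are all hypothetical stand-ins chosen to keep the example small.

```python
from itertools import product

# Hypothetical toy model (an assumption, not taken from the paper):
# character state = gait index, task state = remaining distance to a target.
CHARACTER_STATES = [0, 1, 2]        # 0 = standing, 1 = slow walk, 2 = fast walk
TASK_STATES = list(range(11))       # distance to target, in steps (0..10)
ACTIONS = [-1, 0, 1]                # slow down, keep gait, speed up
GAMMA = 0.9                         # discount factor (assumed value)


def step(character, distance, action):
    """Toy dynamics: change gait by `action`, then advance by the new gait."""
    new_character = min(max(character + action, 0), 2)
    # Overshooting the target leaves the character on the far side of it.
    new_distance = abs(distance - new_character)
    return new_character, new_distance


def backup(values, character, distance, action):
    """One-step lookahead: immediate reward plus discounted next-state value."""
    new_character, new_distance = step(character, distance, action)
    reward = -float(new_distance)   # penalize remaining distance to the target
    return reward + GAMMA * values[(new_character, new_distance)]


def value_iteration(sweeps=200):
    """Compute values and a greedy policy over (character, task) state pairs."""
    states = list(product(CHARACTER_STATES, TASK_STATES))
    values = {s: 0.0 for s in states}
    for _ in range(sweeps):
        values = {
            (c, d): max(backup(values, c, d, a) for a in ACTIONS)
            for (c, d) in states
        }
    policy = {
        (c, d): max(ACTIONS, key=lambda a: backup(values, c, d, a))
        for (c, d) in states
    }
    return values, policy


if __name__ == "__main__":
    values, policy = value_iteration()
    state = (0, 10)                 # standing, ten steps from the target
    for _ in range(12):
        print(state, "->", policy[state])
        state = step(*state, policy[state])
```

The point of the sketch is only the indexing: the policy table is keyed by a pair of character state and task state, so the same character state can map to different actions depending on the task.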

References:

1. Abe, Y., da Silva, M., and Popović, J. 2007. Multiobjective control with frictional contacts. In Proc. ACM SIGGRAPH/EG Symposium on Computer Animation, 249–258.

2. Atkeson, C. G., and Morimoto, J. 2003. Nonparametric representation of policies and value functions: A trajectory-based approach. In Advances in Neural Information Processing Systems 15, 1611–1618.

3. Atkeson, C. G., and Stephens, B. 2007. Random sampling of states in dynamic programming. In Proc. Neural Information Processing Systems Conf.

4. Byl, K., and Tedrake, R. 2008. Approximate optimal control of the compass gait on rough terrain. In Proc. IEEE Int’l Conf. on Robotics and Automation.

5. Chestnutt, J., Lau, M., Cheung, K. M., Kuffner, J., Hodgins, J. K., and Kanade, T. 2005. Footstep planning for the Honda ASIMO humanoid. In Proc. IEEE Int’l Conf. on Robotics and Automation.

6. Chestnutt, J. 2007. Navigation Planning for Legged Robots. PhD thesis, Carnegie Mellon University.

7. Choi, M., Lee, J., and Shin, S. 2003. Planning biped locomotion using motion capture data and probabilistic roadmaps. ACM Transactions on Graphics 22, 2, 182–203.

8. Coros, S., Beaudoin, P., Yin, K., and van de Panne, M. 2008. Synthesis of constrained walking skills. ACM Trans. on Graphics (Proc. SIGGRAPH ASIA) 27, 5, Article 113.

9. da Silva, M., Abe, Y., and Popović, J. 2008. Interactive simulation of stylized human locomotion. ACM Transactions on Graphics (Proc. SIGGRAPH) 27, 3, Article 82.

10. da Silva, M., Durand, F., and Popović, J. 2009. Linear Bellman combination for control of character animation. ACM Trans. on Graphics (Proc. SIGGRAPH) 28, 3, Article 82.

11. Ernst, D., Geurts, P., and Wehenkel, L. 2005. Tree-based batch mode reinforcement learning. Journal of Machine Learning Research 6, 503–556.

12. Faloutsos, P., van de Panne, M., and Terzopoulos, D. 2001. Composable controllers for physics-based character animation. In Proc. ACM SIGGRAPH, 251–260.

13. Hodgins, J., Wooten, W., Brogan, D., and O’Brien, J. 1995. Animating human athletics. In Proc. ACM SIGGRAPH, 71–78.

14. Ikemoto, L., Arikan, O., and Forsyth, D. A. 2005. Learning to move autonomously in a hostile world. Tech. Rep. UCB/CSD-05-1395, EECS Department, University of California, Berkeley, Jun.

15. Kajita, S., Kanehiro, F., Kaneko, K., Fujiwara, K., Harada, K., Yokoi, K., and Hirukawa, H. 2003. Biped walking pattern generation by using preview control of zero-moment point. In Proc. IEEE Int’l Conf. on Robotics and Automation.

16. Khatib, O., Sentis, L., Park, J., and Warren, J. 2004. Whole body dynamic behavior and control of human-like robots. International Journal of Humanoid Robotics 1, 1, 29–43.

17. Laszlo, J. F., van de Panne, M., and Fiume, E. 1996. Limit cycle control and its application to the animation of balancing and walking. In Proc. ACM SIGGRAPH, 155–162.

18. Lau, M., and Kuffner, J. J. 2005. Behavior planning for character animation. In ACM SIGGRAPH/EG Symposium on Computer Animation.

19. Lau, M., and Kuffner, J. 2006. Precomputed search trees: Planning for interactive goal-driven animation. In ACM SIGGRAPH/EG Symposium on Computer Animation, 299–308.

20. Lee, J., and Lee, K. H. 2004. Precomputing avatar behavior from human motion data. In ACM SIGGRAPH/EG Symposium on Computer Animation, 79–87.

21. Lo, W., and Zwicker, M. 2008. Real-time planning for parameterized human motion. In ACM SIGGRAPH/EG Symposium on Computer Animation.

22. McCann, J., and Pollard, N. 2007. Responsive characters from motion fragments. ACM Transactions on Graphics (Proc. SIGGRAPH) 26, 3, Article 6.

23. Morimoto, J., and Atkeson, C. G. 2007. Learning biped locomotion: Application of Poincaré-map-based reinforcement learning. IEEE Robotics & Automation Magazine 14, 2, 41–51.

24. Morimoto, J., Atkeson, C. G., Endo, G., and Cheng, G. 2007. Improving humanoid locomotive performance with learnt approximated dynamics via Gaussian processes for regression. In Proc. IEEE Int’l Conf. on Robotics and Automation.

25. Muico, U., Lee, Y., Popović, J., and Popović, Z. 2009. Contact-aware nonlinear control of dynamic characters. ACM Transactions on Graphics (Proc. SIGGRAPH) 28, 3, Article 81.

26. ODE. Open dynamics engine, http://www.ode.org/.

27. Raibert, M. H., and Hodgins, J. K. 1991. Animation of dynamic legged locomotion. In Proc. ACM SIGGRAPH, 349–358.

28. Sharon, D., and van de Panne, M. 2005. Synthesis of controllers for stylized planar bipedal walking. In Proc. IEEE Int’l Conf. on Robotics and Automation.

29. Sok, K. W., Kim, M., and Lee, J. 2007. Simulating biped behaviors from human motion data. ACM Trans. on Graphics (Proc. SIGGRAPH) 26, 3, Article 107.

30. Sutton, R., and Barto, A. 1998. Reinforcement Learning: An Introduction. MIT Press.

31. Tedrake, R., Zhang, T., and Seung, H. 2004. Stochastic policy gradient reinforcement learning on a simple 3D biped. In Proc. Int’l Conf. on Intelligent Robots and Systems, vol. 3.

32. Treuille, A., Lee, Y., and Popović, Z. 2007. Near-optimal character animation with continuous control. ACM Transactions on Graphics (Proc. SIGGRAPH) 26, 3, Article 7.

33. Yin, K., Loken, K., and van de Panne, M. 2007. SIMBICON: Simple biped locomotion control. ACM Transactions on Graphics (Proc. SIGGRAPH) 26, 3, Article 105.

34. Yoshida, E., Belousov, I., Esteves, C., and Laumond, J. 2005. Humanoid motion planning for dynamic tasks. In Humanoid Robots.

35. Zhao, L., and Safonova, A. 2008. Achieving good connectivity in motion graphs. In ACM SIGGRAPH/EG Symposium on Computer Animation.

36. Zordan, V., Majkowska, A., Chiu, B., and Fast, M. 2005. Dynamic response for motion capture animation. ACM Transactions on Graphics (Proc. SIGGRAPH) 24, 3, 697–701.