“Real-time 3D rendering using depth-based geometry reconstruction and view-dependent texture mapping” by Chen, Bolas and Suma

Conference:

Type(s):

Title:

- Real-time 3D rendering using depth-based geometry reconstruction and view-dependent texture mapping

Presenter(s)/Author(s):

Entry Number:

- 80

Abstract:

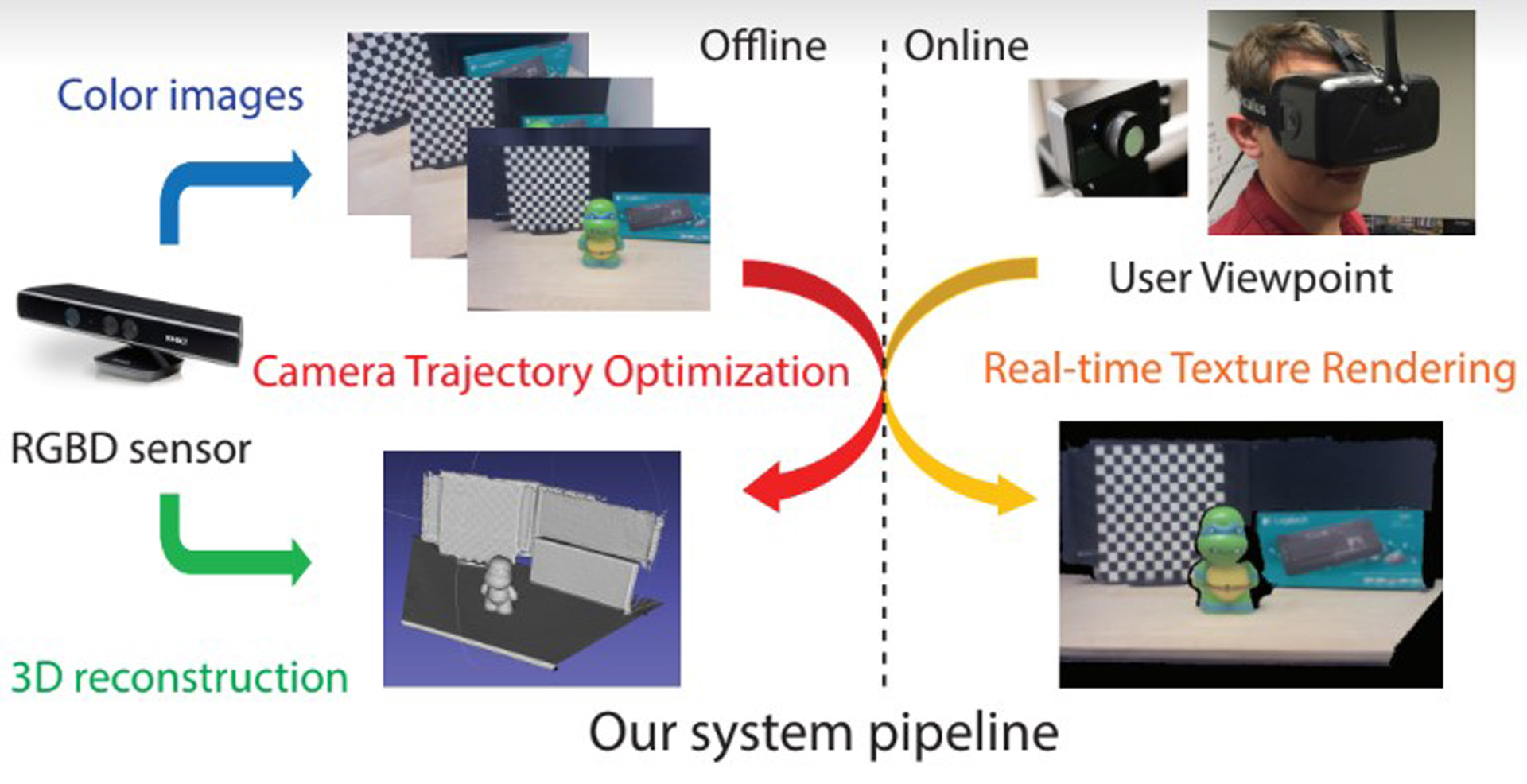

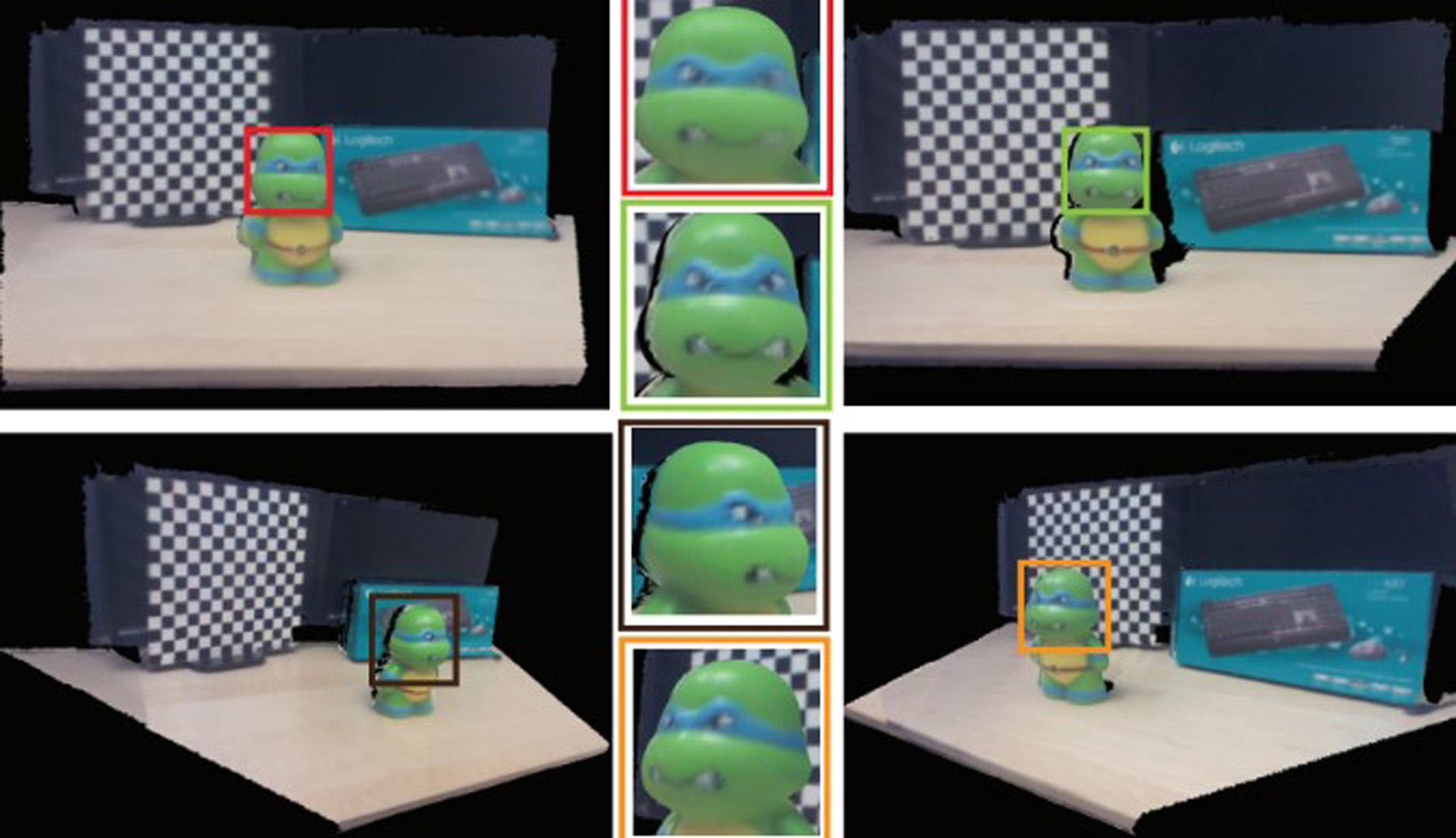

With the recent proliferation of high-fidelity head-mounted displays (HMDs), there is increasing demand for realistic 3D content that can be integrated into virtual reality environments. However, creating photorealistic models is not only difficult but also time-consuming. A simpler alternative involves scanning objects in the real world and rendering their digitized counterparts in the virtual world. Objects can be captured by performing a 3D scan with widely available consumer-grade RGB-D cameras. This process involves reconstructing the geometric model from depth images generated using a structured light or time-of-flight sensor. The colormap is determined by fusing data from multiple color images captured during the scan. Existing methods compute the color of each vertex by averaging the colors from all these images. Blending colors in this manner creates low-fidelity models that appear blurry (Figure 1, right). Furthermore, this approach yields textures with fixed lighting that is baked onto the model. This limitation becomes more apparent when the model is viewed in head-tracked virtual reality, as the illumination (e.g., specular reflections) does not change appropriately based on the user’s viewpoint.
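The difference between averaged vertex colors and a view-dependent alternative can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the authors' implementation: the function names `average_color` and `view_dependent_color`, the cosine-power weighting, the `power` parameter, and the toy two-camera data are all hypothetical. It shows only why equal-weight blending bakes a single color into a vertex, while weighting by viewing direction lets the color change with the viewpoint.

```python
import math


def average_color(samples):
    """Baseline: blend the colors observed for one vertex with equal weights."""
    n = len(samples)
    return tuple(sum(c[i] for c in samples) / n for i in range(3))


def _normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def view_dependent_color(samples, capture_dirs, view_dir, power=8.0):
    """Hypothetical weighting: favor images captured from directions
    close to the current viewing direction (cosine raised to `power`)."""
    v = _normalize(view_dir)
    weights = []
    for d in capture_dirs:
        cos_angle = max(0.0, sum(a * b for a, b in zip(_normalize(d), v)))
        weights.append(cos_angle ** power)
    total = sum(weights)
    if total == 0.0:
        # No capture direction faces the viewer; fall back to the baseline.
        return average_color(samples)
    return tuple(
        sum(w * c[i] for w, c in zip(weights, samples)) / total
        for i in range(3)
    )


# Toy data: camera 0 saw a specular highlight, camera 1 saw the diffuse color.
samples = [(1.0, 1.0, 1.0), (0.2, 0.2, 0.2)]
capture_dirs = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]

baked = average_color(samples)
seen_front = view_dependent_color(samples, capture_dirs, (0.0, 0.0, 1.0))
seen_side = view_dependent_color(samples, capture_dirs, (1.0, 0.0, 0.0))
```

In this toy case the averaged color is a fixed gray regardless of where the viewer stands, whereas the view-dependent version reproduces the highlight from the front and the diffuse color from the side.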