“Quality-driven Poisson-guided autoscanning” by Wu, Sun, Long, Huang, Cohen-Or, et al. …

Title:

- Quality-driven Poisson-guided autoscanning

Session/Category Title: Data In, Surface Out

Abstract:

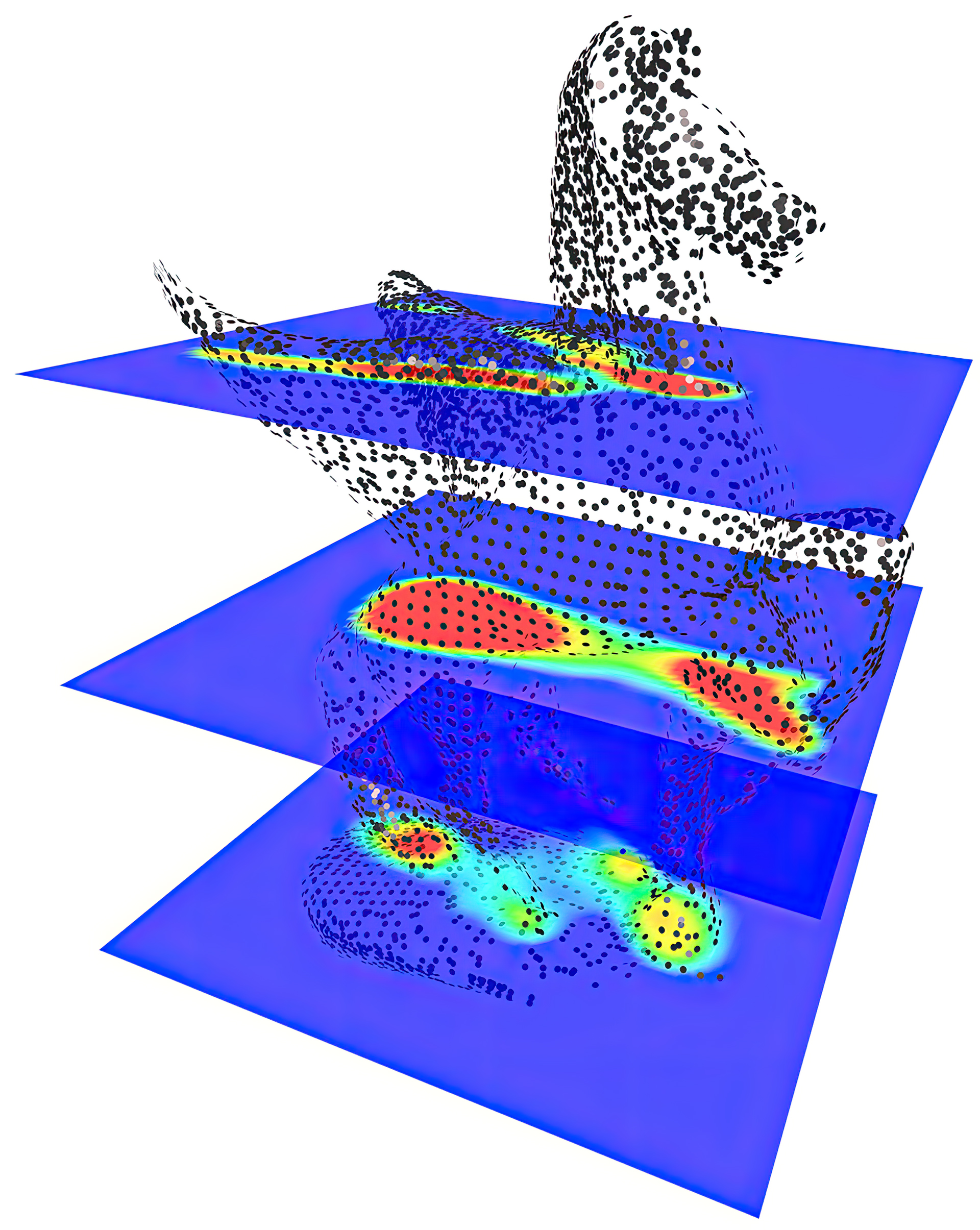

We present a quality-driven, Poisson-guided autonomous scanning method. Unlike previous scan planning techniques, we do not aim to minimize the number of scans needed to cover the object’s surface, but rather to ensure high-quality scanning of the model. We achieve this by placing the scanner at strategically selected Next-Best-Views (NBVs) that progressively capture the geometric details of the object until both completeness and high fidelity are reached. The technique is based on the analysis of a Poisson field and its geometric relation with an input scan. We generate a confidence map that reflects the quality/fidelity of the estimated Poisson iso-surface. The confidence map guides the generation of a viewing vector field, which is then used to compute a set of NBVs. We applied the algorithm on two different robotic platforms: a PR2 mobile robot and a one-arm industrial robot. We demonstrate the advantages of our method through a number of autonomous high-quality scans of complex physical objects, as well as performance comparisons against state-of-the-art methods.
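The step from a confidence map to a set of NBVs can be illustrated with a toy sketch. This is not the authors' algorithm: it assumes per-sample confidence scores in [0, 1] are already available (the paper derives its confidence map from the Poisson field, which is not reproduced here), treats each low-confidence sample's normal as its viewing vector, places a candidate scanner at a fixed distance along that normal, and greedily keeps the lowest-confidence candidates that lie at least a minimum distance apart. The names `Sample`, `View`, `select_nbvs` and the parameters `scan_distance`, `min_separation`, `max_views` and `confidence_threshold` are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sample:
    position: Vec3     # point on the estimated iso-surface
    normal: Vec3       # assumed unit outward normal at that point
    confidence: float  # hypothetical score in [0, 1]; low means poorly captured


@dataclass
class View:
    eye: Vec3        # candidate scanner position
    direction: Vec3  # unit viewing direction, pointing back at the surface


def select_nbvs(samples: List[Sample], scan_distance: float,
                min_separation: float, max_views: int,
                confidence_threshold: float = 0.9) -> List[View]:
    """Greedy toy NBV selection (an assumption, not the paper's method)."""
    # Only samples below the threshold still need another scan.
    weak = [s for s in samples if s.confidence < confidence_threshold]
    # Visit the least reliable regions first.
    weak.sort(key=lambda s: s.confidence)

    views: List[View] = []
    for s in weak:
        if len(views) >= max_views:
            break
        # Simplified viewing vector: back off along the normal, look inward.
        eye = tuple(p + scan_distance * n for p, n in zip(s.position, s.normal))
        # Suppress candidates too close to an already chosen view.
        if all(math.dist(eye, v.eye) >= min_separation for v in views):
            views.append(View(eye=eye, direction=tuple(-n for n in s.normal)))
    return views
```

For example, with samples at the +x and +y poles of a unit sphere (confidence 0.2 and 0.1), a nearby sample just off the +x pole (0.25) and a well-scanned sample at +z (0.95), the sketch returns two views: one at (0, 2, 0) first, then one at (2, 0, 0), while the near-duplicate candidate is suppressed. A real planner would also account for reachability of the robot and scanner constraints, which this sketch ignores.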

References:

1. Banta, J. E., Wong, L. R., Dumont, C., and Abidi, M. A. 2000. A next-best-view system for autonomous 3D object reconstruction. IEEE Trans. Systems, Man & Cybernetics 30, 5, 589–598.

2. Berger, M., Levine, J. A., Nonato, L. G., Taubin, G., and Silva, C. T. 2013. A benchmark for surface reconstruction. ACM Trans. on Graphics (Proc. of SIGGRAPH) 32, 2, 20:1–20:17.

3. Berger, M., Tagliasacchi, A., Seversky, L. M., Alliez, P., Levine, J. A., Sharf, A., and Silva, C. 2014. State of the art in surface reconstruction from point clouds. Eurographics STAR, 165–185.

4. Blaer, P. S., and Allen, P. K. 2007. Data acquisition and view planning for 3D modeling tasks. In Proc. IEEE Int. Conf. on Intelligent Robots & Systems, 417–422.

5. Carr, J. C., Beatson, R. K., Cherrie, J. B., Mitchell, T. J., Fright, W. R., McCallum, B. C., and Evans, T. R. 2001. Reconstruction and representation of 3D objects with radial basis functions. SIGGRAPH, 67–76.

6. Chen, S., Li, Y., and Kwok, N. M. 2011. Active vision in robotic systems: A survey of recent developments. Int. J. Robotic Research 30, 1343–1377.

7. Chen, J., Bautembach, D., and Izadi, S. 2013. Scalable real-time volumetric surface reconstruction. ACM Trans. on Graphics (Proc. of SIGGRAPH) 32, 4, 113:1–113:16.

8. Connolly, C. 1985. The determination of next best views. In Proc. IEEE Int. Conf. on Robotics & Automation, vol. 2, 432–435.

9. Corsini, M., Cignoni, P., and Scopigno, R. 2012. Efficient and flexible sampling with blue noise properties of triangular meshes. IEEE Trans. Visualization & Computer Graphics 18, 6, 914–924.

10. Davis, J., Marschner, S. R., Garr, M., and Levoy, M. 2002. Filling holes in complex surfaces using volumetric diffusion. In 3DPVT, 428–441.

11. Dunn, E., and Frahm, J.-M. 2009. Next best view planning for active model improvement. In Proc. Conf. on British Machine Vision, 1–11.

12. Gschwandtner, M., Kwitt, R., Uhl, A., and Pree, W. 2011. Blensor: blender sensor simulation toolbox. In Advances in Visual Computing. 199–208.

13. Harary, G., Tal, A., and Grinspun, E. 2014. Context-based coherent surface completion. ACM Trans. on Graphics 33, 1, 5:1–5:12.

14. Huang, H., Li, D., Zhang, H., Ascher, U., and Cohen-Or, D. 2009. Consolidation of unorganized point clouds for surface reconstruction. ACM Trans. on Graphics (Proc. of SIGGRAPH Asia) 28, 5, 176:1–176:7.

15. Huang, H., Wu, S., Cohen-Or, D., Gong, M., Zhang, H., Li, G., and Chen, B. 2013. L1-medial skeleton of point cloud. ACM Trans. on Graphics (Proc. of SIGGRAPH) 32, 4, 65:1–65:8.

16. Kazhdan, M., and Hoppe, H. 2013. Screened Poisson surface reconstruction. ACM Trans. on Graphics 32, 1, 29:1–29:13.

17. Kazhdan, M., Bolitho, M., and Hoppe, H. 2006. Poisson surface reconstruction. Proc. Eurographics Symp. on Geometry Processing, 61–70.

18. Khalfaoui, S., Seulin, R., Fougerolle, Y., and Fofi, D. 2013. An efficient method for fully automatic 3D digitization of unknown objects. Computers in Industry 64, 9, 1152–1160.

19. Kim, Y., Mitra, N., Huang, Q., and Guibas, L. J. 2013. Guided real-time scanning of indoor environments. Computer Graphics Forum (Proc. Pacific Conf. on Computer Graphics & Applications) 32, 7.

20. Kriegel, S., Rink, C., Bodenmüller, T., Narr, A., Suppa, M., and Hirzinger, G. 2013. Efficient next-best-scan planning for autonomous 3D surface reconstruction of unknown objects. J. Real-Time Image Processing, 1–21.

21. Li, H., Vouga, E., Gudym, A., Luo, L., Barron, J. T., and Gusev, G. 2013. 3D self-portraits. ACM Trans. on Graphics (Proc. of SIGGRAPH Asia) 32, 6, 187:1–187:9.

22. Lipman, Y., Cohen-Or, D., Levin, D., and Tal-Ezer, H. 2007. Parameterization-free projection for geometry reconstruction. ACM Trans. on Graphics (Proc. of SIGGRAPH) 26, 3, 22:1–22:6.

23. Low, K.-L., and Lastra, A. 2006. An adaptive hierarchical next-best-view algorithm for 3D reconstruction of indoor scenes. In Proc. Pacific Conf. on Computer Graphics & Applications.

24. Masuda, T. 2002. Registration and integration of multiple range images by matching signed distance fields for object shape modeling. Computer Vision and Image Understanding 87, 1, 51–65.

25. Maver, J., and Bajcsy, R. 1993. Occlusions as a guide for planning the next view. IEEE Trans. Pattern Analysis & Machine Intelligence 15, 5, 417–433.

26. Mullen, P., de Goes, F., Desbrun, M., Cohen-Steiner, D., and Alliez, P. 2010. Signing the unsigned: Robust surface reconstruction from raw pointsets. Computer Graphics Forum (Proc. Eurographics Symp. on Geometry Processing) 29, 5, 1733–1741.

27. Newcombe, R. A., Davison, A. J., Izadi, S., Kohli, P., Hilliges, O., Shotton, J., Molyneaux, D., Hodges, S., Kim, D., and Fitzgibbon, A. 2011. KinectFusion: Real-time dense surface mapping and tracking. In Proc. IEEE Int. Symp. on Mixed and Augmented Reality, 127–136.

28. Pauly, M., Mitra, N. J., and Guibas, L. J. 2004. Uncertainty and variability in point cloud surface data. In Proc. Eurographics Conf. on Point-Based Graphics, 77–84.

29. Pito, R. 1996. A sensor-based solution to the next best view problem. In Proc. IEEE Int. Conf. on Pattern Recognition, vol. 1, 941–945.

30. Sagawa, R., and Ikeuchi, K. 2008. Hole filling of a 3D model by flipping signs of a signed distance field in adaptive resolution. IEEE Trans. Pattern Analysis & Machine Intelligence 30, 4, 686–699.

31. Scott, W. R., Roth, G., and Rivest, J.-F. 2003. View planning for automated three-dimensional object reconstruction and inspection. ACM Computing Surveys 35, 1, 64–96.

32. Seversky, L. M., and Yin, L. 2012. A global parity measure for incomplete point cloud data. In Computer Graphics Forum (Proc. Pacific Conf. on Computer Graphics & Applications), vol. 31, 2097–2106.

33. Sharf, A., Alexa, M., and Cohen-Or, D. 2004. Context-based surface completion. ACM Trans. on Graphics (Proc. of SIGGRAPH) 23, 3, 878–887.

34. Tagliasacchi, A., Zhang, H., and Cohen-Or, D. 2009. Curve skeleton extraction from incomplete point cloud. ACM Trans. on Graphics (Proc. of SIGGRAPH) 28, 3, 71:1–71:9.

35. Trucco, E., Umasuthan, M., Wallace, A., and Roberto, V. 1997. Model-based planning of optimal sensor placements for inspection. In Proc. IEEE Int. Conf. on Robotics & Automation, vol. 13, 182–194.

36. Vásquez-Gómez, J. I., López-Damian, E., and Sucar, L. E. 2009. View planning for 3D object reconstruction. In Proc. IEEE Int. Conf. on Intelligent Robots & Systems, 4015–4020.

37. Wenhardt, S., Deutsch, B., Angelopoulou, E., and Niemann, H. 2007. Active visual object reconstruction using D-, E-, and T-optimal next best views. In Proc. IEEE Conf. on Computer Vision & Pattern Recognition, 1–7.

38. Wheeler, M. D., Sato, Y., and Ikeuchi, K. 1998. Consensus surfaces for modeling 3D objects from multiple range images. In Proc. Int. Conf. on Computer Vision, 917–924.

39. Zhou, Q.-Y., and Koltun, V. 2013. Dense scene reconstruction with points of interest. ACM Trans. on Graphics (Proc. of SIGGRAPH) 32, 4, 112:1–112:8.