“Phase-functioned neural networks for character control” by Holden, Komura and Saito

Title:

- Phase-functioned neural networks for character control

Session/Category Title:

- Learning to Move

Presenter(s)/Author(s):

- Daniel Holden, Taku Komura, and Jun Saito

Abstract:

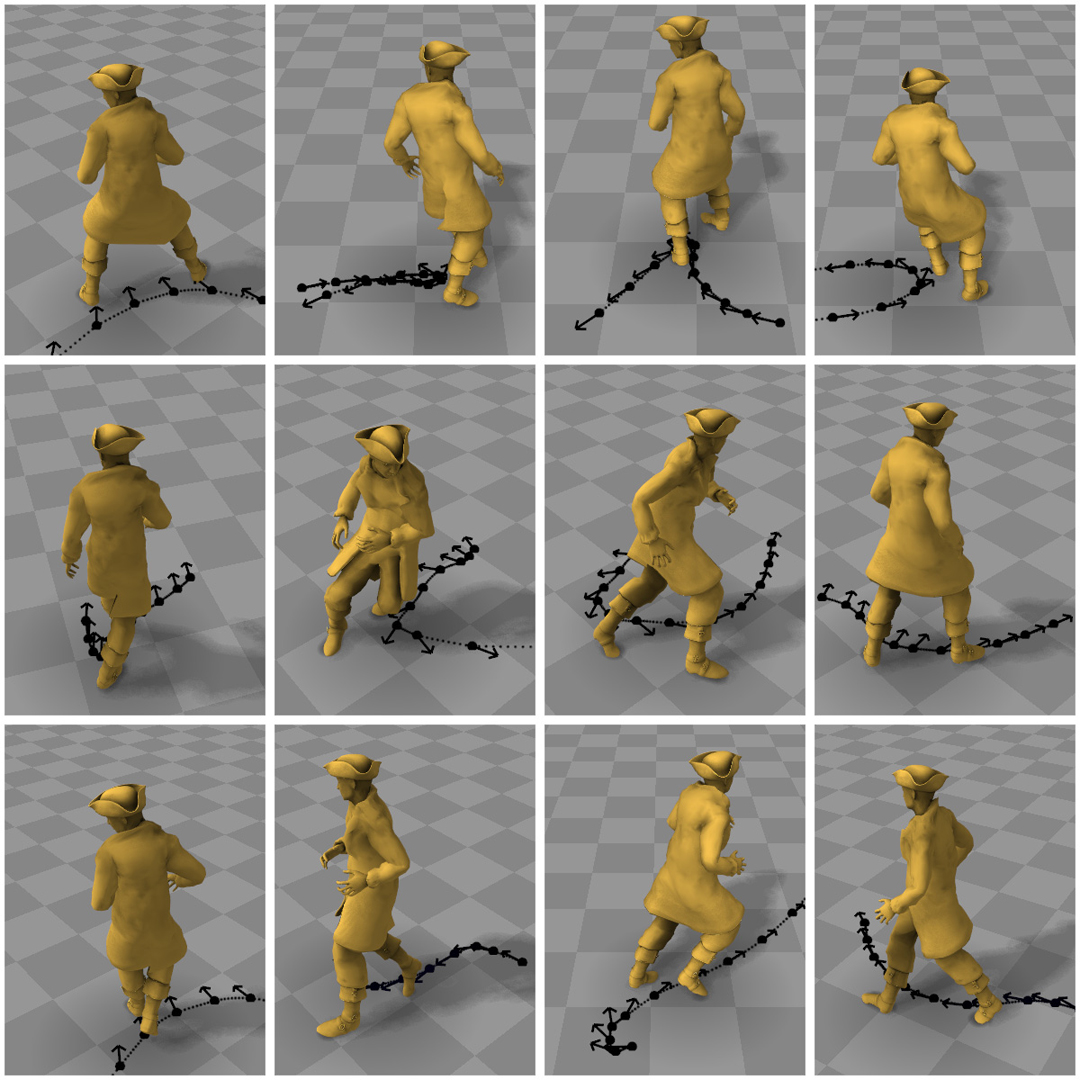

We present a real-time character control mechanism using a novel neural network architecture called a Phase-Functioned Neural Network. In this network structure, the weights are computed via a cyclic function which uses the phase as an input. Along with the phase, our system takes as input the user controls, the previous state of the character, and the geometry of the scene, and automatically produces high-quality motions that achieve the desired user control. The entire network is trained in an end-to-end fashion on a large dataset of locomotion, such as walking, running, jumping, and climbing movements, fitted into virtual environments. Our system can therefore automatically produce motions in which the character adapts to different geometric environments, such as walking and running over rough terrain, climbing over large rocks, jumping over obstacles, and crouching under low ceilings. Our network architecture produces higher-quality results than time-series autoregressive models such as LSTMs because it deals explicitly with the latent variable of motion relating to the phase. Once trained, our system is also extremely fast and compact, requiring only milliseconds of execution time and a few megabytes of memory, even when trained on gigabytes of motion data. Our work is most appropriate for controlling characters in interactive scenes such as computer games and virtual reality systems.
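The idea that the network weights are computed by a cyclic function of the phase can be illustrated with a minimal sketch. The abstract does not say which cyclic function is used, so everything specific below is an assumption made for illustration: a cyclic Catmull-Rom spline over four sets of control parameters, a single dense layer with an ELU activation, and the hypothetical names `phase_function`, `dense_layer`, `predict`, and `CONTROL_SETS`. In a trained system the control parameters would be learned; here they are fixed toy values.

```python
import math

TWO_PI = 2.0 * math.pi


def catmull_rom(y0, y1, y2, y3, mu):
    """Cubic Catmull-Rom interpolation between y1 and y2 for mu in [0, 1]."""
    return (
        y1
        + mu * (0.5 * y2 - 0.5 * y0)
        + mu ** 2 * (y0 - 2.5 * y1 + 2.0 * y2 - 0.5 * y3)
        + mu ** 3 * (1.5 * y1 - 1.5 * y2 + 0.5 * y3 - 0.5 * y0)
    )


def phase_function(control_sets, phase):
    """Blend the control parameter sets cyclically according to the phase.

    control_sets: list of K flat parameter lists (K = 4 in this sketch).
    phase: angle in radians; any value is wrapped into [0, 2*pi).
    """
    k = len(control_sets)
    t = k * (phase % TWO_PI) / TWO_PI   # position along the closed spline
    i = int(t) % k                      # index of the segment's start point
    mu = t - int(t)                     # fractional position in the segment
    a, b, c, d = (control_sets[(i + o) % k] for o in (-1, 0, 1, 2))
    return [catmull_rom(w0, w1, w2, w3, mu)
            for w0, w1, w2, w3 in zip(a, b, c, d)]


def elu(v):
    """Exponential linear unit (assumed activation for this sketch)."""
    return v if v > 0 else math.exp(v) - 1.0


def dense_layer(x, params, n_out):
    """Apply one dense layer; params holds row-major weights, then biases."""
    n_in = len(x)
    out = []
    for r in range(n_out):
        row = params[r * n_in:(r + 1) * n_in]
        bias = params[n_out * n_in + r]
        out.append(sum(w * xi for w, xi in zip(row, x)) + bias)
    return out


# Hypothetical toy parameters: 2 inputs, 1 output, so each control set
# holds 2 weights followed by 1 bias.
CONTROL_SETS = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [-1.0, 0.0, 0.0],
    [0.0, -1.0, 0.0],
]


def predict(x, phase):
    """Run the toy network with weights generated from the current phase."""
    params = phase_function(CONTROL_SETS, phase)
    return [elu(v) for v in dense_layer(x, params, n_out=1)]


if __name__ == "__main__":
    for phase in (0.0, math.pi / 2, math.pi):
        print(phase, predict([0.5, 2.0], phase))
```

The same input produces different outputs at different phases because the layer's weights change smoothly with the phase, and they repeat after a full cycle of 2π. A real controller would feed in the user controls, previous character state, and scene geometry named in the abstract, and would use several layers.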
