“PanoSynthVR: View Synthesis From A Single Input Panorama with Multi-Cylinder Images” by Gadgil, John, Zollmann and Ventura

Conference:

Type(s):

Title:

- PanoSynthVR: View Synthesis From A Single Input Panorama with Multi-Cylinder Images

Presenter(s)/Author(s):

Entry Number:

- 11

Abstract:

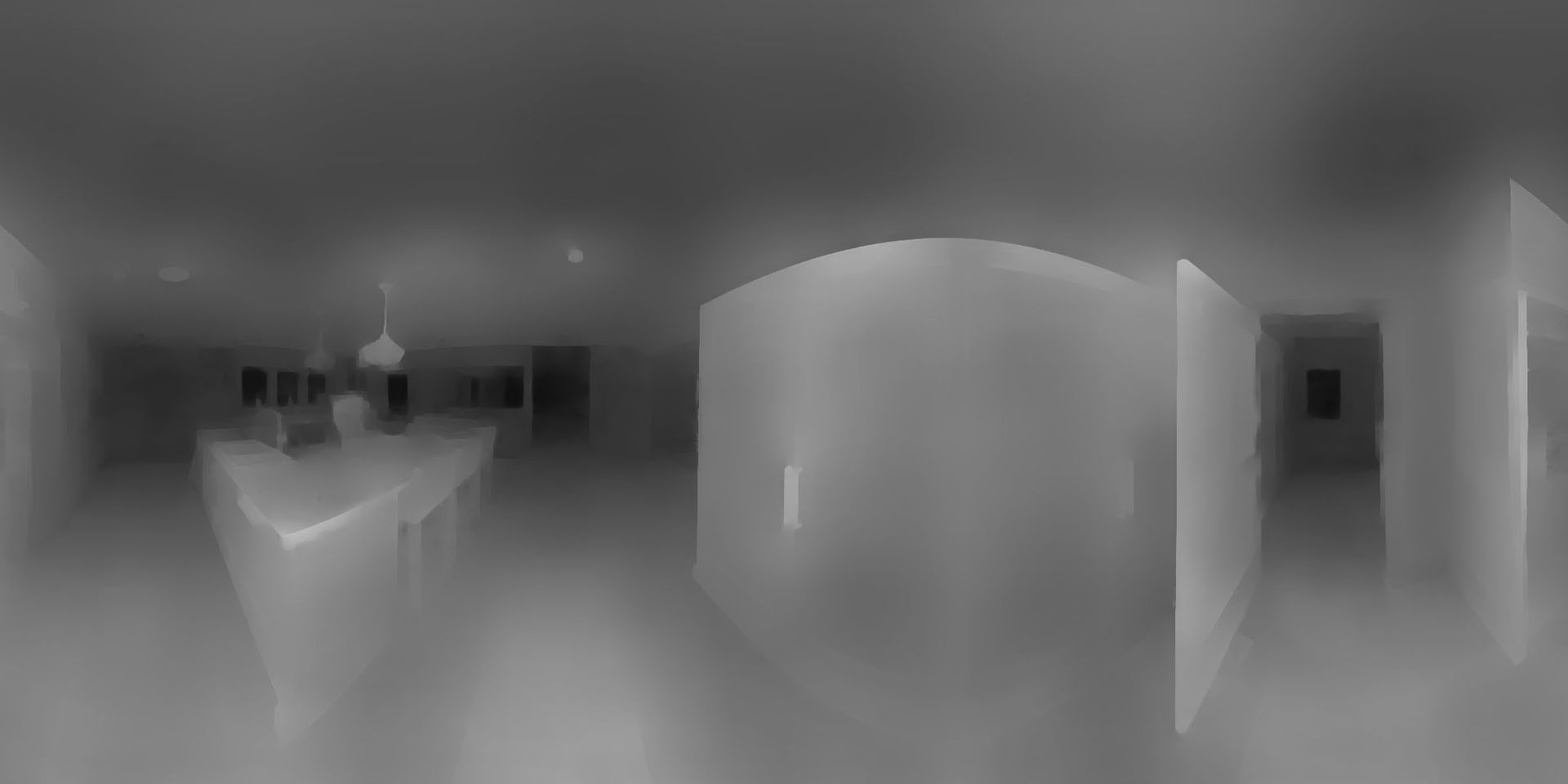

We introduce a method to automatically convert a single input panorama into a multi-cylinder image representation that supports real-time, free-viewpoint view synthesis for virtual reality. We apply an existing convolutional neural network, trained on pinhole images, to a cylindrical panorama, using wrap padding to ensure agreement between the left and right edges. The network outputs a stack of semi-transparent panoramas at varying depths, which can be easily rendered and composited with over blending. Initial experiments show that the method produces convincing parallax and cleaner object boundaries than a textured mesh representation.
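
The two operations named in the abstract, wrap padding and over blending, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `wrap_pad`, `unpad`, and `composite_over` are hypothetical, the network itself is omitted, a single image row or pixel stands in for a full panorama, and colors are assumed to be non-premultiplied values in [0, 1].

```python
def wrap_pad(row, pad):
    """Circularly pad one panorama row by `pad` pixels on each side.

    The left border is filled from the right end of the row and vice versa,
    so a convolution near either edge sees the pixels across the seam.
    """
    if not 0 <= pad <= len(row):
        raise ValueError("pad must be between 0 and the row width")
    return row[len(row) - pad:] + row + row[:pad]


def unpad(row, pad):
    """Drop the `pad` border pixels again (e.g. after the network has run)."""
    return row[pad:len(row) - pad]


def composite_over(layers):
    """Blend (color, alpha) samples for one pixel with the over operator.

    `layers` is ordered from the farthest cylinder to the nearest one
    (an assumption of this sketch); each nearer layer is placed over the
    accumulated result.
    """
    out = 0.0
    for color, alpha in layers:
        out = color * alpha + out * (1.0 - alpha)
    return out


if __name__ == "__main__":
    row = [1, 2, 3, 4]
    padded = wrap_pad(row, 1)
    print(padded)            # border pixels come from the opposite edge
    print(unpad(padded, 1))  # original row recovered

    # An opaque far layer behind a half-transparent dark near layer.
    print(composite_over([(1.0, 1.0), (0.0, 0.5)]))
```

In a real pipeline the same idea would apply per channel and per pixel across whole cylindrical layers, with the padding applied along the horizontal (azimuth) axis only.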

Website:

Acknowledgements:

This work was partially supported by NSF Award No. 1924008 and the New Zealand Marsden Council through Grant UOO1724.