“On-set performance capture of multiple actors with a stereo camera” by Wu, Stoll, Valgaerts and Theobalt

Title:

- On-set performance capture of multiple actors with a stereo camera

Session/Category Title:

- Dynamics for People, Plants and Clothes

Presenter(s)/Author(s):

- Wu, Stoll, Valgaerts and Theobalt

Abstract:

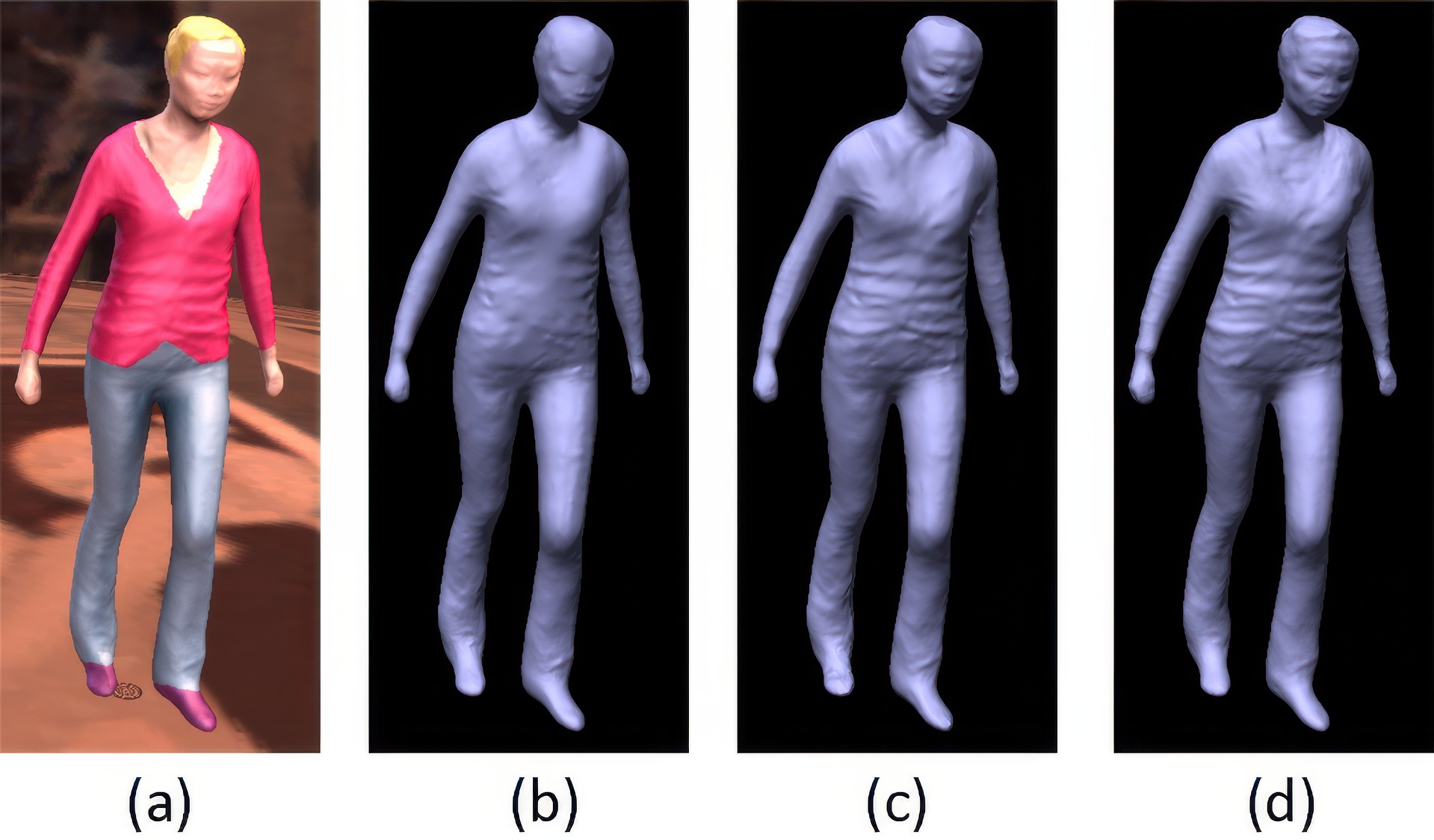

State-of-the-art marker-less performance capture algorithms reconstruct detailed human skeletal motion and space-time coherent surface geometry. Despite being a big improvement over marker-based motion capture methods, they are still rarely applied in practical VFX productions because they require ten or more cameras and a studio with controlled lighting or a green-screen background. If one were able to capture performances directly on a general set using only the primary stereo camera used for principal photography, many possibilities would open up in virtual production and previsualization, the creation of virtual actors, and video editing during post-production. We describe a new algorithm that works towards this goal. It tracks the skeletal motion and detailed surface geometry of one or more actors from footage recorded with a stereo rig that is allowed to move. It succeeds on general sets with uncontrolled background and uncontrolled illumination, and in scenes in which actors strike non-frontal poses. It is one of the first performance capture methods to exploit detailed BRDF information and scene illumination for accurate pose tracking and surface refinement in general scenes. It also relies on a new foreground segmentation approach that combines appearance, stereo, and pose tracking results to segment the actors from the background. Appearance, segmentation, and motion cues are combined in a new pose optimization framework that is robust under uncontrolled lighting, uncontrolled background, and very sparse camera views.
