“GestureDiffuCLIP: Gesture Diffusion Model With CLIP Latents” by Ao, Zhang and Liu

Title:

- GestureDiffuCLIP: Gesture Diffusion Model With CLIP Latents

Session/Category Title:

- Character Animation: Knowing What To Do With Your Hands

Abstract:

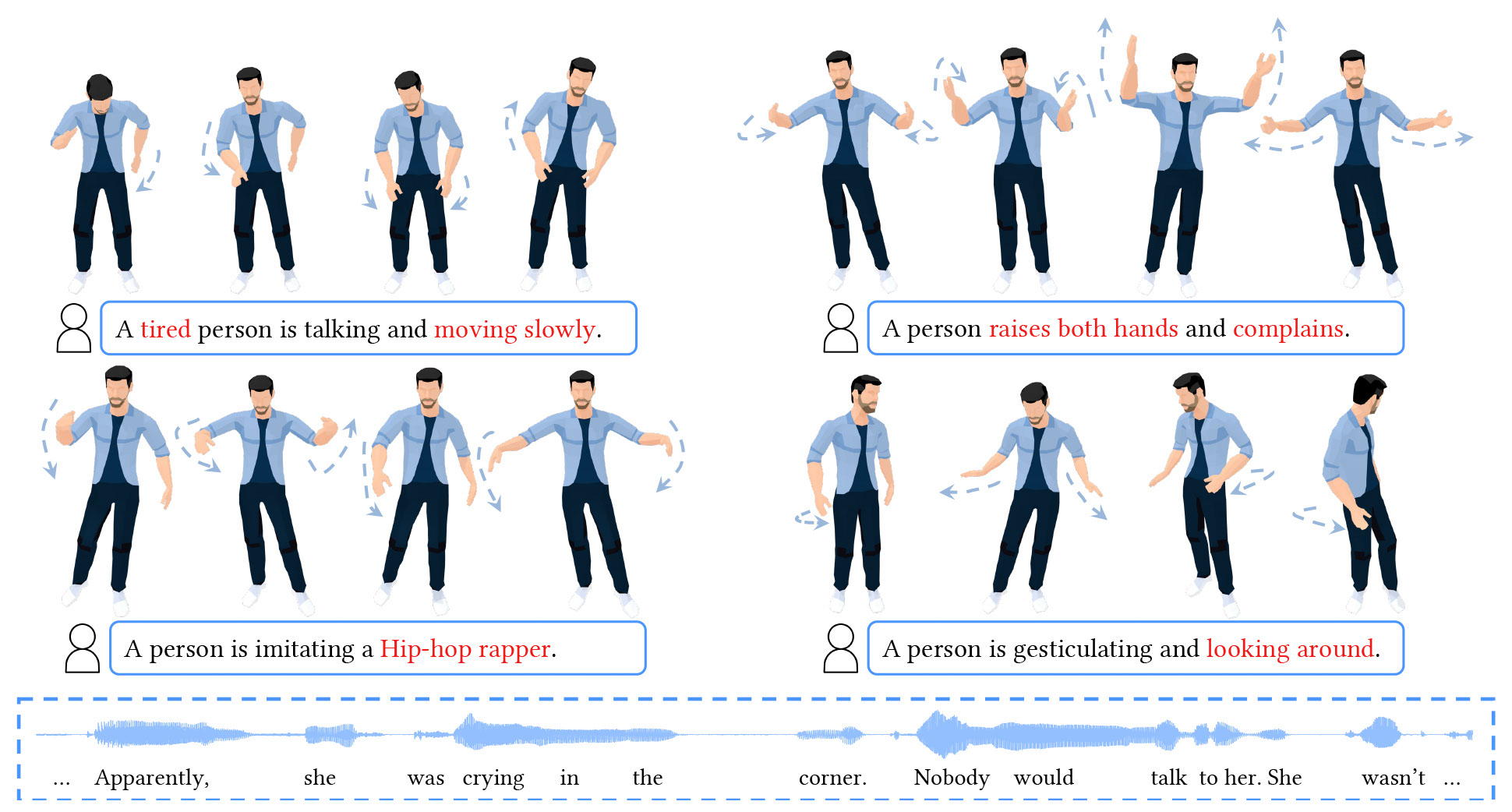

We introduce GestureDiffuCLIP, a CLIP-guided co-speech gesture synthesis system that uses arbitrary style prompts to create stylized gestures in harmony with the semantics and rhythm of speech. The system is highly adaptable: it accepts style prompts in the form of short texts, motion sequences, or video clips, and it provides body-part-specific style control.
