“Fast Facial Animation from Video” by Navarro, Kneubuehler, Verhulsdonck, Bois, Welch, et al. …

Conference:

Title:

- Fast Facial Animation from Video

Session/Category Title:

- Facial Animation

Presenter(s)/Author(s):

- Iñaki Navarro

- Dario Kneubuehler

- Tijmen Verhulsdonck

- Eloi du Bois

- William Welch

- Ian Sachs

- Kiran S. Bhat

- Vivek Verma

Interest Area:

- Production & Animation, Research / Education, AI / Machine Learning, and VR / AR

Entry Number:

- 25

Abstract:

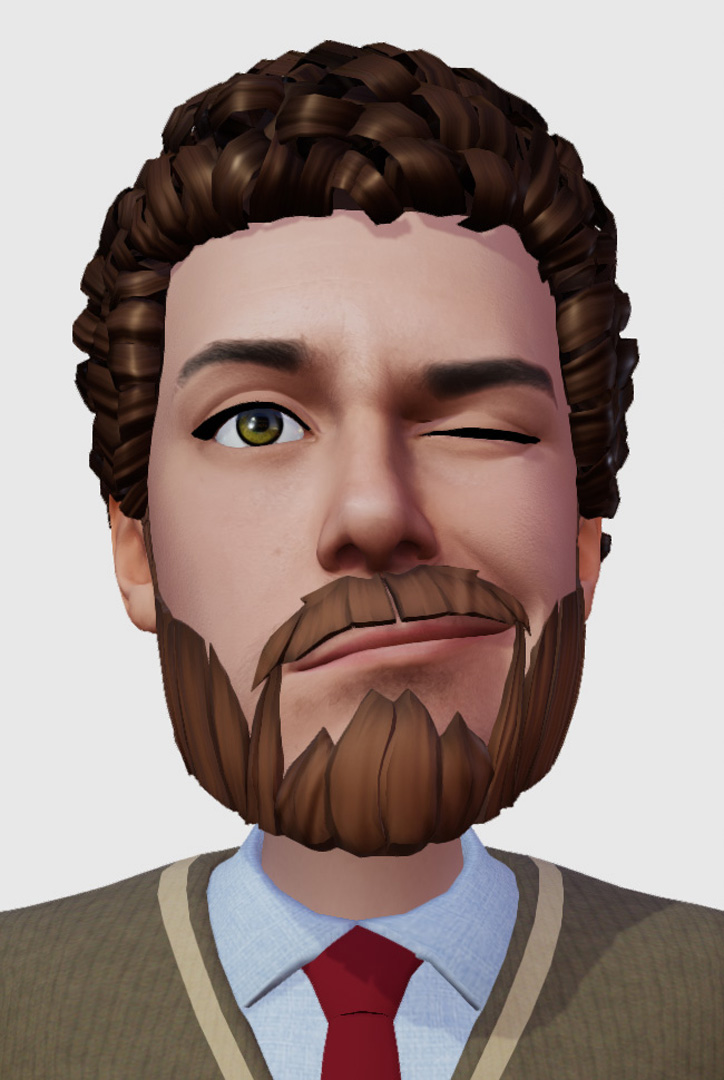

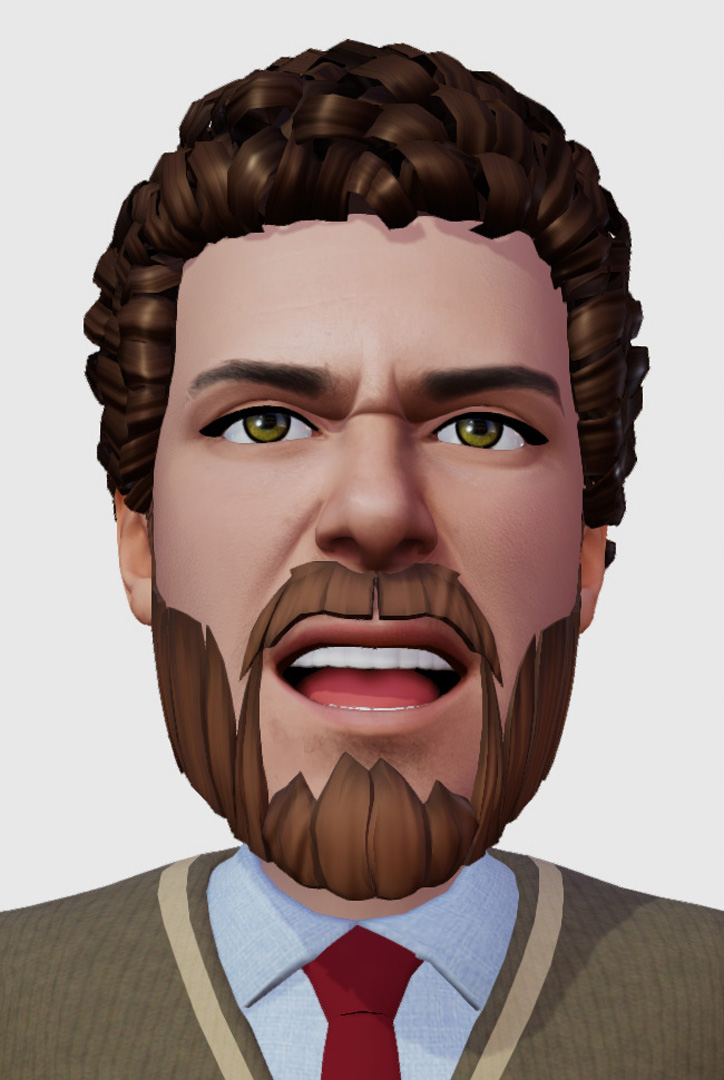

Real-time facial animation for virtual 3D characters has important applications such as AR/VR, interactive 3D entertainment, previsualization, and video conferencing. Yet despite important research breakthroughs in facial tracking and performance capture, there are very few commercial examples of real-time facial animation applications in the consumer market. Mass adoption requires real-time performance on commodity hardware and visually pleasing animation that is robust to real-world conditions, without requiring manual calibration. We present an end-to-end deep learning framework for regressing facial animation weights from video that addresses most of these challenges. Our formulation is fast (3.2 ms), utilizes images of real human faces along with millions of synthetic rendered frames to train the network on real-world scenarios, and produces jitter-free, visually pleasing animations.

References:

Apple. 2021. ARKit Developer Documentation. https://developer.apple.com/documentation/arkit/arfaceanchor

Daniel Cudeiro, Timo Bolkart, Cassidy Laidlaw, Anurag Ranjan, and Michael Black. 2019. Capture, Learning, and Synthesis of 3D Speaking Styles. In Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR). 10101–10111. http://voca.is.tue.mpg.de/

Ivan Grishchenko, Artsiom Ablavatski, Yury Kartynnik, Karthik Raveendran, and Matthias Grundmann. 2020. Attention Mesh: High-fidelity Face Mesh Prediction in Real-time. arXiv:2006.10962 [cs.CV]

Xiaojie Guo, Siyuan Li, Jiawan Zhang, Jiayi Ma, Lin Ma, Wei Liu, and Haibin Ling. 2019. PFLD: A Practical Facial Landmark Detector. CoRR abs/1902.10859 (2019). arXiv:1902.10859 http://arxiv.org/abs/1902.10859

Sina Honari, Pavlo Molchanov, Stephen Tyree, Pascal Vincent, Christopher Pal, and Jan Kautz. 2018. Improving Landmark Localization with Semi-Supervised Learning. arXiv:1709.01591 [cs.CV]

Additional Images:

- 2021 Talks: Navarro_Fast Facial Animation from Video
